
In this situation, do I need to reformat HDFS? (Any guidance from the experts would be appreciated, thanks!)

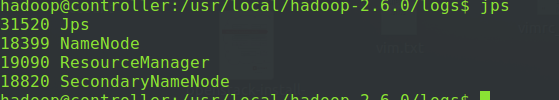

The namenode starts normally:

namenode

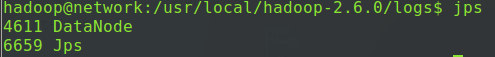

On the datanode, the NodeManager did not start successfully:

datanode

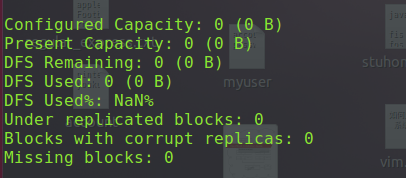

Because all the data blocks in the Hadoop cluster were lost, I ran hdfs fsck -delete and then hadoop dfsadmin -report. The output is shown below:

dfsadmin

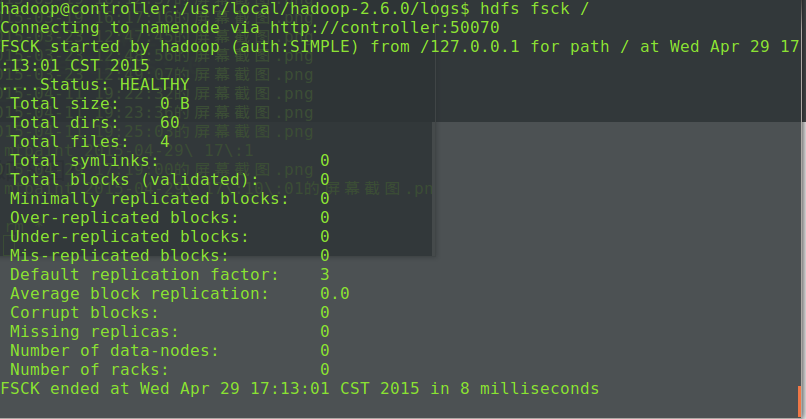

Running hdfs fsck / gives the following:

fsck

The log on the namenode is as follows (no errors):

2015-04-29 17:25:54,529 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Scanned 0 directive(s) and 0 block(s) in 0 millisecond(s).
2015-04-29 17:26:24,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Rescanning after 30000 milliseconds
2015-04-29 17:26:24,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Scanned 0 directive(s) and 0 block(s) in 0 millisecond(s).
2015-04-29 17:26:54,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Rescanning after 30000 milliseconds
2015-04-29 17:26:54,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Scanned 0 directive(s) and 0 block(s) in 1 millisecond(s).
2015-04-29 17:27:24,529 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Rescanning after 30000 milliseconds
2015-04-29 17:27:24,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Scanned 0 directive(s) and 0 block(s) in 0 millisecond(s).
2015-04-29 17:27:54,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Rescanning after 30000 milliseconds
2015-04-29 17:27:54,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Scanned 0 directive(s) and 0 block(s) in 0 millisecond(s).
2015-04-29 17:28:24,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Rescanning after 30000 milliseconds
2015-04-29 17:28:24,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Scanned 0 directive(s) and 0 block(s) in 1 millisecond(s).
2015-04-29 17:28:54,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Rescanning after 30000 milliseconds
2015-04-29 17:28:54,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Scanned 0 directive(s) and 0 block(s) in 0 millisecond(s).
2015-04-29 17:29:24,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Rescanning after 30000 milliseconds
2015-04-29 17:29:24,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Scanned 0 directive(s) and 0 block(s) in 1 millisecond(s).
2015-04-29 17:29:54,529 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Rescanning after 30000 milliseconds
2015-04-29 17:29:54,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Scanned 0 directive(s) and 0 block(s) in 0 millisecond(s).
2015-04-29 17:30:24,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Rescanning after 30000 milliseconds
2015-04-29 17:30:24,530 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Scanned 0 directive(s) and 0 block(s) in 0 millisecond(s).
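To double-check the "no errors" claim over the whole log file rather than one screenful, the levels can be tallied with a short script. This is only a sketch: it assumes one entry per line in the usual layout (date, time, level, class), and count_levels is a hypothetical helper name, not something Hadoop provides.

```python
import re
from collections import Counter

# Start of a log line: date, time with milliseconds, then the level.
# The pattern is an assumption based on the lines quoted in this post.
LINE_RE = re.compile(r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3} (\w+) ")


def count_levels(lines):
    """Return a Counter of log levels (INFO, WARN, ERROR, ...) seen in lines."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Two entries copied from the namenode log in this post.
sample = [
    "2015-04-29 17:25:54,529 INFO org.apache.hadoop.hdfs.server."
    "blockmanagement.CacheReplicationMonitor: Scanned 0 directive(s) "
    "and 0 block(s) in 0 millisecond(s).",
    "2015-04-29 17:26:24,530 INFO org.apache.hadoop.hdfs.server."
    "blockmanagement.CacheReplicationMonitor: Rescanning after 30000 "
    "milliseconds",
]
print(count_levels(sample))
```

Passing an open log file instead of the sample list works the same way, since iterating a file yields lines. A zero count for ERROR only says nothing was logged at that level; it does not by itself show the cluster is healthy.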

The log on the datanode is as follows (no errors):

2015-04-29 17:33:48,060 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: controller/192.168.1.186:9000. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)
2015-04-29 17:33:49,060 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: controller/192.168.1.186:9000. Already tried 1 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)
2015-04-29 17:33:50,061 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: controller/192.168.1.186:9000. Already tried 2 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)
2015-04-29 17:33:51,061 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: controller/192.168.1.186:9000. Already tried 3 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)
2015-04-29 17:33:52,062 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: controller/192.168.1.186:9000. Already tried 4 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)
2015-04-29 17:33:53,063 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: controller/192.168.1.186:9000. Already tried 5 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)
2015-04-29 17:33:54,063 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: controller/192.168.1.186:9000. Already tried 6 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)
2015-04-29 17:33:55,064 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: controller/192.168.1.186:9000. Already tried 7 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)
2015-04-29 17:33:56,064 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: controller/192.168.1.186:9000. Already tried 8 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)
2015-04-29 17:33:57,065 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: controller/192.168.1.186:9000. Already tried 9 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)
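These datanode lines are logged at INFO level, but each one reports another failed attempt to reach controller/192.168.1.186:9000. Before deciding whether to reformat, it may be worth checking whether that port is reachable from the datanode at all. A minimal sketch, assuming Python 3 is available on the datanode; can_connect is a hypothetical helper, not part of Hadoop, and the host and port are simply the ones that appear in the log.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the name and opens a TCP socket;
        # the with-block closes the socket again immediately.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False


# Example, run on the datanode host (name and port taken from the log):
#     print(can_connect("controller", 9000))
#     print(can_connect("192.168.1.186", 9000))
```

If the check by IP address succeeds while the check by host name fails, name resolution on the datanode would be a likely suspect. If both fail, possible causes include the namenode not listening on that address or a firewall in between. Either way, this only tests TCP reachability, not whether the HDFS metadata is intact.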
