Last edited by levycui on 2017-10-10 13:47

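Reading guide:

1. What is a tensor in TensorFlow?

2. How are tensors created in TensorFlow?

3. How do placeholders and variables differ?

4. How are variables created and initialized?

Previous post: TensorFlow ML Cookbook, Chapter 1, Sections 1 and 2: How TensorFlow Works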

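Declaring Tensors

Tensors are the primary data structure that TensorFlow uses to operate on the computational graph. We can declare these tensors as variables or feed them in as placeholders. First, we must know how to create tensors.

Getting ready

When we create a tensor and declare it to be a variable, TensorFlow creates several graph structures in our computational graph. It is also important to point out that just by creating a tensor, TensorFlow is not adding anything to the computational graph. TensorFlow does this only after a variable is created out of the tensor. See the next section on variables and placeholders for more information.

How to do it…

Here we will cover the main ways to create tensors in TensorFlow:

1. Fixed tensors: Create a zero-filled tensor. Use the following: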

[mw_shl_code=python,true]zero_tsr = tf.zeros([row_dim, col_dim]) [/mw_shl_code]

- Create a one-filled tensor. Use the following:

[mw_shl_code=python,true]ones_tsr = tf.ones([row_dim, col_dim]) [/mw_shl_code]

- Create a constant-filled tensor. Use the following:

[mw_shl_code=python,true]filled_tsr = tf.fill([row_dim, col_dim], 42) [/mw_shl_code]

- Create a tensor out of an existing constant. Use the following:

[mw_shl_code=python,true]constant_tsr = tf.constant([1,2,3]) [/mw_shl_code]

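Note that the tf.constant() function can be used to broadcast a value into an array, mimicking the behavior of tf.fill(), by writing tf.constant(42, [row_dim, col_dim]).

2. Tensors of similar shape:

We can also initialize variables based on the shape of other tensors, as follows: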

[mw_shl_code=python,true]zeros_similar = tf.zeros_like(constant_tsr)

ones_similar = tf.ones_like(constant_tsr)[/mw_shl_code]

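Note that because these tensors depend on prior tensors, we must initialize them in order. Attempting to initialize all the tensors at once will result in an error. See the There's more… part at the end of the next section on variables and placeholders.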

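3. Sequence tensors:

- TensorFlow allows us to specify tensors that contain defined intervals. The following functions behave very similarly to Python's range() and NumPy's linspace(). See the following function:

[mw_shl_code=python,true]linear_tsr = tf.linspace(start=0.0, stop=1.0, num=3)[/mw_shl_code]

- The resulting tensor is the sequence [0.0, 0.5, 1.0]. Note that this function includes the specified stop value. See the following function:

[mw_shl_code=python,true]integer_seq_tsr = tf.range(start=6, limit=15, delta=3)[/mw_shl_code]

- The result is the sequence [6, 9, 12]. Note that this function does not include the limit value.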
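The two endpoint conventions can be checked without TensorFlow. The sketch below reimplements them in plain Python, on the assumption that only the stop-included and limit-excluded behavior matters here. The helper names linspace and arange are hypothetical stand-ins for illustration, not TensorFlow functions.

```python
def linspace(start, stop, num):
    """Return `num` evenly spaced floats from start to stop, stop included."""
    if num == 1:
        return [float(start)]
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


def arange(start, limit, delta):
    """Return start, start + delta, ... for values strictly below limit."""
    return list(range(start, limit, delta))


linear_seq = linspace(0.0, 1.0, 3)   # the stop value 1.0 is the last element
integer_seq = arange(6, 15, 3)       # the limit value 15 is never produced
print(linear_seq)
print(integer_seq)
```

Raising the limit by one, as in arange(6, 16, 3), is what it takes to get 15 into the result.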

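4. Random tensors:

- The following generates random numbers from a uniform distribution:

[mw_shl_code=python,true]randunif_tsr = tf.random_uniform([row_dim, col_dim], minval=0, maxval=1)[/mw_shl_code]

- Note that this uniform distribution draws from the half-open interval that includes minval but excludes maxval (minval <= x < maxval).

- To get a tensor of random draws from a normal distribution, use the following:

[mw_shl_code=python,true]randnorm_tsr = tf.random_normal([row_dim, col_dim], mean=0.0, stddev=1.0)[/mw_shl_code]

- There are also times when we want normal random values that are guaranteed to fall within certain bounds. The truncated_normal() function always picks normal values within two standard deviations of the specified mean. See the following:

[mw_shl_code=python,true]truncnorm_tsr = tf.truncated_normal([row_dim, col_dim], mean=0.0, stddev=1.0)[/mw_shl_code]

- We might also be interested in randomizing the entries of arrays. Two functions help with this: random_shuffle() and random_crop(). See the following:

[mw_shl_code=python,true]shuffled_output = tf.random_shuffle(input_tensor)
cropped_output = tf.random_crop(input_tensor, crop_size)[/mw_shl_code]

- Later in this book, we will be interested in randomly cropping an image of size (height, width, 3), where there are three color channels. To keep a dimension fixed in cropped_output, you must give it the maximum size in that dimension:

[mw_shl_code=python,true]cropped_image = tf.random_crop(my_image, [height // 2, width // 2, 3])[/mw_shl_code]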
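To make the last two ideas concrete, here is a rough plain-Python sketch of what "within two standard deviations" and "give the maximum size in a fixed dimension" mean. It assumes truncation is done by redrawing out-of-range samples and models an image as a nested list. The helpers truncated_normal and random_crop below are hypothetical illustrations that only borrow the TensorFlow names; they are not the library's implementation.

```python
import random


def truncated_normal(mean=0.0, stddev=1.0, rng=random):
    """Draw one normal sample, redrawing until it lies within 2 stddev of mean."""
    while True:
        x = rng.gauss(mean, stddev)
        if abs(x - mean) <= 2.0 * stddev:
            return x


def random_crop(image, crop_height, crop_width, rng=random):
    """Cut a random crop_height x crop_width window out of a nested-list image.

    The channel axis is left at full size, which mirrors passing a crop
    size of [crop_height, crop_width, 3] for a three-channel image.
    """
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - crop_height)   # randint includes both ends
    left = rng.randint(0, width - crop_width)
    return [row[left:left + crop_width] for row in image[top:top + crop_height]]


rng = random.Random(0)  # seeded so repeated runs give the same draws
samples = [truncated_normal(0.0, 1.0, rng) for _ in range(1000)]
print(all(abs(s) <= 2.0 for s in samples))

height, width = 4, 6
my_image = [[[r, c, 0] for c in range(width)] for r in range(height)]
cropped = random_crop(my_image, height // 2, width // 2, rng)
print(len(cropped), len(cropped[0]), len(cropped[0][0]))
```

Integer division (//) is used for the crop size because a shape has to be made of whole numbers.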

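How it works…

Once we have decided how to create the tensors, we can also create the corresponding variables by wrapping the tensor in the Variable() function, as follows. More on this in the next section: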

[mw_shl_code=python,true]my_var = tf.Variable(tf.zeros([row_dim,col_dim]))[/mw_shl_code]

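There's more…

We are not limited to the built-in functions. We can convert any NumPy array, Python list, or constant to a tensor using the convert_to_tensor() function. Note that this function also accepts tensors as input, which is useful when we want to generalize a computation inside a function.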

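Using Placeholders and Variables

Placeholders and variables are key tools for using computational graphs in TensorFlow. We must understand the difference between them and when each is best used.

Getting ready

One of the most important distinctions to make about the data is whether it is a placeholder or a variable. Variables are the parameters of the algorithm, and TensorFlow keeps track of how to change them to optimize the algorithm. Placeholders are objects that allow you to feed in data of a specific type and shape, and they depend on the results of the computational graph, such as the expected outcome of a computation.

How to do it…

The main way to create a variable is by using the Variable() function, which takes a tensor as input and outputs a variable. This is only the declaration; we still need to initialize the variable. Initializing is what puts the variable, with its corresponding methods, on the computational graph. Here is an example of creating and initializing a variable: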

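[mw_shl_code=python,true]import tensorflow as tf

# Declare a variable whose initial value is a 2x3 tensor of zeros
my_var = tf.Variable(tf.zeros([2,3]))
sess = tf.Session()
# Create the op that initializes all variables, then run it
initialize_op = tf.global_variables_initializer()
sess.run(initialize_op)[/mw_shl_code]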

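To see what the computational graph looks like after creating and initializing a variable, see the next part of this recipe.

Placeholders simply hold a position for data to be fed into the graph. Placeholders get data from the feed_dict argument in the session. To put a placeholder in the graph, we must perform at least one operation on the placeholder. We initialize the graph, declare x to be a placeholder, and define y as the identity operation on x, which just returns x. We then create data to feed into the x placeholder and run the identity operation. It is worth noting that TensorFlow will not return a self-referenced placeholder in the feed dictionary. The code is shown here, and the resulting graph is shown in the next section: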

[mw_shl_code=python,true]import numpy as np
import tensorflow as tf

sess = tf.Session()
x = tf.placeholder(tf.float32, shape=[2,2])
y = tf.identity(x)
x_vals = np.random.rand(2,2)
sess.run(y, feed_dict={x: x_vals})
# Note that sess.run(x, feed_dict={x: x_vals}) will result in a self-referencing error.[/mw_shl_code]
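The idea of a placeholder as an empty slot that is filled only at run time can be mimicked in a few lines of plain Python. This is a toy model under loud assumptions: the Placeholder and Identity classes and the run function are hypothetical names invented for this sketch and say nothing about how TensorFlow is built internally.

```python
class Placeholder:
    """A named slot with no value of its own; data arrives at run time."""

    def __init__(self, name):
        self.name = name


class Identity:
    """An operation node that returns its input unchanged."""

    def __init__(self, source):
        self.source = source


def run(node, feed_dict):
    """Evaluate `node`, looking up placeholder values in `feed_dict`."""
    if isinstance(node, Placeholder):
        if node not in feed_dict:
            raise KeyError("no value fed for placeholder " + node.name)
        return feed_dict[node]
    if isinstance(node, Identity):
        return run(node.source, feed_dict)
    raise TypeError("unknown node type")


x = Placeholder("x")                  # declared, but holds no data yet
y = Identity(x)                       # an operation defined on the placeholder
x_vals = [[0.1, 0.2], [0.3, 0.4]]
result = run(y, feed_dict={x: x_vals})
print(result)
```

Calling run(y, feed_dict={}) raises a KeyError in this toy, which is the analogue of forgetting to feed a placeholder.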

How it works…

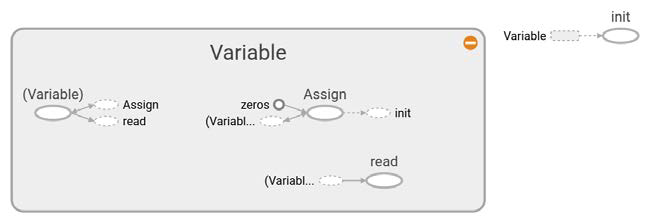

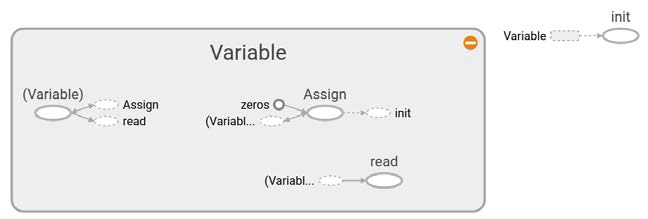

The computational graph for initializing a variable as a tensor of zeros is shown in the following figure:

Figure 1: Variable

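In Figure 1, we can see what the computational graph looks like in detail with just one variable, initialized to all zeros. The grey shaded region is a very detailed view of the operations and constants involved. The main computational graph, with less detail, is the smaller graph outside the grey region in the upper right corner. For more details on creating and visualizing graphs, see Chapter 10, Taking TensorFlow to Production, section 1.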

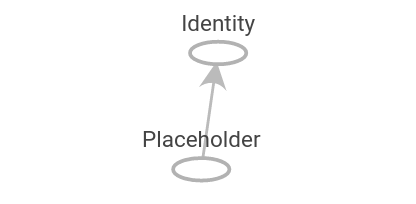

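Similarly, the computational graph for feeding a NumPy array into a placeholder can be seen in the following figure:

Figure 2: The computational graph of an initialized placeholder. The grey shaded region is a very detailed view of the operations and constants involved. The main computational graph, with less detail, is the smaller graph outside the grey region in the upper right.

There's more…

While the computational graph runs, we must tell TensorFlow when to initialize the variables we have created. Each variable has its own initializer method, but the most common approach is the helper function global_variables_initializer(). This function creates an operation in the graph that initializes all the variables we have created, as follows: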

[mw_shl_code=python,true]initializer_op = tf.global_variables_initializer()[/mw_shl_code]

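But if we want to initialize a variable based on the result of initializing another variable, we have to initialize the variables in the order we want, as follows: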

[mw_shl_code=python,true]sess = tf.Session()
first_var = tf.Variable(tf.zeros([2,3]))
sess.run(first_var.initializer)
# second_var depends on first_var, so first_var must be initialized first
second_var = tf.Variable(tf.zeros_like(first_var))
sess.run(second_var.initializer)[/mw_shl_code]
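Why the order matters can be shown with a toy model in plain Python. The sketch assumes a variable is just an initializer function plus a value that does not exist until the initializer runs. ToyVariable, zeros, and zeros_like are hypothetical helpers written for this illustration and are not TensorFlow APIs.

```python
class ToyVariable:
    """Holds an initializer function and, once it has run, a value."""

    def __init__(self, init_fn):
        self._init_fn = init_fn
        self._value = None
        self.initialized = False

    def initializer(self):
        """Compute and store the initial value."""
        self._value = self._init_fn()
        self.initialized = True

    def value(self):
        if not self.initialized:
            raise RuntimeError("attempting to use an uninitialized variable")
        return self._value


def zeros(rows, cols):
    return [[0.0] * cols for _ in range(rows)]


def zeros_like(grid):
    return [[0.0 for _ in row] for row in grid]


first_var = ToyVariable(lambda: zeros(2, 3))
# second_var's initial value is computed from first_var's current value
second_var = ToyVariable(lambda: zeros_like(first_var.value()))

try:
    second_var.initializer()      # wrong order: first_var has no value yet
except RuntimeError as err:
    print("failed:", err)

first_var.initializer()           # right order: the dependency goes first
second_var.initializer()
print(second_var.value())
```

In this toy, initializing the dependent variable first fails because its initializer reads a value that has not been computed yet. That is the situation the ordered sess.run() calls avoid.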

Original text:

Declaring Tensors

Tensors are the primary data structure that TensorFlow uses to operate on the computational graph. We can declare these tensors as variables or feed them in as placeholders. First, we must know how to create tensors.

Getting ready

When we create a tensor and declare it to be a variable, TensorFlow creates several graph structures in our computational graph. It is also important to point out that just by creating a tensor, TensorFlow is not adding anything to the computational graph. TensorFlow does this only after a variable is created out of the tensor. See the next section on variables and placeholders for more information.

How to do it…

Here we will cover the main ways to create tensors in TensorFlow:

1. Fixed tensors: Create a zero-filled tensor. Use the following:

zero_tsr = tf.zeros([row_dim, col_dim])

. Create a one filled tensor. Use the following:

ones_tsr = tf.ones([row_dim, col_dim])

. Create a constant filled tensor. Use the following:

filled_tsr = tf.fill([row_dim, col_dim], 42)

. Create a tensor out of an existing constant. Use the following:

constant_tsr = tf.constant([1,2,3])

Note that the tf.constant() function can be used to broadcast a value into an array, mimicking the behavior of tf.fill() by writing tf.constant(42, [row_dim, col_dim])

2. Tensors of similar shape:

We can also initialize variables based on the shape of other tensors, as follows:

zeros_similar = tf.zeros_like(constant_tsr)

ones_similar = tf.ones_like(constant_tsr)

Note that because these tensors depend on prior tensors, we must initialize them in order. Attempting to initialize all the tensors at once will result in an error. See the There's more… part at the end of the next section on variables and placeholders.

3. Sequence tensors:

- TensorFlow allows us to specify tensors that contain defined intervals.

The following functions behave very similarly to Python's range() and NumPy's linspace(). See the following function:

linear_tsr = tf.linspace(start=0.0, stop=1.0, num=3)

- The resulting tensor is the sequence [0.0, 0.5, 1.0]. Note that this function includes the specified stop value. See the following function:

integer_seq_tsr = tf.range(start=6, limit=15, delta=3)

- The result is the sequence [6, 9, 12]. Note that this function does not include the limit value.

4. Random tensors:

- The following generates random numbers from a uniform distribution:

randunif_tsr = tf.random_uniform([row_dim, col_dim], minval=0, maxval=1)

- Note that this random uniform distribution draws from the interval that includes the minval but not the maxval (minval <= x < maxval).

- To get a tensor of random draws from a normal distribution, use the following:

randnorm_tsr = tf.random_normal([row_dim, col_dim],mean=0.0, stddev=1.0)

- There are also times when we want normal random values that are guaranteed to fall within certain bounds. The truncated_normal() function always picks normal values within two standard deviations of the specified mean. See the following:

truncnorm_tsr = tf.truncated_normal([row_dim, col_dim], mean=0.0, stddev=1.0)

- We might also be interested in randomizing the entries of arrays. Two functions help with this: random_shuffle() and random_crop(). See the following:

shuffled_output = tf.random_shuffle(input_tensor)

cropped_output = tf.random_crop(input_tensor, crop_size)

- Later in this book, we will be interested in randomly cropping an image of size (height, width, 3), where there are three color channels. To keep a dimension fixed in cropped_output, you must give it the maximum size in that dimension:

cropped_image = tf.random_crop(my_image, [height // 2, width // 2, 3])

How it works…

Once we have decided on how to create the tensors, then we may also create the corresponding variables by wrapping the tensor in the Variable() function, as follows. More on this in the next section:

my_var = tf.Variable(tf.zeros([row_dim, col_dim]))

There's more…

We are not limited to the built-in functions. We can convert any NumPy array, Python list, or constant to a tensor using the convert_to_tensor() function. Note that this function also accepts tensors as input, which is useful when we want to generalize a computation inside a function.

Using Placeholders and Variables

Placeholders and variables are key tools for using computational graphs in TensorFlow. We must understand the difference and when to best use them to our advantage.

Getting ready

One of the most important distinctions to make with the data is whether it is a placeholder or a variable. Variables are the parameters of the algorithm and TensorFlow keeps track of how to change these to optimize the algorithm. Placeholders are objects that allow you to feed in data of a specific type and shape and depend on the results of the computational graph, such as the expected outcome of a computation.

How to do it…

The main way to create a variable is by using the Variable() function, which takes a tensor as an input and outputs a variable. This is the declaration and we still need to initialize the variable. Initializing is what puts the variable with the corresponding methods on the computational graph. Here is an example of creating and initializing a variable:

my_var = tf.Variable(tf.zeros([2,3]))

sess = tf.Session()

initialize_op = tf.global_variables_initializer()

sess.run(initialize_op)

To see what the computational graph looks like after creating and initializing a variable, see the next part in this recipe.

Placeholders are just holding the position for data to be fed into the graph. Placeholders get data from a feed_dict argument in the session. To put a placeholder in the graph, we must perform at least one operation on the placeholder. We initialize the graph, declare x to be a placeholder, and define y as the identity operation on x, which just returns x. We then create data to feed into the x placeholder and run the identity operation. It is worth noting that TensorFlow will not return a self-referenced placeholder in the feed dictionary. The code is shown here and the resulting graph is shown in the next section:

sess = tf.Session()

x = tf.placeholder(tf.float32, shape=[2,2])

y = tf.identity(x)

x_vals = np.random.rand(2,2)

sess.run(y, feed_dict={x: x_vals})

# Note that sess.run(x, feed_dict={x: x_vals}) will result in a self-referencing error.

How it works…

The computational graph of initializing a variable as a tensor of zeros is shown in the following figure:

Figure 1: Variable

In Figure 1, we can see what the computational graph looks like in detail with just one variable, initialized to all zeros. The grey shaded region is a very detailed view of the operations and constants involved. The main computational graph with less detail is the smaller graph outside of the grey region in the upper right corner. For more details on creating and visualizing graphs, see Chapter 10, Taking TensorFlow to Production, section 1.

Similarly, the computational graph of feeding a numpy array into a placeholder can be seen in the following figure:

Figure 2: Here is the computational graph of a placeholder initialized. The grey shaded region is a very detailed view of the operations and constants involved. The main computational graph with less detail is the smaller graph outside of the grey region in the upper right.

There's more…

While the computational graph runs, we must tell TensorFlow when to initialize the variables we have created. Each variable has its own initializer method, but the most common approach is the helper function global_variables_initializer(). This function creates an operation in the graph that initializes all the variables we have created, as follows:

initializer_op = tf.global_variables_initializer()

But if we want to initialize a variable based on the results of initializing another variable, we have to initialize variables in the order we want, as follows:

sess = tf.Session()

first_var = tf.Variable(tf.zeros([2,3]))

sess.run(first_var.initializer)

# second_var depends on first_var, so first_var must be initialized first

second_var = tf.Variable(tf.zeros_like(first_var))

sess.run(second_var.initializer)
