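Guiding questions:

1. Which activation functions does TensorFlow provide?

2. How do you run an activation function?

3. What data sources are available for use with TensorFlow?

4. How do you obtain and use these data sources?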


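Implementing Activation Functions

Getting ready

When we start to use neural networks, we will use activation functions regularly, because activation functions are a mandatory part of any neural network. An activation function is applied to the result of a layer's weight-and-bias computation. In TensorFlow, activation functions are non-linear operations that act on tensors; they run in the same way as the mathematical operations covered earlier. Activation functions serve several purposes, but the main one is to introduce a non-linearity into the graph; some of them also bound (normalize) their outputs to a fixed range. Start a TensorFlow graph with the following commands:

[mw_shl_code=python,true]import tensorflow as tf

# TensorFlow 1.x API. Under TensorFlow 2.x, call
# tf.compat.v1.disable_eager_execution() and use tf.compat.v1.Session() instead.
sess = tf.Session()[/mw_shl_code]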

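How to do it…

The activation functions live in the neural network (nn) library in TensorFlow. Besides using the built-in activation functions, we can also design our own using TensorFlow operations. We can import the predefined activation functions (from tensorflow import nn) or be explicit and write .nn in our function calls. Here, we choose to be explicit with each function call:

1. The rectified linear unit, known as ReLU, is the most common and basic way to introduce a non-linearity into neural networks. This function is just max(0, x). It is continuous but not smooth (it has a kink at 0). In TensorFlow:

[mw_shl_code=python,true]print(sess.run(tf.nn.relu([-3., 3., 10.])))
# [ 0. 3. 10.][/mw_shl_code]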

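2. There will be times when we wish to cap the linearly increasing part of the preceding ReLU activation function. We can do this by nesting the max(0, x) function inside a min() function. TensorFlow's implementation is called the ReLU6 function, defined as min(max(0, x), 6). It is a version of the hard-sigmoid function: it is faster to compute than the smooth sigmoid and does not suffer from vanishing (infinitesimally near zero) or exploding values. This will come in handy when we discuss deeper neural networks in Chapter 8, Convolutional Neural Networks, and Chapter 9, Recurrent Neural Networks. In TensorFlow:

[mw_shl_code=python,true]print(sess.run(tf.nn.relu6([-3., 3., 10.])))
# [ 0. 3. 6.][/mw_shl_code]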
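To cross-check the two outputs above without a TensorFlow session, here is a minimal plain-Python sketch of both formulas, max(0, x) and min(max(0, x), 6), applied element-wise. The helper names `relu` and `relu6` are our own, not TensorFlow functions.

```python
def relu(xs):
    """Element-wise max(0, x) (illustrative helper, not tf.nn.relu)."""
    return [max(0.0, x) for x in xs]


def relu6(xs):
    """Element-wise min(max(0, x), 6): a ReLU capped at 6 (illustrative helper)."""
    return [min(max(0.0, x), 6.0) for x in xs]


inputs = [-3.0, 3.0, 10.0]
print(relu(inputs))   # [0.0, 3.0, 10.0]
print(relu6(inputs))  # [0.0, 3.0, 6.0]
```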

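3. The sigmoid function is the most common continuous and smooth activation function. It is also called the logistic function and has the form 1/(1 + exp(-x)). The sigmoid is not used very often, because it saturates: for inputs of large magnitude its derivative is close to zero, which tends to zero out the backpropagation terms during training. In TensorFlow:

[mw_shl_code=python,true]print(sess.run(tf.nn.sigmoid([-1., 0., 1.])))
# [ 0.26894143 0.5 0.7310586 ][/mw_shl_code]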
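The vanishing backpropagation terms come from the sigmoid's derivative, sigmoid(x) * (1 - sigmoid(x)), which peaks at 0.25 when x = 0 and shrinks toward zero as |x| grows. A minimal plain-Python sketch (the function names are illustrative):

```python
import math


def sigmoid(x):
    """Logistic function 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of the sigmoid: sigmoid(x) * (1 - sigmoid(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


for x in [0.0, 2.0, 5.0, 10.0]:
    # The gradient is largest at 0 and decays quickly for large |x|
    print(x, sigmoid(x), sigmoid_grad(x))
```

With several stacked sigmoid layers, these factors (each at most 0.25) are multiplied together by the chain rule during backpropagation, which is why the gradient can shrink so quickly.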

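We should be aware that some activation functions, such as the sigmoid, are not zero-centered: all of the sigmoid's outputs are positive. This will require us to zero-mean the data before using it in most computational graph algorithms.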
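Zero-meaning the data usually means subtracting each feature's (column's) mean. A minimal plain-Python sketch, assuming the data is a list of equal-length numeric rows (the helper name `zero_mean_columns` is hypothetical):

```python
def zero_mean_columns(rows):
    """Subtract each column's mean so every feature is centered at zero (hypothetical helper)."""
    n_rows = len(rows)
    n_cols = len(rows[0])
    means = [sum(row[j] for row in rows) / n_rows for j in range(n_cols)]
    return [[row[j] - means[j] for j in range(n_cols)] for row in rows]


data = [[1.0, 10.0],
        [2.0, 20.0],
        [3.0, 30.0]]
centered = zero_mean_columns(data)
print(centered)  # [[-1.0, -10.0], [0.0, 0.0], [1.0, 10.0]]
```

In practice the means are computed on the training data only and then reused to center the test data.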

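4. Another smooth activation function is the hyperbolic tangent. The hyperbolic tangent is very similar to the sigmoid, except that instead of ranging between 0 and 1, it ranges between -1 and 1. The function is the ratio of the hyperbolic sine to the hyperbolic cosine; another way to write it is (exp(x) - exp(-x))/(exp(x) + exp(-x)). In TensorFlow:

[mw_shl_code=python,true]print(sess.run(tf.nn.tanh([-1., 0., 1.])))
# [-0.76159418 0. 0.76159418 ][/mw_shl_code]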
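Both forms of tanh given above, and its close relationship to the sigmoid, tanh(x) = 2 * sigmoid(2x) - 1, can be checked numerically with Python's math module. This is only a sanity check of the identities, not how TensorFlow computes tanh internally:

```python
import math


def tanh_from_exp(x):
    """tanh written as (exp(x) - exp(-x)) / (exp(x) + exp(-x))."""
    return (math.exp(x) - math.exp(-x)) / (math.exp(x) + math.exp(-x))


def tanh_from_sigmoid(x):
    """tanh expressed through the sigmoid: 2 * sigmoid(2x) - 1."""
    return 2.0 / (1.0 + math.exp(-2.0 * x)) - 1.0


for x in [-1.0, 0.0, 1.0]:
    # All four values agree up to floating-point rounding
    print(x, math.tanh(x), math.sinh(x) / math.cosh(x),
          tanh_from_exp(x), tanh_from_sigmoid(x))
```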

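5. The softsign function is also used as an activation function. Its form is x/(abs(x) + 1). The softsign function is meant to be a continuous approximation to the sign function. In TensorFlow:

[mw_shl_code=python,true]print(sess.run(tf.nn.softsign([-1., 0., 1.])))
# [-0.5 0. 0.5][/mw_shl_code]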
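A plain-Python version of x/(abs(x) + 1) reproduces the output above for inputs -1, 0, and 1, and shows the approximation to the sign function: the further x is from zero, the closer softsign(x) gets to ±1. The approach is slow, though: for positive x the gap to 1 is exactly 1/(x + 1), whereas tanh closes the gap exponentially. The function name is illustrative:

```python
def softsign(x):
    """Softsign activation: x / (|x| + 1), a smooth stand-in for sign(x)."""
    return x / (abs(x) + 1.0)


print([softsign(x) for x in [-1.0, 0.0, 1.0]])  # [-0.5, 0.0, 0.5]

# The gap to sign(x) = 1 shrinks like 1 / (x + 1) for positive x
for x in [1.0, 10.0, 100.0]:
    print(x, softsign(x), 1.0 - softsign(x))
```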

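6. Another function, the softplus, is a smooth version of the ReLU function. Its form is log(exp(x) + 1). In TensorFlow:

[mw_shl_code=python,true]print(sess.run(tf.nn.softplus([-1., 0., 1.])))
# [ 0.31326166 0.69314718 1.31326163][/mw_shl_code]

The softplus goes to infinity as the input increases, whereas the softsign goes to 1. As the input gets smaller, however, the softplus approaches zero and the softsign goes to -1.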
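Evaluated literally, log(exp(x) + 1) overflows for large x (Python's math.exp raises OverflowError above roughly x = 709). A common, numerically stable rewrite is max(x, 0) + log1p(exp(-|x|)). The sketch below compares it with the naive form and also illustrates the asymptotes just described; it is a generic technique, not necessarily how TensorFlow implements tf.nn.softplus:

```python
import math


def softplus_naive(x):
    """Direct translation of log(exp(x) + 1); overflows for large x."""
    return math.log(math.exp(x) + 1.0)


def softplus_stable(x):
    """Same function rewritten as max(x, 0) + log1p(exp(-|x|))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


for x in [-1.0, 0.0, 1.0]:
    print(x, softplus_naive(x), softplus_stable(x))

# For large inputs softplus grows like x itself (no upper bound),
# while softsign x / (|x| + 1) levels off just below 1
print(softplus_stable(1000.0))  # 1000.0
print(1000.0 / (1000.0 + 1.0))
```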

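7. The exponential linear unit (ELU) is very similar to the softplus function, except that its bottom asymptote is -1 instead of 0. Its form is exp(x) - 1 if x < 0, else x. In TensorFlow:

[mw_shl_code=python,true]print(sess.run(tf.nn.elu([-1., 0., 1.])))
# [-0.63212055 0. 1. ][/mw_shl_code]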
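A plain-Python sketch of the ELU, which is exp(x) - 1 for x < 0 and x otherwise. The `alpha` scale parameter is an assumption taken from the general ELU definition (tf.nn.elu itself takes no such argument); with alpha = 1 it matches the outputs above, and the function approaches -alpha as x goes to minus infinity:

```python
import math


def elu(x, alpha=1.0):
    """ELU: x for x >= 0, alpha * (exp(x) - 1) for x < 0 (alpha is an assumed extra parameter)."""
    return x if x >= 0 else alpha * (math.exp(x) - 1.0)


print([elu(x) for x in [-1.0, 0.0, 1.0]])  # approximately [-0.632, 0.0, 1.0]
print(elu(-20.0))                           # very close to the -1 asymptote
```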

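How it works…

These activation functions are how we will introduce non-linearities into neural networks and other computational graphs from here on. It is important to note where in our network we use activation functions. If the final operation of the graph is an activation function with a range between 0 and 1 (such as the sigmoid), then the computational graph can only output values between 0 and 1.

If the activation functions are inside the network, hidden between nodes, then we want to be aware of the effect that their range can have on our tensors as we pass them through. If our tensors were scaled to have a mean of zero, we will want to use an activation function that preserves as much variance as possible around zero. This implies choosing an activation function such as the hyperbolic tangent (tanh) or softsign. If the tensors are all scaled to be positive, then we would ideally choose an activation function that preserves variance in the positive domain.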
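To make the point about preserving variance around zero concrete, the sketch below feeds a small zero-mean input through three activations written in plain Python. The sigmoid shifts the mean to 0.5 and ReLU discards the negative half, while tanh keeps the output centered at zero. The inputs are arbitrary illustrative values:

```python
import math


def mean(values):
    return sum(values) / len(values)


inputs = [-2.0, -1.0, 0.0, 1.0, 2.0]  # zero-mean toy input (illustrative)

outputs = {
    "sigmoid": [1.0 / (1.0 + math.exp(-x)) for x in inputs],
    "tanh": [math.tanh(x) for x in inputs],
    "relu": [max(0.0, x) for x in inputs],
}

for name, values in outputs.items():
    print(f"{name:8s} mean={mean(values):.3f} min={min(values):.3f} max={max(values):.3f}")
```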

更多

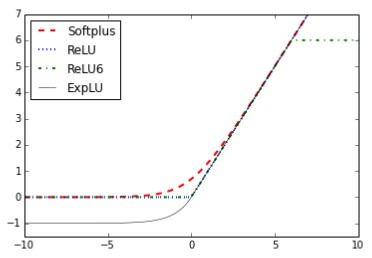

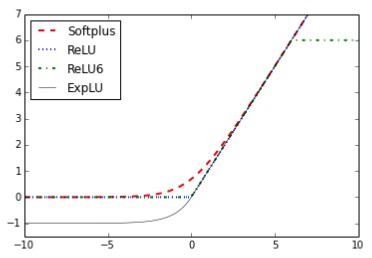

这里有两个图表来说明不同的激活功能。 下图显示了以下函数ReLU,ReLU6,softplus,指数LU,S形,softsign和双曲正切:

图3:softplus,ReLU,ReLU6和指数LU的激活功能

在图3中,我们可以看到四个激活函数softplus,ReLU,ReLU6和指数LU。 这些函数向左变为零,然后线性增加到零的右侧,除了ReLU6,其最大值为6:

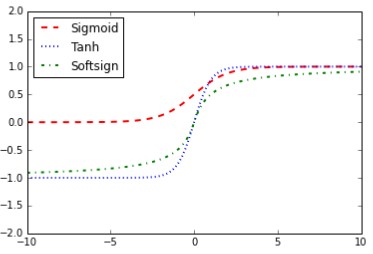

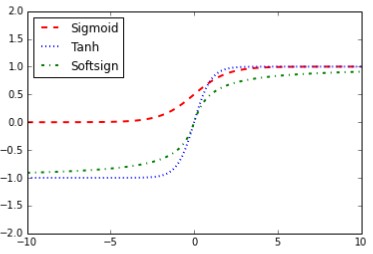

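Figure 4: Sigmoid, hyperbolic tangent (tanh), and softsign activation functions

In Figure 4, we have the activation functions sigmoid, hyperbolic tangent (tanh), and softsign. These activation functions are all smooth and have an S shape. Note that each of these functions has two horizontal asymptotes.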
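The curves in Figures 3 and 4 can be approximated by evaluating each function on a grid of inputs. The plain-Python sketch below builds those curves (ready to hand to any plotting library) and checks the properties described for the two figures: ReLU6 tops out at 6, ELU flattens toward -1, and the S-shaped functions stay between their two horizontal asymptotes. The grid from -10 to 10 is an arbitrary choice:

```python
import math

# Element-wise definitions of the seven activation functions in Figures 3 and 4
ACTIVATIONS = {
    "relu": lambda x: max(0.0, x),
    "relu6": lambda x: min(max(0.0, x), 6.0),
    "softplus": lambda x: max(x, 0.0) + math.log1p(math.exp(-abs(x))),
    "elu": lambda x: x if x >= 0 else math.exp(x) - 1.0,
    "sigmoid": lambda x: 1.0 / (1.0 + math.exp(-x)),
    "tanh": math.tanh,
    "softsign": lambda x: x / (abs(x) + 1.0),
}

# Evenly spaced grid from -10 to 10 in steps of 0.1 (index 100 is x = 0)
grid = [i / 10.0 for i in range(-100, 101)]
curves = {name: [f(x) for x in grid] for name, f in ACTIVATIONS.items()}

for name, ys in curves.items():
    print(f"{name:9s} f(-10)={ys[0]:+.4f} f(0)={ys[100]:+.4f} f(10)={ys[-1]:+.4f}")
```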

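Working with Data Sources

For most of this book, we will rely on datasets to fit machine learning algorithms. This section explains how to access each of these datasets through TensorFlow and Python.

Getting ready

Some of the datasets we will use are built into Python libraries, some require a Python script to download, and some must be downloaded manually through the Internet. Almost all of these datasets require an active Internet connection to retrieve the data.

How to do it…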

1. Iris data: This dataset is arguably the most classic dataset used in machine learning, and perhaps in all of statistics. It measures the sepal length, sepal width, petal length, and petal width of three different types of iris flowers: Iris setosa, Iris virginica, and Iris versicolor. There are 150 measurements overall, 50 for each species. To load the dataset in Python, we use the load_iris() function from scikit-learn's datasets module, as follows:

[mw_shl_code=python,true]from sklearn import datasets

iris = datasets.load_iris()
print(len(iris.data))
# 150
print(len(iris.target))
# 150
print(iris.data[0])  # Sepal length, Sepal width, Petal length, Petal width
# [ 5.1 3.5 1.4 0.2]
print(set(iris.target))  # I. setosa, I. versicolor, I. virginica
# {0, 1, 2}[/mw_shl_code]

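2. Birth weight data: The University of Massachusetts at Amherst has compiled many statistical datasets that are of interest (1). One such dataset measures child birth weight along with other demographic and medical measurements of the mother and the family history. There are 189 observations of 11 variables. Here is how to access the data in Python:

[mw_shl_code=python,true]import requests

birthdata_url = 'https://www.umass.edu/statdata/statdata/data/lowbwt.dat'
birth_file = requests.get(birthdata_url)
# Skip the descriptive lines at the top of the file
birth_data = birth_file.text.split('\r\n')[5:]
# split() with no argument splits on any run of whitespace
birth_header = [x for x in birth_data[0].split() if len(x) >= 1]
birth_data = [[float(x) for x in y.split() if len(x) >= 1] for y in birth_data[1:] if len(y) >= 1]
print(len(birth_data))
# 189
print(len(birth_data[0]))
# 11[/mw_shl_code]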
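Files like this one pad their columns with runs of spaces. Python's str.split() with no argument splits on any run of whitespace and drops empty strings, whereas split(' ') produces an empty field between every pair of repeated spaces (hence the len(x) >= 1 filters in the recipe). The sketch below wraps the parsing in a reusable helper and runs it on a small made-up sample, not the real lowbwt.dat contents; the helper name and its `skip_lines` parameter are our own:

```python
def parse_whitespace_table(text, skip_lines=0):
    """Parse a whitespace-delimited numeric table with one header line (illustrative helper).

    `skip_lines` descriptive lines are dropped before the header.
    Returns (header, rows), where rows are lists of floats.
    """
    lines = [line for line in text.splitlines()[skip_lines:] if line.strip()]
    header = lines[0].split()
    rows = [[float(value) for value in line.split()] for line in lines[1:]]
    return header, rows


# Made-up sample: one description line, a header, then space-padded columns
sample = """Made-up description line
A   B   C
 1   10   100
 2   20   200
"""
header, rows = parse_whitespace_table(sample, skip_lines=1)
print(header)  # ['A', 'B', 'C']
print(rows)    # [[1.0, 10.0, 100.0], [2.0, 20.0, 200.0]]
```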

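3. Boston Housing data: Carnegie Mellon University maintains a library of datasets in its StatLib library. This data is also easily accessible through the University of California at Irvine's Machine Learning Repository (2). There are 506 observations of house values along with various demographic data and housing attributes (14 variables). Here is how to access the data in Python:

[mw_shl_code=python,true]import requests

housing_url = 'https://archive.ics.uci.edu/ml/machine-learning-databases/housing/housing.data'
housing_header = ['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS', 'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT', 'MEDV']
housing_file = requests.get(housing_url)
# Columns are separated by runs of spaces, so split() is called with no argument
housing_data = [[float(x) for x in y.split() if len(x) >= 1] for y in housing_file.text.split('\n') if len(y) >= 1]
print(len(housing_data))
# 506
print(len(housing_data[0]))
# 14[/mw_shl_code]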
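The last column, MEDV (the median home value), is the usual prediction target, so a typical next step is to separate it from the feature columns. A minimal sketch on made-up rows; the helper name `split_features_target` and the sample values are illustrative, not taken from housing.data:

```python
def split_features_target(rows, header, target_name):
    """Separate the column named `target_name` from the feature columns (illustrative helper)."""
    target_index = header.index(target_name)
    features = [[v for j, v in enumerate(row) if j != target_index] for row in rows]
    target = [row[target_index] for row in rows]
    feature_names = [name for name in header if name != target_name]
    return feature_names, features, target


# Made-up three-column example with the target in the last column
header = ['RM', 'AGE', 'MEDV']
rows = [[6.0, 60.0, 24.0],
        [7.0, 40.0, 30.0]]
names, X, y = split_features_target(rows, header, 'MEDV')
print(names)  # ['RM', 'AGE']
print(X)      # [[6.0, 60.0], [7.0, 40.0]]
print(y)      # [24.0, 30.0]
```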

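4. MNIST handwriting data: MNIST (Modified National Institute of Standards and Technology) is a subset of the larger NIST handwriting database. The MNIST handwriting dataset is hosted on Yann LeCun's website (https://yann.lecun.com/exdb/mnist/). It is a database of 70,000 images of single-digit numbers (0-9), with 60,000 annotated for the training set and 10,000 for the test set. This dataset is used so often in image recognition that TensorFlow provides built-in functions to access it. In machine learning, it is also important to hold out validation data so that overfitting can be detected. Because of this, TensorFlow sets aside 5,000 images of the training set as a validation set. Here is how to access the data in Python:

[mw_shl_code=python,true]# TensorFlow 1.x only: this tutorial module was removed in TensorFlow 2.x
from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)
print(len(mnist.train.images))
# 55000
print(len(mnist.test.images))
# 10000
print(len(mnist.validation.images))
# 5000
print(mnist.train.labels[1, :])  # The label at index 1 is a 3
# [ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.][/mw_shl_code]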
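With one_hot=True, each label is a length-10 vector with a single 1 at the digit's position, so the digit is the index of the largest entry. A plain-Python sketch of both directions (the function names are illustrative; on the NumPy arrays TensorFlow returns, numpy.argmax does the decoding):

```python
def one_hot(digit, num_classes=10):
    """Encode a digit as a one-hot list, e.g. 3 -> [0, 0, 0, 1, 0, ...] (illustrative helper)."""
    return [1.0 if i == digit else 0.0 for i in range(num_classes)]


def decode_one_hot(vector):
    """Return the index of the largest entry, i.e. the encoded class."""
    return max(range(len(vector)), key=lambda i: vector[i])


label = [0., 0., 0., 1., 0., 0., 0., 0., 0., 0.]  # the label printed above
print(decode_one_hot(label))  # 3
print(one_hot(3) == label)    # True
```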

5. Spam-ham text data: UCI's machine learning dataset library (2) also holds a spam-ham text message dataset. We can access this .zip file and get the spam-ham text data as follows:

[mw_shl_code=python,true]import requests
import io
from zipfile import ZipFile

zip_url = 'http://archive.ics.uci.edu/ml/machine-learning-databases/00228/smsspamcollection.zip'
r = requests.get(zip_url)
z = ZipFile(io.BytesIO(r.content))
file = z.read('SMSSpamCollection')
# Format data: drop non-ASCII characters, then split into lines
text_data = file.decode()
text_data = text_data.encode('ascii', errors='ignore')
text_data = text_data.decode().split('\n')
# Each line is "<label>\t<message>"
text_data = [x.split('\t') for x in text_data if len(x) >= 1]
[text_data_target, text_data_train] = [list(x) for x in zip(*text_data)]
print(len(text_data_train))
# 5574
print(set(text_data_target))
# {'ham', 'spam'}
print(text_data_train[1])
# Ok lar... Joking wif u oni...[/mw_shl_code]
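The recipe splits each line on its tab character and then uses zip(*...) to transpose the [label, message] pairs into one list of labels and one list of messages. The same steps on a made-up three-line sample, plus a label count with collections.Counter (the helper name is ours; splitting only on the first tab is our own precaution in case a message contains a tab):

```python
from collections import Counter


def split_labeled_lines(text):
    """Turn 'label<TAB>message' lines into (labels, messages) lists (illustrative helper)."""
    # Split only on the first tab so a tab inside a message stays in the message
    pairs = [line.split('\t', 1) for line in text.split('\n') if len(line) >= 1]
    labels, messages = [list(column) for column in zip(*pairs)]
    return labels, messages


# Made-up sample in the same "label<TAB>message" layout
sample = "ham\tSee you at lunch\nspam\tYou won a prize, reply now\nham\tOk, thanks\n"
labels, messages = split_labeled_lines(sample)
print(labels)           # ['ham', 'spam', 'ham']
print(messages[1])      # You won a prize, reply now
print(Counter(labels))  # Counter({'ham': 2, 'spam': 1})
```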

6. Movie review data: Bo Pang of Cornell has released a movie review dataset that classifies reviews as good or bad (3). You can find the data on the website http://www.cs.cornell.edu/people/pabo/movie-review-data/. To download, extract, and transform this data, we run the following code:

[mw_shl_code=python,true]import requests
import io
import tarfile

movie_data_url = 'http://www.cs.cornell.edu/people/pabo/movie-review-data/rt-polaritydata.tar.gz'
r = requests.get(movie_data_url)
# Stream data into temp object
stream_data = io.BytesIO(r.content)
tmp = io.BytesIO()
while True:
    s = stream_data.read(16384)
    if not s:
        break
    tmp.write(s)
stream_data.close()
tmp.seek(0)
# Extract tar file
tar_file = tarfile.open(fileobj=tmp, mode="r:gz")
pos = tar_file.extractfile('rt-polaritydata/rt-polarity.pos')
neg = tar_file.extractfile('rt-polaritydata/rt-polarity.neg')
# Save pos/neg reviews (also deal with encoding)
pos_data = []
for line in pos:
    pos_data.append(line.decode('ISO-8859-1').encode('ascii', errors='ignore').decode())
neg_data = []
for line in neg:
    neg_data.append(line.decode('ISO-8859-1').encode('ascii', errors='ignore').decode())
tar_file.close()
print(len(pos_data))
# 5331
print(len(neg_data))
# 5331
# Print out first negative review
print(neg_data[0])
# simplistic , silly and tedious .[/mw_shl_code]
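Because r.content already holds the whole archive in memory, the manual copy loop (16,384 bytes at a time) is not strictly needed: tarfile.open can read straight from an io.BytesIO wrapper. Below is a sketch of that simplification as a helper (the name `read_tar_gz_lines` is ours), tested on a tiny archive built in memory rather than on the real download:

```python
import io
import tarfile


def read_tar_gz_lines(content, member_name, encoding='ISO-8859-1'):
    """Return the ASCII-only text lines of one member of an in-memory .tar.gz (illustrative helper)."""
    with tarfile.open(fileobj=io.BytesIO(content), mode='r:gz') as tar:
        member = tar.extractfile(member_name)
        # Decode, then drop characters that are not plain ASCII
        return [line.decode(encoding).encode('ascii', errors='ignore').decode()
                for line in member]


def make_tar_gz(files):
    """Build a .tar.gz archive in memory from a {name: bytes} mapping (test helper)."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode='w:gz') as tar:
        for name, data in files.items():
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buffer.getvalue()


# Made-up archive; the second line contains a Latin-1 character (\xe9)
archive = make_tar_gz({
    'rt-polaritydata/rt-polarity.neg': b'simplistic , silly and tedious .\ncaf\xe9 scene .\n',
})
print(read_tar_gz_lines(archive, 'rt-polaritydata/rt-polarity.neg'))
# ['simplistic , silly and tedious .\n', 'caf scene .\n']
```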

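7. CIFAR-10 image data: CIFAR-10, named after the Canadian Institute For Advanced Research, is a labeled subset of the 80 million tiny images dataset, a collection of color images that are each scaled to 32x32 pixels. There are 10 different target classes (airplane, automobile, bird, and so on). CIFAR-10 contains 60,000 images: 50,000 in the training set and 10,000 in the test set. Since we will use this dataset in multiple ways, and because it is one of our larger datasets, we will not run a download script each time we need it. To get this dataset, navigate to http://www.cs.toronto.edu/~kriz/cifar.html and download the CIFAR-10 dataset. We will explain how to use this dataset in the appropriate chapters.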
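Once downloaded, the "CIFAR-10 python version" archive holds pickled batch files (data_batch_1 to data_batch_5 and test_batch). According to the dataset's web page, each batch is a dictionary whose b'data' entry stores every image as a row of 3,072 values: 1,024 red values, then 1,024 green, then 1,024 blue, each channel in row-major 32x32 order. The sketch below assumes that layout; `unpickle` follows the loader suggested on that page, and `row_to_pixels` is our own illustrative helper:

```python
import pickle


def unpickle(path):
    """Load one CIFAR-10 python batch file as a dict with bytes keys."""
    with open(path, 'rb') as fo:
        return pickle.load(fo, encoding='bytes')


def row_to_pixels(row, size=32):
    """Convert a flat 3*size*size row (R plane, G plane, B plane) to [y][x][rgb] (illustrative)."""
    plane = size * size
    return [[[row[c * plane + y * size + x] for c in range(3)]
             for x in range(size)]
            for y in range(size)]


# Usage with the real files (the extraction folder name is an assumption):
# batch = unpickle('cifar-10-batches-py/data_batch_1')
# first_image = row_to_pixels(list(batch[b'data'][0]))
# first_label = batch[b'labels'][0]

# Synthetic row in which each value is its own position, to check the layout
fake_row = list(range(3 * 32 * 32))
pixels = row_to_pixels(fake_row)
print(pixels[0][0])  # [0, 1024, 2048]  (R, G, B of the top-left pixel)
print(pixels[0][1])  # [1, 1025, 2049]
```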

8. The works of Shakespeare text data: Project Gutenberg (5) is a project that releases electronic versions of free books. They have compiled all of the works of Shakespeare together; here is how to access the text file through Python:

[mw_shl_code=python,true]import requests

shakespeare_url = 'http://www.gutenberg.org/cache/epub/100/pg100.txt'
# Get Shakespeare text
response = requests.get(shakespeare_url)
shakespeare_file = response.content
# Decode binary into string
shakespeare_text = shakespeare_file.decode('utf-8')
# Drop first few descriptive paragraphs
# (the offset matches the version of the file used in the book)
shakespeare_text = shakespeare_text[7675:]
print(len(shakespeare_text))  # Number of characters
# 5582212[/mw_shl_code]
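The fixed offset 7675 only matches the exact version of pg100.txt used when the book was written; if the file changes, the cut lands in the wrong place. A sturdier alternative is to search for a marker line. The sketch below is illustrative: Project Gutenberg files typically contain a line beginning with "*** START OF", but treat that marker text as an assumption and check it against the file you download:

```python
def drop_header(text, marker):
    """Return the text after the line containing `marker`, or the whole text if it is absent."""
    start = text.find(marker)
    if start == -1:
        return text
    end_of_line = text.find('\n', start)
    return '' if end_of_line == -1 else text[end_of_line + 1:]


# Made-up sample imitating a header followed by a start marker (assumed marker text)
sample = ("Title: The Complete Works\n"
          "Some licensing text\n"
          "*** START OF THE PROJECT GUTENBERG EBOOK ***\n"
          "THE SONNETS\n")
print(drop_header(sample, '*** START OF'))  # THE SONNETS
```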

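9. English-German sentence translation data: The Tatoeba project (http://tatoeba.org) collects sentence translations in many languages. Its data has been released under a Creative Commons license. From this data, ManyThings.org (http://www.manythings.org) has compiled sentence-to-sentence translations into text files available for download. Here we will use the English-German translation file, but you can change the URL to whichever languages you would like to use:

[mw_shl_code=python,true]import requests
import io
from zipfile import ZipFile

sentence_url = 'http://www.manythings.org/anki/deu-eng.zip'
r = requests.get(sentence_url)
z = ZipFile(io.BytesIO(r.content))
file = z.read('deu.txt')
# Format data: drop non-ASCII characters, then split into lines
eng_ger_data = file.decode()
eng_ger_data = eng_ger_data.encode('ascii', errors='ignore')
eng_ger_data = eng_ger_data.decode().split('\n')
# Each line is "<English>\t<German>"; keep only those two columns
# (newer releases of the file may append an attribution column)
eng_ger_data = [x.split('\t')[:2] for x in eng_ger_data if len(x) >= 1]
[english_sentence, german_sentence] = [list(x) for x in zip(*eng_ger_data)]
print(len(english_sentence))
# 137673
print(len(german_sentence))
# 137673
print(eng_ger_data[10])
# ['I won!', 'Ich habe gewonnen!'][/mw_shl_code]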
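The URL and the file inside the archive follow a naming pattern (deu-eng.zip containing deu.txt). The sketch below assumes other language pairs use the same `<code>-eng.zip` / `<code>.txt` pattern (check the ManyThings.org download page for the codes it actually offers) and applies the tab-splitting step to a made-up sample that includes an extra third column. Both helper names are hypothetical:

```python
def manythings_paths(lang_code):
    """Hypothetical helper: build the zip URL and inner file name for a language code."""
    url = 'http://www.manythings.org/anki/{}-eng.zip'.format(lang_code)
    inner_file = '{}.txt'.format(lang_code)
    return url, inner_file


def parse_sentence_pairs(text):
    """Split 'English<TAB>Other[<TAB>extra...]' lines into two parallel lists (hypothetical helper)."""
    pairs = [line.split('\t')[:2] for line in text.split('\n') if len(line) >= 1]
    english, other = [list(column) for column in zip(*pairs)]
    return english, other


print(manythings_paths('deu'))
# ('http://www.manythings.org/anki/deu-eng.zip', 'deu.txt')

# Made-up sample; the second line carries an extra attribution-style column
sample = "Hi.\tHallo!\nI won!\tIch habe gewonnen!\tsome attribution\n"
english, german = parse_sentence_pairs(sample)
print(english)  # ['Hi.', 'I won!']
print(german)   # ['Hallo!', 'Ich habe gewonnen!']
```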

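How it works…

When it comes time to use one of these datasets in a recipe, we will refer you to this section and assume that the data is loaded as described above. If further data transformation or preprocessing is needed, that code will be provided in the recipe itself.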

See also

1. Hosmer, D. W., Lemeshow, S., and Sturdivant, R. X. (2013). Applied Logistic Regression, 3rd Edition. https://www.umass.edu/statdata/statdata/data/lowbwt.txt

2. Lichman, M. (2013). UCI Machine Learning Repository. http://archive.ics.uci.edu/ml. Irvine, CA: University of California, School of Information and Computer Science.

3. Pang, B., Lee, L., and Vaithyanathan, S. (2002). Thumbs up? Sentiment Classification using Machine Learning Techniques. Proceedings of EMNLP 2002. http://www.cs.cornell.edu/people/pabo/movie-review-data/

4. Krizhevsky, A. (2009). Learning Multiple Layers of Features from Tiny Images. http://www.cs.toronto.edu/~kriz/cifar.html

5. Project Gutenberg. Accessed April 2016. http://www.gutenberg.org/

