Last edited by levycui on 2018-1-24 13:50

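Guiding questions:

1. How is back propagation used when training a machine learning model?

2. How do we update variables so that the loss function is minimized?

3. How do we use gradient descent?

4. How can we deal with gradient descent getting stuck or slowing down?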

Previous post: TensorFlow ML cookbook, Chapter 2, Sections 3 and 4: Working with Multiple Layers and Implementing Loss Functions

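Implementing Back Propagation

One of the benefits of using TensorFlow is that it can keep track of operations and automatically update model variables based on back propagation. In this recipe, we will introduce how to use this to our advantage when training machine learning models.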

Getting ready

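Now we will introduce how to change the variables in our model so that a loss function is minimized. We have already learned how to use objects and operations, and how to create loss functions that measure the distance between our predictions and targets. Now we just have to tell TensorFlow how to back propagate errors through the computational graph to update the variables and minimize the loss function. This is done by declaring an optimization function. Once an optimization function is declared, TensorFlow goes through the graph and works out the back propagation terms for all of our computations. When we feed data in and minimize the loss function, TensorFlow modifies the variables in the graph accordingly.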
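To make "back propagation terms" concrete, here is a plain-Python sketch (no TensorFlow) of what gets computed for the regression graph used below: a forward pass (`output = x * A`, then the squared error) and a backward pass that applies the chain rule from the loss back to `A`. The function names and the sample values `A=2.0`, `x=1.1`, `y=10.0` are illustrative only. A finite-difference estimate is used to check the hand-derived gradient.

```python
def forward_backward(A, x, y):
    """Forward and backward pass for the graph: output = x * A, loss = (output - y) ** 2."""
    # Forward pass: the same two operations as the recipe (multiply, then squared error)
    output = x * A
    loss = (output - y) ** 2
    # Backward pass: chain rule from the loss back to the variable A
    d_loss_d_output = 2.0 * (output - y)
    d_output_d_A = x
    d_loss_d_A = d_loss_d_output * d_output_d_A
    return loss, d_loss_d_A


def numerical_grad(A, x, y, h=1e-6):
    """Central finite-difference estimate, used only to check the hand-derived gradient."""
    loss_plus, _ = forward_backward(A + h, x, y)
    loss_minus, _ = forward_backward(A - h, x, y)
    return (loss_plus - loss_minus) / (2.0 * h)


loss, grad = forward_backward(A=2.0, x=1.1, y=10.0)
check = numerical_grad(2.0, 1.1, 10.0)
print(loss, grad, check)
```

An optimizer only needs this gradient. TensorFlow derives it automatically for every variable in the graph, however many operations lie between the variable and the loss.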

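For this recipe, we will start with a very simple regression algorithm. We will sample random numbers from a normal distribution with mean 1 and standard deviation 0.1. Then we will run the numbers through one operation, which multiplies them by a variable, A. The loss function will be the L2 loss (the squared difference) between the output and the target, which is always the value 10. Theoretically, the best value for A is 10, since our data has mean 1.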
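The best value of A can also be checked in closed form. Minimizing the summed squared error over A gives A* = Σxy / Σx². The sketch below uses Python's `random` module in place of NumPy, and the seed is arbitrary. Because the mean of x² is about 1 + 0.1² = 1.01 rather than 1, A* typically lands slightly below 10 (around 9.9) rather than exactly on it.

```python
import random

random.seed(42)
# Same setup as the recipe: x ~ N(1, 0.1), target always 10
x_vals = [random.gauss(1.0, 0.1) for _ in range(100)]
y_vals = [10.0] * 100

# Minimizing sum((A*x - y)**2) over A gives A* = sum(x*y) / sum(x*x)
A_best = sum(x * y for x, y in zip(x_vals, y_vals)) / sum(x * x for x in x_vals)
print(A_best)
```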

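The second example is a very simple binary classification algorithm. Here we will generate 100 numbers, 50 from each of two normal distributions, N(-1,1) and N(3,1). All the numbers from N(-1,1) will be in target class 0, and all the numbers from N(3,1) will be in target class 1. The model that separates these numbers will be a sigmoid function of a translation. In other words, the model will be sigmoid(x + A), where A is a variable we will fit. Theoretically, A will be equal to -1. We arrive at this number because, if m1 and m2 are the means of the two normal distributions, the value that must be added to them to translate them equidistant from zero is -(m1 + m2)/2. We will see how TensorFlow arrives at that number in the second example.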
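The arithmetic behind A = -1 can be checked directly. With m1 = -1 and m2 = 3, the shift -(m1 + m2)/2 moves the point halfway between the two means onto 0. That is exactly where the sigmoid outputs 0.5, so it acts as the decision boundary. This is a plain-Python illustration. The `sigmoid` helper is written out here rather than taken from TensorFlow.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


m1, m2 = -1.0, 3.0                # means of N(-1, 1) and N(3, 1)
A = -(m1 + m2) / 2                # shift that places the two means equidistant from 0
midpoint = (m1 + m2) / 2          # point halfway between the classes

print(A)                          # -1.0
print(sigmoid(midpoint + A))      # 0.5: the decision boundary sits at the midpoint
print(sigmoid(m1 + A) < 0.5 < sigmoid(m2 + A))  # True
```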

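While specifying a good learning rate helps the algorithm converge, we must also specify a type of optimization. In both of the preceding examples we use standard gradient descent, which is implemented with the TensorFlow function GradientDescentOptimizer().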
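For reference, the standard gradient descent step can be written as follows. Here η is the learning rate (the `learning_rate` argument of GradientDescentOptimizer()) and t counts iterations; both symbols are notation introduced here. The right-hand side applies the step to the regression example, with one data point x and target y.

```latex
A_{t+1} = A_t - \eta \,\frac{\partial L}{\partial A}\bigg|_{A = A_t},
\qquad
L(A) = (xA - y)^2
\;\Longrightarrow\;
\frac{\partial L}{\partial A} = 2x\,(xA - y)
```

With x close to 1 and y = 10, the gradient is negative whenever A is below 10, so each step pushes A upward toward 10.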

How to do it…

Here is how the regression example works:

1. We start by loading the numerical Python package, numpy, and tensorflow:

[mw_shl_code=python,true]import numpy as np
import tensorflow as tf[/mw_shl_code]

2. Now we start a graph session:

[mw_shl_code=python,true]sess = tf.Session()[/mw_shl_code]

3. Next we create the data, the placeholders, and the A variable:

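[mw_shl_code=python,true]# 100 inputs drawn from N(1, 0.1); every target is 10
x_vals = np.random.normal(1, 0.1, 100)
y_vals = np.repeat(10., 100)
# Placeholders for one (x, y) pair at a time
x_data = tf.placeholder(shape=[1], dtype=tf.float32)
y_target = tf.placeholder(shape=[1], dtype=tf.float32)
# The variable we want TensorFlow to fit
A = tf.Variable(tf.random_normal(shape=[1]))[/mw_shl_code]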

4. We add the multiplication operation to our graph:

[mw_shl_code=python,true]my_output = tf.multiply(x_data, A)[/mw_shl_code]

5. Next we add our L2 loss function between the multiplication output and the target data:

[mw_shl_code=python,true]loss = tf.square(my_output - y_target)[/mw_shl_code]

6. Before we can run anything, we have to initialize the variables:

[mw_shl_code=python,true]init = tf.global_variables_initializer()
sess.run(init)[/mw_shl_code]

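7. Now we have to declare a way to optimize the variables in our graph, so we declare an optimizer algorithm. Most optimization algorithms need to know how far to step in each iteration, and this distance is controlled by the learning rate. If the learning rate is too big, the algorithm might overshoot the minimum. If it is too small, the algorithm might take too long to converge. These symptoms resemble those of the exploding and vanishing gradient problems. The learning rate has a big influence on convergence, and we will discuss it at the end of the section. Although we use the standard gradient descent algorithm here, there are many other optimization algorithms that work differently and can do better or worse depending on the problem. For a great overview of different optimization algorithms, see the paper by Sebastian Ruder in the See also section at the end of this recipe: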

[mw_shl_code=python,true]my_opt = tf.train.GradientDescentOptimizer(learning_rate=0.02)
train_step = my_opt.minimize(loss)[/mw_shl_code]
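The learning-rate trade-off can be seen on a toy problem. The sketch below assumes x fixed at 1, so the regression loss becomes f(A) = (A - 10)², and it runs plain gradient descent for 100 steps with three rates. 0.0001 barely moves. The recipe's 0.02 gets close to 10. 1.1 overshoots by more on every step and diverges. The rates other than 0.02 are illustrative choices, not values from the recipe.

```python
def gradient_descent(learning_rate, steps=100, start=0.0, target=10.0):
    """Minimize f(A) = (A - target)**2 with plain gradient descent and return the final A."""
    A = start
    for _ in range(steps):
        grad = 2.0 * (A - target)      # derivative of (A - target)**2
        A -= learning_rate * grad
    return A


too_small = gradient_descent(0.0001)   # barely moves in 100 steps
reasonable = gradient_descent(0.02)    # the rate used in this recipe
too_large = gradient_descent(1.1)      # each step overshoots and lands further from 10

for name, value in [("too small", too_small), ("reasonable", reasonable), ("too large", too_large)]:
    print(name, value)
```

On this quadratic the distance to 10 is multiplied by (1 - 2 * learning_rate) on every step. Any rate above 1.0 therefore makes that factor larger than 1 in magnitude, and the iterates blow up.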

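8. The final step is to loop through our training algorithm and tell TensorFlow to train many times. We will do this 100 times and print out the results every 25th iteration. To train, we select a random x and y entry and feed it through the graph. TensorFlow will automatically compute the loss and slightly adjust A to minimize it: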

[mw_shl_code=python,true]for i in range(100):
    # Pick one random (x, y) pair and take a single gradient step on it
    rand_index = np.random.choice(100)
    rand_x = [x_vals[rand_index]]
    rand_y = [y_vals[rand_index]]
    sess.run(train_step, feed_dict={x_data: rand_x, y_target: rand_y})
    if (i+1) % 25 == 0:
        print('Step #' + str(i+1) + ' A = ' + str(sess.run(A)))
        print('Loss = ' + str(sess.run(loss, feed_dict={x_data: rand_x, y_target: rand_y})))[/mw_shl_code]

Here is the output:

[mw_shl_code=python,true]Step #25 A = [ 6.23402166]
Loss = 16.3173
Step #50 A = [ 8.50733757]
Loss = 3.56651
Step #75 A = [ 9.37753201]
Loss = 3.03149
Step #100 A = [ 9.80041122]
Loss = 0.0990248[/mw_shl_code]
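For readers without a TensorFlow 1.x environment, the loop above can be mirrored in plain Python. This is a sketch, not the recipe's code. It writes out by hand the same kind of update that GradientDescentOptimizer applies, using the gradient of (x*A - y)² with respect to A. `random.gauss` and `random.randrange` stand in for the NumPy and TensorFlow random calls, and the seed is arbitrary, so the printed values will differ from the output above.

```python
import random

random.seed(0)
x_vals = [random.gauss(1.0, 0.1) for _ in range(100)]   # like np.random.normal(1, 0.1, 100)
y_vals = [10.0] * 100                                    # like np.repeat(10., 100)
A = random.gauss(0.0, 1.0)                               # like tf.random_normal(shape=[1])
learning_rate = 0.02

for i in range(100):
    j = random.randrange(100)                # pick one random training example
    x, y = x_vals[j], y_vals[j]
    grad = 2.0 * x * (x * A - y)             # d/dA of (x*A - y)**2
    A -= learning_rate * grad                # plain gradient descent step
    if (i + 1) % 25 == 0:
        print('Step #' + str(i + 1) + ' A = ' + str(A) + ' Loss = ' + str((x * A - y) ** 2))
```

Because every x is close to 1, each step shrinks the distance from A to about 10 by roughly 4%. That matches the steady approach toward 10 in the TensorFlow output.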

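9. Now we will introduce the code for the simple classification example. We can reuse the same TensorFlow script if we reset the graph first. Remember that we are looking for an optimal translation, A, that shifts the two distributions so that they are centered on the origin; the sigmoid function then splits them into two different classes.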

10. First we reset the graph and reinitialize the graph session:

[mw_shl_code=python,true]from tensorflow.python.framework import ops
ops.reset_default_graph()
sess = tf.Session()[/mw_shl_code]

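11. Next we create the data from two different normal distributions, N(-1,1) and N(3,1). We also generate the target labels, the placeholders for the data, and the bias variable, A: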

[mw_shl_code=python,true]# 50 points from N(-1, 1) labeled 0, then 50 points from N(3, 1) labeled 1
x_vals = np.concatenate((np.random.normal(-1, 1, 50), np.random.normal(3, 1, 50)))
y_vals = np.concatenate((np.repeat(0., 50), np.repeat(1., 50)))
x_data = tf.placeholder(shape=[1], dtype=tf.float32)
y_target = tf.placeholder(shape=[1], dtype=tf.float32)
# Start the bias far from its optimum so the optimizer has visible work to do
A = tf.Variable(tf.random_normal(mean=10, shape=[1]))[/mw_shl_code]

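12. Next we add the translation operation to the graph. Remember that we do not have to wrap this in a sigmoid function, because the loss function will do that for us: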

[mw_shl_code=python,true]my_output = tf.add(x_data, A) [/mw_shl_code]

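13. Because this particular loss function expects batches of data with an extra dimension (the added dimension is the batch number), we add an extra dimension to the output with the function expand_dims(). In the next section we will discuss how to use variable-sized batches in training. For now, we will again use just one random data point at a time: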

[mw_shl_code=python,true]my_output_expanded = tf.expand_dims(my_output, 0)
y_target_expanded = tf.expand_dims(y_target, 0)[/mw_shl_code]
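The shape change is easy to see with NumPy's `np.expand_dims`, which follows the same convention as `tf.expand_dims` here: it inserts a length-1 axis at the given position. The value 2.5 is an arbitrary stand-in for one model output. The extra axis is also why the classification loss below prints as a nested `[[...]]` value.

```python
import numpy as np

my_output = np.array([2.5])                      # one data point, shape (1,) like the placeholder
my_output_expanded = np.expand_dims(my_output, 0)

print(my_output.shape)            # (1,)
print(my_output_expanded.shape)   # (1, 1)
```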

14. Next we initialize our one variable, A:

[mw_shl_code=python,true]init = tf.global_variables_initializer()
sess.run(init)[/mw_shl_code]

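15. Now we declare our loss function. We will use a cross entropy computed on unscaled logits, which are transformed with a sigmoid function. TensorFlow provides all of this in one function in the neural network package, nn.sigmoid_cross_entropy_with_logits(). As stated before, it expects its arguments to have specific dimensions, so we pass the expanded outputs and targets: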

[mw_shl_code=python,true]xentropy = tf.nn.sigmoid_cross_entropy_with_logits(logits=my_output_expanded, labels=y_target_expanded)[/mw_shl_code]
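Why pass logits rather than probabilities? TensorFlow's documentation for `sigmoid_cross_entropy_with_logits` describes computing the loss as `max(x, 0) - x * z + log(1 + exp(-abs(x)))` for logits x and labels z. That is an algebraic rearrangement of the usual cross entropy that never takes the log of 0. The plain-Python sketch below (the helper names are ours) checks that the two forms agree on moderate logits, and that only the rearranged form survives a large logit.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def naive_xent(logit, label):
    """Cross entropy computed from the sigmoid probability directly."""
    p = sigmoid(logit)
    return -(label * math.log(p) + (1.0 - label) * math.log(1.0 - p))


def stable_xent(logit, label):
    """Algebraically equal rearrangement that avoids log(0) for large |logit|."""
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


# The two forms agree on moderate logits...
for logit in (-3.0, -0.5, 0.0, 2.0, 5.0):
    for label in (0.0, 1.0):
        assert abs(naive_xent(logit, label) - stable_xent(logit, label)) < 1e-9

# ...but sigmoid(50.0) rounds to exactly 1.0, so naive_xent(50.0, 0.0) would call
# math.log(0.0) and raise ValueError, while the stable form is fine.
print(stable_xent(50.0, 0.0))   # about 50.0
```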

16. Just as in the regression example, we need to add an optimizer function to the graph so that TensorFlow knows how to update the bias variable:

[mw_shl_code=python,true]my_opt = tf.train.GradientDescentOptimizer(0.05)
train_step = my_opt.minimize(xentropy)[/mw_shl_code]

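17. Finally, we loop 1,400 times over randomly selected data points and update the variable A accordingly. Every 200 iterations, we print out the value of A and the loss: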

[mw_shl_code=python,true]for i in range(1400):
    # Pick one random data point and take a single gradient step on it
    rand_index = np.random.choice(100)
    rand_x = [x_vals[rand_index]]
    rand_y = [y_vals[rand_index]]
    sess.run(train_step, feed_dict={x_data: rand_x, y_target: rand_y})
    if (i+1) % 200 == 0:
        print('Step #' + str(i+1) + ' A = ' + str(sess.run(A)))
        print('Loss = ' + str(sess.run(xentropy, feed_dict={x_data: rand_x, y_target: rand_y})))[/mw_shl_code]

Here is the output:

[mw_shl_code=python,true]Step #200 A = [ 3.59597969]
Loss = [[ 0.00126199]]
Step #400 A = [ 0.50947344]
Loss = [[ 0.01149425]]
Step #600 A = [-0.50994617]
Loss = [[ 0.14271219]]
Step #800 A = [-0.76606178]
Loss = [[ 0.18807337]]
Step #1000 A = [-0.90859312]
Loss = [[ 0.02346182]]
Step #1200 A = [-0.86169094]
Loss = [[ 0.05427232]]
Step #1400 A = [-1.08486211]
Loss = [[ 0.04099189]][/mw_shl_code]
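The classification loop can be sketched in plain Python as well. It relies on one fact: for sigmoid cross entropy, the derivative of the loss with respect to the logit is sigmoid(logit) - label. Since logit = x + A, that is also the derivative with respect to A. The fixed start A = 10.0 (the mean the recipe draws from), the seed and the 3,000 iterations are assumptions made so the sketch settles reliably. Sampling noise keeps the result near, not exactly at, -1.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


random.seed(1)
x_vals = [random.gauss(-1.0, 1.0) for _ in range(50)] + [random.gauss(3.0, 1.0) for _ in range(50)]
y_vals = [0.0] * 50 + [1.0] * 50
A = 10.0              # the recipe draws its starting value from N(10, 1)
learning_rate = 0.05

for i in range(3000):
    j = random.randrange(100)
    logit = x_vals[j] + A
    # d(loss)/dA = sigmoid(logit) - label, because d(logit)/dA = 1
    A -= learning_rate * (sigmoid(logit) - y_vals[j])

print(A)  # close to -1, up to sampling noise
```

Points labeled 0 push A down and points labeled 1 push it up. A settles where the two pulls balance, which for these symmetric classes is near the midpoint shift of -1.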

How it works…

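As a recap, for both examples we did the following:

1. Created the data.

2. Initialized placeholders and variables.

3. Created a loss function.

4. Defined an optimization algorithm.

5. Finally, iterated across random data samples to update our variables step by step.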

There's more…

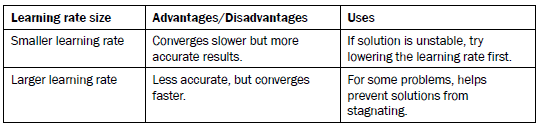

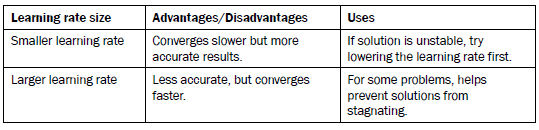

我们之前已经提到,优化算法对学习速率的选择是敏感的。 以简明的方式总结这个选择的效果是重要的:

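Sometimes the standard gradient descent algorithm can get stuck or slow down significantly. This can happen when the optimization gets stuck in the flat region of a saddle point. To combat this, another algorithm adds a momentum term, which adds on a fraction of the previous step's gradient descent value. TensorFlow has this built in as the MomentumOptimizer() function.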
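A minimal plain-Python sketch of the momentum idea follows. It mirrors the accumulation form that TensorFlow documents for MomentumOptimizer(): `accumulation = momentum * accumulation + gradient`, then `variable -= learning_rate * accumulation`. The test function (A - 10)², the small learning rate of 0.01 and momentum 0.9 are illustrative choices, picked so that plain gradient descent crawls.

```python
def minimize(grad_fn, start, learning_rate, steps, momentum=0.0):
    """Gradient descent with an optional momentum accumulator.

    With momentum=0.0 this reduces to plain gradient descent."""
    x = start
    accumulation = 0.0
    for _ in range(steps):
        # Keep a decaying sum of past gradients, then step along it
        accumulation = momentum * accumulation + grad_fn(x)
        x -= learning_rate * accumulation
    return x


def grad(a):
    """Gradient of f(a) = (a - 10)**2."""
    return 2.0 * (a - 10.0)


plain = minimize(grad, start=0.0, learning_rate=0.01, steps=50)
with_momentum = minimize(grad, start=0.0, learning_rate=0.01, steps=50, momentum=0.9)
print(abs(plain - 10.0), abs(with_momentum - 10.0))
```

After 50 steps plain gradient descent is still more than 3 units from the minimum. With momentum, consecutive gradients pointing the same way build up speed, and the distance falls well below 1.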

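Another variant is to vary the optimizer step for each variable in our model. Ideally, we would like to take larger steps for slowly changing variables and smaller steps for quickly changing variables. We will not go into the mathematics of this approach, but a common implementation of this idea is called the Adagrad algorithm. This algorithm takes into account the whole history of each variable's gradients. Again, TensorFlow provides it as the function AdagradOptimizer().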
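Here is a minimal plain-Python sketch of the Adagrad idea. Each parameter divides its step by the square root of its own accumulated squared gradients. The test function f(a, b) = 50a² + 0.5b² (steep in a, flat in b), the starting point and `eps` are illustrative choices. TensorFlow's AdagradOptimizer() differs in details; for example, it starts its accumulator from a small positive value rather than zero.

```python
import math


def adagrad(grad_fn, params, learning_rate=0.1, steps=1, eps=1e-8):
    """Minimal Adagrad sketch: each parameter keeps its own running sum of squared gradients."""
    params = list(params)
    accum = [0.0] * len(params)
    for _ in range(steps):
        grads = grad_fn(params)
        for i, g in enumerate(grads):
            accum[i] += g * g
            # Dividing by the root of the accumulated history shrinks steps for
            # parameters that have seen large gradients and enlarges them for the rest
            params[i] -= learning_rate * g / (math.sqrt(accum[i]) + eps)
    return params, accum


def grad_fn(p):
    """Gradient of f(a, b) = 50*a**2 + 0.5*b**2."""
    a, b = p
    return [100.0 * a, 1.0 * b]


one_step, _ = adagrad(grad_fn, [1.0, 1.0], learning_rate=0.1, steps=1)
print(one_step)   # both parameters move by about 0.1
```

Both parameters move by 0.1 on the first step even though their gradients differ by a factor of 100. Plain gradient descent with the same rate would send a from 1.0 to -9.0 and move b only to 0.9.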

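Sometimes Adagrad drives the effective step size toward zero too soon, because its accumulated history of squared gradients only ever grows. A solution is to limit how much of that history is used. This is called the Adadelta algorithm, and we can apply it with the function AdadeltaOptimizer().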
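The sketch below follows the ADADELTA update as described in Zeiler's paper (see See also). Adagrad's ever-growing sum is replaced by exponentially decaying averages of squared gradients and squared updates, controlled by `rho`. The values `rho=0.95` and `eps=1e-6` are illustrative. TensorFlow's AdadeltaOptimizer() also takes a `learning_rate` argument that scales the update; this sketch leaves it out.

```python
import math


def adadelta_step(x, g, state, rho=0.95, eps=1e-6):
    """One ADADELTA update for a scalar parameter x with gradient g.

    state = (avg_sq_grad, avg_sq_update), both exponentially decaying averages."""
    avg_sq_grad, avg_sq_update = state
    avg_sq_grad = rho * avg_sq_grad + (1.0 - rho) * g * g
    update = -math.sqrt(avg_sq_update + eps) / math.sqrt(avg_sq_grad + eps) * g
    avg_sq_update = rho * avg_sq_update + (1.0 - rho) * update * update
    return x + update, (avg_sq_grad, avg_sq_update)


# Gradient memory under a constant gradient of 1.0 for 1000 steps:
adagrad_sum = 0.0      # Adagrad: the sum keeps growing, so its steps keep shrinking
adadelta_avg = 0.0     # ADADELTA: a decaying average that levels off
for _ in range(1000):
    adagrad_sum += 1.0
    adadelta_avg = 0.95 * adadelta_avg + 0.05 * 1.0
print(adagrad_sum, adadelta_avg)   # 1000.0 and (very nearly) 1.0

# One step on f(x) = (x - 3)**2 starting from x = 0 (gradient -6)
x1, state = adadelta_step(0.0, 2.0 * (0.0 - 3.0), (0.0, 0.0))
print(x1)  # a small positive step toward 3
```

With a constant gradient, Adagrad's divisor would be the square root of 1000 after 1000 steps and would keep growing. The decaying average stays near 1, so the step size does not collapse.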

There are a few other implementations of gradient descent variants. For these, we refer the reader to the TensorFlow documentation at: https://www.tensorflow.org/api_docs/python/train/optimizers.

See also

For some references on optimization algorithms and learning rates, see the following papers and articles:

Kingma, D. P., Ba, J. Adam: A Method for Stochastic Optimization. ICLR 2015. https://arxiv.org/pdf/1412.6980.pdf

Ruder, S. An Overview of Gradient Descent Optimization Algorithms. 2016. https://arxiv.org/pdf/1609.04747v1.pdf

Zeiler, M. ADADelta: An Adaptive Learning Rate Method. 2012. http://www.matthewzeiler.com/pubs/googleTR2012/googleTR2012.pdf
