Last edited by levycui on 2018-9-11 15:38

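Reading guide:

1. How should nearest neighbor methods be understood?

2. How can nearest neighbors be used to predict housing values?

3. How are the predictions and the mean squared error (MSE) of the target values declared?

4. How is the most common weighting method used?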


Previous post: TensorFlow ML cookbook, Chapter 4, Sections 4 and 5: Implementing a Non-Linear SVM and Implementing a Multi-Class SVM

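Nearest Neighbor Methods

This chapter focuses on nearest neighbor methods and how to implement them in TensorFlow. We start with an introduction to the method and show how to implement it in various forms, and the chapter ends with examples of address matching and image recognition. This is what we will cover:

- Working with Nearest Neighbors

- Working with Text-Based Distances

- Computing Mixed Distance Functions

- Using an Address Matching Example

- Using Nearest Neighbors for Image Recognition

Note that all the code is available online at https://github.com/nfmcclure/tensorflow_cookbook.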

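Introduction

Nearest neighbor methods are based on a simple idea. We treat the training set as the model and make predictions for new points based on how close they are to points in the training set. The most naive approach is to predict the class of the single closest training point. But since most datasets contain some noise, a more common approach is to take a weighted average over a set of k nearest neighbors. This method is called k-nearest neighbors (k-NN).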

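Given a training dataset with corresponding targets, we can make a prediction for a point z by looking at a set of its nearest neighbors. The actual prediction method depends on whether we are doing regression (continuous targets) or classification (discrete targets).

For discrete classification targets, the prediction can be given by a maximum voting scheme weighted by the distance to the prediction point.

Here, the prediction f(z) is the class j with the largest total weighted vote. Each neighboring training point i contributes a weight that depends on its distance to the prediction point, and an indicator function makes point i count toward class j only when it belongs to class j.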
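One way to write the voting rule described above, as a sketch under assumed notation: $\varphi$ is the weighting function, $d_i$ is the distance from z to its i-th nearest neighbor, and $I_{ij}$ is the indicator that neighbor i belongs to class j. These symbol names are chosen here for illustration.

```latex
f(z) = \arg\max_{j} \sum_{i=1}^{k} \varphi(d_i)\, I_{ij},
\qquad
I_{ij} =
\begin{cases}
1 & \text{if point } i \text{ is in class } j \\
0 & \text{otherwise}
\end{cases}
```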

For continuous regression targets, the prediction is given by a weighted average of the k points nearest to the prediction point:

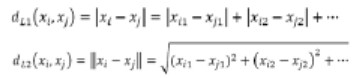

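Clearly, the prediction depends heavily on the choice of the distance metric, d.

Common choices for the distance metric are the L1 and L2 distances: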
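For reference, the standard definitions of these two distances between points $x_i$ and $x_j$ can be written as follows, where m indexes the features (the index names are assumptions made for illustration):

```latex
d_{L1}(x_i, x_j) = \sum_{m} \lvert x_{im} - x_{jm} \rvert,
\qquad
d_{L2}(x_i, x_j) = \sqrt{\sum_{m} \left( x_{im} - x_{jm} \right)^2}
```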

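There are many other distance metrics we could choose. In this chapter, we explore the L1 and L2 metrics as well as edit and textual distances.

We also have to choose how to weight the distances. A straightforward way is to weight by the distance itself. Points that are farther from the prediction point should have less influence than nearer points. The most common way to weight is by the normalized inverse of the distance. We will implement this method in the next recipe.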
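As a minimal NumPy sketch of normalized inverse-distance weighting: the helper name `inverse_distance_weights`, the `eps` guard against division by zero, and the sample distances are all assumptions made for illustration, not part of the recipe.

```python
import numpy as np

def inverse_distance_weights(distances, eps=1e-8):
    """Return weights proportional to 1/d that sum to 1 (eps avoids division by zero)."""
    inv = 1.0 / (np.asarray(distances, dtype=float) + eps)
    return inv / inv.sum()

# Hypothetical distances from a query point to its k = 3 nearest neighbors.
distances = [1.0, 2.0, 4.0]
weights = inverse_distance_weights(distances)
print(weights)  # the closest neighbor receives the largest weight
```

With these weights, a neighbor twice as far away contributes half as much to the prediction.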

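Working with Nearest Neighbors

We start this chapter by implementing nearest neighbors to predict housing values. This is a good place to start because we will be dealing with numerical features and continuous targets.

Getting ready

To illustrate how making predictions with nearest neighbors works in TensorFlow, we will use the Boston housing dataset. Here we predict the median neighborhood housing value as a function of several features.

Since we treat the training set as the trained model, we find the k nearest neighbors of each prediction point and take a weighted average of their target values.

How to do it…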

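1. First, we load the required libraries and start a graph session. We will use the requests module to load the Boston housing data from the UCI Machine Learning Repository:

[mw_shl_code=python,true]import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
import requests

# Start a graph session (TensorFlow 1.x API)
sess = tf.Session()[/mw_shl_code]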

2. Next, we load the data using the requests module:

[mw_shl_code=python,true]housing_url = 'https://archive.ics.uci.edu/ml/machine-learning-databases/housing/housing.data'
housing_header = ['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS', 'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT', 'MEDV']
cols_used = ['CRIM', 'INDUS', 'NOX', 'RM', 'AGE', 'DIS', 'TAX', 'PTRATIO', 'B', 'LSTAT']
num_features = len(cols_used)

# Request data
housing_file = requests.get(housing_url)

# Parse data: one row per non-empty line, one float per non-empty field
housing_data = [[float(x) for x in y.split(' ') if len(x)>=1] for y in housing_file.text.split('\n') if len(y)>=1][/mw_shl_code]

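3. Next, we separate the data into dependent and independent features. We will predict the last variable, MEDV, which is the median value for the group of houses. We will not use the features ZN, CHAS, and RAD because they are uninformative or binary:

[mw_shl_code=python,true]# Target: MEDV (column 13), as a column vector
y_vals = np.transpose([np.array([y[13] for y in housing_data])])
# Features: keep only the columns listed in cols_used
x_vals = np.array([[x for i,x in enumerate(y) if housing_header[i] in cols_used] for y in housing_data])
# Min-max scale each feature to the range [0, 1]
x_vals = (x_vals - x_vals.min(0)) / x_vals.ptp(0)[/mw_shl_code]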
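The last line of this step rescales every feature to the range [0, 1], so that no single feature dominates the distance. A small sketch of the same min-max scaling on made-up numbers (the array `x` is a hypothetical stand-in for the housing features):

```python
import numpy as np

# Hypothetical feature matrix: 3 rows, 2 features on very different scales.
x = np.array([[1.0, 10.0],
              [2.0, 30.0],
              [3.0, 20.0]])

col_min = x.min(axis=0)
col_range = x.max(axis=0) - col_min  # same quantity as the peak-to-peak range per column
x_scaled = (x - col_min) / col_range
print(x_scaled)
```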

4. Now we split the x and y values into training and test sets. We create the training set by selecting about 80% of the rows at random and leave the remaining 20% for the test set:

[mw_shl_code=python,true]train_indices = np.random.choice(len(x_vals), round(len(x_vals)*0.8), replace=False)
test_indices = np.array(list(set(range(len(x_vals))) - set(train_indices)))
x_vals_train = x_vals[train_indices]
x_vals_test = x_vals[test_indices]
y_vals_train = y_vals[train_indices]
y_vals_test = y_vals[test_indices][/mw_shl_code]

5. Next, we declare our k value and batch size:

[mw_shl_code=python,true]k = 4
batch_size = len(x_vals_test)[/mw_shl_code]

6. Next, we declare our placeholders. Remember that there are no model variables to train, because the model is determined entirely by the training set:

[mw_shl_code=python,true]x_data_train = tf.placeholder(shape=[None, num_features], dtype=tf.float32)
x_data_test = tf.placeholder(shape=[None, num_features], dtype=tf.float32)
y_target_train = tf.placeholder(shape=[None, 1], dtype=tf.float32)
y_target_test = tf.placeholder(shape=[None, 1], dtype=tf.float32)[/mw_shl_code]

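7. Next, we create the distance function for a batch of test points. Here, we illustrate the use of the L1 distance:

[mw_shl_code=python,true]# Broadcast (n_train, features) against (n_test, 1, features), then sum over features
distance = tf.reduce_sum(tf.abs(tf.subtract(x_data_train, tf.expand_dims(x_data_test, 1))), axis=2)[/mw_shl_code]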
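The shape handling in this step can be easier to follow on a tiny example. The following NumPy sketch mirrors the same broadcasting pattern; the arrays `x_train` and `x_test` are hypothetical toy data, not the housing set.

```python
import numpy as np

# Hypothetical toy data: 3 training points and 2 test points with 2 features each.
x_train = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]])
x_test = np.array([[0.0, 1.0], [2.0, 2.0]])

# x_test[:, np.newaxis, :] has shape (2, 1, 2); subtracting x_train (3, 2)
# broadcasts to (2, 3, 2): one difference vector per (test, train) pair.
diff = x_test[:, np.newaxis, :] - x_train
l1_distance = np.abs(diff).sum(axis=2)  # shape (2, 3)
print(l1_distance)
```

Row r of the result holds the L1 distances from test point r to every training point, which is the layout the later top-k step expects.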

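8. Now we create the prediction function. To do this, we use the top_k() function, which returns the values and indices of the largest values in a tensor. Since we want the indices of the smallest distances, we instead find the k largest negative distances. We also declare the predictions and the mean squared error (MSE) of the target values:

[mw_shl_code=python,true]# top_k returns the largest values, so negate the distances to get the k smallest
top_k_xvals, top_k_indices = tf.nn.top_k(tf.negative(distance), k=k)
# Normalize the k (negated) distances so that each row of weights sums to 1
x_sums = tf.expand_dims(tf.reduce_sum(top_k_xvals, 1), 1)
x_sums_repeated = tf.matmul(x_sums, tf.ones([1, k], tf.float32))
x_val_weights = tf.expand_dims(tf.divide(top_k_xvals, x_sums_repeated), 1)
# Weighted average of the k nearest target values
top_k_yvals = tf.gather(y_target_train, top_k_indices)
prediction = tf.squeeze(tf.matmul(x_val_weights, top_k_yvals), axis=[1])
mse = tf.divide(tf.reduce_sum(tf.square(tf.subtract(prediction, y_target_test))), batch_size)[/mw_shl_code]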
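To make the arithmetic of this step concrete, here is a NumPy sketch of the same computation. The function name `knn_predict` and the toy inputs are assumptions for illustration. Note that, as in the code above, each weight is the neighbor's distance divided by the sum of the k distances.

```python
import numpy as np

def knn_predict(distance, y_train, k):
    """Distance-proportional weighted average of the k nearest targets.

    distance: (n_test, n_train) array, y_train: (n_train, 1) array.
    Assumes the k nearest distances in each row are not all zero.
    """
    # Indices of the k smallest distances per test point
    # (NumPy analogue of top_k applied to the negated distances).
    top_k_indices = np.argsort(distance, axis=1)[:, :k]
    top_k_dist = np.take_along_axis(distance, top_k_indices, axis=1)
    # Normalize the k distances so that each row of weights sums to 1.
    weights = top_k_dist / top_k_dist.sum(axis=1, keepdims=True)
    top_k_y = y_train[top_k_indices, 0]  # shape (n_test, k)
    return (weights * top_k_y).sum(axis=1, keepdims=True)

# Hypothetical example: one test point, three training points.
distance = np.array([[1.0, 3.0, 2.0]])
y_train = np.array([[10.0], [20.0], [40.0]])
pred = knn_predict(distance, y_train, k=2)
print(pred)
```

Here the two nearest neighbors have distances 1 and 2 and targets 10 and 40, so the weights are 1/3 and 2/3 and the prediction is 30.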

9. Test:

[mw_shl_code=python,true]num_loops = int(np.ceil(len(x_vals_test)/batch_size))
for i in range(num_loops):
    # Slice out the current batch of test points
    min_index = i*batch_size
    max_index = min((i+1)*batch_size, len(x_vals_test))
    x_batch = x_vals_test[min_index:max_index]
    y_batch = y_vals_test[min_index:max_index]
    predictions = sess.run(prediction, feed_dict={x_data_train: x_vals_train, x_data_test: x_batch, y_target_train: y_vals_train, y_target_test: y_batch})
    batch_mse = sess.run(mse, feed_dict={x_data_train: x_vals_train, x_data_test: x_batch, y_target_train: y_vals_train, y_target_test: y_batch})
    print('Batch #' + str(i+1) + ' MSE: ' + str(np.round(batch_mse,3)))

# Output:
# Batch #1 MSE: 23.153[/mw_shl_code]

10. Additionally, we can look at a histogram of the actual target values compared with the predicted values. One reason to do so is to see that an averaging method has trouble predicting the extreme ends of the targets:

[mw_shl_code=python,true]bins = np.linspace(5, 50, 45)
plt.hist(predictions, bins, alpha=0.5, label='Prediction')
plt.hist(y_batch, bins, alpha=0.5, label='Actual')
plt.title('Histogram of Predicted and Actual Values')
plt.xlabel('Med Home Value in $1,000s')
plt.ylabel('Frequency')
plt.legend(loc='upper right')
plt.show()[/mw_shl_code]

Figure 1: A histogram of the predicted values and actual target values for k-NN (k = 4).

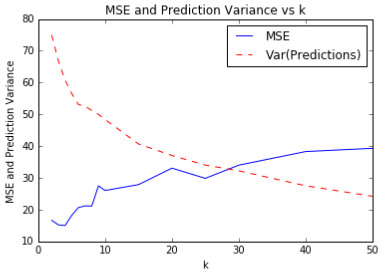

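One hard thing to determine is the best value of k. For the preceding figure and predictions, we used k = 4. We chose this value because it gives the lowest MSE, which is verified by cross-validation: if we cross-validate over multiple values of k, we see that k = 4 gives the minimum MSE. We show this in the following figure. It is also worthwhile to plot the variance of the predicted values to show that it decreases as we average over more neighbors:

Figure 2: The MSE of k-NN predictions for various values of k. We also plot the variance of the predicted values on the test set. Note that the variance decreases as k increases.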

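How it works…

With the nearest neighbors algorithm, the model is the training set, so we do not have to train any variables. The only parameter, k, was determined via cross-validation to minimize the MSE.

There's more…

For the weighting of the k-NN, we chose to weight directly by the distance. There are other options we could consider as well. Another common method is to weight by the inverse squared distance.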

Original text:

Nearest Neighbor Methods

This chapter will focus on nearest neighbor methods and how to implement them in TensorFlow. We will start with an introduction to the method and show how to implement various forms, and the chapter will end with examples of address matching and image recognition. This is what we will cover:

- Working with Nearest Neighbors

- Working with Text-Based Distances

- Computing Mixed Distance Functions

- Using an Address Matching Example

- Using Nearest Neighbors for Image Recognition

Note that all the code is available online at https://github.com/nfmcclure/tensorflow_cookbook.

Introduction

Nearest neighbor methods are based on a simple idea. We consider our training set as the model and make predictions on new points based on how close they are to points in the training set. The most naïve way is to make the prediction as the closest training data point class. But since most datasets contain a degree of noise, a more common method would be to take a weighted average of a set of k nearest neighbors. This method is called k-nearest neighbors (k-NN).

Given a training dataset with corresponding targets, we can make a prediction on a point, z, by looking at a set of nearest neighbors. The actual method of prediction depends on whether we are doing regression (continuous targets) or classification (discrete targets).

For discrete classification targets, the prediction may be given by a maximum voting scheme weighted by the distance to the prediction point:

Here, our prediction, f(z), is the class j with the largest total weighted vote. Each neighboring training point, i, contributes a weight that depends on its distance to the prediction point, and an indicator function makes point i count toward class j only if point i is in class j.
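A weighted vote of this kind can be sketched in a few lines of NumPy. The function name `weighted_vote`, the choice of inverse-distance weights, and the sample neighbors are assumptions made for illustration.

```python
import numpy as np

def weighted_vote(distances, labels, num_classes, eps=1e-8):
    """Return the class with the largest sum of inverse-distance weights."""
    weights = 1.0 / (np.asarray(distances, dtype=float) + eps)
    votes = np.zeros(num_classes)
    for w, label in zip(weights, labels):
        votes[label] += w  # the indicator: a neighbor votes only for its own class
    return int(np.argmax(votes))

# Hypothetical k = 3 neighbors: one close neighbor of class 0, two farther ones of class 1.
predicted = weighted_vote([0.5, 2.0, 4.0], [0, 1, 1], num_classes=2)
print(predicted)
```

In this example the single close neighbor outweighs the two distant ones, so the weighted vote differs from a plain majority vote.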

For continuous regression targets, the prediction is given by a weighted average of all k points nearest to the prediction:
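One common way to write such a weighted average, as a sketch under assumed notation: $y_i$ is the target of the i-th nearest neighbor, $d_i$ its distance to z, and $\varphi$ the weighting function. The weights are normalized to sum to 1, in line with the normalization used in the recipe below.

```latex
f(z) = \sum_{i=1}^{k} w_i\, y_i,
\qquad
w_i = \frac{\varphi(d_i)}{\sum_{l=1}^{k} \varphi(d_l)}
```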

It is obvious that the prediction is heavily dependent on the choice of the distance metric, d.

Common specifications of the distance metric are L1 and L2 distances:
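As a quick illustration, both distances can be computed directly with NumPy. The function names `l1_distance` and `l2_distance` are chosen here for the example.

```python
import numpy as np

def l1_distance(a, b):
    """Sum of absolute coordinate differences (Manhattan distance)."""
    return float(np.sum(np.abs(np.asarray(a) - np.asarray(b))))

def l2_distance(a, b):
    """Square root of the sum of squared coordinate differences (Euclidean distance)."""
    return float(np.sqrt(np.sum((np.asarray(a) - np.asarray(b)) ** 2)))

print(l1_distance([0, 0], [3, 4]))  # 7.0
print(l2_distance([0, 0], [3, 4]))  # 5.0
```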

There are many different specifications of distance metrics that we can choose. In this chapter, we will explore the L1 and L2 metrics as well as edit and textual distances.

We also have to choose how to weight the distances. A straightforward way to weight the distances is by the distance itself. Points that are further away from our prediction should have less impact than nearer points. The most common way to weight is by the normalized inverse of the distance. We will implement this method in the next recipe.

Working with Nearest Neighbors

We start this chapter by implementing nearest neighbors to predict housing values. This is a great way to start with nearest neighbors because we will be dealing with numerical features and continuous targets.

Getting ready

To illustrate how making predictions with nearest neighbors works in TensorFlow, we will use the Boston housing dataset. Here we will be predicting the median neighborhood housing value as a function of several features.

Since we consider the training set the trained model, we will find the k-NNs to the prediction points and do a weighted average of the target value.

How to do it…

1.First, we will start by loading the required libraries and starting a graph session. We will use the requests module to load the necessary Boston housing data from the UCI machine learning repository:

[mw_shl_code=python,true]import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
import requests

# Start a graph session (TensorFlow 1.x API)
sess = tf.Session()[/mw_shl_code]

2.Next, we will load the data using the requests module:

[mw_shl_code=python,true]housing_url = 'https://archive.ics.uci.edu/ml/machine-learning-databases/housing/housing.data'
housing_header = ['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS', 'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT', 'MEDV']
cols_used = ['CRIM', 'INDUS', 'NOX', 'RM', 'AGE', 'DIS', 'TAX', 'PTRATIO', 'B', 'LSTAT']
num_features = len(cols_used)

# Request data
housing_file = requests.get(housing_url)

# Parse data: one row per non-empty line, one float per non-empty field
housing_data = [[float(x) for x in y.split(' ') if len(x)>=1] for y in housing_file.text.split('\n') if len(y)>=1][/mw_shl_code]

3.Next, we separate the data into our dependent and independent features. We will be predicting the last variable, MEDV, which is the median value for the group of houses. We will also not use the features ZN, CHAS, and RAD because of their uninformative or binary nature:

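[mw_shl_code=python,true]# Target: MEDV (column 13), as a column vector
y_vals = np.transpose([np.array([y[13] for y in housing_data])])
# Features: keep only the columns listed in cols_used
x_vals = np.array([[x for i,x in enumerate(y) if housing_header[i] in cols_used] for y in housing_data])
# Min-max scale each feature to the range [0, 1]
x_vals = (x_vals - x_vals.min(0)) / x_vals.ptp(0)[/mw_shl_code]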

4.Now we split the x and y values into the train and test sets. We will create the training set by selecting about 80% of the rows at random, and leave the remaining 20% for the test set:

[mw_shl_code=python,true]train_indices = np.random.choice(len(x_vals), round(len(x_vals)*0.8), replace=False)
test_indices = np.array(list(set(range(len(x_vals))) - set(train_indices)))
x_vals_train = x_vals[train_indices]
x_vals_test = x_vals[test_indices]
y_vals_train = y_vals[train_indices]
y_vals_test = y_vals[test_indices][/mw_shl_code]

5.Next, we declare our k value and batch size:

[mw_shl_code=python,true]k = 4
batch_size = len(x_vals_test)[/mw_shl_code]

6.We will declare our placeholders next. Remember that there are no model variables to train, as the model is determined exactly by our training set:

[mw_shl_code=python,true]x_data_train = tf.placeholder(shape=[None, num_features], dtype=tf.float32)
x_data_test = tf.placeholder(shape=[None, num_features], dtype=tf.float32)
y_target_train = tf.placeholder(shape=[None, 1], dtype=tf.float32)
y_target_test = tf.placeholder(shape=[None, 1], dtype=tf.float32)[/mw_shl_code]

7.Next, we create our distance function for a batch of test points. Here, we illustrate the use of the L1 distance:

[mw_shl_code=python,true]# Broadcast (n_train, features) against (n_test, 1, features), then sum over features
distance = tf.reduce_sum(tf.abs(tf.subtract(x_data_train, tf.expand_dims(x_data_test, 1))), axis=2)[/mw_shl_code]

8.Now we create our prediction function. To do this, we will use the top_k() function, which returns the values and indices of the largest values in a tensor. Since we want the indices of the smallest distances, we will instead find the k-biggest negative distances. We also declare the predictions and the mean squared error (MSE) of the target values:

[mw_shl_code=python,true]# top_k returns the largest values, so negate the distances to get the k smallest
top_k_xvals, top_k_indices = tf.nn.top_k(tf.negative(distance), k=k)
# Normalize the k (negated) distances so that each row of weights sums to 1
x_sums = tf.expand_dims(tf.reduce_sum(top_k_xvals, 1), 1)
x_sums_repeated = tf.matmul(x_sums, tf.ones([1, k], tf.float32))
x_val_weights = tf.expand_dims(tf.divide(top_k_xvals, x_sums_repeated), 1)
# Weighted average of the k nearest target values
top_k_yvals = tf.gather(y_target_train, top_k_indices)
prediction = tf.squeeze(tf.matmul(x_val_weights, top_k_yvals), axis=[1])
mse = tf.divide(tf.reduce_sum(tf.square(tf.subtract(prediction, y_target_test))), batch_size)[/mw_shl_code]

9.Test:

[mw_shl_code=python,true]num_loops = int(np.ceil(len(x_vals_test)/batch_size))
for i in range(num_loops):
    # Slice out the current batch of test points
    min_index = i*batch_size
    max_index = min((i+1)*batch_size, len(x_vals_test))
    x_batch = x_vals_test[min_index:max_index]
    y_batch = y_vals_test[min_index:max_index]
    predictions = sess.run(prediction, feed_dict={x_data_train: x_vals_train, x_data_test: x_batch, y_target_train: y_vals_train, y_target_test: y_batch})
    batch_mse = sess.run(mse, feed_dict={x_data_train: x_vals_train, x_data_test: x_batch, y_target_train: y_vals_train, y_target_test: y_batch})
    print('Batch #' + str(i+1) + ' MSE: ' + str(np.round(batch_mse,3)))

# Output:
# Batch #1 MSE: 23.153[/mw_shl_code]

10.Additionally, we can also look at a histogram of the actual target values compared with the predicted values. One reason to look at this is to notice the fact that with an averaging method, we have trouble predicting the extreme ends of the targets:

[mw_shl_code=python,true]bins = np.linspace(5, 50, 45)
plt.hist(predictions, bins, alpha=0.5, label='Prediction')
plt.hist(y_batch, bins, alpha=0.5, label='Actual')
plt.title('Histogram of Predicted and Actual Values')
plt.xlabel('Med Home Value in $1,000s')
plt.ylabel('Frequency')
plt.legend(loc='upper right')
plt.show()[/mw_shl_code]

Figure 1: A histogram of the predicted values and actual target values for k-NN (k=4).

11.One hard thing to determine is the best value of k. For the preceding figure and predictions, we used k=4 for our model. We chose this specifically because it gives us the lowest MSE. This is verified by cross validation. If we use cross validation across multiple values of k, we will see that k=4 gives us a minimum MSE. We show this in the following figure. It is also worthwhile to plot the variance in the predicted values to show that it decreases the more neighbors we average over:

Figure 2: The MSE for k-NN predictions for various values of k. We also plot the variance of the predicted values on the test set. Note that the variance decreases as k increases.
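The text does not show the cross-validation code, so the following is only a sketch of how such a search over k might look. It uses synthetic stand-in data rather than the housing data, an unweighted k-NN average for brevity, and hypothetical helper names `knn_mse` and `cross_validate_k`.

```python
import numpy as np

def knn_mse(x_train, y_train, x_val, y_val, k):
    """MSE of an unweighted k-NN average under the L1 distance."""
    dist = np.abs(x_val[:, np.newaxis, :] - x_train).sum(axis=2)
    nearest = np.argsort(dist, axis=1)[:, :k]
    pred = y_train[nearest].mean(axis=1)
    return float(np.mean((pred - y_val) ** 2))

def cross_validate_k(x, y, k_values, n_folds=5, seed=0):
    """Return {k: mean validation MSE over n_folds folds}."""
    rng = np.random.RandomState(seed)
    folds = np.array_split(rng.permutation(len(x)), n_folds)
    scores = {}
    for k in k_values:
        fold_mse = []
        for i in range(n_folds):
            val = folds[i]
            train = np.concatenate([folds[j] for j in range(n_folds) if j != i])
            fold_mse.append(knn_mse(x[train], y[train], x[val], y[val], k))
        scores[k] = float(np.mean(fold_mse))
    return scores

# Synthetic stand-in data (not the housing data): y is a noisy function of x.
rng = np.random.RandomState(1)
x = rng.rand(100, 2)
y = x.sum(axis=1) + 0.1 * rng.randn(100)
scores = cross_validate_k(x, y, k_values=[1, 2, 4, 8])
best_k = min(scores, key=scores.get)
print(scores, best_k)
```

The value of k with the lowest mean validation MSE is then used for the final model; which k wins depends on the data.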

How it works…

With the nearest neighbors algorithm, the model is the training set. Because of this, we do not have to train any variables in our model. The only parameter, k, was determined via cross-validation to minimize our MSE.

There's more…

For the weighting of the k-NN, we chose to weight directly by the distance. There are other options that we could consider as well. Another common method is to weight by the inverse squared distance.
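A minimal sketch of inverse squared distance weighting, assuming a hypothetical helper `inverse_squared_weights` and a small `eps` term to avoid division by zero:

```python
import numpy as np

def inverse_squared_weights(distances, eps=1e-8):
    """Weights proportional to 1/d^2, normalized to sum to 1."""
    inv_sq = 1.0 / (np.asarray(distances, dtype=float) ** 2 + eps)
    return inv_sq / inv_sq.sum()

# Hypothetical distances to k = 3 neighbors.
sq_weights = inverse_squared_weights([1.0, 2.0, 4.0])
print(sq_weights)
```

Compared with plain inverse-distance weights, squaring the distance concentrates more of the total weight on the closest neighbor.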
