This post was last edited by levycui on 2018-10-9 16:41

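Guiding questions:

1. How do we use TensorFlow's text distance metric between strings?

2. How do we calculate the edit distance between the two words 'bear' and 'beers'?

3. How do we scale the distance function for different variables?

4. How do we use the top_k() function to find the k nearest neighbors?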


Previous: TensorFlow ML Cookbook, Chapter 5, Section 1: Nearest Neighbor Methods

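Working with Text-Based Distances

Nearest neighbor methods are not limited to numeric data. As long as we have a way to measure the distance between features, we can apply the nearest neighbor algorithm. In this recipe, we introduce how to measure text distances with TensorFlow.

Getting ready

In this recipe, we illustrate how to use TensorFlow's text distance metric, the Levenshtein distance (edit distance), between strings. This becomes important later in the chapter, when we extend the nearest neighbor methods to features that contain text.

The Levenshtein distance is the minimum number of edits needed to get from one string to another. The allowed edits are inserting a character, deleting a character, or substituting one character for a different one. For this recipe, we use TensorFlow's Levenshtein distance function, edit_distance(). Its usage is worth illustrating because it also applies to later chapters.

Note that TensorFlow's edit_distance() function only accepts sparse tensors, so we have to create our strings as sparse tensors of individual characters.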
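As a reference point for the definition above, the edit distance can also be computed in plain Python with the classic dynamic-programming recurrence. This is a minimal sketch for checking small cases by hand; the function name `levenshtein` is an illustrative choice and not part of TensorFlow.

```python
def levenshtein(hypothesis, truth):
    """Minimum number of insertions, deletions and substitutions
    needed to turn `hypothesis` into `truth`."""
    # previous[j] = distance between the processed prefix of hypothesis and truth[:j]
    previous = list(range(len(truth) + 1))
    for i, h_char in enumerate(hypothesis, start=1):
        current = [i]
        for j, t_char in enumerate(truth, start=1):
            substitution = previous[j - 1] + (h_char != t_char)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1]

distance = levenshtein('bear', 'beers')
# Normalizing divides by the length of the second (truth) word
normalized = distance / len('beers')
print(distance, normalized)  # prints: 2 0.4
```

The two edits are one substitution ('a' to 'e') and one insertion ('s').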

How to do it…

1. First, we load TensorFlow and initialize a graph session:

[mw_shl_code=python,true]import tensorflow as tf

sess = tf.Session() [/mw_shl_code]

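2. Next, we show how to calculate the edit distance between two words, 'bear' and 'beers'. First, we create a list of characters from each string with Python's list() function. Next, we create a sparse 3D matrix from that list. We have to tell TensorFlow the character indices, the shape of the matrix, and which characters we want in the tensor. After this, we can choose between the total edit distance (normalize=False) and the normalized edit distance (normalize=True), where the edit distance is divided by the length of the second word:

TensorFlow's documentation treats the two strings as a proposed (hypothesis) string and a ground truth string. We continue this notation here with the h and t tensors.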

[mw_shl_code=python,true]hypothesis = list('bear')
truth = list('beers')
# Each index is [batch, word, character position]
h1 = tf.SparseTensor([[0,0,0], [0,0,1], [0,0,2], [0,0,3]],
                     hypothesis, [1,1,1])
t1 = tf.SparseTensor([[0,0,0], [0,0,1], [0,0,2], [0,0,3],[0,0,4]], truth, [1,1,1])
print(sess.run(tf.edit_distance(h1, t1, normalize=False))) [/mw_shl_code]

3. This results in the following output:

[mw_shl_code=python,true][[ 2.]][/mw_shl_code]

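The function SparseTensorValue() is one way to create a sparse tensor in TensorFlow. It accepts the indices, values, and shape of the sparse tensor we wish to create.

4. Next, we illustrate how to compare two words, 'bear' and 'beer', each against another word, 'beers'. To do this, we must replicate 'beers' so that both sides have the same number of words to compare: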

[mw_shl_code=python,true]hypothesis2 = list('bearbeer')

truth2 = list('beersbeers')

h2 = tf.SparseTensor([[0,0,0], [0,0,1], [0,0,2], [0,0,3], [0,1,0], [0,1,1], [0,1,2], [0,1,3]], hypothesis2, [1,2,4])

t2 = tf.SparseTensor([[0,0,0], [0,0,1], [0,0,2], [0,0,3], [0,0,4], [0,1,0], [0,1,1], [0,1,2], [0,1,3], [0,1,4]], truth2, [1,2,5])

print(sess.run(tf.edit_distance(h2, t2, normalize=True))) [/mw_shl_code]

5. This results in the following output:

[mw_shl_code=python,true][[ 0.40000001 0.2 ]] [/mw_shl_code]

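6. A more efficient way to compare a set of words against another word is shown in this example. We create the indices and character lists beforehand for both the hypothesis and the ground truth strings: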

[mw_shl_code=python,true]hypothesis_words = ['bear','bar','tensor','flow']
truth_word = ['beers']
num_h_words = len(hypothesis_words)
# One index [word, 0, character position] per character
h_indices = [[xi, 0, yi] for xi,x in enumerate(hypothesis_words) for yi,y in enumerate(x)]
h_chars = list(''.join(hypothesis_words))
h3 = tf.SparseTensor(h_indices, h_chars, [num_h_words,1,1])
# Repeat the truth word so that every hypothesis word has a counterpart
truth_word_vec = truth_word*num_h_words
t_indices = [[xi, 0, yi] for xi,x in enumerate(truth_word_vec) for yi,y in enumerate(x)]
t_chars = list(''.join(truth_word_vec))
t3 = tf.SparseTensor(t_indices, t_chars, [num_h_words,1,1])
print(sess.run(tf.edit_distance(h3, t3, normalize=True))) [/mw_shl_code]

7. This results in the following output:

[mw_shl_code=python,true][[ 0.40000001]

[ 0.60000002]

[ 0.80000001]

[ 1. ]][/mw_shl_code]

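8. Now we illustrate how to calculate the edit distance between two word lists using placeholders. The concept is the same, except that we feed in SparseTensorValue() objects instead of sparse tensors. First, we create a function that builds the sparse tensor from a word list: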

[mw_shl_code=python,true]def create_sparse_vec(word_list):
    num_words = len(word_list)
    # One index [word, 0, character position] per character
    indices = [[xi, 0, yi] for xi,x in enumerate(word_list) for yi,y in enumerate(x)]
    chars = list(''.join(word_list))
    return(tf.SparseTensorValue(indices, chars, [num_words,1,1]))

hyp_string_sparse = create_sparse_vec(hypothesis_words)
truth_string_sparse = create_sparse_vec(truth_word*len(hypothesis_words))
# Sparse placeholders are fed SparseTensorValue objects at run time
hyp_input = tf.sparse_placeholder(dtype=tf.string)
truth_input = tf.sparse_placeholder(dtype=tf.string)
edit_distances = tf.edit_distance(hyp_input, truth_input, normalize=True)
feed_dict = {hyp_input: hyp_string_sparse,
             truth_input: truth_string_sparse}
print(sess.run(edit_distances, feed_dict=feed_dict)) [/mw_shl_code]

9. This results in the following output:

[mw_shl_code=python,true][[ 0.40000001]

[ 0.60000002]

[ 0.80000001]

[ 1. ]] [/mw_shl_code]
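The index layout used in steps 6 and 8 is easier to inspect without TensorFlow. The sketch below builds the same indices and character values in plain Python; `sparse_components` is a hypothetical helper, and the dense shape it returns uses the longest word length as the last dimension, which is an assumption of this sketch and differs from the `[num_words,1,1]` shape used in the recipe.

```python
def sparse_components(word_list):
    """Return (indices, values, dense_shape) describing the words as a
    sparse tensor of single characters."""
    # One [word index, 0, character position] triple per character
    indices = [[wi, 0, ci] for wi, word in enumerate(word_list)
               for ci, _ in enumerate(word)]
    values = list(''.join(word_list))
    max_len = max((len(word) for word in word_list), default=0)
    dense_shape = [len(word_list), 1, max_len]
    return indices, values, dense_shape

indices, values, dense_shape = sparse_components(['bear', 'bar'])
for index, char in zip(indices, values):
    print(index, char)
print(dense_shape)
```

Printing the pairs shows that the first index selects the word and the last index selects the character position within that word.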

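How it works…

In this recipe, we have shown several ways to measure text distances with TensorFlow. This is very useful for running nearest neighbors on data that has text features. We will see more of this later in the chapter, when we perform address matching.

There's more…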

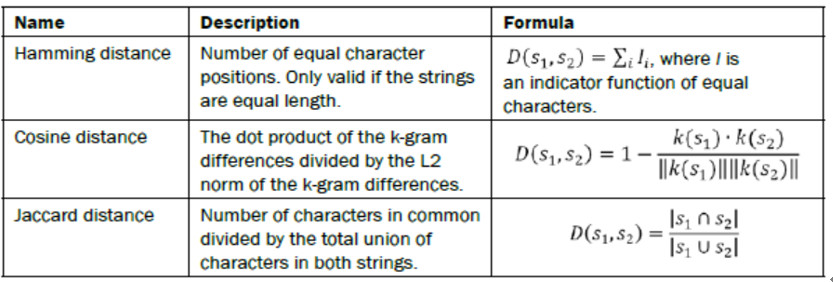

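There are other text distance metrics that we should discuss. Here is a table of definitions describing various other text distances between two strings, s1 and s2: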

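Computing with Mixed Distance Functions

When dealing with data observations that have multiple features, we should be aware that different features may be measured on different scales. In this recipe, we account for that to improve our housing value predictions.

Getting ready

It is important to extend the nearest neighbor algorithm to account for variables that are scaled differently. In this example, we show how to scale the distance function for different variables. Specifically, we scale the distance function as a function of the feature variance.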

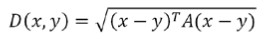

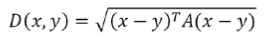

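The key to weighting the distance function is to use a weight matrix. Written with matrix operations, the distance function becomes the following formula:

Here, A is a diagonal weight matrix that we use to scale the distance metric for each feature.

For this recipe, we try to improve our MSE on the Boston housing value dataset. This dataset is a good example of features on different scales, and the nearest neighbor algorithm benefits from a scaled distance function.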
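The recipe's code computes the weighted distance as sqrt((x - y) A (x - y)^T). For a diagonal A this reduces to a weighted sum of squared differences, sqrt(sum_i a_i (x_i - y_i)^2). A minimal plain-Python sketch, assuming the diagonal of A is passed as a list of weights (the name `weighted_distance` and the sample numbers are illustrative):

```python
import math

def weighted_distance(x, y, weights):
    """sqrt(sum_i weights[i] * (x[i] - y[i])**2), i.e. the distance
    sqrt((x - y)^T A (x - y)) with A = diag(weights)."""
    return math.sqrt(sum(w * (a - b) ** 2 for a, b, w in zip(x, y, weights)))

x, y = [1.0, 2.0], [4.0, 6.0]
unit = weighted_distance(x, y, [1.0, 1.0])    # unit weights: ordinary Euclidean distance
skewed = weighted_distance(x, y, [4.0, 0.0])  # first feature up-weighted, second ignored
print(unit, skewed)  # prints: 5.0 6.0
```

A larger weight makes differences in that feature count for more when ranking neighbors, and a weight of zero removes the feature from the distance altogether.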

How to do it…

1. First, we load the necessary libraries and start a graph session:

[mw_shl_code=python,true]import matplotlib.pyplot as plt

import numpy as np

import tensorflow as tf

import requests

sess = tf.Session() [/mw_shl_code]

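2. Next, we load the data and store it in a numpy array. Again, note that we only use certain columns for prediction. We do not use ID variables or variables with very low variance: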

[mw_shl_code=python,true]housing_url = 'https://archive.ics.uci.edu/ml/machine-learning-databases/housing/housing.data'
housing_header = ['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS', 'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT', 'MEDV']
cols_used = ['CRIM', 'INDUS', 'NOX', 'RM', 'AGE', 'DIS', 'TAX', 'PTRATIO', 'B', 'LSTAT']
num_features = len(cols_used)
housing_file = requests.get(housing_url)
# Split each row on spaces and drop the empty strings left by repeated spaces
housing_data = [[float(x) for x in y.split(' ') if len(x)>=1] for y in housing_file.text.split('\n') if len(y)>=1]
# MEDV (column 13) is the prediction target
y_vals = np.transpose([np.array([y[13] for y in housing_data])])
# Keep only the columns whose header name is listed in cols_used
x_vals = np.array([[x for i,x in enumerate(y) if housing_header[i] in cols_used] for y in housing_data]) [/mw_shl_code]

3. Now we scale the x values to lie between 0 and 1 with min-max scaling:

[mw_shl_code=python,true]x_vals = (x_vals - x_vals.min(0)) / x_vals.ptp(0) [/mw_shl_code]

4. We now create the diagonal weight matrix, which scales the distance metric by the standard deviation of each feature:

[mw_shl_code=python,true]weight_diagonal = x_vals.std(0)

weight_matrix = tf.cast(tf.diag(weight_diagonal), dtype=tf.float32) [/mw_shl_code]

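5. Now we split the data into a training set and a test set. We also declare k, the number of nearest neighbors, and set the batch size equal to the test set size: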

[mw_shl_code=python,true]train_indices = np.random.choice(len(x_vals), round(len(x_vals)*0.8), replace=False)

test_indices = np.array(list(set(range(len(x_vals))) - set(train_indices)))

x_vals_train = x_vals[train_indices]

x_vals_test = x_vals[test_indices]

y_vals_train = y_vals[train_indices]

y_vals_test = y_vals[test_indices]

k = 4

batch_size=len(x_vals_test) [/mw_shl_code]

6. Next, we declare the placeholders that we need. We have four placeholders: the x-inputs and y-targets for both the training and test sets:

[mw_shl_code=python,true]x_data_train = tf.placeholder(shape=[None, num_features], dtype=tf.float32)

x_data_test = tf.placeholder(shape=[None, num_features], dtype=tf.float32)

y_target_train = tf.placeholder(shape=[None, 1], dtype=tf.float32)

y_target_test = tf.placeholder(shape=[None, 1], dtype=tf.float32)[/mw_shl_code]

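7. Now we can declare our distance function. For readability, we break the distance function up into its components. Note that we have to tile the weight matrix by the batch size and use the batch_matmul() function to perform batch matrix multiplication across the batch: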

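[mw_shl_code=python,true]# These are pre-1.0 op names; TensorFlow 1.0 renamed them to tf.subtract, tf.matmul and tf.matrix_diag_part
# Pairwise differences between every test point and every training point
subtraction_term = tf.sub(x_data_train, tf.expand_dims(x_data_test,1))
first_product = tf.batch_matmul(subtraction_term, tf.tile(tf.expand_dims(weight_matrix,0), [batch_size,1,1]))
second_product = tf.batch_matmul(first_product, tf.transpose(subtraction_term, perm=[0,2,1]))
# The diagonal holds the squared weighted distance for each test/training pair
distance = tf.sqrt(tf.batch_matrix_diag_part(second_product)) [/mw_shl_code]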

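8. After we calculate all the training distances for each test point, we need to return the top k nearest neighbors. We do this with the top_k() function. Since this function returns the largest values and we want the smallest distances, we take the largest of the negated distance values. We then make each prediction as an average of the top k neighbors' target values, weighted by distance: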

[mw_shl_code=python,true]# top_k returns the largest values, so negate the distances to get the k smallest
top_k_xvals, top_k_indices = tf.nn.top_k(tf.neg(distance), k=k)
x_sums = tf.expand_dims(tf.reduce_sum(top_k_xvals, 1),1)
x_sums_repeated = tf.matmul(x_sums,tf.ones([1, k], tf.float32))
# Each neighbor's weight is its share of the summed distances
x_val_weights = tf.expand_dims(tf.div(top_k_xvals,x_sums_repeated), 1)
top_k_yvals = tf.gather(y_target_train, top_k_indices)
prediction = tf.squeeze(tf.batch_matmul(x_val_weights,top_k_yvals), squeeze_dims=[1])[/mw_shl_code]

9. To evaluate our model, we calculate the MSE of our predictions:

[mw_shl_code=python,true]mse = tf.div(tf.reduce_sum(tf.square(tf.sub(prediction, y_target_test))), batch_size) [/mw_shl_code]

10. Now we can loop through our test batches and calculate the MSE for each:

[mw_shl_code=python,true]num_loops = int(np.ceil(len(x_vals_test)/batch_size))
for i in range(num_loops):
    min_index = i*batch_size
    # Clamp to the test set length, since the batches slice the test data
    max_index = min((i+1)*batch_size,len(x_vals_test))
    x_batch = x_vals_test[min_index:max_index]
    y_batch = y_vals_test[min_index:max_index]
    predictions = sess.run(prediction, feed_dict={x_data_train: x_vals_train, x_data_test: x_batch, y_target_train: y_vals_train, y_target_test: y_batch})
    batch_mse = sess.run(mse, feed_dict={x_data_train: x_vals_train, x_data_test: x_batch, y_target_train: y_vals_train, y_target_test: y_batch})
    print('Batch #' + str(i+1) + ' MSE: ' + str(np.round(batch_mse,3))) [/mw_shl_code]

11. This results in the following output:

[mw_shl_code=python,true]Batch #1 MSE: 21.322 [/mw_shl_code]

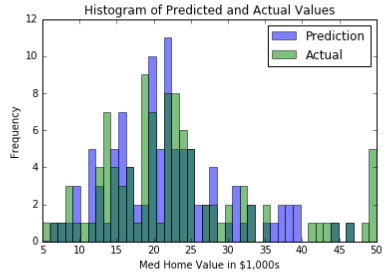

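12. As a final comparison, we can plot the distribution of housing values for the actual test set and for the predictions on the test set with the following code: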

[mw_shl_code=python,true]bins = np.linspace(5, 50, 45)
# Overlay the two distributions with partial transparency
plt.hist(predictions, bins, alpha=0.5, label='Prediction')
plt.hist(y_batch, bins, alpha=0.5, label='Actual')
plt.title('Histogram of Predicted and Actual Values')
plt.xlabel('Med Home Value in $1,000s')
plt.ylabel('Frequency')
plt.legend(loc='upper right')
plt.show()[/mw_shl_code]

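Figure 3: Two histograms of the predicted and actual housing values on the Boston dataset. This time we have scaled the distance function differently for each feature.

How it works…

We decreased our MSE on the test set by introducing a method of scaling the distance function for each feature. Here, we scaled the distance function by a factor of each feature's standard deviation. This gives a more accurate measure of which points are the closest neighbors. From this, we also took the weighted average of the top k neighbors, as a function of distance, to get the housing value prediction.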
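The prediction step can be sketched in plain Python for a single test point. This mirrors the recipe's weighting, in which each of the k nearest neighbors gets the weight d_i / sum(d), its distance divided by the sum of the k distances. The function name and the toy numbers are illustrative assumptions, and the sketch assumes the k distances do not sum to zero.

```python
def knn_weighted_prediction(distances, targets, k):
    """Predict a value from the k training points with the smallest distances,
    weighting each neighbor by its share of the summed distances."""
    # Indices of the k smallest distances (the recipe gets these via top_k on negated distances)
    nearest = sorted(range(len(distances)), key=lambda i: distances[i])[:k]
    total = sum(distances[i] for i in nearest)
    weights = [distances[i] / total for i in nearest]
    return sum(w * targets[i] for w, i in zip(weights, nearest))

# The two nearest neighbors are at distances 0.1 and 0.3, with targets 20 and 30
prediction = knn_weighted_prediction([0.5, 0.1, 0.3, 0.9], [10.0, 20.0, 30.0, 40.0], k=2)
print(prediction)
```

With this weighting, the farther of the k neighbors contributes more to the average; inverse-distance weights are a common alternative when closer neighbors should dominate.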

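There's more…

This scaling factor can also be used to down-weight or up-weight features in the nearest neighbor distance calculation. This is useful in situations where we trust some features more or less than others.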

Original text:

Working with Text-Based Distances

Nearest neighbors is more versatile than just dealing with numbers. As long as we have a way to measure distances between features, we can apply the nearest neighbors algorithm. In this recipe, we will introduce how to measure text distances with TensorFlow.

Getting ready

In this recipe, we will illustrate how to use TensorFlow's text distance metric, the Levenshtein distance (the edit distance), between strings. This will be important later in this chapter as we expand the nearest neighbor methods to include features with text.

The Levenshtein distance is the minimal number of edits to get from one string to another string. The allowed edits are inserting a character, deleting a character, or substituting a character with a different one. For this recipe, we will use TensorFlow's Levenshtein distance function, edit_distance(). It is worthwhile to illustrate the use of this function because the usage of this function will be applicable to later chapters.

Note that TensorFlow's edit_distance() function only accepts sparse tensors. We will have to create our strings as sparse tensors of individual characters

How to do it…

1.First, we load TensorFlow and initialize a graph:

import tensorflow as tf

sess = tf.Session()

2.Then we will show how to calculate the edit distance between two words, 'bear' and 'beers'. First, we will create a list of characters from our strings with Python's 'list()' function. Next, we create a sparse 3D matrix from that list. We have to tell TensorFlow the character indices, the shape of the matrix, and which characters we want in the tensor. After this we can decide if we would like to go with the total edit distance (normalize=False) or the normalized edit distance (normalize=True), where we divide the edit distance by the length of the second word:

TensorFlow's documentation treats the two strings as a proposed (hypothesis) string and a ground truth string. We will continue this notation here with h and t tensors.

hypothesis = list('bear')

truth = list('beers')

h1 = tf.SparseTensor([[0,0,0], [0,0,1], [0,0,2], [0,0,3]],

hypothesis, [1,1,1])

t1 = tf.SparseTensor([[0,0,0], [0,0,1], [0,0,2], [0,0,3],[0,0,4]], truth, [1,1,1])

print(sess.run(tf.edit_distance(h1, t1, normalize=False)))

3.This results in the following output:

[[ 2.]]

The function, SparseTensorValue(), is a way to create a sparse tensor in TensorFlow. It accepts the indices, values, and shape of a sparse tensor we wish to create.

4.Next, we will illustrate how to compare two words, bear and beer, both with another word, beers. In order to achieve this, we must replicate the beers in order to have the same amount of comparable words:

hypothesis2 = list('bearbeer')

truth2 = list('beersbeers')

h2 = tf.SparseTensor([[0,0,0], [0,0,1], [0,0,2], [0,0,3], [0,1,0], [0,1,1], [0,1,2], [0,1,3]], hypothesis2, [1,2,4])

t2 = tf.SparseTensor([[0,0,0], [0,0,1], [0,0,2], [0,0,3], [0,0,4], [0,1,0], [0,1,1], [0,1,2], [0,1,3], [0,1,4]], truth2, [1,2,5])

print(sess.run(tf.edit_distance(h2, t2, normalize=True)))

5.This results in the following output:

[[ 0.40000001 0.2 ]]

6.A more efficient way to compare a set of words against another word is shown in this example. We create the indices and list of characters beforehand for both the hypothesis and ground truth string:

hypothesis_words = ['bear','bar','tensor','flow']

truth_word = ['beers']

num_h_words = len(hypothesis_words)

h_indices = [[xi, 0, yi] for xi,x in enumerate(hypothesis_words) for yi,y in enumerate(x)]

h_chars = list(''.join(hypothesis_words))

h3 = tf.SparseTensor(h_indices, h_chars, [num_h_words,1,1])

truth_word_vec = truth_word*num_h_words

t_indices = [[xi, 0, yi] for xi,x in enumerate(truth_word_vec) for yi,y in enumerate(x)]

t_chars = list(''.join(truth_word_vec))

t3 = tf.SparseTensor(t_indices, t_chars, [num_h_words,1,1])

print(sess.run(tf.edit_distance(h3, t3, normalize=True)))

7.This results in the following output:

[[ 0.40000001]

[ 0.60000002]

[ 0.80000001]

[ 1. ]]

8.Now we will illustrate how to calculate the edit distance between two word lists using placeholders. The concept is the same, except we will be feeding in SparseTensorValue() instead of sparse tensors. First, we will create a function that creates the sparse tensors from a word list:

def create_sparse_vec(word_list):
    num_words = len(word_list)
    indices = [[xi, 0, yi] for xi,x in enumerate(word_list) for yi,y in enumerate(x)]
    chars = list(''.join(word_list))
    return(tf.SparseTensorValue(indices, chars, [num_words,1,1]))

hyp_string_sparse = create_sparse_vec(hypothesis_words)

truth_string_sparse = create_sparse_vec(truth_word*len(hypothesis_words))

hyp_input = tf.sparse_placeholder(dtype=tf.string)

truth_input = tf.sparse_placeholder(dtype=tf.string)

edit_distances = tf.edit_distance(hyp_input, truth_input, normalize=True)

feed_dict = {hyp_input: hyp_string_sparse,

truth_input: truth_string_sparse}

print(sess.run(edit_distances, feed_dict=feed_dict))

9.This results in the following output:

[[ 0.40000001]

[ 0.60000002]

[ 0.80000001]

[ 1. ]]

How it works…

For this recipe, we have shown that we can measure text distances several ways using TensorFlow. This will be extremely useful for performing nearest neighbors on data that has text features. We will see more of this later in the chapter when we perform address matching.

There's more…

Other text distance metrics exist that we should discuss. Here is a definition table describing other various text distances between two strings, s1 and s2:

Computing with Mixed Distance Functions

When dealing with data observations that have multiple features, we should be aware that different features may be measured on different scales. In this recipe, we account for that to improve our housing value predictions.

Getting ready

It is important to extend the nearest neighbor algorithm to take into account variables that are scaled differently. In this example, we will show how to scale the distance function for different variables. Specifically, we will scale the distance function as a function of the feature variance.

The key to weighting the distance function is to use a weight matrix. The distance function written with matrix operations becomes the following formula: D(x, y) = sqrt((x - y)^T A (x - y))

Here, A is a diagonal weight matrix that we use to scale the distance metric for each feature.

For this recipe, we will try to improve our MSE on the Boston housing value dataset. This dataset is a great example of features that are on different scales, and the nearest neighbor algorithm would benefit from scaling the distance function.

How to do it…

1.First, we will load the necessary libraries and start a graph session:

import matplotlib.pyplot as plt

import numpy as np

import tensorflow as tf

import requests

sess = tf.Session()

2.Next, we load the data and store it in a numpy array. Again, note that we will only use certain columns for prediction. We do not use id variables nor variables that have very low variance:

housing_url = 'https://archive.ics.uci.edu/ml/machine-learning-databases/housing/housing.data'

housing_header = ['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS', 'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT', 'MEDV']

cols_used = ['CRIM', 'INDUS', 'NOX', 'RM', 'AGE', 'DIS', 'TAX', 'PTRATIO', 'B', 'LSTAT']

num_features = len(cols_used)

housing_file = requests.get(housing_url)

housing_data = [[float(x) for x in y.split(' ') if len(x)>=1] for y in housing_file.text.split('\n') if len(y)>=1]

y_vals = np.transpose([np.array([y[13] for y in housing_data])])

x_vals = np.array([[x for i,x in enumerate(y) if housing_header[i] in cols_used] for y in housing_data])

3.Now we scale the x values to be between zero and 1 with min-max scaling:

x_vals = (x_vals - x_vals.min(0)) / x_vals.ptp(0)

4.We now create the diagonal weight matrix that will provide the scaling of the distance metric by the standard deviation of the features:

weight_diagonal = x_vals.std(0)

weight_matrix = tf.cast(tf.diag(weight_diagonal), dtype=tf.float32)

5.Now we split the data into a training and test set. We also declare k, the amount of nearest neighbors, and make the batch size equal to the test set size:

train_indices = np.random.choice(len(x_vals), round(len(x_vals)*0.8), replace=False)

test_indices = np.array(list(set(range(len(x_vals))) - set(train_indices)))

x_vals_train = x_vals[train_indices]

x_vals_test = x_vals[test_indices]

y_vals_train = y_vals[train_indices]

y_vals_test = y_vals[test_indices]

k = 4

batch_size=len(x_vals_test)

6.We declare our placeholders that we need next. We have four placeholders, the x-inputs and y-targets for both the training and test set:

x_data_train = tf.placeholder(shape=[None, num_features], dtype=tf.float32)

x_data_test = tf.placeholder(shape=[None, num_features], dtype=tf.float32)

y_target_train = tf.placeholder(shape=[None, 1], dtype=tf.float32)

y_target_test = tf.placeholder(shape=[None, 1], dtype=tf.float32)

7.Now we can declare our distance function. For readability, we break up the distance function into its components. Note that we will have to tile the weight matrix by the batch size and use the batch_matmul() function to perform batch matrix multiplication across the batch size:

subtraction_term = tf.sub(x_data_train, tf.expand_dims(x_data_test,1))

first_product = tf.batch_matmul(subtraction_term, tf.tile(tf.expand_dims(weight_matrix,0), [batch_size,1,1]))

second_product = tf.batch_matmul(first_product, tf.transpose(subtraction_term, perm=[0,2,1]))

distance = tf.sqrt(tf.batch_matrix_diag_part(second_product))

8.After we calculate all the training distances for each test point, we need to return the top k-NNs. We do this with the top_k() function. Since this function returns the largest values, and we want the smallest distances, we return the largest of the negative distance values. We then want to make predictions as the weighted average of the distances of the top k neighbors:

top_k_xvals, top_k_indices = tf.nn.top_k(tf.neg(distance), k=k)

x_sums = tf.expand_dims(tf.reduce_sum(top_k_xvals, 1),1)

x_sums_repeated = tf.matmul(x_sums,tf.ones([1, k], tf.float32))

x_val_weights = tf.expand_dims(tf.div(top_k_xvals,x_sums_repeated), 1)

top_k_yvals = tf.gather(y_target_train, top_k_indices)

prediction = tf.squeeze(tf.batch_matmul(x_val_weights,top_k_yvals), squeeze_dims=[1])

9.To evaluate our model, we calculate the MSE of our predictions:

mse = tf.div(tf.reduce_sum(tf.square(tf.sub(prediction, y_target_test))), batch_size)

10.Now we can loop through our test batches and calculate the MSE for each:

num_loops = int(np.ceil(len(x_vals_test)/batch_size))

for i in range(num_loops):
    min_index = i*batch_size
    # Clamp to the test set length, since the batches slice the test data
    max_index = min((i+1)*batch_size,len(x_vals_test))
    x_batch = x_vals_test[min_index:max_index]
    y_batch = y_vals_test[min_index:max_index]
    predictions = sess.run(prediction, feed_dict={x_data_train: x_vals_train, x_data_test: x_batch, y_target_train: y_vals_train, y_target_test: y_batch})
    batch_mse = sess.run(mse, feed_dict={x_data_train: x_vals_train, x_data_test: x_batch, y_target_train: y_vals_train, y_target_test: y_batch})
    print('Batch #' + str(i+1) + ' MSE: ' + str(np.round(batch_mse,3)))

11.This results in the following output:

Batch #1 MSE: 21.322

12.As a final comparison, we can plot the distribution of housing values for the actual test set and the predictions on the test set with the following code:

bins = np.linspace(5, 50, 45)

plt.hist(predictions, bins, alpha=0.5, label='Prediction')

plt.hist(y_batch, bins, alpha=0.5, label='Actual')

plt.title('Histogram of Predicted and Actual Values')

plt.xlabel('Med Home Value in $1,000s')

plt.ylabel('Frequency')

plt.legend(loc='upper right')

plt.show()

Figure 3: The two histograms of the predicted and actual housing values on the Boston dataset. This time we have scaled the distance function differently for each feature.

How it works…

We decreased our MSE on the test set here by introducing a method of scaling the distance functions for each feature. Here, we scaled the distance functions by a factor of the feature's standard deviation. This provides a more accurate view of measuring which points are the closest neighbors or not. From this we also took the weighted average of the top k neighbors as a function of distance to get the housing value prediction.

There's more…

This scaling factor can also be used to down-weight or up-weight features in the nearest neighbor distance calculation. This can be useful in situations where we trust features more or less than others.
