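Guiding questions:

1. How do we convert address strings into sparse vectors?

2. How do we use nearest neighbors for image recognition?

3. How do we make predictions from the k nearest neighbors?

4. How do we randomly sample the test/training datasets?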

Previous: TensorFlow ML Cookbook, Chapter 5, Sections 2 and 3: Working with Text-Based Distances and Computing with Mixed Distance Functions

Using an Address Matching Example

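Now that we have measured numerical and text distances, we will learn how to combine them to measure the distance between observations that have both text and numerical features.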

Getting ready

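Nearest neighbors is a great algorithm for address matching. Address matching is a type of record matching in which addresses appear in multiple datasets and we would like to match them up. In address matching, we may have typos in the address, different cities, or different zip codes, yet all of them may refer to the same address. Applying the nearest neighbor algorithm to both the numerical and character components of an address can help us identify addresses that are actually the same.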

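In this example, we will generate two datasets. Each dataset contains a street address and a zip code, but one of them has a high number of typos in the street address. We treat the typo-free dataset as our gold standard. For each typo address, we return the gold-standard address that is closest as a function of the string distance (for the street) and the numerical distance (for the zip code).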

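The first part of the code generates the two datasets. The second part then runs through the test set and returns the closest address from the reference (training) set.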

How to do it…

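1. We start by loading the necessary libraries:

[mw_shl_code=python,true]import random
import string
import numpy as np
# The recipe uses the TensorFlow 1.x graph API (tf.Session, placeholders)
import tensorflow as tf[/mw_shl_code]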

2. We now create the reference dataset. To keep the output short, each dataset contains only 10 addresses (the code works with many more):

[mw_shl_code=python,true]n = 10
street_names = ['abbey', 'baker', 'canal', 'donner', 'elm']
street_types = ['rd', 'st', 'ln', 'pass', 'ave']
# Five random zip codes shared among the n addresses
rand_zips = [random.randint(65000, 65999) for i in range(5)]
numbers = [random.randint(1, 9999) for i in range(n)]
streets = [random.choice(street_names) for i in range(n)]
street_suffs = [random.choice(street_types) for i in range(n)]
zips = [random.choice(rand_zips) for i in range(n)]
full_streets = [str(x) + ' ' + y + ' ' + z for x, y, z in zip(numbers, streets, street_suffs)]
# Each entry is [street address, zip code]
reference_data = [list(x) for x in zip(full_streets, zips)][/mw_shl_code]

3. To create the test set, we need a function that randomly introduces a typo into a string and returns the resulting string:

[mw_shl_code=python,true]def create_typo(s, prob=0.75):
    # With probability `prob`, overwrite one random position with a random lowercase letter
    if random.uniform(0, 1) < prob:
        rand_ind = random.choice(range(len(s)))
        s_list = list(s)
        s_list[rand_ind] = random.choice(string.ascii_lowercase)
        s = ''.join(s_list)
    return s

typo_streets = [create_typo(x) for x in streets]
typo_full_streets = [str(x) + ' ' + y + ' ' + z for x, y, z in zip(numbers, typo_streets, street_suffs)]
test_data = [list(x) for x in zip(typo_full_streets, zips)][/mw_shl_code]
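The following standalone sketch checks what `create_typo` produces. It re-declares the function so it runs on its own, and it seeds Python's `random` module, an assumption added only for reproducibility. Because the function substitutes a single character, a typo'd name always has the same length as the original and differs from it in at most one position. The substituted letter can also equal the original letter. Together with the 25% chance of no substitution, this explains why some test addresses (such as `5025 canal rd` in the output of step 12) come out identical to their reference.

```python
import random
import string

def create_typo(s, prob=0.75):
    # Same logic as step 3: with probability `prob`, overwrite one random
    # position with a random lowercase letter.
    if random.uniform(0, 1) < prob:
        rand_ind = random.choice(range(len(s)))
        s_list = list(s)
        s_list[rand_ind] = random.choice(string.ascii_lowercase)
        s = ''.join(s_list)
    return s

def mismatches(a, b):
    # Number of positions where two equal-length strings differ
    return sum(1 for x, y in zip(a, b) if x != y)

random.seed(0)  # fixed seed, only so the sketch is reproducible
names = ['abbey', 'baker', 'canal', 'donner', 'elm'] * 20
typos = [create_typo(name) for name in names]

lengths_preserved = all(len(t) == len(n) for t, n in zip(typos, names))
max_changes = max(mismatches(t, n) for t, n in zip(typos, names))
unchanged = sum(t == n for t, n in zip(typos, names))
print(lengths_preserved, max_changes, unchanged)
```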

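4. Now we can start a graph session and declare the placeholders we need. There are four in total: an address placeholder and a zip code placeholder for each of the test and reference sets.

[mw_shl_code=python,true]sess = tf.Session()
# Test set: one address (as sparse characters) and one zip code per query
test_address = tf.sparse_placeholder(dtype=tf.string)
test_zip = tf.placeholder(shape=[None, 1], dtype=tf.float32)
# Reference set: all n addresses and a (1, n) row of zip codes
ref_address = tf.sparse_placeholder(dtype=tf.string)
ref_zip = tf.placeholder(shape=[None, n], dtype=tf.float32)[/mw_shl_code]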

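5. Now we declare the numerical zip code distance and the edit distance between the address strings:

[mw_shl_code=python,true]# Squared numerical distance from the test zip to every reference zip, shape (1, n)
# (tf.subtract replaces tf.sub, which was removed in TensorFlow 1.0)
zip_dist = tf.square(tf.subtract(ref_zip, test_zip))
# Levenshtein distance divided by the length of the reference (truth) string, shape (n, 1)
address_dist = tf.edit_distance(test_address, ref_address, normalize=True)[/mw_shl_code]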
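For readers without TensorFlow at hand, the following pure-Python sketch approximates what `tf.edit_distance(..., normalize=True)` computes here. It takes the Levenshtein distance between a hypothesis string and a truth string (insertions, deletions, and substitutions each cost 1) and divides it by the length of the truth string. In the call above, `test_address` is the hypothesis and `ref_address` is the truth. The helper names `levenshtein` and `normalized_edit_distance` are illustrative and not part of the recipe.

```python
def levenshtein(hyp, truth):
    # Dynamic programming: prev[j] is the distance between the current
    # prefix of hyp and truth[:j].
    prev = list(range(len(truth) + 1))
    for i, h in enumerate(hyp, start=1):
        curr = [i]
        for j, t in enumerate(truth, start=1):
            cost = 0 if h == t else 1
            curr.append(min(prev[j] + 1,          # delete h
                            curr[j - 1] + 1,      # insert t
                            prev[j - 1] + cost))  # substitute (or match)
        prev = curr
    return prev[-1]

def normalized_edit_distance(hyp, truth):
    # Divide by the truth length, as normalize=True does
    return levenshtein(hyp, truth) / len(truth)

print(levenshtein('8659 beker ln', '8659 baker ln'))               # one substitution
print(normalized_edit_distance('8659 beker ln', '8659 baker ln'))
print(normalized_edit_distance('8765 donnor pass', '900 elm st'))  # can exceed 1
```

The last line shows that the normalized distance is not strictly bounded by 1. It can exceed 1 when the hypothesis is much longer than the truth string.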

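6. We now convert the zip distance and the address distance into similarities. We want a similarity of 1 when the two inputs are identical and a similarity near 0 when they are very different.

For the zip distance, we subtract each distance from the maximum distance and divide by the range of the distances (maximum minus minimum). For the address, the normalized edit distance is the edit distance divided by the length of the reference string. It is usually between 0 and 1, although it can exceed 1 when the test string is much longer than the reference string. We simply subtract it from 1 to get the similarity:

[mw_shl_code=python,true]# Largest and smallest zip distance across the reference set
zip_max = tf.gather(tf.squeeze(zip_dist), tf.argmax(zip_dist, 1))
zip_min = tf.gather(tf.squeeze(zip_dist), tf.argmin(zip_dist, 1))
# Rescale to [0, 1]: closest zip -> 1, farthest -> 0 (NaN if all distances are equal)
zip_sim = tf.divide(tf.subtract(zip_max, zip_dist), tf.subtract(zip_max, zip_min))
address_sim = tf.subtract(1., address_dist)[/mw_shl_code]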
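Here is a worked version of step 6 in plain Python, with no TensorFlow and made-up zip codes. The squared zip distances from one test zip to every reference zip are rescaled so that the closest reference gets similarity 1 and the farthest gets 0. Address distances simply become `1 - distance`. The function names are illustrative. This sketch raises `ZeroDivisionError` when every reference zip is equally far from the test zip; the TensorFlow version produces NaN in that case.

```python
def zip_similarities(test_zip, ref_zips):
    # Squared numerical distance, as in step 5
    dists = [(ref - test_zip) ** 2 for ref in ref_zips]
    d_max, d_min = max(dists), min(dists)
    # Min-max rescaling: closest -> 1.0, farthest -> 0.0
    return [(d_max - d) / (d_max - d_min) for d in dists]

def address_similarities(address_dists):
    # Normalized edit distances become similarities by subtracting from 1
    return [1.0 - d for d in address_dists]

ref_zips = [65463, 65681, 65983, 65463]  # illustrative values
print(zip_similarities(65463, ref_zips))
print(address_similarities([0.0, 0.5, 1.0]))
```

Note that the second reference zip (65681) still scores about 0.82 even though it differs from the test zip. Numerically close zip codes are treated as similar, which is one reason the weights in step 7 matter.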

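7. To combine the two similarities, we take their weighted average. In this recipe we give the address and the zip code equal weight; the weights can be changed depending on how much we trust each feature. We then return the index of the reference entry with the highest similarity:

[mw_shl_code=python,true]address_weight = 0.5
zip_weight = 1. - address_weight
# address_sim is (n, 1), so transpose it to (1, n) to line up with zip_sim
weighted_sim = tf.add(tf.transpose(tf.multiply(address_weight, address_sim)), tf.multiply(zip_weight, zip_sim))
# Index of the most similar reference entry
top_match_index = tf.argmax(weighted_sim, 1)[/mw_shl_code]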
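Step 7 can be sketched in plain Python with made-up similarity values for three reference entries. The weighted score is computed per entry, and the index of the largest score is the match, which corresponds to what `tf.argmax(weighted_sim, 1)` returns for the single test row. The function `best_match` and the numbers are illustrative.

```python
def best_match(address_sim, zip_sim, address_weight=0.5):
    zip_weight = 1.0 - address_weight
    # Weighted average of the two similarities for every reference entry
    weighted = [address_weight * a + zip_weight * z for a, z in zip(address_sim, zip_sim)]
    # Index of the highest combined score (the first one wins on ties)
    best = max(range(len(weighted)), key=weighted.__getitem__)
    return best, weighted

# Illustrative similarities of one test address to three reference entries
address_sim = [0.95, 0.40, 0.60]
zip_sim = [0.0, 1.0, 0.9]
print(best_match(address_sim, zip_sim))                      # equal weights
print(best_match(address_sim, zip_sim, address_weight=0.9))  # trust the street text more
```

With equal weights, entry 2 wins because its zip code is close. Shifting the weight toward the address text makes entry 0, the best street match, win instead.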

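8. To use the edit distance in TensorFlow, we must convert the address strings into sparse vectors. We created the following function in an earlier recipe in this chapter, Working with Text-Based Distances, and will use it again here:

[mw_shl_code=python,true]def sparse_from_word_vec(word_vec):
    num_words = len(word_vec)
    # One entry per character at [word index, 0, character position]
    indices = [[xi, 0, yi] for xi, x in enumerate(word_vec) for yi, y in enumerate(x)]
    chars = list(''.join(word_vec))
    # The last dimension must cover the longest word so every index is in bounds
    max_len = max(len(w) for w in word_vec)
    # Now we return our sparse vector
    return tf.SparseTensorValue(indices, chars, [num_words, 1, max_len])[/mw_shl_code]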
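To see the layout that `sparse_from_word_vec` builds, here is the same index construction in plain Python. It uses no TensorFlow, and the name `sparse_parts` is illustrative. Each character becomes one entry at `[word index, 0, character position]`, and `tf.edit_distance` compares the character sequences along the last dimension for each `[word, 0]` position. This sketch sets the last dimension of the dense shape to the longest word length so that every index is in bounds.

```python
def sparse_parts(word_vec):
    # One sparse entry per character: [word index, 0, character position]
    indices = [[xi, 0, yi] for xi, x in enumerate(word_vec) for yi, _ in enumerate(x)]
    values = list(''.join(word_vec))
    # Dense shape: (number of words, 1, longest word length)
    dense_shape = [len(word_vec), 1, max(len(w) for w in word_vec)]
    return indices, values, dense_shape

indices, values, shape = sparse_parts(['ab', 'c'])
print(indices)  # [[0, 0, 0], [0, 0, 1], [1, 0, 0]]
print(values)   # ['a', 'b', 'c']
print(shape)    # [2, 1, 2]
```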

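9. We separate the addresses and zip codes of the reference dataset so that we can feed them into the placeholders while looping through the test set:

[mw_shl_code=python,true]reference_addresses = [x[0] for x in reference_data]
# Shape (1, n) to match the ref_zip placeholder
reference_zips = np.array([[x[1] for x in reference_data]])[/mw_shl_code]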

10. Using the function from step 8, we create the sparse tensor of reference addresses:

[mw_shl_code=python,true]sparse_ref_set = sparse_from_word_vec(reference_addresses)[/mw_shl_code]

11. Now we can loop through each entry of the test set and return the index of the closest reference entry, printing both the test entry and its match. As you can see, we get very good results on this generated dataset:

[mw_shl_code=python,true]for i in range(n):
    test_address_entry = test_data[i][0]
    test_zip_entry = [[test_data[i][1]]]  # shape (1, 1) for the test_zip placeholder
    # Repeat the test address n times so it is compared with every reference address
    test_address_repeated = [test_address_entry] * n
    sparse_test_set = sparse_from_word_vec(test_address_repeated)
    feeddict = {test_address: sparse_test_set,
                test_zip: test_zip_entry,
                ref_address: sparse_ref_set,
                ref_zip: reference_zips}
    # argmax returns a length-1 array; take its single element as an index
    best_match = sess.run(top_match_index, feed_dict=feeddict)[0]
    best_street = reference_addresses[best_match]
    best_zip = reference_zips[0][best_match]
    [[test_zip_]] = test_zip_entry
    print('Address: ' + str(test_address_entry) + ', ' + str(test_zip_))
    print('Match : ' + str(best_street) + ', ' + str(best_zip))[/mw_shl_code]

12. This results in the following output:

[mw_shl_code=python,true]Address: 8659 beker ln, 65463
Match : 8659 baker ln, 65463
Address: 1048 eanal ln, 65681
Match : 1048 canal ln, 65681
Address: 1756 vaker st, 65983
Match : 1756 baker st, 65983
Address: 900 abbjy pass, 65983
Match : 900 abbey pass, 65983
Address: 5025 canal rd, 65463
Match : 5025 canal rd, 65463
Address: 6814 elh st, 65154
Match : 6814 elm st, 65154
Address: 3057 cagal ave, 65463
Match : 3057 canal ave, 65463
Address: 7776 iaker ln, 65681
Match : 7776 baker ln, 65681
Address: 5167 caker rd, 65154
Match : 5167 baker rd, 65154
Address: 8765 donnor st, 65154
Match : 8765 donner st, 65154[/mw_shl_code]

How it works…

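One of the hard parts of an address matching problem like this is choosing the weights and deciding how to scale the distances. This may take some exploration of, and insight into, the data itself.

We may also consider different address components than we did here. The street number could be treated as a component separate from the street name, and we could add other components such as city and state. Numerical address components can be treated either as numbers (with a numerical distance) or as characters (with an edit distance); the choice is up to you. Likewise, we might use an edit distance for the zip code if we think zip code typos come from human entry rather than, say, computer mapping errors.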
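To make the zip code trade-off concrete, this plain-Python sketch compares both treatments using illustrative zip codes. A single mistyped leading digit, or two swapped digits (typical human-entry typos), moves a zip code thousands of units away numerically but only one or two edits away as a string. A numerically neighboring zip code is close under both measures.

```python
def levenshtein(a, b):
    # Character-level edit distance (insert, delete, substitute all cost 1)
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = curr
    return prev[-1]

true_zip = '65463'
results = {}
for candidate in ['56463', '65464', '75463']:
    numeric = abs(int(candidate) - int(true_zip))  # numerical distance
    edits = levenshtein(candidate, true_zip)       # edit distance
    results[candidate] = (numeric, edits)
    print(candidate, numeric, edits)
```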

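To get a feel for how typos affect the results, we encourage the reader to change the typo function to make more typos, or more frequent typos. Increasing the size of the datasets will also show how well this algorithm holds up.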
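One way to follow that suggestion is a variant of `create_typo` that applies several independent substitutions. The names `create_typos` and `n_typos` are hypothetical and not part of the recipe; this is a sketch, not the book's code.

```python
import random
import string

def create_typos(s, n_typos=2, prob=0.75):
    # Hypothetical extension of create_typo: make n_typos attempts, each
    # replacing one random position with probability prob.
    s_list = list(s)
    for _ in range(n_typos):
        if random.uniform(0, 1) < prob:
            rand_ind = random.randrange(len(s_list))
            s_list[rand_ind] = random.choice(string.ascii_lowercase)
    return ''.join(s_list)

random.seed(1)  # reproducibility only
samples = [create_typos('donner', n_typos=3, prob=1.0) for _ in range(5)]
print(samples)
```

Raising `n_typos` or `prob` and rerunning the matching loop shows how quickly the nearest-neighbor matches start to fail.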

Using Nearest Neighbors for Image Recognition

Getting ready

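Nearest neighbors can also be used for image recognition. The "Hello World" of image recognition datasets is the MNIST handwritten digit dataset. We will use this dataset with various neural network image recognition algorithms in later chapters, so it is useful to have results from a non-neural-network algorithm to compare against.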

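The MNIST digit dataset consists of tens of thousands of labeled images, each 28×28 pixels in size. Although these are considered small images, each one still has 784 pixels (features) for the nearest neighbor algorithm to compare. We will compute the nearest neighbor prediction for this classification problem by taking the mode of the labels of the k nearest neighbors (k = 4 in this example).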
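The prediction rule itself takes the k closest training points and returns the most common label among them. Here it is in plain Python on toy two-pixel "images". The data and the function name `knn_predict` are illustrative, and the L1 distance matches the metric used later in this recipe.

```python
from collections import Counter

def knn_predict(x, train_x, train_y, k=4):
    # L1 distance from x to every training point
    dists = [sum(abs(a - b) for a, b in zip(x, t)) for t in train_x]
    # Indices of the k nearest training points
    nearest = sorted(range(len(train_x)), key=dists.__getitem__)[:k]
    votes = Counter(train_y[i] for i in nearest)
    # Mode of the neighbor labels (most_common orders ties by first occurrence)
    return votes.most_common(1)[0][0]

train_x = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5], [6, 6]]
train_y = [0, 0, 0, 1, 1, 1, 1]
print(knn_predict([0.5, 0.5], train_x, train_y))  # 0
print(knn_predict([5.5, 5.5], train_x, train_y))  # 1
```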

How to do it…

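1. We start by loading the necessary libraries. Note that we also import matplotlib and the Python Imaging Library (PIL) so that we can plot a sample of the predicted outputs. TensorFlow also has a built-in method for loading the MNIST dataset, which we will use:

[mw_shl_code=python,true]import random
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt
from PIL import Image
# MNIST helper that ships with TensorFlow 1.x (not available in TensorFlow 2.x)
from tensorflow.examples.tutorials.mnist import input_data[/mw_shl_code]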

2. Now we start a graph session and load the MNIST data in one-hot encoded form:

[mw_shl_code=python,true]sess = tf.Session()
# Labels are loaded as one-hot vectors of length 10
mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)[/mw_shl_code]

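3. The MNIST dataset is large, and computing distances over 784 features for tens of thousands of inputs would be computationally expensive. We therefore sample a smaller set of images to train on. We also choose a test set size that is divisible by six (102 = 17 × 6). This is purely for plotting purposes, since we will plot the last batch of six images to see a sample of the results:

[mw_shl_code=python,true]train_size = 1000
test_size = 102
# Sample distinct indices (no replacement) from the training and test sets
rand_train_indices = np.random.choice(len(mnist.train.images), train_size, replace=False)
rand_test_indices = np.random.choice(len(mnist.test.images), test_size, replace=False)
x_vals_train = mnist.train.images[rand_train_indices]
x_vals_test = mnist.test.images[rand_test_indices]
y_vals_train = mnist.train.labels[rand_train_indices]
y_vals_test = mnist.test.labels[rand_test_indices][/mw_shl_code]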
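`np.random.choice(..., replace=False)` draws distinct indices. Indexing both the images and the labels with the same index array keeps each image paired with its label. The same idea in the standard library uses `random.sample`; the small lists below are illustrative stand-ins for the MNIST arrays.

```python
import random

images = [f'img{i}' for i in range(20)]  # stand-ins for image rows
labels = [i % 10 for i in range(20)]     # matching labels

random.seed(42)  # reproducibility only
idx = random.sample(range(len(images)), 6)  # 6 distinct indices, no replacement
sample_x = [images[i] for i in idx]
sample_y = [labels[i] for i in idx]
print(idx, sample_y)
```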

4. We declare our k value and batch size:

[mw_shl_code=python,true]k = 4
batch_size = 6[/mw_shl_code]

5. Now we initialize the placeholders that we will feed into the graph:

[mw_shl_code=python,true]# Flattened 28x28 images (784 pixels) and one-hot labels (10 digits)
x_data_train = tf.placeholder(shape=[None, 784], dtype=tf.float32)
x_data_test = tf.placeholder(shape=[None, 784], dtype=tf.float32)
y_target_train = tf.placeholder(shape=[None, 10], dtype=tf.float32)
y_target_test = tf.placeholder(shape=[None, 10], dtype=tf.float32)[/mw_shl_code]

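6. We declare our distance metric. Here we use the L1 metric (the sum of absolute pixel differences):

[mw_shl_code=python,true]# (train, 784) minus (batch, 1, 784) broadcasts to (batch, train, 784); summing over pixels gives (batch, train)
distance = tf.reduce_sum(tf.abs(tf.subtract(x_data_train, tf.expand_dims(x_data_test, 1))), axis=2)[/mw_shl_code]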
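`tf.expand_dims(x_data_test, 1)` turns a test batch of shape (b, 784) into (b, 1, 784). Subtracting it from the training matrix of shape (n_train, 784) broadcasts to (b, n_train, 784). Summing over the last axis then leaves a (b, n_train) matrix of L1 distances. The following sketch computes the same matrix with nested loops in plain Python for tiny three-pixel inputs; the values are illustrative.

```python
def l1_distance_matrix(test, train):
    # Row i, column j: sum of absolute pixel differences between test[i] and train[j]
    return [[sum(abs(a - b) for a, b in zip(t, r)) for r in train] for t in test]

train = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [0.0, 0.5, 1.0]]
test = [[0.0, 0.0, 1.0], [1.0, 1.0, 0.0]]
dist = l1_distance_matrix(test, train)
print(dist)  # 2 rows (test images) x 3 columns (training images)
```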

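7. Now we find the k closest training images and predict the mode of their labels. Because the labels are one-hot encoded, we take the mode by summing the k one-hot vectors and finding the digit that occurs most often:

[mw_shl_code=python,true]# top_k of the negated distances = indices of the k smallest distances
top_k_xvals, top_k_indices = tf.nn.top_k(tf.negative(distance), k=k)
# One-hot labels of the k neighbors: (batch, k, 10)
prediction_indices = tf.gather(y_target_train, top_k_indices)
# Vote count per digit: (batch, 10)
count_of_predictions = tf.reduce_sum(prediction_indices, axis=1)
# Most common digit among the neighbors
prediction = tf.argmax(count_of_predictions, axis=1)[/mw_shl_code]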
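Summing the one-hot rows yields the mode for the following reason. Taking the top k of the negated distances returns the indices of the k smallest distances, and `tf.gather` collects those neighbors' one-hot label rows. Summing the rows produces a vote count per class, and the `argmax` of the counts is the most common label. Below is a plain-Python version for one test image with k = 4 and three classes (illustrative values). This sketch breaks ties toward the lowest class index, which the TensorFlow version does not promise.

```python
def predict_from_one_hot(distances, one_hot_labels, k=4):
    # Indices of the k smallest distances (what top_k of the negated distances selects)
    nearest = sorted(range(len(distances)), key=lambda i: distances[i])[:k]
    # Sum the neighbors' one-hot rows: one vote count per class
    num_classes = len(one_hot_labels[0])
    counts = [sum(one_hot_labels[i][c] for i in nearest) for c in range(num_classes)]
    # argmax of the counts = most common label
    return counts.index(max(counts)), counts

distances = [3.0, 0.5, 2.0, 0.7, 9.0, 1.0]
one_hot = [[1, 0, 0], [0, 1, 0], [0, 1, 0], [0, 1, 0], [1, 0, 0], [0, 0, 1]]
label, counts = predict_from_one_hot(distances, one_hot)
print(label, counts)
```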

8. We can now loop through the test set, computing the predictions and storing them:

[mw_shl_code=python,true]num_loops = int(np.ceil(len(x_vals_test) / batch_size))
test_output = []
actual_vals = []
for i in range(num_loops):
    min_index = i * batch_size
    # Clip the last batch at the end of the test set
    max_index = min((i + 1) * batch_size, len(x_vals_test))
    x_batch = x_vals_test[min_index:max_index]
    y_batch = y_vals_test[min_index:max_index]
    predictions = sess.run(prediction, feed_dict={x_data_train: x_vals_train, x_data_test: x_batch,
                                                  y_target_train: y_vals_train, y_target_test: y_batch})
    test_output.extend(predictions)
    actual_vals.extend(np.argmax(y_batch, axis=1))[/mw_shl_code]
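The loop splits the test set into `ceil(len(x_vals_test) / batch_size)` consecutive slices, and the last slice never runs past the end of the test set. With the sizes used here (102 test images, batches of 6), that gives 17 full batches. The slice bounds can be checked in plain Python; `batch_bounds` is an illustrative helper, not part of the recipe.

```python
import math

def batch_bounds(n_items, batch_size):
    # (start, stop) for each batch; the last batch may be shorter
    num_loops = math.ceil(n_items / batch_size)
    return [(i * batch_size, min((i + 1) * batch_size, n_items)) for i in range(num_loops)]

bounds = batch_bounds(102, 6)
print(len(bounds), bounds[0], bounds[-1])  # 17 (0, 6) (96, 102)
print(batch_bounds(10, 4))                 # [(0, 4), (4, 8), (8, 10)]
```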

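9. Now that we have saved the actual and predicted outputs, we can calculate the accuracy. The exact value changes from run to run because the test and training sets are sampled at random, but it should come out at around 80% to 90%:

[mw_shl_code=python,true]# Fraction of test images whose predicted digit equals the true digit
accuracy = sum([1. / test_size for i in range(test_size) if test_output[i] == actual_vals[i]])
print('Accuracy on test set: ' + str(accuracy))
# Example output (varies with the random sample):
# Accuracy on test set: 0.8333333333333325[/mw_shl_code]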

10. Here is the code to plot the results of the last batch:

[mw_shl_code=python,true]actuals = np.argmax(y_batch, axis=1)
Nrows = 2
Ncols = 3
for i in range(len(actuals)):
    plt.subplot(Nrows, Ncols, i + 1)
    # Each row of x_batch is a flattened 28x28 image
    plt.imshow(np.reshape(x_batch[i], [28, 28]), cmap='Greys_r')
    plt.title('Actual: ' + str(actuals[i]) + ' Pred: ' + str(predictions[i]), fontsize=10)
    frame = plt.gca()
    frame.axes.get_xaxis().set_visible(False)
    frame.axes.get_yaxis().set_visible(False)
plt.show()[/mw_shl_code]

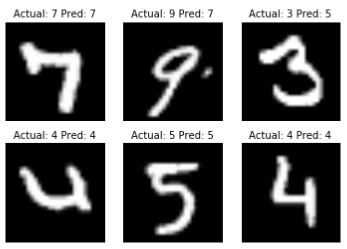

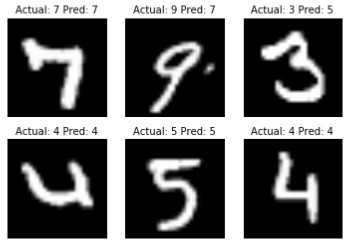

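Figure 4: The last batch of six images on which we ran the nearest neighbor prediction. We can see that not all of the images are predicted correctly.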

How it works…

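Given enough computation time and resources, we could have made the test and training sets bigger. This would probably have increased our accuracy, and a larger training set is also a common way to prevent overfitting. This algorithm also warrants further exploration of the ideal value of k, which would be chosen after a set of cross-validation experiments on the dataset.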
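Here is a minimal sketch of such an experiment. It assumes leave-one-out cross-validation, which is one of several possible schemes, on a small toy dataset. Each point is classified by its neighbors among the remaining points, and the k with the highest held-out accuracy is kept. The data, the candidate k values, and the helper names are illustrative.

```python
from collections import Counter

def knn_label(x, train_x, train_y, k):
    # L1 distance, then majority vote among the k nearest points
    dists = [sum(abs(a - b) for a, b in zip(x, t)) for t in train_x]
    nearest = sorted(range(len(train_x)), key=dists.__getitem__)[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]

def loo_accuracy(xs, ys, k):
    # Leave-one-out: predict each point from all the others
    hits = 0
    for i in range(len(xs)):
        rest_x = xs[:i] + xs[i + 1:]
        rest_y = ys[:i] + ys[i + 1:]
        hits += knn_label(xs[i], rest_x, rest_y, k) == ys[i]
    return hits / len(xs)

xs = [[0, 0], [0, 1], [1, 0], [1, 1], [4, 4], [4, 5], [5, 4], [5, 5], [2, 2]]
ys = [0, 0, 0, 0, 1, 1, 1, 1, 1]
scores = {k: loo_accuracy(xs, ys, k) for k in (1, 3, 5, 7)}
best_k = max(scores, key=scores.get)
print(scores, best_k)
```

On MNIST, the same loop would be run over a held-out split of the training images rather than leave-one-out, since the latter needs one distance pass per training image.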

There's more…

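We can also use the nearest neighbor algorithm to evaluate new, unseen digits provided by a user. See the online repository for a way to use this model to evaluate user-input digits: https://github.com/nfmcclure/tensorflow_cookbook.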

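In this chapter, we explored how to use kNN algorithms for regression and classification. We discussed different uses of distance functions and how to mix them together. We encourage the reader to explore different distance metrics, weights, and k values to optimize the accuracy of these methods.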

