
Spark SQL vs. Hive on MapReduce: A Speed Comparison


1. Run the spark-sql shell

aboutyun@aboutyun:/opt/spark-1.6.1-bin-hadoop2.6/bin$ spark-sql

spark-sql> create external table cn(x bigint, y bigint, z bigint, k bigint)

> row format delimited fields terminated by ','

> location '/cn';

OK

Time taken: 0.876 seconds

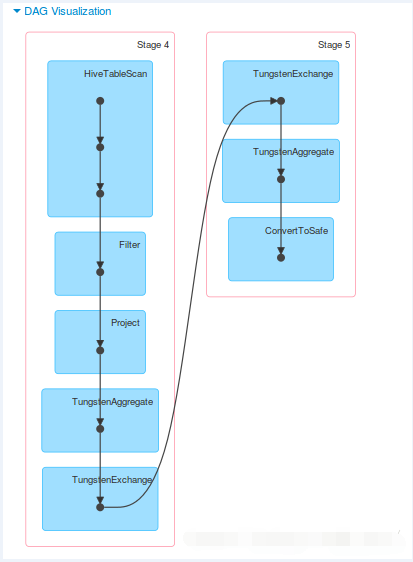

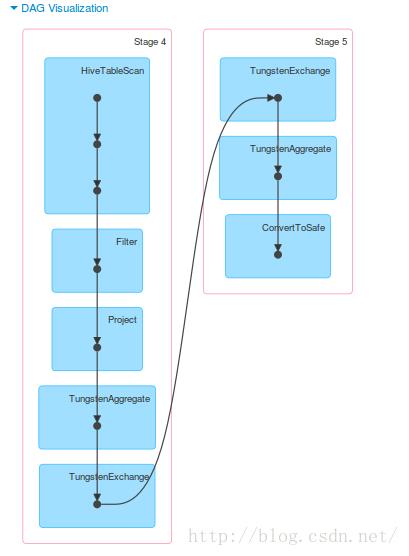

spark-sql> create table p as select y, z, sum(k) as t

> from cn where x>=20141228 and x<=20150110 group by y, z;

Time taken: 20.658 seconds

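The Jobs page of the Spark web UI shows the job took 15 s, so why did the shell report nearly 21 s? I'm not sure either, so I'll set that aside for now.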
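For readers less familiar with HiveQL, here is a minimal pure-Python sketch of what the `create table p as select ...` query computes. It keeps the rows whose `x` lies in the inclusive range [20141228, 20150110] and then sums `k` for each `(y, z)` pair. The sketch rests on a few assumptions. The files under `/cn` are taken to be plain `x,y,z,k` integer lines, as the DDL declares, with no NULL or malformed fields. `x` appears to be a date encoded as a yyyymmdd integer, so the integer range covers 2014-12-28 through 2015-01-10. The function name `aggregate` and the sample rows are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def aggregate(lines: Iterable[str],
              lo: int = 20141228,
              hi: int = 20150110) -> Dict[Tuple[int, int], int]:
    """Rough equivalent of:
    SELECT y, z, SUM(k) AS t FROM cn
    WHERE x >= 20141228 AND x <= 20150110 GROUP BY y, z
    Assumes every line is a well-formed 'x,y,z,k' record (no NULL handling).
    """
    totals: Dict[Tuple[int, int], int] = defaultdict(int)
    for line in lines:
        x, y, z, k = (int(field) for field in line.strip().split(","))
        if lo <= x <= hi:          # WHERE clause, inclusive on both ends
            totals[(y, z)] += k    # GROUP BY y, z with SUM(k)
    return dict(totals)


# Hypothetical sample rows, not taken from the real /cn data set.
sample = [
    "20141227,1,1,5",   # before the range: filtered out
    "20141228,1,1,3",
    "20150110,1,1,4",
    "20150105,2,7,10",
    "20150111,2,7,99",  # after the range: filtered out
]
print(aggregate(sample))  # {(1, 1): 7, (2, 7): 10}
```

Both engines run this same logic; the sketch only illustrates the semantics and says nothing about how Spark or MapReduce distribute the work.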

2. Run Hive

aboutyun@aboutyun:~$ hive

hive> create external table cn(x bigint, y bigint, z bigint, k bigint)

> row format delimited fields terminated by ','

> location '/cn';

OK

Time taken: 0.752 seconds

hive> create table p as select y, z, sum(k) as t

> from cn where x>=20141228 and x<=20150110 group by y, z;

Query ID = guo_20160515161032_2e035fc2-6214-402a-90dd-7acda7d638bf

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1463299536204_0001, Tracking URL = http://drguo:8088/proxy/application_1463299536204_0001/

Kill Command = /opt/Hadoop/hadoop-2.7.2/bin/hadoop job -kill job_1463299536204_0001

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2016-05-15 16:10:52,353 Stage-1 map = 0%, reduce = 0%

2016-05-15 16:11:03,418 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 8.65 sec

2016-05-15 16:11:13,257 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 13.99 sec

MapReduce Total cumulative CPU time: 13 seconds 990 msec

Ended Job = job_1463299536204_0001

Moving data to: hdfs://drguo:9000/user/hive/warehouse/predict

Table default.predict stats: [numFiles=1, numRows=1486, totalSize=15256, rawDataSize=13770]

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 13.99 sec HDFS Read: 16551670 HDFS Write: 15332 SUCCESS

Total MapReduce CPU Time Spent: 13 seconds 990 msec

OK

Time taken: 42.887 seconds
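The log line `Estimated from input data size: 1` comes from a ceiling division of input size by bytes per reducer. The sketch below is a simplified model of that idea, not Hive's exact implementation. It assumes the defaults of recent Hive releases: `hive.exec.reducers.bytes.per.reducer` = 256,000,000 and `hive.exec.reducers.max` = 1009. Defaults differ across versions. It also uses the `HDFS Read: 16551670` counter as a stand-in for the input size. The function name `estimate_reducers` is hypothetical.

```python
def estimate_reducers(input_bytes: int,
                      bytes_per_reducer: int = 256_000_000,
                      max_reducers: int = 1009) -> int:
    # Ceiling division: one reducer per bytes_per_reducer of input...
    reducers = (input_bytes + bytes_per_reducer - 1) // bytes_per_reducer
    # ...but never fewer than 1 and never more than max_reducers.
    return min(max_reducers, max(1, reducers))


# About 16.5 MB of input, taken from the "HDFS Read: 16551670" counter above.
print(estimate_reducers(16_551_670))  # 1
```

With only about 16.5 MB of input, one reducer is the natural outcome. Setting `mapreduce.job.reduces`, as the log itself suggests, fixes the reducer count and bypasses this estimate.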

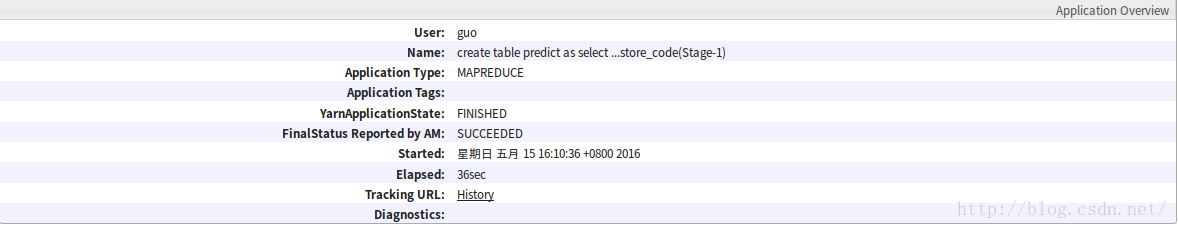

That took nearly 43 s. The ResourceManager (RM) web UI shows the job itself took 36 s.

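The result is very clear.

Going by the times reported in the shells, Spark SQL vs. Hive is 21 s : 43 s.

Going by the times shown in the web UIs, it is 15 s : 36 s.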
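The two ratios can be turned into speedup factors using only the numbers reported in this post. The shell values are the `Time taken` lines; the web UI values come from the Spark Jobs page and the YARN ResourceManager. The sketch also prints, for each engine, the gap between the shell time and the web UI time, which is the unexplained difference noted for Spark SQL. Attributing that gap to work outside the job itself, such as query planning, metastore calls, or moving output files, is an assumption; it was not measured here.

```python
# Wall-clock times reported in this post, in seconds.
TIMINGS = {
    "shell": {"spark_sql": 20.658, "hive": 42.887},   # "Time taken" in each CLI
    "web_ui": {"spark_sql": 15.0, "hive": 36.0},      # Spark Jobs page / YARN RM
}


def speedup(source: str) -> float:
    """How many times faster Spark SQL ran than Hive, for one timing source."""
    t = TIMINGS[source]
    return t["hive"] / t["spark_sql"]


def overhead(engine: str) -> float:
    """Seconds the shell reports beyond what the web UI shows."""
    return TIMINGS["shell"][engine] - TIMINGS["web_ui"][engine]


for source in TIMINGS:
    print(f"{source}: {speedup(source):.2f}x")            # shell: 2.08x, web_ui: 2.40x
for engine in ("spark_sql", "hive"):
    print(f"{engine} overhead: {overhead(engine):.1f} s")  # 5.7 s, then 6.9 s
```

Either way, Spark SQL finished this single small query roughly 2 to 2.4 times faster. One run on about 16.5 MB of data is a rough indication, not a general benchmark.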
