问题导读:

1、如何以月为单位统计,购买2次以上商品的用户?

2、如何设计用户购买商品明细表(宽表)?

3、如何统计各用户等级对应的复购率前十的商品排行?

4、如何统计每等级用户每产品的下单次数?

上一篇:大数据项目之电商数仓(总结)(四):系统业务数据仓库

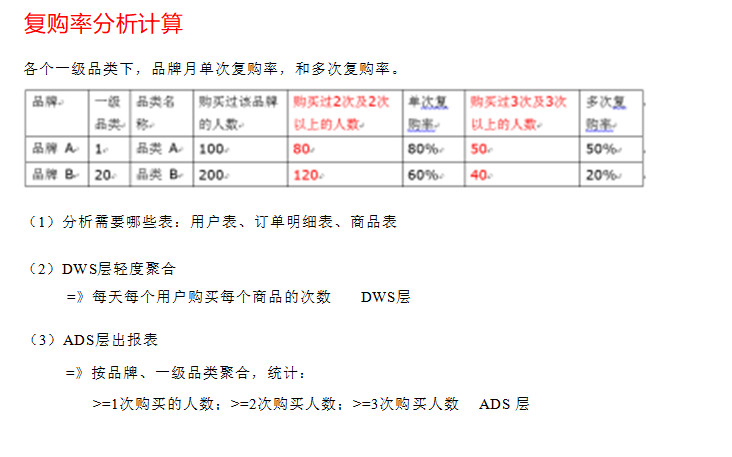

第6章 需求三:品牌复购率

需求:以月为单位统计,购买2次以上商品的用户

6.1 复购率计算分析

6.2 DWS层

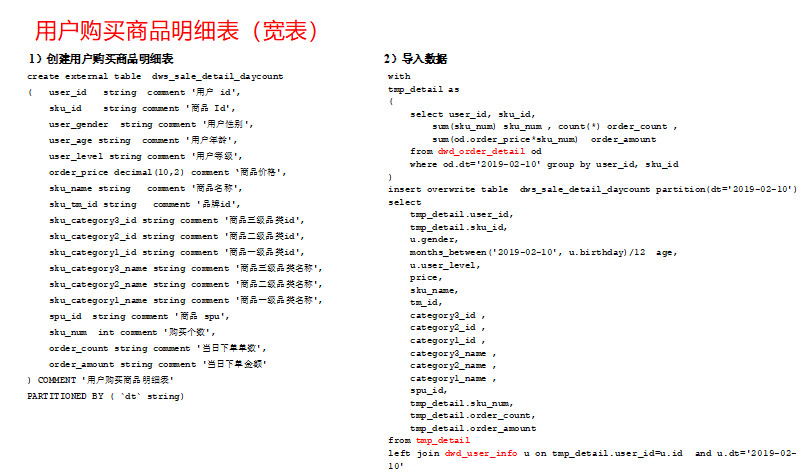

6.2.1 用户购买商品明细表(宽表)

- hive (gmall)>

- drop table if exists dws_sale_detail_daycount;

- create external table dws_sale_detail_daycount

- (

- user_id string comment '用户 id',

- sku_id string comment '商品 Id',

- user_gender string comment '用户性别',

- user_age string comment '用户年龄',

- user_level string comment '用户等级',

- order_price decimal(10,2) comment '商品价格',

- sku_name string comment '商品名称',

- sku_tm_id string comment '品牌id',

- sku_category3_id string comment '商品三级品类id',

- sku_category2_id string comment '商品二级品类id',

- sku_category1_id string comment '商品一级品类id',

- sku_category3_name string comment '商品三级品类名称',

- sku_category2_name string comment '商品二级品类名称',

- sku_category1_name string comment '商品一级品类名称',

- spu_id string comment '商品 spu',

- sku_num int comment '购买个数',

- order_count string comment '当日下单单数',

- order_amount string comment '当日下单金额'

- ) COMMENT '用户购买商品明细表'

- PARTITIONED BY (`dt` string)

- stored as parquet

- location '/warehouse/gmall/dws/dws_user_sale_detail_daycount/'

- tblproperties ("parquet.compression"="snappy");

6.2.2 数据导入

- hive (gmall)>

- with

- tmp_detail as

- (

- select

- user_id,

- sku_id,

- sum(sku_num) sku_num,

- count(*) order_count,

- sum(od.order_price*sku_num) order_amount

- from dwd_order_detail od

- where od.dt='2019-02-10'

- group by user_id, sku_id

- )

- insert overwrite table dws_sale_detail_daycount partition(dt='2019-02-10')

- select

- tmp_detail.user_id,

- tmp_detail.sku_id,

- u.gender,

- months_between('2019-02-10', u.birthday)/12 age,

- u.user_level,

- price,

- sku_name,

- tm_id,

- category3_id,

- category2_id,

- category1_id,

- category3_name,

- category2_name,

- category1_name,

- spu_id,

- tmp_detail.sku_num,

- tmp_detail.order_count,

- tmp_detail.order_amount

- from tmp_detail

- left join dwd_user_info u on tmp_detail.user_id =u.id and u.dt='2019-02-10'

- left join dwd_sku_info s on tmp_detail.sku_id =s.id and s.dt='2019-02-10';

6.2.3 数据导入脚本

1)在/home/kgg/bin目录下创建脚本dws_sale.sh

- [kgg@hadoop102 bin]$ vim dws_sale.sh

- 在脚本中填写如下内容

- #!/bin/bash

-

- # 定义变量方便修改

- APP=gmall

- hive=/opt/module/hive/bin/hive

-

- # 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天

- if [ -n "$1" ] ;then

- do_date=$1

- else

- do_date=`date -d "-1 day" +%F`

- fi

-

- sql="

-

- set hive.exec.dynamic.partition.mode=nonstrict;

-

- with

- tmp_detail as

- (

- select

- user_id,

- sku_id,

- sum(sku_num) sku_num,

- count(*) order_count,

- sum(od.order_price*sku_num) order_amount

- from "$APP".dwd_order_detail od

- where od.dt='$do_date'

- group by user_id, sku_id

- )

- insert overwrite table "$APP".dws_sale_detail_daycount partition(dt='$do_date')

- select

- tmp_detail.user_id,

- tmp_detail.sku_id,

- u.gender,

- months_between('$do_date', u.birthday)/12 age,

- u.user_level,

- price,

- sku_name,

- tm_id,

- category3_id,

- category2_id,

- category1_id,

- category3_name,

- category2_name,

- category1_name,

- spu_id,

- tmp_detail.sku_num,

- tmp_detail.order_count,

- tmp_detail.order_amount

- from tmp_detail

- left join "$APP".dwd_user_info u

- on tmp_detail.user_id=u.id and u.dt='$do_date'

- left join "$APP".dwd_sku_info s on tmp_detail.sku_id =s.id and s.dt='$do_date';

-

- "

- $hive -e "$sql"

2)增加脚本执行权限

- [kgg@hadoop102 bin]$ chmod 777 dws_sale.sh

3)执行脚本导入数据

- [kgg@hadoop102 bin]$ dws_sale.sh 2019-02-11

4)查看导入数据

- hive (gmall)>

- select * from dws_sale_detail_daycount where dt='2019-02-11' limit 2;

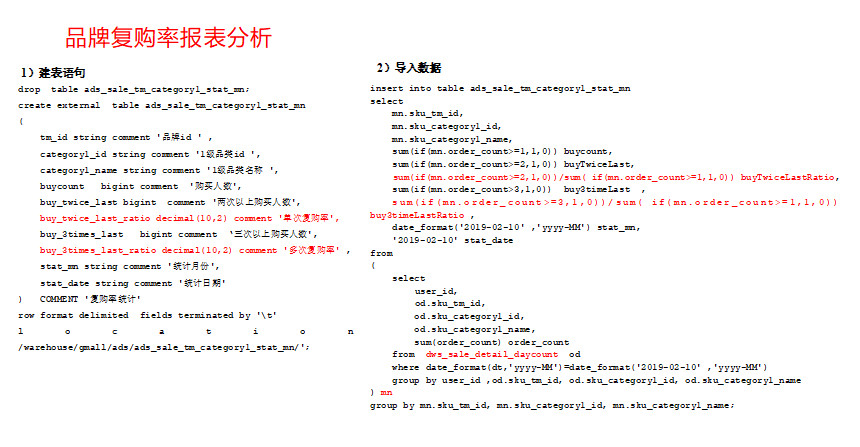

6.3 ADS层品牌复购率

6.3.1 建表语句

- hive (gmall)>

- drop table ads_sale_tm_category1_stat_mn;

- create external table ads_sale_tm_category1_stat_mn

- (

- tm_id string comment '品牌id',

- category1_id string comment '1级品类id ',

- category1_name string comment '1级品类名称 ',

- buycount bigint comment '购买人数',

- buy_twice_last bigint comment '两次以上购买人数',

- buy_twice_last_ratio decimal(10,2) comment '单次复购率',

- buy_3times_last bigint comment '三次以上购买人数',

- buy_3times_last_ratio decimal(10,2) comment '多次复购率',

- stat_mn string comment '统计月份',

- stat_date string comment '统计日期'

- ) COMMENT '复购率统计'

- row format delimited fields terminated by '\t'

- location '/warehouse/gmall/ads/ads_sale_tm_category1_stat_mn/';

6.3.2 数据导入

1)数据导入

- hive (gmall)>

- insert into table ads_sale_tm_category1_stat_mn

- select

- mn.sku_tm_id,

- mn.sku_category1_id,

- mn.sku_category1_name,

- sum(if(mn.order_count>=1,1,0)) buycount,

- sum(if(mn.order_count>=2,1,0)) buyTwiceLast,

- sum(if(mn.order_count>=2,1,0))/sum( if(mn.order_count>=1,1,0)) buyTwiceLastRatio,

- sum(if(mn.order_count>=3,1,0)) buy3timeLast ,

- sum(if(mn.order_count>=3,1,0))/sum( if(mn.order_count>=1,1,0)) buy3timeLastRatio ,

- date_format('2019-02-10' ,'yyyy-MM') stat_mn,

- '2019-02-10' stat_date

- from

- (

- select

- user_id,

- sd.sku_tm_id,

- sd.sku_category1_id,

- sd.sku_category1_name,

- sum(order_count) order_count

- from dws_sale_detail_daycount sd

- where date_format(dt,'yyyy-MM')=date_format('2019-02-10' ,'yyyy-MM')

- group by user_id, sd.sku_tm_id, sd.sku_category1_id, sd.sku_category1_name

- ) mn

- group by mn.sku_tm_id, mn.sku_category1_id, mn.sku_category1_name;

2)查询导入数据

- hive (gmall)> select * from ads_sale_tm_category1_stat_mn;

6.3.3 数据导入脚本

1)在/home/kgg/bin目录下创建脚本ads_sale.sh

- [kgg@hadoop102 bin]$ vim ads_sale.sh

- 在脚本中填写如下内容

- #!/bin/bash

-

- # 定义变量方便修改

- APP=gmall

- hive=/opt/module/hive/bin/hive

-

- # 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天

- if [ -n "$1" ] ;then

- do_date=$1

- else

- do_date=`date -d "-1 day" +%F`

- fi

-

- sql="

-

- set hive.exec.dynamic.partition.mode=nonstrict;

-

- insert into table "$APP".ads_sale_tm_category1_stat_mn

- select

- mn.sku_tm_id,

- mn.sku_category1_id,

- mn.sku_category1_name,

- sum(if(mn.order_count>=1,1,0)) buycount,

- sum(if(mn.order_count>=2,1,0)) buyTwiceLast,

- sum(if(mn.order_count>=2,1,0))/sum( if(mn.order_count>=1,1,0)) buyTwiceLastRatio,

- sum(if(mn.order_count>=3,1,0)) buy3timeLast,

- sum(if(mn.order_count>=3,1,0))/sum( if(mn.order_count>=1,1,0)) buy3timeLastRatio ,

- date_format('$do_date' ,'yyyy-MM') stat_mn,

- '$do_date' stat_date

- from

- (

- select

- user_id,

- od.sku_tm_id,

- od.sku_category1_id,

- od.sku_category1_name,

- sum(order_count) order_count

- from "$APP".dws_sale_detail_daycount od

- where date_format(dt,'yyyy-MM')=date_format('$do_date' ,'yyyy-MM')

- group by user_id, od.sku_tm_id, od.sku_category1_id, od.sku_category1_name

- ) mn

- group by mn.sku_tm_id, mn.sku_category1_id, mn.sku_category1_name;

-

- "

- $hive -e "$sql"

2)增加脚本执行权限

- [kgg@hadoop102 bin]$ chmod 777 ads_sale.sh

3)执行脚本导入数据

- [kgg@hadoop102 bin]$ ads_sale.sh 2019-02-11

4)查看导入数据

- hive (gmall)>

- select * from ads_sale_tm_category1_stat_mn limit 2;

第7章 需求四:各用户等级对应的复购率前十的商品排行

7.1 DWS层

使用用户购买明细表宽表(dws_sale_detail_daycount)作为DWS数据

7.2 ADS层

7.2.1 建表语句

- drop table ads_ul_rep_ratio;

- create table ads_ul_rep_ratio(

- user_level string comment '用户等级' ,

- sku_id string comment '商品id',

- buy_count bigint comment '购买总人数',

- buy_twice_count bigint comment '两次购买总数',

- buy_twice_rate decimal(10,2) comment '二次复购率',

- rank string comment '排名' ,

- state_date string comment '统计日期'

- ) COMMENT '复购率统计'

- row format delimited fields terminated by '\t'

- location '/warehouse/gmall/ads/ads_ul_rep_ratio/';

7.2.2 导入数据

- with

- tmp_count as(

- select -- 每个等级内每个用户对每个产品的下单次数

- user_level,

- user_id,

- sku_id,

- sum(order_count) order_count

- from dws_sale_detail_daycount

- where dt<='2019-10-05'

- group by user_level, user_id, sku_id

- )

- insert overwrite table ads_ul_rep_ratio

- select

- *

- from(

- select

- user_level,

- sku_id,

- sum(if(order_count >=1, 1, 0)) buy_count,

- sum(if(order_count >=2, 1, 0)) buy_twice_count,

- sum(if(order_count >=2, 1, 0)) / sum(if(order_count >=1, 1, 0)) * 100 buy_twice_rate,

- row_number() over(partition by user_level order by sum(if(order_count >=2, 1, 0)) / sum(if(order_count >=1, 1, 0)) desc) rn,

- '2019-10-05'

- from tmp_count

- group by user_level, sku_id

- ) t1

- where rn<=10

7.2.2 导入数据脚本

1)在/home/kgg/bin目录下创建脚本ads_ul_rep_ratio.sh

- [kgg@hadoop102 bin]$ vim ads_ul_rep_ratio.sh

- 在脚本中填写如下内容

- #!/bin/bash

-

- db=gmall

- hive=/opt/module/hive-1.2.1/bin/hive

- hadoop=/opt/module/hadoop-2.7.2/bin/hadoop

-

- if [[ -n $1 ]]; then

- do_date=$1

- else

- do_date=`date -d '-1 day' +%F`

- fi

-

- sql="

- use gmall;

- with

- tmp_count as(

- select -- 每等级用户每产品的下单次数

- user_level,

- sku_id,

- sum(order_count) order_count

- from dws_sale_detail_daycount

- where dt<='$do_date'

- group by user_level, sku_id

- )

- insert overwrite table ads_ul_rep_ratio

- select

- *

- from(

- select

- user_level,

- sku_id,

- sum(if(order_count >=1, 1, 0)) buy_count,

- sum(if(order_count >=2, 1, 0)) buy_twice_count,

- sum(if(order_count >=2, 1, 0)) / sum(if(order_count >=1, 1, 0)) * 100 buy_twice_rate,

- row_number() over(partition by user_level order by sum(if(order_count >=2, 1, 0)) / sum(if(order_count >=1, 1, 0)) desc) rn,

- '$do_date'

- from tmp_count

- group by user_level, sku_id

- ) t1

- where rn<=10

- "

-

- $hive -e "$sql"

2)增加脚本执行权限

- [kgg@hadoop102 bin]$ chmod 777 ads_user_total_count.sh

3)脚本使用

- [kgg@hadoop102 module]$ ads_user_total_count.sh 2019-02-20

4)查询结果

- hive (gmall)> select * from ads_user_total_count;

5)脚本执行时间

复制代码

最新经典文章,欢迎关注公众号

|

/2

/2