This post was last edited by levycui on 2019-1-1 16:25.

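Reading guide:

1. How do we declare a random filter and create a convolutional layer?

2. How do we declare a maxpool layer function?

3. How do we declare the width and height of the maxpool window?

4. How do we use min-max scaling to normalize features to between 0 and 1?

Previous post: TensorFlow ML Cookbook, Chapter 6, Sections 2 and 3: Working with Gates and Activation Functions; Implementing a One-Layer Neural Network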

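Implementing Different Layers

It is important to know how to implement different layers. In the previous recipe, we implemented fully connected layers. In this recipe we extend that knowledge to other layer types.

Getting ready

We have explored how to connect data inputs to a fully connected hidden layer. TensorFlow has more types of layers available as built-in functions. The most commonly used are convolutional layers and maxpool layers. We will show how to create and use such layers with input data and with fully connected data. First we will look at how to use these layers on one-dimensional data, and then on two-dimensional data.

While neural networks can be layered in any fashion, one of the most common designs uses convolutional layers and fully connected layers to first create features. If there are too many features, it is common to add a maxpool layer. After these layers, non-linear layers are commonly introduced as activation functions. Convolutional neural networks (CNNs), which we will consider in Chapter 8, Convolutional Neural Networks, usually have the form convolutional, maxpool, activation, convolutional, maxpool, activation, and so on.

How to do it…

We will first look at one-dimensional data. We generate a random array of data for this task:

1. We start by loading the libraries we need and starting a graph session: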

[mw_shl_code=python,true]import tensorflow as tf

import numpy as np

sess = tf.Session() [/mw_shl_code]

2. Now we can initialize our data (a NumPy array of length 25) and create the placeholder that we will feed it through:

[mw_shl_code=python,true]data_size = 25

data_1d = np.random.normal(size=data_size)

x_input_1d = tf.placeholder(dtype=tf.float32, shape=[data_size]) [/mw_shl_code]

3. We will now define a function that makes a convolutional layer. Then we will declare a random filter and create the convolutional layer:

[mw_shl_code=python,true]def conv_layer_1d(input_1d, my_filter):
    # Make 1d input into 4d
    input_2d = tf.expand_dims(input_1d, 0)
    input_3d = tf.expand_dims(input_2d, 0)
    input_4d = tf.expand_dims(input_3d, 3)
    # Perform convolution
    convolution_output = tf.nn.conv2d(input_4d, filter=my_filter, strides=[1,1,1,1], padding="VALID")
    # Now drop extra dimensions
    conv_output_1d = tf.squeeze(convolution_output)
    return(conv_output_1d)

my_filter = tf.Variable(tf.random_normal(shape=[1,5,1,1]))
my_convolution_output = conv_layer_1d(x_input_1d, my_filter)[/mw_shl_code]
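To make the shape arithmetic concrete, here is a minimal pure-Python sketch of a one-dimensional convolution with no padding. It is not the TensorFlow implementation; the helper names and the fixed filter values are hypothetical and chosen only for illustration. Like `tf.nn.conv2d`, it slides the filter without flipping it (a cross-correlation).

```python
def valid_output_length(input_length, filter_length, stride=1):
    """Number of positions where the filter fits when no padding is added."""
    return (input_length - filter_length) // stride + 1


def cross_correlate_1d(signal, kernel, stride=1):
    """Slide `kernel` over `signal` (no flipping) and sum the products."""
    k = len(kernel)
    n_out = valid_output_length(len(signal), k, stride)
    return [
        sum(signal[start + j] * kernel[j] for j in range(k))
        for start in range(0, n_out * stride, stride)
    ]


signal = [float(i) for i in range(25)]  # stand-in for the random input of length 25
kernel = [1.0, 0.0, 0.0, 0.0, -1.0]     # hypothetical filter of length 5
output = cross_correlate_1d(signal, kernel)

print(len(output))  # 21, because (25 - 5) // 1 + 1 = 21
print(output[:3])   # [-4.0, -4.0, -4.0]
```

The same length formula explains the two-dimensional case later in the recipe: a 10x10 input with a 2x2 filter and stride 2 gives (10 - 2) // 2 + 1 = 5 positions per side.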

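4. TensorFlow's activation functions act element-wise by default. This means we only have to call the activation function on the layer of interest. We do this by creating an activation function and then initializing it on the graph: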

[mw_shl_code=python,true]def activation(input_1d):
    return(tf.nn.relu(input_1d))

my_activation_output = activation(my_convolution_output)[/mw_shl_code]

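5. Now we will declare a maxpool layer function. This function takes the maximum over a moving window across our one-dimensional vector. For this example, we give the window a width of 5: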

[mw_shl_code=python,true]def max_pool(input_1d, width):
    # First we make the 1d input into 4d.
    input_2d = tf.expand_dims(input_1d, 0)
    input_3d = tf.expand_dims(input_2d, 0)
    input_4d = tf.expand_dims(input_3d, 3)
    # Perform the max pool operation
    pool_output = tf.nn.max_pool(input_4d, ksize=[1, 1, width, 1], strides=[1, 1, 1, 1], padding='VALID')
    pool_output_1d = tf.squeeze(pool_output)
    return(pool_output_1d)

my_maxpool_output = max_pool(my_activation_output, width=5)[/mw_shl_code]

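6. The final layer that we will connect is the fully connected layer. We want a versatile function that takes a 1D array as input and outputs the indicated number of values. Remember that to do matrix multiplication with a 1D array, we must first expand its dimensions to 2D: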

[mw_shl_code=python,true]def fully_connected(input_layer, num_outputs):
    # Create weights
    # (tf.stack is the TensorFlow 1.x name for the older tf.pack)
    weight_shape = tf.squeeze(tf.stack([tf.shape(input_layer), [num_outputs]]))
    weight = tf.random_normal(weight_shape, stddev=0.1)
    bias = tf.random_normal(shape=[num_outputs])
    # Make input into 2d
    input_layer_2d = tf.expand_dims(input_layer, 0)
    # Perform fully connected operations
    full_output = tf.add(tf.matmul(input_layer_2d, weight), bias)
    # Drop extra dimensions
    full_output_1d = tf.squeeze(full_output)
    return(full_output_1d)

my_full_output = fully_connected(my_maxpool_output, 5)[/mw_shl_code]

7. Now we will initialize all the variables, run the graph, and print the output of each layer:

[mw_shl_code=python,true]init = tf.initialize_all_variables()
sess.run(init)
feed_dict = {x_input_1d: data_1d}

# Convolution Output
print('Input = array of length 25')
print('Convolution w/filter, length = 5, stride size = 1, results in an array of length 21:')
print(sess.run(my_convolution_output, feed_dict=feed_dict))

# Activation Output
print('\nInput = the above array of length 21')
print('ReLU element wise returns the array of length 21:')
print(sess.run(my_activation_output, feed_dict=feed_dict))

# Maxpool Output
print('\nInput = the above array of length 21')
print('MaxPool, window length = 5, stride size = 1, results in the array of length 17:')
print(sess.run(my_maxpool_output, feed_dict=feed_dict))

# Fully Connected Output
print('\nInput = the above array of length 17')
print('Fully connected layer on all four rows with five outputs:')
print(sess.run(my_full_output, feed_dict=feed_dict))[/mw_shl_code]

8. This results in the following output:

[mw_shl_code=python,true]Input = array of length 25

Convolution w/filter, length = 5, stride size = 1, results in an array of length 21:

[-0.91608119 1.53731811 -0.7954089 0.5041104 1.88933098

-1.81099761 0.56695032 1.17945457 -0.66252393 -1.90287709

0.87184119 0.84611893 -5.25024986 -0.05473572 2.19293165

-4.47577858 -1.71364677 3.96857905 -2.0452652 -1.86647367

-0.12697852]

Input = the above array of length 21

ReLU element wise returns the array of length 21:

[ 0. 1.53731811 0. 0.5041104 1.88933098

0. 0. 1.17945457 0. 0.

0.87184119 0.84611893 0. 0. 2.19293165

0. 0. 3.96857905 0. 0.

0. ]

Input = the above array of length 21

MaxPool, window length = 5, stride size = 1, results in the array of length 17:

[ 1.88933098 1.88933098 1.88933098 1.88933098 1.88933098

1.17945457 1.17945457 1.17945457 0.87184119 0.87184119

2.19293165 2.19293165 2.19293165 3.96857905 3.96857905

3.96857905 3.96857905]

Input = the above array of length 17

Fully connected layer on all four rows with five outputs:

[ 1.23588216 -0.42116445 1.44521213 1.40348077 -0.79607368][/mw_shl_code]
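As a cross-check on the maxpool step, the following pure-Python sketch recomputes the pooled values from the ReLU array printed above. It does not use TensorFlow; `max_pool_1d` is a hypothetical helper written only to illustrate the moving-window maximum with a width of 5 and a stride of 1.

```python
def max_pool_1d(values, width, stride=1):
    """Maximum over each window of `width` consecutive entries, no padding."""
    n_out = (len(values) - width) // stride + 1
    return [max(values[i * stride:i * stride + width]) for i in range(n_out)]


# ReLU output copied from the printed results above (length 21)
relu_out = [0.0, 1.53731811, 0.0, 0.5041104, 1.88933098,
            0.0, 0.0, 1.17945457, 0.0, 0.0,
            0.87184119, 0.84611893, 0.0, 0.0, 2.19293165,
            0.0, 0.0, 3.96857905, 0.0, 0.0,
            0.0]

pooled = max_pool_1d(relu_out, width=5)
print(len(pooled))  # 17, because 21 - 5 + 1 = 17
print(pooled)
```

Each value persists in the pooled output for as long as it remains the largest entry inside the window, which is why the printed result contains runs of repeated numbers.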

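We will now consider the same types of layers in the same order, but for two-dimensional data:

1. We start by clearing and resetting the computational graph: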

[mw_shl_code=python,true]from tensorflow.python.framework import ops

ops.reset_default_graph()
sess = tf.Session()[/mw_shl_code]

2. First, we initialize our input array as a 10x10 matrix, and then we initialize a placeholder for the graph with the same shape:

[mw_shl_code=python,true]data_size = [10,10]

data_2d = np.random.normal(size=data_size)

x_input_2d = tf.placeholder(dtype=tf.float32, shape=data_size) [/mw_shl_code]

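3. Just as in the one-dimensional example, we declare a convolutional layer function. Since our data already has a height and a width, we only need to expand it by two dimensions (a batch size of 1 and a channel size of 1) so that we can operate on it with the conv2d() function. For the filter, we use a random 2x2 filter, a stride of two in both directions, and valid padding (no zero padding). Because our input matrix is 10x10, the convolutional output will be 5x5: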

[mw_shl_code=python,true]def conv_layer_2d(input_2d, my_filter):
    # First, change 2d input to 4d
    input_3d = tf.expand_dims(input_2d, 0)
    input_4d = tf.expand_dims(input_3d, 3)
    # Perform convolution
    convolution_output = tf.nn.conv2d(input_4d, filter=my_filter, strides=[1,2,2,1], padding="VALID")
    # Drop extra dimensions
    conv_output_2d = tf.squeeze(convolution_output)
    return(conv_output_2d)

my_filter = tf.Variable(tf.random_normal(shape=[2,2,1,1]))
my_convolution_output = conv_layer_2d(x_input_2d, my_filter)[/mw_shl_code]

4. The activation function works element by element, so now we can create an activation operation and initialize it on the graph:

[mw_shl_code=python,true]def activation(input_2d):
    return(tf.nn.relu(input_2d))

my_activation_output = activation(my_convolution_output)[/mw_shl_code]

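5. Our maxpool layer is very similar to the one-dimensional case, except that we have to declare both a width and a height for the maxpool window. Just as with our 2D convolutional layer, this time we only have to expand the input by two dimensions: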

[mw_shl_code=python,true]def max_pool(input_2d, width, height):
    # Make 2d input into 4d
    input_3d = tf.expand_dims(input_2d, 0)
    input_4d = tf.expand_dims(input_3d, 3)
    # Perform max pool
    pool_output = tf.nn.max_pool(input_4d, ksize=[1, height, width, 1], strides=[1, 1, 1, 1], padding='VALID')
    # Drop extra dimensions
    pool_output_2d = tf.squeeze(pool_output)
    return(pool_output_2d)

my_maxpool_output = max_pool(my_activation_output, width=2, height=2)[/mw_shl_code]

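6. Our fully connected layer is very similar to the one-dimensional version. Note that the 2D input to this layer is treated as a single object, so we want every entry connected to every output. To accomplish this, we fully flatten the two-dimensional matrix and then expand it for matrix multiplication: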

[mw_shl_code=python,true]def fully_connected(input_layer, num_outputs):
    # Flatten into 1d
    flat_input = tf.reshape(input_layer, [-1])
    # Create weights
    # (tf.stack is the TensorFlow 1.x name for the older tf.pack)
    weight_shape = tf.squeeze(tf.stack([tf.shape(flat_input), [num_outputs]]))
    weight = tf.random_normal(weight_shape, stddev=0.1)
    bias = tf.random_normal(shape=[num_outputs])
    # Change into 2d
    input_2d = tf.expand_dims(flat_input, 0)
    # Perform fully connected operations
    full_output = tf.add(tf.matmul(input_2d, weight), bias)
    # Drop extra dimensions
    full_output_2d = tf.squeeze(full_output)
    return(full_output_2d)

my_full_output = fully_connected(my_maxpool_output, 5)[/mw_shl_code]

7. We will now initialize our variables and create a feed dictionary for our operations:

[mw_shl_code=python,true]init = tf.initialize_all_variables()

sess.run(init)

feed_dict = {x_input_2d: data_2d} [/mw_shl_code]

8. Here is how we can see the output of each layer:

[mw_shl_code=python,true]# Convolution Output
print('Input = [10 X 10] array')
print('2x2 Convolution, stride size = [2x2], results in the [5x5] array:')
print(sess.run(my_convolution_output, feed_dict=feed_dict))

# Activation Output
print('\nInput = the above [5x5] array')
print('ReLU element wise returns the [5x5] array:')
print(sess.run(my_activation_output, feed_dict=feed_dict))

# Max Pool Output
print('\nInput = the above [5x5] array')
print('MaxPool, stride size = [1x1], results in the [4x4] array:')
print(sess.run(my_maxpool_output, feed_dict=feed_dict))

# Fully Connected Output
print('\nInput = the above [4x4] array')
print('Fully connected layer on all four rows with five outputs:')
print(sess.run(my_full_output, feed_dict=feed_dict))[/mw_shl_code]

9. This results in the following output:

[mw_shl_code=python,true]Input = [10 X 10] array

2x2 Convolution, stride size = [2x2], results in the [5x5] array:

[[ 0.37630892 -1.41018617 -2.58821273 -0.32302785 1.18970704]

[-4.33685207 1.97415686 1.0844903 -1.18965471 0.84643292]

[ 5.23706436 2.46556497 -0.95119286 1.17715418 4.1117816 ]

[ 5.86972761 1.2213701 1.59536231 2.66231227 2.28650784]

[-0.88964868 -2.75502229 4.3449688 2.67776585 -2.23714781]]

Input = the above [5x5] array

ReLU element wise returns the [5x5] array:

[[ 0.37630892 0. 0. 0. 1.18970704]

[ 0. 1.97415686 1.0844903 0. 0.84643292]

[ 5.23706436 2.46556497 0. 1.17715418 4.1117816 ]

[ 5.86972761 1.2213701 1.59536231 2.66231227 2.28650784]

[ 0. 0. 4.3449688 2.67776585 0. ]]

Input = the above [5x5] array

MaxPool, stride size = [1x1], results in the [4x4] array:

[[ 1.97415686 1.97415686 1.0844903 1.18970704]

[ 5.23706436 2.46556497 1.17715418 4.1117816 ]

[ 5.86972761 2.46556497 2.66231227 4.1117816 ]

[ 5.86972761 4.3449688 4.3449688 2.67776585]]

Input = the above [4x4] array

Fully connected layer on all four rows with five outputs:

[-0.6154139 -1.96987963 -1.88811922 0.20010889 0.32519674] [/mw_shl_code]
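The ReLU and maxpool steps of the two-dimensional run can be reproduced by hand from the printed convolution output. The sketch below is plain Python rather than TensorFlow; `relu_2d` and `max_pool_2d` are hypothetical helper names used only for this illustration.

```python
def relu_2d(matrix):
    """Element-wise max(0, x) over a list of rows."""
    return [[max(0.0, v) for v in row] for row in matrix]


def max_pool_2d(matrix, height, width, stride=1):
    """Maximum over each height x width window, no padding."""
    rows = (len(matrix) - height) // stride + 1
    cols = (len(matrix[0]) - width) // stride + 1
    return [
        [
            max(matrix[r * stride + dr][c * stride + dc]
                for dr in range(height) for dc in range(width))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# 5x5 convolution output copied from the printed results above
conv_out = [
    [0.37630892, -1.41018617, -2.58821273, -0.32302785, 1.18970704],
    [-4.33685207, 1.97415686, 1.0844903, -1.18965471, 0.84643292],
    [5.23706436, 2.46556497, -0.95119286, 1.17715418, 4.1117816],
    [5.86972761, 1.2213701, 1.59536231, 2.66231227, 2.28650784],
    [-0.88964868, -2.75502229, 4.3449688, 2.67776585, -2.23714781],
]

pooled = max_pool_2d(relu_2d(conv_out), height=2, width=2)
for row in pooled:
    print(row)
```

The four printed rows agree with the [4x4] maxpool array shown in the output above.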

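How it works…

We can now see how to use convolutional and maxpool layers in TensorFlow with one-dimensional and two-dimensional data. Regardless of the shape of the input, we ended up with an output of the same size. This illustrates the flexibility of neural network layers. This section should also impress upon us again the importance of shapes and sizes in neural network operations.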
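The shape bookkeeping for both pipelines can be traced with a few lines of arithmetic. This is only a sketch: `valid_size` is a hypothetical helper, and the tuples describe the weight-matrix shapes implied by the recipe rather than anything read back from TensorFlow.

```python
def valid_size(size, window, stride=1):
    """Output size along one axis for a window applied with no padding."""
    return (size - window) // stride + 1


# 1D pipeline: 25 -> conv (width 5) -> ReLU -> maxpool (width 5) -> fully connected (5)
len_conv = valid_size(25, 5)               # 21
len_pool = valid_size(len_conv, 5)         # 17
weights_1d = (len_pool, 5)                 # weight matrix shape (17, 5)

# 2D pipeline: 10x10 -> conv (2x2, stride 2) -> ReLU -> maxpool (2x2) -> flatten -> fully connected (5)
side_conv = valid_size(10, 2, stride=2)    # 5
side_pool = valid_size(side_conv, 2)       # 4
flat_length = side_pool * side_pool        # 16
weights_2d = (flat_length, 5)              # weight matrix shape (16, 5)

print(len_conv, len_pool, weights_1d)
print(side_conv, side_pool, flat_length, weights_2d)
```

Both weight matrices end with a dimension of 5, which is why the two pipelines produce outputs of the same length despite starting from differently shaped inputs.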

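Using a Multilayer Neural Network

We will now apply our knowledge of different layers to real data by using a multilayer neural network on the Low Birthweight dataset.

Getting ready

Now that we know how to create neural networks and work with layers, we will apply this methodology to predicting the birthweight in the Low Birthweight dataset. We will create a neural network with three hidden layers. The dataset includes the actual birthweight and an indicator variable for whether the birthweight is above or below 2,500 grams. In this example, we make the actual birthweight the target (regression), then check the classification accuracy at the end to see whether our model can identify if the birthweight will be under 2,500 grams.

How to do it…

1. First, we load the libraries and initialize our computational graph: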

[mw_shl_code=python,true]import tensorflow as tf

import matplotlib.pyplot as plt

import requests

import numpy as np

sess = tf.Session() [/mw_shl_code]

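2. Now we load the data from the website using the requests module. After this, we split the data into the features of interest and the target value: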

[mw_shl_code=python,true]birthdata_url = 'https://www.umass.edu/statdata/statdata/data/lowbwt.dat'
birth_file = requests.get(birthdata_url)
birth_data = birth_file.text.split('\r\n')[5:]
birth_header = [x for x in birth_data[0].split(' ') if len(x)>=1]
birth_data = [[float(x) for x in y.split(' ') if len(x)>=1] for y in birth_data[1:] if len(y)>=1]
y_vals = np.array([x[10] for x in birth_data])
cols_of_interest = ['AGE', 'LWT', 'RACE', 'SMOKE', 'PTL', 'HT', 'UI', 'FTV']
x_vals = np.array([[x[ix] for ix, feature in enumerate(birth_header) if feature in cols_of_interest] for x in birth_data])[/mw_shl_code]

3. To help with repeatability, we set the random seed for both NumPy and TensorFlow. Then we declare our batch size:

[mw_shl_code=python,true]seed = 3

tf.set_random_seed(seed)

np.random.seed(seed)

batch_size = 100 [/mw_shl_code]

4. Next we split the data into an 80-20 train-test split. After this, we normalize our input features to lie between 0 and 1 using min-max scaling:

[mw_shl_code=python,true]train_indices = np.random.choice(len(x_vals), round(len(x_vals)*0.8), replace=False)
test_indices = np.array(list(set(range(len(x_vals))) - set(train_indices)))
x_vals_train = x_vals[train_indices]
x_vals_test = x_vals[test_indices]
y_vals_train = y_vals[train_indices]
y_vals_test = y_vals[test_indices]

def normalize_cols(m):
    col_max = m.max(axis=0)
    col_min = m.min(axis=0)
    return (m-col_min) / (col_max - col_min)

x_vals_train = np.nan_to_num(normalize_cols(x_vals_train))
x_vals_test = np.nan_to_num(normalize_cols(x_vals_test))[/mw_shl_code]
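To show what min-max scaling does to each column, here is a small standard-library sketch. It is an illustration rather than the recipe's NumPy code: `min_max_normalize` is a hypothetical helper, the three sample rows are made up, and a constant column is mapped to 0 explicitly, which mirrors what `np.nan_to_num` does to the 0/0 result in the recipe.

```python
def min_max_normalize(rows):
    """Scale each column of a list of rows to [0, 1]; constant columns map to 0."""
    columns = list(zip(*rows))
    col_min = [min(c) for c in columns]
    col_max = [max(c) for c in columns]
    return [
        [
            (v - lo) / (hi - lo) if hi > lo else 0.0
            for v, lo, hi in zip(row, col_min, col_max)
        ]
        for row in rows
    ]


# Hypothetical rows: two varying features and one constant column
sample = [
    [19.0, 182.0, 0.0],
    [33.0, 155.0, 0.0],
    [20.0, 105.0, 0.0],
]

scaled = min_max_normalize(sample)
for row in scaled:
    print(row)
```

In every varying column the smallest value becomes 0 and the largest becomes 1, so features measured on very different scales end up comparable.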

5. Since we will have multiple layers with similarly initialized variables, we create functions to initialize the weights and the biases:

[mw_shl_code=python,true]def init_weight(shape, st_dev):
    weight = tf.Variable(tf.random_normal(shape, stddev=st_dev))
    return(weight)

def init_bias(shape, st_dev):
    bias = tf.Variable(tf.random_normal(shape, stddev=st_dev))
    return(bias)[/mw_shl_code]

6. Next we initialize our placeholders. There will be eight input features and one output, the birthweight in grams:

[mw_shl_code=python,true]x_data = tf.placeholder(shape=[None, 8], dtype=tf.float32)

y_target = tf.placeholder(shape=[None, 1], dtype=tf.float32) [/mw_shl_code]

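7. The fully connected layer will be used three times, once for each hidden layer. To avoid repeating code, we create a layer function to use when we initialize our model: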

[mw_shl_code=python,true]def fully_connected(input_layer, weights, biases):
    layer = tf.add(tf.matmul(input_layer, weights), biases)
    return(tf.nn.relu(layer))[/mw_shl_code]

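8. We will now create our model. For each hidden layer and for the output layer, we initialize a weight matrix, a bias vector, and the fully connected layer. For this example, we use hidden layers of sizes 25, 10, and 3: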

[mw_shl_code=python,true]# Create first layer (25 hidden nodes)

weight_1 = init_weight(shape=[8, 25], st_dev=10.0)

bias_1 = init_bias(shape=[25], st_dev=10.0)

layer_1 = fully_connected(x_data, weight_1, bias_1)

# Create second layer (10 hidden nodes)

weight_2 = init_weight(shape=[25, 10], st_dev=10.0)

bias_2 = init_bias(shape=[10], st_dev=10.0)

layer_2 = fully_connected(layer_1, weight_2, bias_2)

# Create third layer (3 hidden nodes)

weight_3 = init_weight(shape=[10, 3], st_dev=10.0)

bias_3 = init_bias(shape=[3], st_dev=10.0)

layer_3 = fully_connected(layer_2, weight_3, bias_3)

# Create output layer (1 output value)

weight_4 = init_weight(shape=[3, 1], st_dev=10.0)

bias_4 = init_bias(shape=[1], st_dev=10.0)

final_output = fully_connected(layer_3, weight_4, bias_4) [/mw_shl_code]
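As a quick sanity check on the size of this model, the number of trainable values can be counted from the layer sizes alone. The sketch below assumes only the sizes stated in the recipe (8 inputs, hidden layers of 25, 10, and 3, and 1 output); `count_parameters` is a hypothetical helper.

```python
def count_parameters(sizes):
    """Weights (n_in * n_out) plus biases (n_out) for each consecutive pair of layers."""
    total = 0
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        total += n_in * n_out + n_out
    return total


layer_sizes = [8, 25, 10, 3, 1]
print(count_parameters(layer_sizes))  # 225 + 260 + 33 + 4 = 522
```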

9. We now use the L1 loss function (absolute value), declare our optimizer (Adam optimization), and initialize our variables:

[mw_shl_code=python,true]loss = tf.reduce_mean(tf.abs(y_target - final_output))

my_opt = tf.train.AdamOptimizer(0.05)

train_step = my_opt.minimize(loss)

init = tf.initialize_all_variables()

sess.run(init)[/mw_shl_code]
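For reference, the L1 loss used here is the mean absolute difference between targets and predictions. A minimal sketch in plain Python, with made-up birthweights in grams that are not taken from the dataset:

```python
def l1_loss(targets, predictions):
    """Mean absolute error between two equally long sequences."""
    return sum(abs(t - p) for t, p in zip(targets, predictions)) / len(targets)


# Hypothetical targets and predictions, in grams
targets = [2523.0, 2551.0, 2557.0]
predictions = [2400.0, 2600.0, 2557.0]

print(l1_loss(targets, predictions))  # (123 + 49 + 0) / 3
```

Because the loss is measured in grams, the loss values printed during training can be read directly as an average prediction error in grams.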

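10. Next we train our model for 200 iterations. We also include code that stores the train and test loss, selects a random batch, and prints the status every 25 generations: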

[mw_shl_code=python,true]# Initialize the loss vectors
loss_vec = []
test_loss = []
for i in range(200):
    # Choose random indices for batch selection
    rand_index = np.random.choice(len(x_vals_train), size=batch_size)
    # Get random batch
    rand_x = x_vals_train[rand_index]
    rand_y = np.transpose([y_vals_train[rand_index]])
    # Run the training step
    sess.run(train_step, feed_dict={x_data: rand_x, y_target: rand_y})
    # Get and store the train loss
    temp_loss = sess.run(loss, feed_dict={x_data: rand_x, y_target: rand_y})
    loss_vec.append(temp_loss)
    # Get and store the test loss
    test_temp_loss = sess.run(loss, feed_dict={x_data: x_vals_test, y_target: np.transpose([y_vals_test])})
    test_loss.append(test_temp_loss)
    if (i+1)%25==0:
        print('Generation: ' + str(i+1) + '. Loss = ' + str(temp_loss))[/mw_shl_code]
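The batch selection in the loop draws row indices with replacement, since `np.random.choice` samples with replacement unless told otherwise. The standard-library sketch below illustrates the same idea; the training-set size of 151 is an assumption made for this example, and `sample_batch_indices` is a hypothetical helper.

```python
import random


def sample_batch_indices(n_rows, batch_size, rng):
    """Draw `batch_size` row indices uniformly at random, with replacement."""
    return rng.choices(range(n_rows), k=batch_size)


rng = random.Random(3)  # seeded so repeated runs give the same batch
indices = sample_batch_indices(n_rows=151, batch_size=100, rng=rng)

# Sampling with replacement means some rows can appear more than once in a batch.
print(len(indices), len(set(indices)))
```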

11. This results in the following output:

[mw_shl_code=python,true]Generation: 25. Loss = 5922.52

Generation: 50. Loss = 2861.66

Generation: 75. Loss = 2342.01

Generation: 100. Loss = 1880.59

Generation: 125. Loss = 1394.39

Generation: 150. Loss = 1062.43

Generation: 175. Loss = 834.641

Generation: 200. Loss = 848.54 [/mw_shl_code]

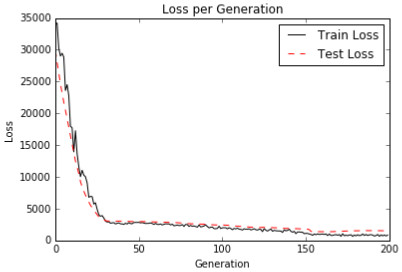

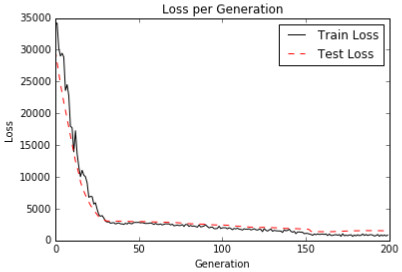

12. Here is a snippet of code that plots the train and test loss with matplotlib:

[mw_shl_code=python,true]plt.plot(loss_vec, 'k-', label='Train Loss')

plt.plot(test_loss, 'r--', label='Test Loss')

plt.title('Loss per Generation')

plt.xlabel('Generation')

plt.ylabel('Loss')

plt.legend(loc='upper right')

plt.show()[/mw_shl_code]

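Figure 6: Here we plot the train and test loss for the neural network that we trained to predict the birthweight in grams. Notice that we arrive at a good model after only about 30 generations.

13. Now we want to compare our birthweight results with our earlier logistic results. With logistic linear regression (see the Implementing Logistic Regression recipe in Chapter 3, Linear Regression), we achieved around 60% accuracy after thousands of iterations. To compare that with what we have done here, we take the train and test regression outputs and turn them into classification results by creating an indicator for whether they are above or below 2,500 grams. The following code computes this model's accuracy: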

[mw_shl_code=python,true]actuals = np.array([x[1] for x in birth_data])
test_actuals = actuals[test_indices]
train_actuals = actuals[train_indices]
test_preds = [x[0] for x in sess.run(final_output, feed_dict={x_data: x_vals_test})]
train_preds = [x[0] for x in sess.run(final_output, feed_dict={x_data: x_vals_train})]
test_preds = np.array([1.0 if x<2500.0 else 0.0 for x in test_preds])
train_preds = np.array([1.0 if x<2500.0 else 0.0 for x in train_preds])

# Print out accuracies
test_acc = np.mean([x==y for x,y in zip(test_preds, test_actuals)])
train_acc = np.mean([x==y for x,y in zip(train_preds, train_actuals)])
print('On predicting the category of low birthweight from regression output (<2500g):')
print('Test Accuracy: {}'.format(test_acc))
print('Train Accuracy: {}'.format(train_acc))[/mw_shl_code]

14. This results in the following output:

[mw_shl_code=python,true]Test Accuracy: 0.5526315789473685

Train Accuracy: 0.6688741721854304 [/mw_shl_code]
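The conversion from a regression output to a classification accuracy can be illustrated without TensorFlow. In this sketch the predicted weights and the actual low-birthweight flags are made-up values, and `low_birthweight_accuracy` is a hypothetical helper that applies the same 2,500 gram threshold as the code above.

```python
def low_birthweight_accuracy(predicted_grams, actual_flags, threshold=2500.0):
    """Flag predictions below `threshold` as 1.0, then compare with the actual flags."""
    predicted_flags = [1.0 if g < threshold else 0.0 for g in predicted_grams]
    matches = [p == a for p, a in zip(predicted_flags, actual_flags)]
    return sum(matches) / len(matches)


# Hypothetical predictions (grams) and actual indicator values
predicted_grams = [2300.0, 2700.0, 2499.0, 3100.0]
actual_flags = [1.0, 0.0, 0.0, 0.0]

print(low_birthweight_accuracy(predicted_grams, actual_flags))  # 0.75
```

Three of the four made-up cases agree after thresholding. The third prediction falls just under 2,500 grams while its actual flag is 0, so it counts as a miss.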

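How it works…

In this recipe, we created a regression neural network with three fully connected hidden layers to predict the birthweight in the Low Birthweight dataset. When we compared this with a logistic output for predicting above or below 2,500 grams, we achieved similar results in fewer generations. In the next recipe, we will try to improve on logistic regression by making it a multilayer logistic-type neural network.

Original text: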

Implementing Different Layers

It is important to know how to implement different layers. In the prior recipe, we implemented fully connected layers. We will expand our knowledge of various layers in this recipe.

Getting ready

We have explored how to connect between data inputs and a fully connected hidden layer. There are more types of layers that are built-in functions inside TensorFlow. The most popular layers that are used are convolutional layers and maxpool layers. We will show you how to create and use such layers with input data and with fully connected data. First we will look at how to use these layers on one-dimensional data, and then on two-dimensional data.

While neural networks can be layered in any fashion, one of the most common uses is to use convolutional layers and fully connected layers to first create features. If we have too many features, it is common to have a maxpool layer. After these layers, non-linear layers are commonly introduced as activation functions. Convolutional neural networks (CNNs), which we will consider in Chapter 8, Convolutional Neural Networks, usually have the form Convolutional, maxpool, activation, convolutional, maxpool, activation, and so on.

How to do it…

We will first look at one-dimensional data. We generate a random array of data for this task:

1.We'll start by loading the libraries we need and starting a graph session:

import tensorflow as tf

import numpy as np

sess = tf.Session()

2.Now we can initialize our data (NumPy array of length 25) and create the placeholder that we will feed it through:

data_size = 25

data_1d = np.random.normal(size=data_size)

x_input_1d = tf.placeholder(dtype=tf.float32, shape=[data_size])

3.We will now define a function that will make a convolutional layer. Then we will declare a random filter and create the convolutional layer:

def conv_layer_1d(input_1d, my_filter):
    # Make 1d input into 4d
    input_2d = tf.expand_dims(input_1d, 0)
    input_3d = tf.expand_dims(input_2d, 0)
    input_4d = tf.expand_dims(input_3d, 3)
    # Perform convolution
    convolution_output = tf.nn.conv2d(input_4d, filter=my_filter, strides=[1,1,1,1], padding="VALID")
    # Now drop extra dimensions
    conv_output_1d = tf.squeeze(convolution_output)
    return(conv_output_1d)

my_filter = tf.Variable(tf.random_normal(shape=[1,5,1,1]))
my_convolution_output = conv_layer_1d(x_input_1d, my_filter)

4.TensorFlow's activation functions will act element-wise by default. This means we just have to call our activation function on the layer of interest. We do this by creating an activation function and then initializing it on the graph:

def activation(input_1d):
    return(tf.nn.relu(input_1d))

my_activation_output = activation(my_convolution_output)

5.Now we'll declare a maxpool layer function. This function will create a maxpool on a moving window across our one-dimensional vector. For this example, we will initialize it to have a width of 5:

def max_pool(input_1d, width):
    # First we make the 1d input into 4d.
    input_2d = tf.expand_dims(input_1d, 0)
    input_3d = tf.expand_dims(input_2d, 0)
    input_4d = tf.expand_dims(input_3d, 3)
    # Perform the max pool operation
    pool_output = tf.nn.max_pool(input_4d, ksize=[1, 1, width, 1], strides=[1, 1, 1, 1], padding='VALID')
    pool_output_1d = tf.squeeze(pool_output)
    return(pool_output_1d)

my_maxpool_output = max_pool(my_activation_output, width=5)

6.The final layer that we will connect is the fully connected layer. We want to create a versatile function that inputs a 1D array and outputs the number of values indicated. Also remember that to do matrix multiplication with a 1D array, we must expand the dimensions into 2D:

def fully_connected(input_layer, num_outputs):
    # Create weights
    # (tf.stack is the TensorFlow 1.x name for the older tf.pack)
    weight_shape = tf.squeeze(tf.stack([tf.shape(input_layer), [num_outputs]]))
    weight = tf.random_normal(weight_shape, stddev=0.1)
    bias = tf.random_normal(shape=[num_outputs])
    # Make input into 2d
    input_layer_2d = tf.expand_dims(input_layer, 0)
    # Perform fully connected operations
    full_output = tf.add(tf.matmul(input_layer_2d, weight), bias)
    # Drop extra dimensions
    full_output_1d = tf.squeeze(full_output)
    return(full_output_1d)

my_full_output = fully_connected(my_maxpool_output, 5)

7.Now we'll initialize all the variables and run the graph and print the output of each of the layers:

init = tf.initialize_all_variables()

sess.run(init)

feed_dict = {x_input_1d: data_1d}

# Convolution Output
print('Input = array of length 25')
print('Convolution w/filter, length = 5, stride size = 1, results in an array of length 21:')
print(sess.run(my_convolution_output, feed_dict=feed_dict))

# Activation Output
print('\nInput = the above array of length 21')
print('ReLU element wise returns the array of length 21:')
print(sess.run(my_activation_output, feed_dict=feed_dict))

# Maxpool Output
print('\nInput = the above array of length 21')
print('MaxPool, window length = 5, stride size = 1, results in the array of length 17:')
print(sess.run(my_maxpool_output, feed_dict=feed_dict))

# Fully Connected Output
print('\nInput = the above array of length 17')
print('Fully connected layer on all four rows with five outputs:')
print(sess.run(my_full_output, feed_dict=feed_dict))

8.This results in the following output:

Input = array of length 25

Convolution w/filter, length = 5, stride size = 1, results in an array of length 21:

[-0.91608119 1.53731811 -0.7954089 0.5041104 1.88933098

-1.81099761 0.56695032 1.17945457 -0.66252393 -1.90287709

0.87184119 0.84611893 -5.25024986 -0.05473572 2.19293165

-4.47577858 -1.71364677 3.96857905 -2.0452652 -1.86647367

-0.12697852]

Input = the above array of length 21

ReLU element wise returns the array of length 21:

[ 0. 1.53731811 0. 0.5041104 1.88933098

0. 0. 1.17945457 0. 0.

0.87184119 0.84611893 0. 0. 2.19293165

0. 0. 3.96857905 0. 0.

0. ]

Input = the above array of length 21

MaxPool, window length = 5, stride size = 1, results in the array of length 17:

[ 1.88933098 1.88933098 1.88933098 1.88933098 1.88933098

1.17945457 1.17945457 1.17945457 0.87184119 0.87184119

2.19293165 2.19293165 2.19293165 3.96857905 3.96857905

3.96857905 3.96857905]

Input = the above array of length 17

Fully connected layer on all four rows with five outputs:

[ 1.23588216 -0.42116445 1.44521213 1.40348077 -0.79607368]

We will now consider the same types of layers in an equivalent order but for two-dimensional data:

1.We will start by clearing and resetting the computational graph:

from tensorflow.python.framework import ops

ops.reset_default_graph()
sess = tf.Session()

2.First of all, we will initialize our input array to be a 10x10 matrix, and then we will initialize a placeholder for the graph with the same shape:

data_size = [10,10]

data_2d = np.random.normal(size=data_size)

x_input_2d = tf.placeholder(dtype=tf.float32, shape=data_size)

3.Just as in the one-dimensional example, we declare a convolutional layer function. Since our data has a height and width already, we just need to expand it in two dimensions (a batch size of 1, and a channel size of 1) so that we can operate on it with the conv2d() function. For the filter, we will use a random 2x2 filter, stride two in both directions, and use valid padding (no zero padding). Because our input matrix is 10x10, our convolutional output will be 5x5:

def conv_layer_2d(input_2d, my_filter):
    # First, change 2d input to 4d
    input_3d = tf.expand_dims(input_2d, 0)
    input_4d = tf.expand_dims(input_3d, 3)
    # Perform convolution
    convolution_output = tf.nn.conv2d(input_4d, filter=my_filter, strides=[1,2,2,1], padding="VALID")
    # Drop extra dimensions
    conv_output_2d = tf.squeeze(convolution_output)
    return(conv_output_2d)

my_filter = tf.Variable(tf.random_normal(shape=[2,2,1,1]))
my_convolution_output = conv_layer_2d(x_input_2d, my_filter)

4.The activation function works on an element-wise basis, so now we can create an activation operation and initialize it on the graph:

def activation(input_2d):
    return(tf.nn.relu(input_2d))

my_activation_output = activation(my_convolution_output)

5.Our maxpool layer is very similar to the one-dimensional case except we have to declare a width and height for the maxpool window. Just like our convolutional 2D layer, we only have to expand our input in two dimensions this time:

def max_pool(input_2d, width, height):
    # Make 2d input into 4d
    input_3d = tf.expand_dims(input_2d, 0)
    input_4d = tf.expand_dims(input_3d, 3)
    # Perform max pool
    pool_output = tf.nn.max_pool(input_4d, ksize=[1, height, width, 1], strides=[1, 1, 1, 1], padding='VALID')
    # Drop extra dimensions
    pool_output_2d = tf.squeeze(pool_output)
    return(pool_output_2d)

my_maxpool_output = max_pool(my_activation_output, width=2, height=2)

6.Our fully connected layer is very similar to the one-dimensional output. We should also note here that the 2D input to this layer is considered as one object, so we want each of the entries connected to each of the outputs. In order to accomplish this, we fully flatten out the two-dimensional matrix and then expand it for matrix multiplication:

def fully_connected(input_layer, num_outputs):
    # Flatten into 1d
    flat_input = tf.reshape(input_layer, [-1])
    # Create weights
    # (tf.stack is the TensorFlow 1.x name for the older tf.pack)
    weight_shape = tf.squeeze(tf.stack([tf.shape(flat_input), [num_outputs]]))
    weight = tf.random_normal(weight_shape, stddev=0.1)
    bias = tf.random_normal(shape=[num_outputs])
    # Change into 2d
    input_2d = tf.expand_dims(flat_input, 0)
    # Perform fully connected operations
    full_output = tf.add(tf.matmul(input_2d, weight), bias)
    # Drop extra dimensions
    full_output_2d = tf.squeeze(full_output)
    return(full_output_2d)

my_full_output = fully_connected(my_maxpool_output, 5)

7.We'll now initialize our variables and create a feed dictionary for our operations:

init = tf.initialize_all_variables()

sess.run(init)

feed_dict = {x_input_2d: data_2d}

8.And here is how we can see the outputs for each of the layers:

# Convolution Output
print('Input = [10 X 10] array')
print('2x2 Convolution, stride size = [2x2], results in the [5x5] array:')
print(sess.run(my_convolution_output, feed_dict=feed_dict))

# Activation Output
print('\nInput = the above [5x5] array')
print('ReLU element wise returns the [5x5] array:')
print(sess.run(my_activation_output, feed_dict=feed_dict))

# Max Pool Output
print('\nInput = the above [5x5] array')
print('MaxPool, stride size = [1x1], results in the [4x4] array:')
print(sess.run(my_maxpool_output, feed_dict=feed_dict))

# Fully Connected Output
print('\nInput = the above [4x4] array')
print('Fully connected layer on all four rows with five outputs:')
print(sess.run(my_full_output, feed_dict=feed_dict))

9.This results in the following output:

Input = [10 X 10] array

2x2 Convolution, stride size = [2x2], results in the [5x5] array:

[[ 0.37630892 -1.41018617 -2.58821273 -0.32302785 1.18970704]

[-4.33685207 1.97415686 1.0844903 -1.18965471 0.84643292]

[ 5.23706436 2.46556497 -0.95119286 1.17715418 4.1117816 ]

[ 5.86972761 1.2213701 1.59536231 2.66231227 2.28650784]

[-0.88964868 -2.75502229 4.3449688 2.67776585 -2.23714781]]

Input = the above [5x5] array

ReLU element wise returns the [5x5] array:

[[ 0.37630892 0. 0. 0. 1.18970704]

[ 0. 1.97415686 1.0844903 0. 0.84643292]

[ 5.23706436 2.46556497 0. 1.17715418 4.1117816 ]

[ 5.86972761 1.2213701 1.59536231 2.66231227 2.28650784]

[ 0. 0. 4.3449688 2.67776585 0. ]]

Input = the above [5x5] array

MaxPool, stride size = [1x1], results in the [4x4] array:

[[ 1.97415686 1.97415686 1.0844903 1.18970704]

[ 5.23706436 2.46556497 1.17715418 4.1117816 ]

[ 5.86972761 2.46556497 2.66231227 4.1117816 ]

[ 5.86972761 4.3449688 4.3449688 2.67776585]]

Input = the above [4x4] array

Fully connected layer on all four rows with five outputs:

[-0.6154139 -1.96987963 -1.88811922 0.20010889 0.32519674]

How it works…

We can now see how to use the convolutional and maxpool layers in TensorFlow with one-dimensional and two-dimensional data. Regardless of the shape of the input, we ended up with the same size output. This is important to illustrate the flexibility of neural network layers. This section should also impress upon us again the importance of shapes and sizes in neural network operations.

Using a Multilayer Neural Network

We will now apply our knowledge of different layers to real data by using a multilayer neural network on the Low Birthweight dataset.

Getting ready

Now that we know how to create neural networks and work with layers, we will apply this methodology towards predicting the birthweight in the Low Birthweight dataset. We'll create a neural network with three hidden layers. The dataset includes the actual birthweight and an indicator variable for whether the birthweight is above or below 2,500 grams. In this example, we'll make the target the actual birthweight (regression), then check the classification accuracy at the end to see whether our model can identify if the birthweight will be under 2,500 grams.

How to do it…

1.First we'll start by loading the libraries and initializing our computational graph:

import tensorflow as tf

import matplotlib.pyplot as plt

import requests

import numpy as np

sess = tf.Session()

2.Now we'll load the data from the website using the requests module. After this, we will split the data into the features of interest and the target value:

birthdata_url = 'https://www.umass.edu/statdata/statdata/data/lowbwt.dat'
birth_file = requests.get(birthdata_url)
birth_data = birth_file.text.split('\r\n')[5:]
birth_header = [x for x in birth_data[0].split(' ') if len(x)>=1]
birth_data = [[float(x) for x in y.split(' ') if len(x)>=1] for y in birth_data[1:] if len(y)>=1]
y_vals = np.array([x[10] for x in birth_data])
cols_of_interest = ['AGE', 'LWT', 'RACE', 'SMOKE', 'PTL', 'HT', 'UI', 'FTV']
x_vals = np.array([[x[ix] for ix, feature in enumerate(birth_header) if feature in cols_of_interest] for x in birth_data])

3.To help with repeatability, we set the random seed for both NumPy and TensorFlow. Then we declare our batch size:

seed = 3

tf.set_random_seed(seed)

np.random.seed(seed)

batch_size = 100

4.Next we'll split the data into an 80-20 train-test split. After this, we will normalize our input features to be between zero and one with a min-max scaling:

train_indices = np.random.choice(len(x_vals), round(len(x_vals)*0.8), replace=False)
test_indices = np.array(list(set(range(len(x_vals))) - set(train_indices)))
x_vals_train = x_vals[train_indices]
x_vals_test = x_vals[test_indices]
y_vals_train = y_vals[train_indices]
y_vals_test = y_vals[test_indices]

def normalize_cols(m):
    col_max = m.max(axis=0)
    col_min = m.min(axis=0)
    return (m-col_min) / (col_max - col_min)

x_vals_train = np.nan_to_num(normalize_cols(x_vals_train))
x_vals_test = np.nan_to_num(normalize_cols(x_vals_test))

5.Since we will have multiple layers that have similar initialized variables, we will create a function to initialize both the weights and the bias:

def init_weight(shape, st_dev):
    weight = tf.Variable(tf.random_normal(shape, stddev=st_dev))
    return(weight)

def init_bias(shape, st_dev):
    bias = tf.Variable(tf.random_normal(shape, stddev=st_dev))
    return(bias)

6.We'll initialize our placeholders next. There will be eight input features and one output, the birthweight in grams:

x_data = tf.placeholder(shape=[None, 8], dtype=tf.float32)

y_target = tf.placeholder(shape=[None, 1], dtype=tf.float32)

7.The fully connected layer will be used for all three hidden layers and for the output layer. To prevent repeated code, we will create a layer function to use when we initialize our model:

def fully_connected(input_layer, weights, biases):

    layer = tf.add(tf.matmul(input_layer, weights), biases)

    return(tf.nn.relu(layer))

8.We'll now create our model. For each layer (and output layer), we will initialize a weight matrix, bias matrix, and the fully connected layer. For this example, we will use hidden layers of sizes 25, 10, and 3:

# Create first layer (25 hidden nodes)

weight_1 = init_weight(shape=[8, 25], st_dev=10.0)

bias_1 = init_bias(shape=[25], st_dev=10.0)

layer_1 = fully_connected(x_data, weight_1, bias_1)

# Create second layer (10 hidden nodes)

weight_2 = init_weight(shape=[25, 10], st_dev=10.0)

bias_2 = init_bias(shape=[10], st_dev=10.0)

layer_2 = fully_connected(layer_1, weight_2, bias_2)

# Create third layer (3 hidden nodes)

weight_3 = init_weight(shape=[10, 3], st_dev=10.0)

bias_3 = init_bias(shape=[3], st_dev=10.0)

layer_3 = fully_connected(layer_2, weight_3, bias_3)

# Create output layer (1 output value)

weight_4 = init_weight(shape=[3, 1], st_dev=10.0)

bias_4 = init_bias(shape=[1], st_dev=10.0)

final_output = fully_connected(layer_3, weight_4, bias_4)
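The shapes flowing through this 8-25-10-3-1 stack can be checked without TensorFlow. Below is a rough NumPy sketch of the same forward pass; the random batch, the `RandomState` seed, and the helper names are assumptions for illustration only, and nothing here is trained.

```python
import numpy as np

rng = np.random.RandomState(3)

def fully_connected(x, w, b):
    # Affine transform followed by ReLU, mirroring the recipe's layer.
    return np.maximum(x @ w + b, 0.0)

# Input width, three hidden widths, and the single output.
layer_sizes = [8, 25, 10, 3, 1]
params = [(rng.normal(scale=10.0, size=(n_in, n_out)),
           rng.normal(scale=10.0, size=(n_out,)))
          for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]

batch = rng.uniform(size=(5, 8))   # five made-up rows scaled to [0, 1]
activations = [batch]
for w, b in params:
    activations.append(fully_connected(activations[-1], w, b))

shapes = [a.shape for a in activations]
print(shapes)

# Total number of trainable values across all weights and biases.
n_params = sum(w.size + b.size for w, b in params)
print(n_params)
```

Because the output layer also passes through ReLU, the predictions can never be negative, which is harmless for a target measured in grams.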

9.We'll now use the L1 loss function (absolute value), declare our optimizer (Adam optimization), and initialize our variables:

loss = tf.reduce_mean(tf.abs(y_target - final_output))

my_opt = tf.train.AdamOptimizer(0.05)

train_step = my_opt.minimize(loss)

init = tf.global_variables_initializer()

sess.run(init)
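As a quick illustration of what the L1 loss measures, here is a small NumPy sketch with made-up target and predicted weights. The loss is the mean absolute difference, so it is expressed in the same units as the target (grams).

```python
import numpy as np

# Made-up birthweights in grams, shaped as columns like y_target.
y_true = np.array([[2500.0], [3000.0], [2000.0]])
y_pred = np.array([[2400.0], [3300.0], [2000.0]])

# Mean absolute error: (100 + 300 + 0) / 3
l1_loss = np.mean(np.abs(y_true - y_pred))
print(l1_loss)
```

Read this way, a printed loss of a few hundred means the predictions on that batch are off by a few hundred grams on average.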

10.Next we will train our model for 200 iterations. We'll also include code that will store the train and test loss, select a random batch of training rows, and print the status every 25 generations:

# Initialize the loss vectors

loss_vec = []

test_loss = []

for i in range(200):

    # Choose random indices for batch selection

    rand_index = np.random.choice(len(x_vals_train), size=batch_size)

    # Get random batch

    rand_x = x_vals_train[rand_index]

    rand_y = np.transpose([y_vals_train[rand_index]])

    # Run the training step

    sess.run(train_step, feed_dict={x_data: rand_x, y_target: rand_y})

    # Get and store the train loss

    temp_loss = sess.run(loss, feed_dict={x_data: rand_x, y_target: rand_y})

    loss_vec.append(temp_loss)

    # Get and store the test loss

    test_temp_loss = sess.run(loss, feed_dict={x_data: x_vals_test, y_target: np.transpose([y_vals_test])})

    test_loss.append(test_temp_loss)

    if (i+1)%25==0:

        print('Generation: ' + str(i+1) + '. Loss = ' + str(temp_loss))
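The batch selection and the `np.transpose([...])` reshape can be looked at in isolation. This is a sketch with placeholder arrays; the row count of 151 and the random values are assumptions, not the real data.

```python
import numpy as np

np.random.seed(3)

x_train = np.random.uniform(size=(151, 8))          # placeholder features
y_train = np.random.uniform(2000, 4000, size=151)   # placeholder targets, 1-D

batch_size = 100
# np.random.choice samples with replacement by default, so a batch
# may contain the same row more than once.
rand_index = np.random.choice(len(x_train), size=batch_size)

rand_x = x_train[rand_index]
rand_y = np.transpose([y_train[rand_index]])   # (100,) -> (1, 100) -> (100, 1)

print(rand_x.shape, rand_y.shape)

# Without the reshape, a (100, 1) prediction minus a (100,) target
# broadcasts to (100, 100) instead of an element-wise difference.
fake_pred = np.zeros((batch_size, 1))
bad_diff_shape = (fake_pred - y_train[rand_index]).shape
good_diff_shape = (fake_pred - rand_y).shape
print(bad_diff_shape, good_diff_shape)
```

The column shape matches the `[None, 1]` placeholder declared for `y_target`.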

11.This results in the following output:

Generation: 25. Loss = 5922.52

Generation: 50. Loss = 2861.66

Generation: 75. Loss = 2342.01

Generation: 100. Loss = 1880.59

Generation: 125. Loss = 1394.39

Generation: 150. Loss = 1062.43

Generation: 175. Loss = 834.641

Generation: 200. Loss = 848.54

12.Here is a snippet of code that plots the train and test loss with matplotlib:

plt.plot(loss_vec, 'k-', label='Train Loss')

plt.plot(test_loss, 'r--', label='Test Loss')

plt.title('Loss per Generation')

plt.xlabel('Generation')

plt.ylabel('Loss')

plt.legend(loc='upper right')

plt.show()

Figure 6: Here we plot the train and test loss for the neural network that we trained to predict the birthweight in grams. Notice that after only about 30 generations we have arrived at a good model.

13.Now we want to compare our birthweight results to our prior logistic results. In logistic regression (see the Implementing Logistic Regression recipe in Chapter 3, Linear Regression), we achieved around 60% accuracy after thousands of iterations. To compare this with what we have done here, we will take the train/test regression outputs and turn them into classification results by creating an indicator for whether they are above or below 2,500 grams. Here is the code to find out what this model's accuracy is likely to be:

actuals = np.array([x[1] for x in birth_data])

test_actuals = actuals[test_indices]

train_actuals = actuals[train_indices]

test_preds = [x[0] for x in sess.run(final_output, feed_dict={x_data: x_vals_test})]

train_preds = [x[0] for x in sess.run(final_output, feed_dict={x_data: x_vals_train})]

test_preds = np.array([1.0 if x<2500.0 else 0.0 for x in test_preds])

train_preds = np.array([1.0 if x<2500.0 else 0.0 for x in train_preds])

# Print out accuracies

test_acc = np.mean([x==y for x,y in zip(test_preds, test_actuals)])

train_acc = np.mean([x==y for x,y in zip(train_preds, train_actuals)])

print('On predicting the category of low birthweight from regression output (<2500g):')

print('Test Accuracy: {}'.format(test_acc))

print('Train Accuracy: {}'.format(train_acc))
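The conversion from regression output to a class label can be sketched on a handful of made-up numbers. The predicted weights and the true flags below are hypothetical; only the 2,500 gram threshold comes from the recipe.

```python
import numpy as np

# Made-up predicted weights (grams) and true low-birthweight flags.
pred_grams = np.array([2300.0, 2700.0, 2450.0, 3100.0, 2600.0])
actual_low = np.array([1.0, 0.0, 0.0, 0.0, 1.0])

# A prediction below 2,500 grams counts as "low birthweight" (1.0).
pred_low = np.where(pred_grams < 2500.0, 1.0, 0.0)

# Accuracy is the fraction of rows where the flag matches.
accuracy = np.mean(pred_low == actual_low)

print(pred_low)
print(accuracy)
```

Here three of the five flags match, so the accuracy is 0.6.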

14.This results in the following output:

Test Accuracy: 0.5526315789473685

Train Accuracy: 0.6688741721854304
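As a sanity check on these numbers, the printed accuracies can be turned back into fractions. The sketch below uses only the two printed values; reading the denominators as the test and train set sizes is an assumption, since a reduced fraction only bounds the true count from below.

```python
from fractions import Fraction

test_acc = 0.5526315789473685
train_acc = 0.6688741721854304

# Closest fractions with a small denominator.
test_frac = Fraction(test_acc).limit_denominator(1000)
train_frac = Fraction(train_acc).limit_denominator(1000)
print(test_frac, train_frac)

# If the denominators are the set sizes, they should match an 80-20 split.
n_rows = test_frac.denominator + train_frac.denominator
print(n_rows, round(n_rows * 0.8))
```

The fractions come out as 21/38 and 101/151, and 151 is exactly `round(189 * 0.8)`, which is consistent with the split in step 4 applied to 189 rows.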

How it works…

In this recipe, we created a regression neural network with three fully connected hidden layers to predict birthweight in the low-birthweight data set. When we converted the regression output into a prediction of above or below 2,500 grams, we achieved results similar to the logistic regression, and in fewer generations. In the next recipe, we will try to improve our logistic regression by making it a multiple-layer logistic-type neural network.
