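Reading guide:

1. How do we use Word2vec to make predictions?

2. How do we declare our model operations and loss function?

3. How do we use Doc2vec for sentiment analysis?

4. How do we declare placeholders for the Doc2vec indices and the target word indices?

Previous article: TensorFlow ML Cookbook, Chapter 7, Sections 2 and 3: Implementing TF-IDF and Working with Skip-gram Embeddings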

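Making Predictions with Word2vec

In this recipe, we use the previously learned embedding strategies to perform classification.

Getting ready

Now that we have created and saved the CBOW word embeddings, we need to use them to make sentiment predictions on the movie data set. In this recipe, we learn how to load previously trained embeddings and use them for sentiment analysis by training a logistic linear model that predicts whether a review is good or bad.

Sentiment analysis is a really hard task because human language makes it very hard to grasp the subtleties and nuances of the true meaning. Sarcasm, jokes, and ambiguous references all make the task considerably harder. We will fit a simple logistic regression on the movie review data set to see whether we can get any information out of the CBOW embeddings we created and saved in the prior recipe. Since the focus of this recipe is loading and using saved embeddings, we will not pursue more complicated models.

How to do it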

1. We begin by loading the necessary libraries and starting a graph session:

[mw_shl_code=python,true]import tensorflow as tf
import matplotlib.pyplot as plt
import numpy as np
import random
import os
import pickle
import string
import requests
import collections
import io
import tarfile
import urllib.request
import text_helpers
from nltk.corpus import stopwords
sess = tf.Session()[/mw_shl_code]

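2. Now we declare the model parameters. Note that the embedding size must be the same as the one we used to create the CBOW embeddings earlier. Use the following code:

[mw_shl_code=python,true]embedding_size = 200
vocabulary_size = 2000
batch_size = 100
max_words = 100
stops = stopwords.words('english')[/mw_shl_code]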

3. We load and transform the text data using the text_helpers.py file we created. Use the following code:

[mw_shl_code=python,true]data_folder_name = 'temp'
texts, target = text_helpers.load_movie_data(data_folder_name)
# Normalize text
print('Normalizing Text Data')
texts = text_helpers.normalize_text(texts, stops)
# Texts must contain at least 3 words
target = [target[ix] for ix, x in enumerate(texts) if len(x.split()) > 2]
texts = [x for x in texts if len(x.split()) > 2]
# Use 80% of the reviews for training and the rest for testing
train_indices = np.random.choice(len(target), round(0.8*len(target)), replace=False)
test_indices = np.array(list(set(range(len(target))) - set(train_indices)))
texts_train = [x for ix, x in enumerate(texts) if ix in train_indices]
texts_test = [x for ix, x in enumerate(texts) if ix in test_indices]
target_train = np.array([x for ix, x in enumerate(target) if ix in train_indices])
target_test = np.array([x for ix, x in enumerate(target) if ix in test_indices])
[/mw_shl_code]
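The split above checks `ix in train_indices` against a NumPy array, which scans the array once per review. A minimal sketch of the same 80/20 split with set membership, using toy data instead of the movie reviews (`split_indices`, `toy_texts` and `toy_target` are illustrative names, not part of text_helpers):

```python
import random

def split_indices(n_items, train_fraction=0.8, seed=0):
    """Return sorted (train, test) index lists that partition range(n_items)."""
    rng = random.Random(seed)
    n_train = round(train_fraction * n_items)
    train = set(rng.sample(range(n_items), n_train))
    test = set(range(n_items)) - train
    return sorted(train), sorted(test)

# Toy stand-ins for the normalized reviews and their 0/1 sentiment labels
toy_texts = ['review number {}'.format(i) for i in range(10)]
toy_target = [i % 2 for i in range(10)]

train_ix, test_ix = split_indices(len(toy_texts))
train_set = set(train_ix)  # constant-time membership checks

texts_train = [x for ix, x in enumerate(toy_texts) if ix in train_set]
texts_test = [x for ix, x in enumerate(toy_texts) if ix not in train_set]
target_train = [toy_target[ix] for ix in train_ix]
target_test = [toy_target[ix] for ix in test_ix]
```

Keeping the index lists sorted means the texts and the targets stay aligned, which is the property the recipe relies on.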

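4. We now load the word dictionary that we created while fitting the CBOW embeddings. Loading it is important so that we have exactly the same mapping from words to embedding indices, as follows:

[mw_shl_code=python,true]dict_file = os.path.join(data_folder_name, 'movie_vocab.pkl')
# Open the file in a with block so that it is closed after loading
with open(dict_file, 'rb') as f:
    word_dictionary = pickle.load(f)[/mw_shl_code]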
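The embedding rows only make sense together with the word-to-index mapping used during training, so the dictionary has to survive the round trip through disk unchanged. A small sketch of saving and reloading such a mapping with `pickle`; the temporary directory and the toy vocabulary are assumptions made for illustration:

```python
import os
import pickle
import tempfile

# Toy vocabulary standing in for the dictionary built during CBOW training
toy_dictionary = {'RARE': 0, 'movie': 1, 'great': 2, 'bad': 3}

with tempfile.TemporaryDirectory() as folder:
    dict_file = os.path.join(folder, 'movie_vocab.pkl')
    # Save the mapping next to where the embeddings would be stored
    with open(dict_file, 'wb') as f:
        pickle.dump(toy_dictionary, f)
    # Load it back, as the prediction script does
    with open(dict_file, 'rb') as f:
        restored = pickle.load(f)

# The restored mapping must agree with the one used to train the embeddings
same_mapping = restored == toy_dictionary
```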

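5. We can now use our word dictionary to convert the loaded sentence data into lists of word indices (they become a NumPy array once they are padded in the next step):

[mw_shl_code=python,true]# Keep these as plain lists: reviews differ in length, and recent NumPy versions
# refuse to build an array from lists of unequal length
text_data_train = text_helpers.text_to_numbers(texts_train, word_dictionary)
text_data_test = text_helpers.text_to_numbers(texts_test, word_dictionary)[/mw_shl_code]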

6. Since movie reviews have different lengths, we standardize them all to the same length, which in our case is 100 words. If a review has fewer than 100 words, we pad it with zeros. Use the following code:

[mw_shl_code=python,true]text_data_train = np.array([x[0:max_words] for x in [y+[0]*max_words for y in text_data_train]])
text_data_test = np.array([x[0:max_words] for x in [y+[0]*max_words for y in text_data_test]])[/mw_shl_code]
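The pad-then-crop expression above is compact but easy to misread. A minimal sketch of the same idea on two toy reviews (`pad_or_crop` is a hypothetical helper name, not a text_helpers function):

```python
def pad_or_crop(indices, max_words, pad_value=0):
    """Return exactly max_words indices: crop long lists, right-pad short ones."""
    # Appending max_words pad values guarantees the slice is always long enough
    return (list(indices) + [pad_value] * max_words)[:max_words]

short_review = [5, 12, 7]
long_review = list(range(1, 9))

padded = pad_or_crop(short_review, max_words=5)   # zeros appended on the right
cropped = pad_or_crop(long_review, max_words=5)   # only the first five indices kept
```

Note that 0 is also a valid dictionary index, so padded positions are looked up like whatever word holds index 0.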

7. Now we declare the model variables and placeholders for the logistic regression. Use the following code:

[mw_shl_code=python,true]A = tf.Variable(tf.random_normal(shape=[embedding_size,1]))
b = tf.Variable(tf.random_normal(shape=[1,1]))
# Initialize placeholders
x_data = tf.placeholder(shape=[None, max_words], dtype=tf.int32)
y_target = tf.placeholder(shape=[None, 1], dtype=tf.float32)[/mw_shl_code]

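8. For TensorFlow to restore our previously trained embeddings, we must first give the saver a variable to restore into, so we create an embedding variable with the same shape as the embeddings we will load:

[mw_shl_code=python,true]embeddings = tf.Variable(tf.random_uniform([vocabulary_size, embedding_size], -1.0, 1.0))[/mw_shl_code]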

9. Now we put the embedding lookup function on the graph and take the average of the embeddings of all the words in the review. Use the following code:

[mw_shl_code=python,true]embed = tf.nn.embedding_lookup(embeddings, x_data)
# Take average of all word embeddings in documents
embed_avg = tf.reduce_mean(embed, 1)[/mw_shl_code]
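To make the lookup-and-average step concrete, here is a plain-Python sketch on a tiny, made-up embedding table (`average_embedding` and `toy_embeddings` are illustrative names). It also shows that padded positions contribute the row for index 0 to the mean:

```python
def average_embedding(embedding_table, word_indices):
    """Mean of the embedding rows selected by word_indices."""
    rows = [embedding_table[ix] for ix in word_indices]
    size = len(rows[0])
    return [sum(row[d] for row in rows) / len(rows) for d in range(size)]

toy_embeddings = [
    [0.0, 0.0],  # index 0, which is also the padding index
    [1.0, 2.0],  # index 1
    [3.0, 4.0],  # index 2
]

# A two-word review padded to four positions
review = [1, 2, 0, 0]
doc_vector = average_embedding(toy_embeddings, review)
```

Here the two padding positions halve the average, which is one reason a heavily padded short review can end up with a weak signal.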

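10. Next, we declare our model operations and loss function, remembering that the loss function already has the sigmoid operation built in, as follows:

[mw_shl_code=python,true]model_output = tf.add(tf.matmul(embed_avg, A), b)
# Declare loss function (Cross Entropy loss)
# (TensorFlow 1.0 and later require keyword arguments here: logits=..., labels=...)
loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(model_output, y_target))[/mw_shl_code]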
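The reason for passing raw logits to the loss, rather than applying a sigmoid first, is numerical stability. The TensorFlow documentation gives the stable form as max(x, 0) - x*z + log(1 + exp(-|x|)) for logit x and label z. A plain-Python sketch comparing it with the naive formula (the function names are illustrative):

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def naive_cross_entropy(logit, label):
    # Direct formula; breaks down once sigmoid(logit) rounds to exactly 0 or 1
    p = sigmoid(logit)
    return -(label * math.log(p) + (1.0 - label) * math.log(1.0 - p))

def stable_cross_entropy(logit, label):
    # max(x, 0) - x * z + log(1 + exp(-|x|))
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))

# The two forms agree for moderate logits
losses = [(naive_cross_entropy(x, z), stable_cross_entropy(x, z))
          for x in (-2.0, 0.0, 3.0) for z in (0.0, 1.0)]

# The stable form still works where the naive one would take log(0)
large_logit_loss = stable_cross_entropy(1000.0, 0.0)
```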

11. Now we add prediction and accuracy functions to the graph so that we can evaluate the accuracy while the model is training. Use the following code:

[mw_shl_code=python,true]prediction = tf.round(tf.sigmoid(model_output))
predictions_correct = tf.cast(tf.equal(prediction, y_target), tf.float32)
accuracy = tf.reduce_mean(predictions_correct)[/mw_shl_code]

12. We declare an optimizer function and initialize the model variables as follows:

[mw_shl_code=python,true]my_opt = tf.train.AdagradOptimizer(0.005)
train_step = my_opt.minimize(loss)
init = tf.initialize_all_variables()
sess.run(init)[/mw_shl_code]

13. Now that we have a randomly initialized embedding variable, we can tell the Saver to load our previously trained CBOW embeddings into it. Use the following code:

[mw_shl_code=python,true]model_checkpoint_path = os.path.join(data_folder_name,'cbow_movie_embeddings.ckpt')
saver = tf.train.Saver({"embeddings": embeddings})
saver.restore(sess, model_checkpoint_path)[/mw_shl_code]

14. Now we can start the training generations. Note that every 100 generations we save the training and test loss and accuracy, and we only print the model status every 500 generations. Use the following code:

[mw_shl_code=python,true]train_loss = []
test_loss = []
train_acc = []
test_acc = []
i_data = []
for i in range(10000):
    rand_index = np.random.choice(text_data_train.shape[0], size=batch_size)
    rand_x = text_data_train[rand_index]
    rand_y = np.transpose([target_train[rand_index]])
    sess.run(train_step, feed_dict={x_data: rand_x, y_target: rand_y})
    # Only record loss and accuracy every 100 generations
    if (i+1)%100==0:
        i_data.append(i+1)
        train_loss_temp = sess.run(loss, feed_dict={x_data: rand_x, y_target: rand_y})
        train_loss.append(train_loss_temp)
        test_loss_temp = sess.run(loss, feed_dict={x_data: text_data_test, y_target: np.transpose([target_test])})
        test_loss.append(test_loss_temp)
        train_acc_temp = sess.run(accuracy, feed_dict={x_data: rand_x, y_target: rand_y})
        train_acc.append(train_acc_temp)
        test_acc_temp = sess.run(accuracy, feed_dict={x_data: text_data_test, y_target: np.transpose([target_test])})
        test_acc.append(test_acc_temp)
    # Only print the model status every 500 generations
    if (i+1)%500==0:
        acc_and_loss = [i+1, train_loss_temp, test_loss_temp, train_acc_temp, test_acc_temp]
        acc_and_loss = [np.round(x,2) for x in acc_and_loss]
        print('Generation # {}. Train Loss (Test Loss): {:.2f} ({:.2f}). Train Acc (Test Acc): {:.2f} ({:.2f})'.format(*acc_and_loss))[/mw_shl_code]

15. This results in the following output:

[mw_shl_code=python,true]Generation # 500. Train Loss (Test Loss): 0.70 (0.71). Train Acc (Test Acc): 0.52 (0.48)
Generation # 1000. Train Loss (Test Loss): 0.69 (0.72). Train Acc (Test Acc): 0.56 (0.47)
...
Generation # 9500. Train Loss (Test Loss): 0.69 (0.70). Train Acc (Test Acc): 0.57 (0.55)
Generation # 10000. Train Loss (Test Loss): 0.70 (0.70). Train Acc (Test Acc): 0.59 (0.55)[/mw_shl_code]

16. The following code plots the training and test loss and accuracy that we saved every 100 generations:

[mw_shl_code=python,true]# Plot loss over time
plt.plot(i_data, train_loss, 'k-', label='Train Loss')
plt.plot(i_data, test_loss, 'r--', label='Test Loss', linewidth=4)
plt.title('Cross Entropy Loss per Generation')
plt.xlabel('Generation')
plt.ylabel('Cross Entropy Loss')
plt.legend(loc='upper right')
plt.show()
# Plot train and test accuracy
plt.plot(i_data, train_acc, 'k-', label='Train Set Accuracy')
plt.plot(i_data, test_acc, 'r--', label='Test Set Accuracy', linewidth=4)
plt.title('Train and Test Accuracy')
plt.xlabel('Generation')
plt.ylabel('Accuracy')
plt.legend(loc='lower right')
plt.show()[/mw_shl_code]

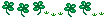

Figure 6: The train and test loss over 10,000 generations.

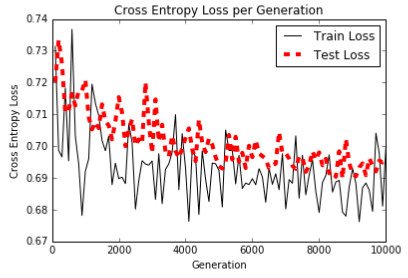

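Figure 7: The train and test set accuracy improves slowly over 10,000 generations. Note that this model performs very poorly and is only slightly better than a random predictor.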

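How it works

We loaded our previously trained CBOW embeddings and performed logistic regression on the average embedding of each review. The important technique to note here is how we load model variables from disk into already initialized variables in the current model. We also have to remember to store and load the vocabulary dictionary that was created before training the embeddings. When reusing an embedding, it is very important to keep the same mapping from words to embedding indices.

There's more

We can see that we achieve almost 60% accuracy in predicting the sentiment. This is a hard task: for example, it is difficult to know the meaning behind the word great, which can appear in a negative or a positive context within a review.

To tackle this problem, we want to somehow create embeddings for the documents themselves that can address the sentiment issue. Usually, a whole review is either positive or negative. We can use this to our advantage in the Using Doc2vec for sentiment analysis recipe.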

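Using Doc2vec for Sentiment Analysis

Now that we know how to train word embeddings, we can extend those methods to produce document embeddings. We explore how to do this with TensorFlow in this recipe.

Getting ready

In the previous sections about Word2vec methods, we managed to capture positional relationships between words. What we have not done is capture the relationship of words to the document (or movie review) that they come from. One extension of Word2vec that captures a document effect is called Doc2vec.

The basic idea of Doc2vec is to introduce a document embedding alongside the word embeddings, which may help to capture the tone of the document. For example, just knowing that the words movie and love are near each other may not help us determine the sentiment of the review. The review may be about how the writer loves the movie or about how they do not love it. But if the review is long enough and more negative words are found in the document, we may pick up on an overall tone that helps us predict the next words.

Doc2vec simply adds an additional embedding matrix for the documents and uses a window of words plus the document index to predict the next word. All word windows in a document share the same document index. It is important to think about how we combine the document embedding with the word embeddings. We combine the word embeddings in the word window by taking their sum, and there are two main ways to combine this sum with the document embedding: the document embedding is either added to the word embeddings or concatenated to the end of them. If we add the two embeddings, the document embedding size must equal the word embedding size. If we concatenate, we lift that restriction but increase the number of variables that the logistic regression must deal with. For illustrative purposes, we show how to deal with concatenation in this recipe. In general, though, addition is the better choice for smaller datasets.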
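The size constraint described above can be seen on toy vectors. The following is only an illustrative sketch with made-up numbers and names (`sum_vectors`, `doc_vec_same`, `doc_vec_small`), not the TensorFlow graph used in the recipe:

```python
def sum_vectors(vectors):
    """Element-wise sum of equally sized vectors."""
    return [sum(values) for values in zip(*vectors)]

word_window = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]  # two word embeddings of size 3
doc_vec_same = [1.0, 1.0, 1.0]                     # document embedding of size 3
doc_vec_small = [9.0, 9.0]                         # document embedding of size 2

# Word embeddings in the window are summed first
window_sum = sum_vectors(word_window)

# Addition: the document embedding must have the word embedding size
added = sum_vectors([window_sum, doc_vec_same])

# Concatenation: sizes may differ, but the result is longer
concatenated = window_sum + doc_vec_small
```

With concatenation the downstream model sees a vector of size word size plus document size, which is the `concatenated_size` used later in the recipe.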

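The first step is to fit both the document and the word embeddings on the whole corpus of movie reviews. We then perform a train-test split, train a logistic model, and see whether we can improve the accuracy of predicting the review sentiment.

How to do it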

1. We start by loading the necessary libraries and starting a graph session, as follows:

[mw_shl_code=python,true]import tensorflow as tf
import matplotlib.pyplot as plt
import numpy as np
import random
import os
import pickle
import string
import requests
import collections
import io
import tarfile
import urllib.request
import text_helpers
from nltk.corpus import stopwords
sess = tf.Session()[/mw_shl_code]

2. We load the movie review corpus, just as we did in the prior two recipes. Use the following code:

[mw_shl_code=python,true]data_folder_name = 'temp'
if not os.path.exists(data_folder_name):
    os.makedirs(data_folder_name)
texts, target = text_helpers.load_movie_data(data_folder_name)[/mw_shl_code]

3. We declare the model parameters. See the following:

[mw_shl_code=python,true]batch_size = 500
vocabulary_size = 7500
generations = 100000
model_learning_rate = 0.001
embedding_size = 200 # Word embedding size
doc_embedding_size = 100 # Document embedding size
concatenated_size = embedding_size + doc_embedding_size
num_sampled = int(batch_size/2)
window_size = 3 # How many words to consider to the left.
# Add checkpoints to training
save_embeddings_every = 5000
print_valid_every = 5000
print_loss_every = 100
# Declare stop words
stops = stopwords.words('english')
# We pick a few test words.
valid_words = ['love', 'hate', 'happy', 'sad', 'man', 'woman'][/mw_shl_code]

4. We normalize the movie reviews and make sure that each review is longer than the desired window size. Use the following code:

[mw_shl_code=python,true]texts = text_helpers.normalize_text(texts, stops)
# Texts must contain at least as much as the prior window size
target = [target[ix] for ix, x in enumerate(texts) if len(x.split()) > window_size]
texts = [x for x in texts if len(x.split()) > window_size]
assert(len(target)==len(texts))[/mw_shl_code]

5. Now we create the word dictionary. Note that we do not have to create a document dictionary: the document index is simply the position of the document, so each document has a unique index. See the following code:

[mw_shl_code=python,true]word_dictionary = text_helpers.build_dictionary(texts, vocabulary_size)
word_dictionary_rev = dict(zip(word_dictionary.values(), word_dictionary.keys()))
text_data = text_helpers.text_to_numbers(texts, word_dictionary)
# Get validation word keys
valid_examples = [word_dictionary[x] for x in valid_words][/mw_shl_code]

6. Next we define the word embeddings and the document embeddings. Then we declare the parameters of the noise-contrastive (NCE) loss. Use the following code:

[mw_shl_code=python,true]embeddings = tf.Variable(tf.random_uniform([vocabulary_size, embedding_size], -1.0, 1.0))
doc_embeddings = tf.Variable(tf.random_uniform([len(texts), doc_embedding_size], -1.0, 1.0))
# NCE loss parameters
nce_weights = tf.Variable(tf.truncated_normal([vocabulary_size, concatenated_size],
                                              stddev=1.0 / np.sqrt(concatenated_size)))
nce_biases = tf.Variable(tf.zeros([vocabulary_size]))[/mw_shl_code]

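7. We now declare placeholders for the Doc2vec indices and the target word indices. Note that the size of the input indices is the window size plus one, because every data window we generate has an additional document index appended to it, as follows:

[mw_shl_code=python,true]x_inputs = tf.placeholder(tf.int32, shape=[None, window_size + 1])
y_target = tf.placeholder(tf.int32, shape=[None, 1])
valid_dataset = tf.constant(valid_examples, dtype=tf.int32)[/mw_shl_code]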

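8. Now we create our embedding function, which adds the word embeddings together and then concatenates the document embedding at the end. Use the following code:

[mw_shl_code=python,true]# Add together the word embeddings in the window
embed = tf.zeros([batch_size, embedding_size])
for element in range(window_size):
    embed += tf.nn.embedding_lookup(embeddings, x_inputs[:, element])
# The last column of x_inputs holds the document index
doc_indices = tf.slice(x_inputs, [0,window_size],[batch_size,1])
doc_embed = tf.nn.embedding_lookup(doc_embeddings,doc_indices)
# concatenate embeddings
# (argument order of the TensorFlow version used here; TensorFlow 1.0 and later expect tf.concat(values, axis))
final_embed = tf.concat(1, [embed, tf.squeeze(doc_embed)])[/mw_shl_code]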

9. Next we declare the loss function and the optimizer. Use the following code:

[mw_shl_code=python,true]# NCE loss (argument order of the TensorFlow version used here;
# TensorFlow 1.0 and later expect labels before inputs)
loss = tf.reduce_mean(tf.nn.nce_loss(nce_weights, nce_biases,
                                     final_embed, y_target, num_sampled, vocabulary_size))
# Create optimizer
optimizer = tf.train.GradientDescentOptimizer(learning_rate=model_learning_rate)
train_step = optimizer.minimize(loss)[/mw_shl_code]

10. We also need to declare the cosine distance from a set of validation words, which we can print out every so often to observe the progress of the Doc2vec model. Use the following code:

[mw_shl_code=python,true]norm = tf.sqrt(tf.reduce_sum(tf.square(embeddings), 1, keep_dims=True))
normalized_embeddings = embeddings / norm
valid_embeddings = tf.nn.embedding_lookup(normalized_embeddings, valid_dataset)
similarity = tf.matmul(valid_embeddings, normalized_embeddings, transpose_b=True)[/mw_shl_code]
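The similarity matrix above is a dot product between row-normalized embeddings, that is, cosine similarity. A plain-Python sketch of the same nearest-neighbour ranking on a made-up table (`nearest_words`, `toy_table` and `toy_words` are illustrative):

```python
import math

def normalize(vector):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector]

def nearest_words(query_ix, embedding_table, index_to_word, top_k=2):
    """Rank all words by cosine similarity to the query word."""
    unit = [normalize(row) for row in embedding_table]
    sims = [sum(a * b for a, b in zip(unit[query_ix], row)) for row in unit]
    ranked = sorted(range(len(sims)), key=lambda ix: -sims[ix])
    # Drop the query itself, which always has similarity 1 with itself
    return [index_to_word[ix] for ix in ranked if ix != query_ix][:top_k]

toy_table = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
toy_words = {0: 'love', 1: 'like', 2: 'plot', 3: 'hate'}
neighbours = nearest_words(0, toy_table, toy_words)
```

The recipe's training loop gets the same effect with `argsort()[1:top_k+1]`, which skips the first entry on the assumption that it is the query word.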

11. To save our embeddings for later, we create a model saver. Then we initialize the variables, which is the last step before we start training the embeddings:

[mw_shl_code=python,true]saver = tf.train.Saver({"embeddings": embeddings, "doc_embeddings": doc_embeddings})
init = tf.initialize_all_variables()
sess.run(init)[/mw_shl_code]

We can now run the training loop, which periodically prints the loss and the nearest neighbours of the validation words, and saves the dictionary and the embeddings:

[mw_shl_code=python,true]loss_vec = []
loss_x_vec = []
for i in range(generations):
    batch_inputs, batch_labels = text_helpers.generate_batch_data(text_data, batch_size,
                                                                  window_size, method='doc2vec')
    feed_dict = {x_inputs : batch_inputs, y_target : batch_labels}
    # Run the train step
    sess.run(train_step, feed_dict=feed_dict)
    # Return the loss
    if (i+1) % print_loss_every == 0:
        loss_val = sess.run(loss, feed_dict=feed_dict)
        loss_vec.append(loss_val)
        loss_x_vec.append(i+1)
        print('Loss at step {} : {}'.format(i+1, loss_val))
    # Validation: Print some random words and top 5 related words
    if (i+1) % print_valid_every == 0:
        sim = sess.run(similarity, feed_dict=feed_dict)
        for j in range(len(valid_words)):
            valid_word = word_dictionary_rev[valid_examples[j]]
            top_k = 5 # number of nearest neighbors
            nearest = (-sim[j, :]).argsort()[1:top_k+1]
            log_str = "Nearest to {}:".format(valid_word)
            for k in range(top_k):
                close_word = word_dictionary_rev[nearest[k]]
                log_str = '{} {},'.format(log_str, close_word)
            print(log_str)
    # Save dictionary + embeddings
    if (i+1) % save_embeddings_every == 0:
        # Save vocabulary dictionary
        with open(os.path.join(data_folder_name,'movie_vocab.pkl'), 'wb') as f:
            pickle.dump(word_dictionary, f)
        # Save embeddings
        model_checkpoint_path = os.path.join(os.getcwd(),data_folder_name,'doc2vec_movie_embeddings.ckpt')
        save_path = saver.save(sess, model_checkpoint_path)
        print('Model saved in file: {}'.format(save_path))[/mw_shl_code]

The resulting output ends as follows:

[mw_shl_code=python,true]...
Nearest to happy: queen, chaos, them, succumb, elegance,
Nearest to sad: terms, pity, chord, wallet, morality,
Nearest to man: of, teen, an, our, physical,
Nearest to woman: innocuous, scenes, prove, except, lady,
Model saved in file: /.../temp/doc2vec_movie_embeddings.ckpt[/mw_shl_code]

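12. Now that we have trained the Doc2vec embeddings, we can use them in a logistic regression to predict the review sentiment. First we set some parameters for the logistic regression. Use the following code:

[mw_shl_code=python,true]max_words = 20 # maximum review word length
logistic_batch_size = 500 # training batch size[/mw_shl_code]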

13. We now split the data set into train and test sets:

[mw_shl_code=python,true]train_indices = np.sort(np.random.choice(len(target),
                                         round(0.8*len(target)), replace=False))
test_indices = np.sort(np.array(list(set(range(len(target))) - set(train_indices))))
texts_train = [x for ix, x in enumerate(texts) if ix in train_indices]
texts_test = [x for ix, x in enumerate(texts) if ix in test_indices]
target_train = np.array([x for ix, x in enumerate(target) if ix in train_indices])
target_test = np.array([x for ix, x in enumerate(target) if ix in test_indices])[/mw_shl_code]

14. Next we convert the reviews to numerical word indices and pad or crop each review to 20 words, as follows:

[mw_shl_code=python,true]# Keep these as plain lists until they are padded: reviews differ in length
text_data_train = text_helpers.text_to_numbers(texts_train, word_dictionary)
text_data_test = text_helpers.text_to_numbers(texts_test, word_dictionary)
# Pad/crop movie reviews to specific length
text_data_train = np.array([x[0:max_words] for x in [y+[0]*max_words for y in text_data_train]])
text_data_test = np.array([x[0:max_words] for x in [y+[0]*max_words for y in text_data_test]])[/mw_shl_code]

15. Now we declare the parts of the graph that relate to the logistic regression model. We add the data placeholders, the variables, the model operations, and the loss function, as follows:

[mw_shl_code=python,true]# Define Logistic placeholders
log_x_inputs = tf.placeholder(tf.int32, shape=[None, max_words + 1])
log_y_target = tf.placeholder(tf.int32, shape=[None, 1])
A = tf.Variable(tf.random_normal(shape=[concatenated_size,1]))
b = tf.Variable(tf.random_normal(shape=[1,1]))
# Declare logistic model (sigmoid in loss function)
# log_final_embed is built in the next step; in a script, that block must run before this line
model_output = tf.add(tf.matmul(log_final_embed, A), b)
# Declare loss function (Cross Entropy loss)
logistic_loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(model_output,
                               tf.cast(log_y_target, tf.float32)))[/mw_shl_code]

16. We need to create another embedding function. The embedding function in the first half was trained on a smaller window of three words (plus a document index) to predict the next word. Here we do the same, but with the 20-word review. Use the following code:

[mw_shl_code=python,true]# Add together element embeddings in window:
log_embed = tf.zeros([logistic_batch_size, embedding_size])
for element in range(max_words):
    log_embed += tf.nn.embedding_lookup(embeddings, log_x_inputs[:, element])
log_doc_indices = tf.slice(log_x_inputs, [0,max_words],[logistic_batch_size,1])
log_doc_embed = tf.nn.embedding_lookup(doc_embeddings,log_doc_indices)
# concatenate embeddings
log_final_embed = tf.concat(1, [log_embed, tf.squeeze(log_doc_embed)])[/mw_shl_code]

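17. Next we create a prediction function and an accuracy method on the graph so that we can evaluate the performance of the model as we go through the training generations. Then we declare an optimizer function and initialize all the variables:

[mw_shl_code=python,true]prediction = tf.round(tf.sigmoid(model_output))
predictions_correct = tf.cast(tf.equal(prediction, tf.cast(log_y_target, tf.float32)), tf.float32)
accuracy = tf.reduce_mean(predictions_correct)
# Declare optimizer
logistic_opt = tf.train.GradientDescentOptimizer(learning_rate=0.01)
logistic_train_step = logistic_opt.minimize(logistic_loss, var_list=[A, b])
# Initialize Variables
# Caution: this re-runs the initializer of every variable, including the trained
# embeddings and doc_embeddings; initialize only A and b here to keep the trained values
init = tf.initialize_all_variables()
sess.run(init)[/mw_shl_code]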

18. Now we can start training the logistic model:

[mw_shl_code=python,true]train_loss = []
test_loss = []
train_acc = []
test_acc = []
i_data = []
for i in range(10000):
    rand_index = np.random.choice(text_data_train.shape[0], size=logistic_batch_size)
    rand_x = text_data_train[rand_index]
    # Append review index at the end of text data
    rand_x_doc_indices = train_indices[rand_index]
    rand_x = np.hstack((rand_x, np.transpose([rand_x_doc_indices])))
    rand_y = np.transpose([target_train[rand_index]])
    feed_dict = {log_x_inputs : rand_x, log_y_target : rand_y}
    sess.run(logistic_train_step, feed_dict=feed_dict)
    # Only record loss and accuracy every 100 generations
    if (i+1)%100==0:
        rand_index_test = np.random.choice(text_data_test.shape[0], size=logistic_batch_size)
        rand_x_test = text_data_test[rand_index_test]
        # Append review index at the end of text data
        rand_x_doc_indices_test = test_indices[rand_index_test]
        rand_x_test = np.hstack((rand_x_test, np.transpose([rand_x_doc_indices_test])))
        rand_y_test = np.transpose([target_test[rand_index_test]])
        test_feed_dict = {log_x_inputs: rand_x_test, log_y_target: rand_y_test}
        i_data.append(i+1)
        train_loss_temp = sess.run(logistic_loss, feed_dict=feed_dict)
        train_loss.append(train_loss_temp)
        test_loss_temp = sess.run(logistic_loss, feed_dict=test_feed_dict)
        test_loss.append(test_loss_temp)
        train_acc_temp = sess.run(accuracy, feed_dict=feed_dict)
        train_acc.append(train_acc_temp)
        test_acc_temp = sess.run(accuracy, feed_dict=test_feed_dict)
        test_acc.append(test_acc_temp)
    # Only print the model status every 500 generations
    if (i+1)%500==0:
        acc_and_loss = [i+1, train_loss_temp, test_loss_temp, train_acc_temp, test_acc_temp]
        acc_and_loss = [np.round(x,2) for x in acc_and_loss]
        print('Generation # {}. Train Loss (Test Loss): {:.2f} ({:.2f}). Train Acc (Test Acc): {:.2f} ({:.2f})'.format(*acc_and_loss))[/mw_shl_code]

20. This results in the following output:

[mw_shl_code=python,true]Generation # 500. Train Loss (Test Loss): 5.62 (7.45). Train Acc (Test Acc): 0.52 (0.48)
Generation # 10000. Train Loss (Test Loss): 2.35 (2.51). Train Acc (Test Acc): 0.59 (0.58)[/mw_shl_code]

21. We should also note that we created a separate data batch generation method called doc2vec in the text_helpers.generate_batch_data() function, which we used in the first part of this recipe to train the Doc2vec embeddings. Here is an excerpt from that function:

[mw_shl_code=python,true]def generate_batch_data(sentences, batch_size, window_size, method='skip_gram'):
    # Fill up data batch
    batch_data = []
    label_data = []
    while len(batch_data) < batch_size:
        # select random sentence to start
        rand_sentence_ix = int(np.random.choice(len(sentences), size=1))
        rand_sentence = sentences[rand_sentence_ix]
        # Generate consecutive windows to look at
        window_sequences = [rand_sentence[max((ix-window_size),0):(ix+window_size+1)] for ix, x in enumerate(rand_sentence)]
        # Denote which element of each window is the center word of interest
        label_indices = [ix if ix<window_size else window_size for ix,x in enumerate(window_sequences)]
        # Pull out center word of interest for each window and create a tuple for each window
        if method=='skip_gram':
            ...
        elif method=='cbow':
            ...
        elif method=='doc2vec':
            # For doc2vec we keep LHS window only to predict target word
            batch_and_labels = [(rand_sentence[i:i+window_size], rand_sentence[i+window_size]) for i in range(0, len(rand_sentence)-window_size)]
            batch, labels = [list(x) for x in zip(*batch_and_labels)]
            # Add document index to batch!! Remember that we must extract the last index in batch for the doc-index
            batch = [x + [rand_sentence_ix] for x in batch]
        else:
            raise ValueError('Method {} not implemented yet.'.format(method))
        # extract batch and labels
        batch_data.extend(batch[:batch_size])
        label_data.extend(labels[:batch_size])
    # Trim batch and label at the end
    batch_data = batch_data[:batch_size]
    label_data = label_data[:batch_size]
    # Convert to numpy array
    batch_data = np.array(batch_data)
    label_data = np.transpose(np.array([label_data]))
    return(batch_data, label_data)[/mw_shl_code]
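To see what the doc2vec branch produces for a single review, here is a stripped-down sketch without random sampling or batching (`doc2vec_windows` is a hypothetical name, and the word ids and document index are made up):

```python
def doc2vec_windows(sentence, doc_index, window_size):
    """Return (inputs, labels): each input is window_size word ids plus doc_index."""
    inputs, labels = [], []
    for start in range(len(sentence) - window_size):
        window = sentence[start:start + window_size]
        # The document index is appended as the last element of every window
        inputs.append(list(window) + [doc_index])
        # The label is the word that follows the window
        labels.append(sentence[start + window_size])
    return inputs, labels

toy_sentence = [11, 12, 13, 14, 15]
inputs, labels = doc2vec_windows(toy_sentence, doc_index=7, window_size=3)
```

Every input row has `window_size + 1` entries, which matches the shape of the `x_inputs` placeholder, and the last column is what the graph slices off as the document index.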

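How it works

In this recipe, we performed two training loops. The first fits the Doc2vec embeddings, and the second fits the logistic regression on the movie sentiment.

Although we did not improve the sentiment prediction accuracy by much (it is still slightly under 60%), we have successfully implemented the concatenation version of Doc2vec on the movie corpus. To improve the accuracy, we should try different parameters for the Doc2vec embeddings and possibly a more complicated model, as logistic regression may not be able to capture all the non-linear behaviour in natural language.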


Original text:

Making Predictions with Word2vec

In this recipe, we use the previously learned embedding strategies to perform classification.

Getting ready

Now that we have created and saved CBOW word embeddings, we need to use them to make sentiment predictions on the movie data set. In this recipe, we will learn how to load and use prior-trained embeddings and use these embeddings to perform sentiment analysis by training a logistic linear model to predict a good or bad review.

Sentiment analysis is a really hard task to do because human language makes it very hard to grasp the subtleties and nuances of the true meaning. Sarcasm, jokes, and ambiguous references all make the task exponentially harder. We will create a simple logistic regression on the movie review data set to see whether we can get any information out of the CBOW embeddings we created and saved in the prior recipe. Since the focus of this recipe is in the loading and usage of saved embeddings, we will not pursue more complicated models.

How to do it…

1.We begin by loading the necessary libraries and starting a graph session:

import tensorflow as tf

import matplotlib.pyplot as plt

import numpy as np

import random

import os

import pickle

import string

import requests

import collections

import io

import tarfile

import urllib.request

import text_helpers

from nltk.corpus import stopwords

sess = tf.Session()

2.Now we declare the model parameters. We should note that the embedding size should be the same as the embedding size we used to create the prior CBOW embeddings. Use the following code:

embedding_size = 200

vocabulary_size = 2000

batch_size = 100

max_words = 100

stops = stopwords.words('english')

3.We load and transform the text data from our text_helpers.py file we have created. Use the following code:

data_folder_name = 'temp'

texts, target = text_helpers.load_movie_data(data_folder_name)

# Normalize text

print('Normalizing Text Data')

texts = text_helpers.normalize_text(texts, stops)

# Texts must contain at least 3 words

target = [target[ix] for ix, x in enumerate(texts) if len(x.split()) > 2]

texts = [x for x in texts if len(x.split()) > 2]

train_indices = np.random.choice(len(target), round(0.8*len(target)), replace=False)

test_indices = np.array(list(set(range(len(target))) - set(train_indices)))

texts_train = [x for ix, x in enumerate(texts) if ix in train_indices]

texts_test = [x for ix, x in enumerate(texts) if ix in test_indices]

target_train = np.array([x for ix, x in enumerate(target) if ix in train_indices])

target_test = np.array([x for ix, x in enumerate(target) if ix in test_indices])

4.We now load our word dictionary we created while fitting the CBOW embeddings. This is important to load so that we have the same exact mapping from word to embedding index, as follows:

dict_file = os.path.join(data_folder_name, 'movie_vocab.pkl')

word_dictionary = pickle.load(open(dict_file, 'rb'))

5.We can now convert our loaded sentence data to lists of word indices with our word dictionary (they become a numerical numpy array once padded in the next step):

# Keep these as plain lists: reviews differ in length

text_data_train = text_helpers.text_to_numbers(texts_train, word_dictionary)

text_data_test = text_helpers.text_to_numbers(texts_test, word_dictionary)

6.Since movie reviews are of different lengths, we standardize them to be all the same length, and in our case we set it to 100 words. If a review has less than 100 words, we will pad it with zeros. Use the following code:

text_data_train = np.array([x[0:max_words] for x in [y+[0]*max_words for y in text_data_train]])

text_data_test = np.array([x[0:max_words] for x in [y+[0]*max_words for y in text_data_test]])

7.Now we declare our model variables and placeholders for the logistic regression. Use the following code:

A = tf.Variable(tf.random_normal(shape=[embedding_size,1]))

b = tf.Variable(tf.random_normal(shape=[1,1]))

# Initialize placeholders

x_data = tf.placeholder(shape=[None, max_words], dtype=tf.int32)

y_target = tf.placeholder(shape=[None, 1], dtype=tf.float32)

8.In order for TensorFlow to restore our prior-trained embeddings, we must first give the saver method a variable to restore into, so we create a embedding variable that is of the same shape as the embeddings we will load:

embeddings = tf.Variable(tf.random_uniform([vocabulary_size, embedding_size], -1.0, 1.0))

9.Now we put our embedding lookup function on the graph and take the average embeddings of all the words in the sentence. Use the following code:

embed = tf.nn.embedding_lookup(embeddings, x_data)

# Take average of all word embeddings in documents

embed_avg = tf.reduce_mean(embed, 1)

10.Next, we declare our model operations and our loss function, remembering that our loss function has the sigmoid operation built in already, as follows:

model_output = tf.add(tf.matmul(embed_avg, A), b)

# Declare loss function (Cross Entropy loss)

loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(model_output, y_target))

11.Now we add prediction and accuracy functions to the graph so that we can evaluate the accuracy as the model is training. Use the following code:

prediction = tf.round(tf.sigmoid(model_output))

predictions_correct = tf.cast(tf.equal(prediction, y_target), tf.float32)

accuracy = tf.reduce_mean(predictions_correct)

12.We declare an optimizer function and initialize the following model variables:

my_opt = tf.train.AdagradOptimizer(0.005)

train_step = my_opt.minimize(loss)

init = tf.initialize_all_variables()

sess.run(init)

13.Now that we have a random initialized embedding, we can tell the Saver method to load our prior CBOW embeddings into our embedding variable. Use the following code:

model_checkpoint_path = os.path.join(data_folder_name,'cbow_movie_embeddings.ckpt')

saver = tf.train.Saver({"embeddings": embeddings})

saver.restore(sess, model_checkpoint_path)

14.Now we can start the training generations. Note that every 100 generations, we save the training and test loss and accuracy. We will only print out the model status every 500 generations. Use the following code:

train_loss = []

test_loss = []

train_acc = []

test_acc = []

i_data = []

for i in range(10000):

    rand_index = np.random.choice(text_data_train.shape[0], size=batch_size)

    rand_x = text_data_train[rand_index]

    rand_y = np.transpose([target_train[rand_index]])

    sess.run(train_step, feed_dict={x_data: rand_x, y_target: rand_y})

    # Only record loss and accuracy every 100 generations

    if (i+1)%100==0:

        i_data.append(i+1)

        train_loss_temp = sess.run(loss, feed_dict={x_data: rand_x, y_target: rand_y})

        train_loss.append(train_loss_temp)

        test_loss_temp = sess.run(loss, feed_dict={x_data: text_data_test, y_target: np.transpose([target_test])})

        test_loss.append(test_loss_temp)

        train_acc_temp = sess.run(accuracy, feed_dict={x_data: rand_x, y_target: rand_y})

        train_acc.append(train_acc_temp)

        test_acc_temp = sess.run(accuracy, feed_dict={x_data: text_data_test, y_target: np.transpose([target_test])})

        test_acc.append(test_acc_temp)

    if (i+1)%500==0:

        acc_and_loss = [i+1, train_loss_temp, test_loss_temp, train_acc_temp, test_acc_temp]

        acc_and_loss = [np.round(x,2) for x in acc_and_loss]

        print('Generation # {}. Train Loss (Test Loss): {:.2f} ({:.2f}). Train Acc (Test Acc): {:.2f} ({:.2f})'.format(*acc_and_loss))

15.This results in the following output:

Generation # 500. Train Loss (Test Loss): 0.70 (0.71). Train Acc (Test Acc): 0.52 (0.48)

Generation # 1000. Train Loss (Test Loss): 0.69 (0.72). Train Acc (Test Acc): 0.56 (0.47)

...

Generation # 9500. Train Loss (Test Loss): 0.69 (0.70). Train Acc (Test Acc): 0.57 (0.55)

Generation # 10000. Train Loss (Test Loss): 0.70 (0.70). Train Acc (Test Acc): 0.59 (0.55)

16.Here is the code to plot the training and test loss and accuracy that we saved every 100 generations. Use the following code:

# Plot loss over time

plt.plot(i_data, train_loss, 'k-', label='Train Loss')

plt.plot(i_data, test_loss, 'r--', label='Test Loss', linewidth=4)

plt.title('Cross Entropy Loss per Generation')

plt.xlabel('Generation')

plt.ylabel('Cross Entropy Loss')

plt.legend(loc='upper right')

plt.show()

# Plot train and test accuracy

plt.plot(i_data, train_acc, 'k-', label='Train Set Accuracy')

plt.plot(i_data, test_acc, 'r--', label='Test Set Accuracy', linewidth=4)

plt.title('Train and Test Accuracy')

plt.xlabel('Generation')

plt.ylabel('Accuracy')

plt.legend(loc='lower right')

plt.show()

Figure 6: Here we observe the train and test loss over 10,000 generations.

Figure 7: We can observe that the train and test set accuracy is slowly improving over 10,000 generations. It is worthwhile to note that this model performs very poorly and is only slightly better than a random predictor.

How it works…

We loaded our prior CBOW embeddings, and performed logistic regression on the average embedding of a review. The important methods to note here are how we load model variables from the disk onto already initialized variables in our current model. We also have to remember to store and load our vocabulary dictionary that was created prior to training the embeddings. It is very important to have the same mapping from words to embedding indices when using the same embedding.

There's more…

We can see we almost achieve a 60% accuracy on predicting the sentiment. For example, it is a hard task to know the meaning behind the word great; it could be used in a negative or positive context within the review.

To tackle this problem, we want to somehow create embeddings for the documents themselves that can address the sentiment issue. Usually, a whole review is positive or a whole review is negative. We can use this to our advantage in the Using Doc2vec for sentiment analysis recipe.

Using Doc2vec for Sentiment Analysis

Now that we know how to train word embeddings, we can also extend those methodologies to have a document embedding. We explore how to do this in this recipe with TensorFlow.

Getting ready

In the prior sections about Word2vec methods, we have managed to capture positional relationships between words. What we have not done is capture the relationship of words to the document (or movie review) that they come from. One extension of Word2vec that captures a document effect is called Doc2vec.

The basic idea of Doc2vec is to introduce document embedding, along with the word embeddings that may help to capture the tone of the document. For example, just knowing that the words movie and love are nearby to each other may not help us determine the sentiment of the review. The review may be talking about how they love the movie or how they do not love the movie. But if the review is long enough and more negative words are found in the document, maybe we can pick up on an overall tone that may help us predict the next words.

Doc2vec simply adds an additional embedding matrix for the documents and uses a window of words plus the document index to predict the next word. All word windows in a document have the same document index. It is worthwhile to mention that it is important to think about how we will combine the document embedding and the word embeddings. We combine the word embeddings in the word window by taking the sum and there are two main ways to combine these embeddings with the document embedding. Commonly, the document embedding is either added to the word embeddings, or concatenated to the end of the word embeddings. If we add the two embeddings, we limit the document embedding size to be the same size as the word embedding size. If we concatenate, we lift that restriction, but increase the number of variables that the logistic regression must deal with. For illustrative purposes, we show how to deal with concatenation in this recipe. But in general, for smaller datasets, addition is the better choice.
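The difference between the two ways of combining embeddings can be sketched with NumPy. This is an illustration under assumptions: the vectors are random stand-ins for looked-up embeddings, and only the sizes (200, 100, window of 3) come from this recipe.

```python
import numpy as np

rng = np.random.RandomState(0)
embedding_size = 200       # word embedding size (as in this recipe)
doc_embedding_size = 100   # document embedding size (as in this recipe)
window_size = 3

# Random stand-ins for the looked-up vectors of one training window.
word_vectors = rng.uniform(-1.0, 1.0, size=(window_size, embedding_size))
doc_vector = rng.uniform(-1.0, 1.0, size=doc_embedding_size)

# Word embeddings in the window are combined by summing them.
window_sum = word_vectors.sum(axis=0)

# Option 1: concatenation. The two sizes may differ; the result is wider.
concatenated = np.concatenate([window_sum, doc_vector])

# Option 2: addition. The document vector must have the word embedding size.
doc_vector_same_size = rng.uniform(-1.0, 1.0, size=embedding_size)
added = window_sum + doc_vector_same_size

print(concatenated.shape, added.shape)
```

With concatenation, the downstream model sees 300 inputs per example instead of 200, which is the extra cost mentioned above.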

The first step will be to fit both the document and word embeddings on the whole corpus of movie reviews, then we perform a train-test split, train a logistic model, and see whether we can improve upon the accuracy of predicting the review sentiment.

How to do it…

1.We start by loading the necessary libraries and starting a graph session, as follows:

import tensorflow as tf

import matplotlib.pyplot as plt

import numpy as np

import random

import os

import pickle

import string

import requests

import collections

import io

import tarfile

import urllib.request

import text_helpers

from nltk.corpus import stopwords

sess = tf.Session()

2.We load the movie review corpus, just as we have done in the prior two recipes. Use the following code:

data_folder_name = 'temp'

if not os.path.exists(data_folder_name):
    os.makedirs(data_folder_name)

texts, target = text_helpers.load_movie_data(data_folder_name)

3.We declare the model parameters. See the following:

batch_size = 500

vocabulary_size = 7500

generations = 100000

model_learning_rate = 0.001

embedding_size = 200 # Word embedding size

doc_embedding_size = 100 # Document embedding size

concatenated_size = embedding_size + doc_embedding_size

num_sampled = int(batch_size/2)

window_size = 3 # How many words to consider to the left.

# Add checkpoints to training

save_embeddings_every = 5000

print_valid_every = 5000

print_loss_every = 100

# Declare stop words

stops = stopwords.words('english')

# We pick a few test words.

valid_words = ['love', 'hate', 'happy', 'sad', 'man', 'woman']

4.We normalize the movie reviews and make sure that each movie review is larger than the desired window size. Use the following code:

texts = text_helpers.normalize_text(texts, stops)
# Texts must contain more words than the window size
target = [target[ix] for ix, x in enumerate(texts) if len(x.split()) > window_size]
texts = [x for x in texts if len(x.split()) > window_size]
assert(len(target)==len(texts))

5.Now we create our word dictionary. It is important to note that we do not have to create a document dictionary. The document indices will be just the index of the document; each document will have a unique index. See the following code:

word_dictionary = text_helpers.build_dictionary(texts, vocabulary_size)
word_dictionary_rev = dict(zip(word_dictionary.values(), word_dictionary.keys()))
text_data = text_helpers.text_to_numbers(texts, word_dictionary)
# Get validation word keys
valid_examples = [word_dictionary[x] for x in valid_words]
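The text_helpers functions are not shown in this recipe, so the following is only a rough stand-in for what the dictionary step does. The function names ending in _sketch and the convention of reserving index 0 for rare words under the key 'RARE' are assumptions made for illustration.

```python
import collections

def build_dictionary_sketch(texts, vocabulary_size):
    """Map the most frequent words to indices; index 0 is kept for rare words."""
    words = [w for text in texts for w in text.split()]
    counts = collections.Counter(words).most_common(vocabulary_size - 1)
    word_dict = {'RARE': 0}
    for word, _ in counts:
        word_dict[word] = len(word_dict)
    return word_dict

def text_to_numbers_sketch(texts, word_dict):
    """Replace each word by its index; unknown words become 0."""
    return [[word_dict.get(w, 0) for w in text.split()] for text in texts]

texts = ['love this movie', 'hate this movie', 'love love actors']
word_dict = build_dictionary_sketch(texts, vocabulary_size=4)
word_dict_rev = dict(zip(word_dict.values(), word_dict.keys()))
text_data = text_to_numbers_sketch(texts, word_dict)

# No document dictionary is needed: the document index is its list position.
doc_indices = list(range(len(texts)))
print(text_data, doc_indices)
```

The reversed dictionary is what lets the training loop print words instead of indices when it reports nearest neighbors.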

6.Next we define our word embeddings and document embeddings. Then we declare our noise-contrastive loss parameters. Use the following code:

embeddings = tf.Variable(tf.random_uniform([vocabulary_size, embedding_size], -1.0, 1.0))
doc_embeddings = tf.Variable(tf.random_uniform([len(texts), doc_embedding_size], -1.0, 1.0))
# NCE loss parameters
nce_weights = tf.Variable(tf.truncated_normal([vocabulary_size, concatenated_size],
                                              stddev=1.0 / np.sqrt(concatenated_size)))
nce_biases = tf.Variable(tf.zeros([vocabulary_size]))

7.We now declare our placeholders for the Doc2vec indices and target word index. Note that the size of the input indices is the window size plus one. This is because every data window we generate will have an additional document index with it, as follows:

x_inputs = tf.placeholder(tf.int32, shape=[None, window_size + 1])

y_target = tf.placeholder(tf.int32, shape=[None, 1])

valid_dataset = tf.constant(valid_examples, dtype=tf.int32)

8.Now we have to create our embedding function that adds together the word embeddings and then concatenates the document embedding at the end. Use the following code:

embed = tf.zeros([batch_size, embedding_size])
for element in range(window_size):
    embed += tf.nn.embedding_lookup(embeddings, x_inputs[:, element])
doc_indices = tf.slice(x_inputs, [0, window_size], [batch_size, 1])
doc_embed = tf.nn.embedding_lookup(doc_embeddings, doc_indices)
# concatenate embeddings
final_embed = tf.concat(1, [embed, tf.squeeze(doc_embed)])
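To see the shapes involved, here is a NumPy analogue of the embedding function. It is a sketch under assumptions, not the graph code itself: the sizes are toy values and the index rows are made up.

```python
import numpy as np

rng = np.random.RandomState(0)
vocabulary_size, num_docs = 50, 4            # toy sizes
embedding_size, doc_embedding_size = 5, 3    # toy sizes
window_size = 3

embeddings = rng.uniform(-1.0, 1.0, size=(vocabulary_size, embedding_size))
doc_embeddings = rng.uniform(-1.0, 1.0, size=(num_docs, doc_embedding_size))

# Each row: window_size word indices followed by one document index.
x_inputs = np.array([[12, 7, 45, 0],
                     [7, 45, 3, 0],
                     [1, 2, 3, 2]])

# Look up and sum the word vectors of each window.
embed = embeddings[x_inputs[:, :window_size]].sum(axis=1)
# Look up the document vector from the last column.
doc_embed = doc_embeddings[x_inputs[:, window_size]]
# Concatenate along the feature axis.
final_embed = np.concatenate([embed, doc_embed], axis=1)
print(final_embed.shape)
```

Each output row has embedding_size + doc_embedding_size entries, which is why the NCE weights in step 6 use concatenated_size as their second dimension.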

9.Next we declare the loss function, here the noise-contrastive (NCE) loss, and create the optimizer. See the following code:

loss = tf.reduce_mean(tf.nn.nce_loss(nce_weights, nce_biases,
                                     final_embed, y_target, num_sampled, vocabulary_size))
# Create optimizer
optimizer = tf.train.GradientDescentOptimizer(learning_rate=model_learning_rate)
train_step = optimizer.minimize(loss)

10.We also need to declare the cosine distance from a set of validation words that we can print out every so often to observe the progress of our Doc2vec model. Use the following code:

norm = tf.sqrt(tf.reduce_sum(tf.square(embeddings), 1,

keep_dims=True))

normalized_embeddings = embeddings / norm

valid_embeddings = tf.nn.embedding_lookup(normalized_embeddings,

valid_dataset)

similarity = tf.matmul(valid_embeddings, normalized_embeddings,

transpose_b=True)
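The same cosine similarity lookup can be checked on a tiny example with NumPy. The embedding values below are made up; the steps mirror the graph operations above and the nearest-neighbor selection used in the training loop.

```python
import numpy as np

# Toy embedding matrix: one row per word (values made up).
embeddings = np.array([[1.0, 0.0],
                       [2.0, 0.1],
                       [0.0, 3.0],
                       [-1.0, 0.0]])
valid_examples = [0]  # indices of the validation words

# Normalize rows to unit length so a dot product equals cosine similarity.
norm = np.sqrt(np.sum(np.square(embeddings), axis=1, keepdims=True))
normalized = embeddings / norm
similarity = normalized[valid_examples].dot(normalized.T)

# Sort by descending similarity; position 0 is the word itself, so skip it.
top_k = 2
nearest = (-similarity[0]).argsort()[1:top_k + 1]
print(nearest)
```

Word 1 points in almost the same direction as word 0, so it ranks first even though its vector is twice as long; normalization removes the effect of length.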

11.To save our embeddings for later, we create a model saver function. Then we can initialize the variables, the last step before we commence training on the word embeddings:

saver = tf.train.Saver({"embeddings": embeddings, "doc_embeddings": doc_embeddings})
init = tf.initialize_all_variables()
sess.run(init)

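Now we train the Doc2vec embeddings. At the intervals set in the model parameters, the loop prints the loss, prints the nearest neighbors of the validation words, and saves the vocabulary dictionary and the embeddings: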

loss_vec = []
loss_x_vec = []
for i in range(generations):
    batch_inputs, batch_labels = text_helpers.generate_batch_data(text_data, batch_size,
                                                                  window_size, method='doc2vec')
    feed_dict = {x_inputs : batch_inputs, y_target : batch_labels}
    # Run the train step
    sess.run(train_step, feed_dict=feed_dict)
    # Return the loss
    if (i+1) % print_loss_every == 0:
        loss_val = sess.run(loss, feed_dict=feed_dict)
        loss_vec.append(loss_val)
        loss_x_vec.append(i+1)
        print('Loss at step {} : {}'.format(i+1, loss_val))
    # Validation: Print the validation words and their top 5 related words
    if (i+1) % print_valid_every == 0:
        sim = sess.run(similarity, feed_dict=feed_dict)
        for j in range(len(valid_words)):
            valid_word = word_dictionary_rev[valid_examples[j]]
            top_k = 5 # number of nearest neighbors
            nearest = (-sim[j, :]).argsort()[1:top_k+1]
            log_str = "Nearest to {}:".format(valid_word)
            for k in range(top_k):
                close_word = word_dictionary_rev[nearest[k]]
                log_str = '{} {},'.format(log_str, close_word)
            print(log_str)
    # Save dictionary + embeddings
    if (i+1) % save_embeddings_every == 0:
        # Save vocabulary dictionary
        with open(os.path.join(data_folder_name,'movie_vocab.pkl'), 'wb') as f:
            pickle.dump(word_dictionary, f)
        # Save embeddings
        model_checkpoint_path = os.path.join(os.getcwd(),data_folder_name,'doc2vec_movie_embeddings.ckpt')
        save_path = saver.save(sess, model_checkpoint_path)
        print('Model saved in file: {}'.format(save_path))

Nearest to happy: queen, chaos, them, succumb, elegance,

Nearest to sad: terms, pity, chord, wallet, morality,

Nearest to man: of, teen, an, our, physical,

Nearest to woman: innocuous, scenes, prove, except, lady,

Model saved in file: /.../temp/doc2vec_movie_embeddings.ckpt

12.Now that we have trained the Doc2vec embeddings, we can use these embeddings in a logistic regression to predict the review sentiment. First we set some parameters for the logistic regression. Use the following code:

max_words = 20 # maximum review word length

logistic_batch_size = 500 # training batch size

13.We now split the data set into a train and test set:

train_indices = np.sort(np.random.choice(len(target), round(0.8*len(target)), replace=False))
# Use an ASCII minus for the set difference
test_indices = np.sort(np.array(list(set(range(len(target))) - set(train_indices))))
texts_train = [x for ix, x in enumerate(texts) if ix in train_indices]
texts_test = [x for ix, x in enumerate(texts) if ix in test_indices]
target_train = np.array([x for ix, x in enumerate(target) if ix in train_indices])
target_test = np.array([x for ix, x in enumerate(target) if ix in test_indices])
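The split can be tried on a toy corpus as follows. This is a sketch with assumptions: the ten reviews are made up, a fixed seed is used only for reproducibility, and a Python set replaces the array membership test for speed.

```python
import numpy as np

rng = np.random.RandomState(42)   # fixed seed, only for reproducibility here
texts = ['review {}'.format(i) for i in range(10)]   # made-up reviews

train_indices = np.sort(rng.choice(len(texts), round(0.8 * len(texts)), replace=False))
test_indices = np.sort(np.array(list(set(range(len(texts))) - set(train_indices))))

# A set makes the membership test fast; the selected reviews are the same.
train_set = set(train_indices.tolist())
texts_train = [x for ix, x in enumerate(texts) if ix in train_set]

# Because train_indices is sorted, texts_train[k] is the review with index train_indices[k].
aligned = all(texts_train[k] == texts[train_indices[k]] for k in range(len(texts_train)))
print(len(train_indices), len(test_indices), aligned)
```

The sorting matters for Doc2vec: the logistic training loop later uses train_indices[rand_index] as the document index of text_data_train[rand_index], which only works if both are in the same order.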

14.Next we convert the reviews to numerical word indices and pad or crop each review to be 20 words, as follows:

text_data_train = np.array(text_helpers.text_to_numbers(texts_train, word_dictionary))
text_data_test = np.array(text_helpers.text_to_numbers(texts_test, word_dictionary))
# Pad/crop movie reviews to specific length
text_data_train = np.array([x[0:max_words] for x in [y+[0]*max_words for y in text_data_train]])
text_data_test = np.array([x[0:max_words] for x in [y+[0]*max_words for y in text_data_test]])
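The pad/crop idiom is easier to follow on a small example. The word indices below are made up, and max_words is set to 5 instead of 20 to keep the output short.

```python
max_words = 5  # the recipe uses 20; a small value keeps the example readable

# Two made-up reviews as lists of word indices: one short, one long.
reviews = [[4, 8, 15], [16, 23, 42, 4, 8, 15, 16]]

# Append max_words zeros, then keep only the first max_words entries.
padded = [(review + [0] * max_words)[:max_words] for review in reviews]
print(padded)
```

Appending max_words zeros guarantees that every review is at least max_words long before slicing, so short reviews are padded and long ones are cropped by the same expression.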

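15.Now we declare the parts of the graph that pertain to the logistic regression model. We add the data placeholders, the variables, model operations, and the loss function. Note that model_output refers to log_final_embed, which is built in step 16, so when assembling a script the step 16 code must run before the model_output line. Use the following code: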

# Define Logistic placeholders

log_x_inputs = tf.placeholder(tf.int32, shape=[None, max_words + 1])

log_y_target = tf.placeholder(tf.int32, shape=[None, 1])

A = tf.Variable(tf.random_normal(shape=[concatenated_size,1]))

b = tf.Variable(tf.random_normal(shape=[1,1]))

# Declare logistic model (sigmoid in loss function)

model_output = tf.add(tf.matmul(log_final_embed, A), b)

# Declare loss function (Cross Entropy loss)

logistic_loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(model_output,
                                                                       tf.cast(log_y_target, tf.float32)))
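Because the sigmoid is folded into the loss, model_output holds raw logits. The following plain-Python sketch shows the numerically stable form of the sigmoid cross-entropy, max(x, 0) - x*z + log(1 + exp(-|x|)), and compares it with the textbook definition. The logits and labels are made-up values.

```python
import math

def sigmoid_cross_entropy(logit, label):
    """Numerically stable form: max(x, 0) - x*z + log(1 + exp(-|x|))."""
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))

def naive_cross_entropy(logit, label):
    """Textbook form: -(z*log(p) + (1-z)*log(1-p)) with p = sigmoid(x)."""
    p = 1.0 / (1.0 + math.exp(-logit))
    return -(label * math.log(p) + (1.0 - label) * math.log(1.0 - p))

logits = [2.0, -1.0, 0.5]
labels = [1.0, 0.0, 1.0]
losses = [sigmoid_cross_entropy(x, z) for x, z in zip(logits, labels)]
mean_loss = sum(losses) / len(losses)   # analogue of tf.reduce_mean
print(mean_loss)
```

The two forms agree for moderate logits, but the stable form does not overflow when a logit is very large in magnitude.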

16.We need to create another embedding function. The embedding function in the first half is trained on a smaller window of three words (and a document index) to predict the next word. Here we will do the same but with the 20-word review. Use the following code:

# Add together element embeddings in window:

log_embed = tf.zeros([logistic_batch_size, embedding_size])
for element in range(max_words):
    log_embed += tf.nn.embedding_lookup(embeddings, log_x_inputs[:, element])
log_doc_indices = tf.slice(log_x_inputs, [0, max_words], [logistic_batch_size, 1])
log_doc_embed = tf.nn.embedding_lookup(doc_embeddings, log_doc_indices)
# concatenate embeddings
log_final_embed = tf.concat(1, [log_embed, tf.squeeze(log_doc_embed)])

17.Next we create a prediction function and accuracy method on the graph so that we can evaluate the performance of the model as we run through the training generations. Then we declare an optimizing function and initialize only the new logistic variables, since re-running the initializer for all variables would reset the embeddings we just trained:

prediction = tf.round(tf.sigmoid(model_output))
predictions_correct = tf.cast(tf.equal(prediction, tf.cast(log_y_target, tf.float32)), tf.float32)
accuracy = tf.reduce_mean(predictions_correct)
# Declare optimizer
logistic_opt = tf.train.GradientDescentOptimizer(learning_rate=0.01)
logistic_train_step = logistic_opt.minimize(logistic_loss, var_list=[A, b])
# Initialize only the logistic variables (keeps the trained embeddings)
init = tf.initialize_variables([A, b])
sess.run(init)
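The prediction and accuracy operations amount to rounding the sigmoid of each logit and averaging the matches with the targets. A plain-Python sketch with made-up logits and targets:

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

logits = [2.0, -1.0, 0.5, -0.2]   # made-up model outputs
targets = [1, 0, 0, 0]            # made-up review labels

# Round the sigmoid of each logit to get a 0/1 prediction.
predictions = [round(sigmoid(x)) for x in logits]
# 1.0 where the prediction matches the target, 0.0 otherwise.
correct = [float(p == t) for p, t in zip(predictions, targets)]
accuracy = sum(correct) / len(correct)
print(predictions, accuracy)
```

Rounding the sigmoid is the same as thresholding the raw logit at zero, so the sign of model_output alone decides the predicted class.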

18.Now we can start the logistic model training:

train_loss = []
test_loss = []
train_acc = []
test_acc = []
i_data = []
for i in range(10000):
    rand_index = np.random.choice(text_data_train.shape[0], size=logistic_batch_size)
    rand_x = text_data_train[rand_index]
    # Append review index at the end of text data
    rand_x_doc_indices = train_indices[rand_index]
    rand_x = np.hstack((rand_x, np.transpose([rand_x_doc_indices])))
    rand_y = np.transpose([target_train[rand_index]])
    feed_dict = {log_x_inputs : rand_x, log_y_target : rand_y}
    sess.run(logistic_train_step, feed_dict=feed_dict)
    # Only record loss and accuracy every 100 generations
    if (i+1)%100==0:
        rand_index_test = np.random.choice(text_data_test.shape[0], size=logistic_batch_size)
        rand_x_test = text_data_test[rand_index_test]
        # Append review index at the end of text data
        rand_x_doc_indices_test = test_indices[rand_index_test]
        rand_x_test = np.hstack((rand_x_test, np.transpose([rand_x_doc_indices_test])))
        rand_y_test = np.transpose([target_test[rand_index_test]])
        test_feed_dict = {log_x_inputs: rand_x_test, log_y_target: rand_y_test}
        i_data.append(i+1)
        train_loss_temp = sess.run(logistic_loss, feed_dict=feed_dict)
        train_loss.append(train_loss_temp)
        test_loss_temp = sess.run(logistic_loss, feed_dict=test_feed_dict)
        test_loss.append(test_loss_temp)
        train_acc_temp = sess.run(accuracy, feed_dict=feed_dict)
        train_acc.append(train_acc_temp)
        test_acc_temp = sess.run(accuracy, feed_dict=test_feed_dict)
        test_acc.append(test_acc_temp)
    if (i+1)%500==0:
        acc_and_loss = [i+1, train_loss_temp, test_loss_temp, train_acc_temp, test_acc_temp]
        acc_and_loss = [np.round(x,2) for x in acc_and_loss]
        print('Generation # {}. Train Loss (Test Loss): {:.2f} ({:.2f}). Train Acc (Test Acc): {:.2f} ({:.2f})'.format(*acc_and_loss))

20.This results in the following output:

Generation # 500. Train Loss (Test Loss): 5.62 (7.45). Train Acc (Test Acc): 0.52 (0.48)

Generation # 10000. Train Loss (Test Loss): 2.35 (2.51). Train Acc (Test Acc): 0.59 (0.58)

21.We should also note that the text_helpers.generate_batch_data() function has a separate batch-generating method, selected with method='doc2vec', which we used in the first part of this recipe to train the Doc2vec embeddings. Here is the excerpt from that function that pertains to this method:

def generate_batch_data(sentences, batch_size, window_size, method='skip_gram'):
    # Fill up data batch
    batch_data = []
    label_data = []
    while len(batch_data) < batch_size:
        # select random sentence to start
        rand_sentence_ix = int(np.random.choice(len(sentences), size=1))
        rand_sentence = sentences[rand_sentence_ix]
        # Generate consecutive windows to look at
        window_sequences = [rand_sentence[max((ix-window_size),0):(ix+window_size+1)] for ix, x in enumerate(rand_sentence)]
        # Denote which element of each window is the center word of interest
        label_indices = [ix if ix<window_size else window_size for ix,x in enumerate(window_sequences)]
        # Pull out center word of interest for each window and create a tuple for each window
        if method=='skip_gram':
            ...
        elif method=='cbow':
            ...
        elif method=='doc2vec':
            # For doc2vec we keep LHS window only to predict target word
            batch_and_labels = [(rand_sentence[i:i+window_size], rand_sentence[i+window_size]) for i in range(0, len(rand_sentence)-window_size)]
            batch, labels = [list(x) for x in zip(*batch_and_labels)]
            # Add document index to batch!! Remember that we must extract the last index in batch for the doc-index
            batch = [x + [rand_sentence_ix] for x in batch]
        else:
            raise ValueError('Method {} not implemented yet.'.format(method))
        # extract batch and labels
        batch_data.extend(batch[:batch_size])
        label_data.extend(labels[:batch_size])
    # Trim batch and label at the end
    batch_data = batch_data[:batch_size]
    label_data = label_data[:batch_size]
    # Convert to numpy array
    batch_data = np.array(batch_data)
    label_data = np.transpose(np.array([label_data]))
    return(batch_data, label_data)
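To make the doc2vec branch concrete, the following sketch applies the same windowing to a single review. The helper name doc2vec_windows and the sample indices are hypothetical; the list comprehensions follow the excerpt above.

```python
def doc2vec_windows(sentence, sentence_ix, window_size):
    """Return (window + document index, next word) pairs for one review."""
    pairs = [(sentence[i:i + window_size], sentence[i + window_size])
             for i in range(0, len(sentence) - window_size)]
    # The document index is appended as the last element of every window.
    batch = [window + [sentence_ix] for window, _ in pairs]
    labels = [label for _, label in pairs]
    return batch, labels

sentence = [10, 11, 12, 13, 14]   # made-up word indices of one review
batch, labels = doc2vec_windows(sentence, sentence_ix=7, window_size=3)
print(batch)
print(labels)
```

A review with no more than window_size words would produce no windows at all, which is presumably why step 4 drops such reviews before training.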

How it works…

In this recipe, we performed two training loops. The first was to fit the Doc2vec embeddings, and the second loop was to fit the logistic regression on the movie sentiment.

While we did not increase the sentiment prediction accuracy by much (still slightly under 60%), we have successfully implemented the concatenation version of Doc2vec on the movie corpus. To increase our accuracy, we should try different parameters for the Doc2vec embeddings and possibly a more complicated model, as logistic regression may not be able to capture all the non-linear behavior in natural language.
