This post was last edited by levycui on 2019-7-2 15:41

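Reading guide:

1. How do we declare the image parameters (height and width) and the size of the cropped images?

2. How do we use the read_cifar_files() function to return a randomly distorted image?

3. How do we declare the model function and set up the two convolutional layers?

4. How do we initialize the loss and test accuracy functions?

Previous post: TensorFlow ML Cookbook, Chapter 8, Section 1: Convolutional Neural Networks, Implementing a Simpler CNN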

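Implementing an Advanced CNN

Being able to extend CNN models for image recognition matters because it shows us how to increase the depth of the network, which may improve prediction accuracy if we have enough data. Extending the depth of a CNN is done in a standard fashion: we repeat the convolution, max pool, ReLU series until we are satisfied with the depth. Many of the more accurate image recognition networks operate this way.

Getting ready

In this recipe, we will implement a more advanced method of reading image data and use a larger CNN to do image recognition on the CIFAR-10 dataset (https://www.cs.toronto.edu/~kriz/cifar.html). This dataset has 60,000 32x32 images, each belonging to exactly one of ten classes: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck. See also the first item of the See also section.

Most image datasets are too large to fit into memory. With TensorFlow we can set up an image pipeline that reads one batch at a time from a file. We do this by setting up an image reader and then creating a batch queue that operates on the image reader.

Also, with image recognition data, it is common to randomly perturb the image before sending it through for training. Here, we will randomly crop, flip, and change the brightness.

This recipe is an adapted version of the official TensorFlow CIFAR-10 tutorial, which is listed in the See also section at the end of this chapter. We have condensed the tutorial into one script and will go through it line by line, explaining all the necessary code. We also revert some constants and parameters to the values in the original cited paper, which we point out in the appropriate steps.

How to do it…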

1. To start with, we load the necessary libraries and start a graph session:

[mw_shl_code=python,true]import os
import sys
import tarfile
import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
from six.moves import urllib

sess = tf.Session()[/mw_shl_code]

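2. Now we'll declare some of the model parameters. Our batch size will be 128 (for both training and testing). We will output a status every 50 generations and run for a total of 20,000 generations. Every 500 generations, we'll evaluate on a batch of the test data. We'll then declare the image parameters: height and width, and the size of the cropped images. There are three channels (red, green, and blue), and we have ten different targets. Then we'll declare where the data and the image batches from the queue will be stored:

[mw_shl_code=python,true]batch_size = 128
output_every = 50
generations = 20000
eval_every = 500
image_height = 32
image_width = 32
crop_height = 24
crop_width = 24
num_channels = 3
num_targets = 10
data_dir = 'temp'
extract_folder = 'cifar-10-batches-bin'[/mw_shl_code]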

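3. It is recommended to lower the learning rate as we progress toward a good model, so we will decrease it exponentially: the initial learning rate is 0.1, and it is multiplied by 0.9 (a 10% reduction) every 250 generations. The rate at generation x is 0.1 * 0.9^(x/250). By default the decay is continuous, but TensorFlow also accepts a staircase argument that updates the learning rate only at discrete intervals, here once every 250 generations:

[mw_shl_code=python,true]learning_rate = 0.1
lr_decay = 0.9
num_gens_to_wait = 250.
[/mw_shl_code]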
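To make the decay schedule concrete, here is a minimal pure-Python sketch of the formula described above. The function name `decayed_learning_rate` and its keyword arguments are illustrative choices, not part of the recipe; the arithmetic mirrors what `tf.train.exponential_decay` is documented to compute.

```python
import math

def decayed_learning_rate(generation, initial_rate=0.1, decay=0.9,
                          gens_to_wait=250, staircase=True):
    """Learning rate after `generation` steps of exponential decay."""
    exponent = generation / gens_to_wait
    if staircase:
        # Hold the rate constant within each block of `gens_to_wait` generations
        exponent = math.floor(exponent)
    return initial_rate * decay ** exponent

# Compare the stepped and the continuous schedule at a few generations
for x in (0, 249, 250, 1000):
    print(x, decayed_learning_rate(x), decayed_learning_rate(x, staircase=False))
```

With `staircase=True` the rate stays at 0.1 for generations 0 through 249 and drops to 0.09 at generation 250; without it, the rate shrinks slightly at every generation.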

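4. Now we'll set up parameters so that we can read in the binary CIFAR-10 images. Each record is one label byte followed by the image bytes:

[mw_shl_code=python,true]image_vec_length = image_height * image_width * num_channels
record_length = 1 + image_vec_length[/mw_shl_code]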
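The record layout can be sketched without TensorFlow. The example below assumes the binary CIFAR-10 layout of one label byte followed by 3,072 pixel bytes stored channel by channel (red plane, then green, then blue, each row-major). The helper `parse_record` and the synthetic record are hypothetical and only illustrate the indexing.

```python
IMAGE_HEIGHT, IMAGE_WIDTH, NUM_CHANNELS = 32, 32, 3
IMAGE_VEC_LENGTH = IMAGE_HEIGHT * IMAGE_WIDTH * NUM_CHANNELS  # 3072
RECORD_LENGTH = 1 + IMAGE_VEC_LENGTH                          # 3073

def parse_record(record):
    """Split one raw record into (label, pixel accessor)."""
    if len(record) != RECORD_LENGTH:
        raise ValueError('expected {} bytes, got {}'.format(RECORD_LENGTH, len(record)))
    label = record[0]
    pixels = record[1:]

    def pixel(row, col, channel):
        # Channel-major layout: all red values, then all green, then all blue
        return pixels[channel * IMAGE_HEIGHT * IMAGE_WIDTH + row * IMAGE_WIDTH + col]

    return label, pixel

# Synthetic record (not real CIFAR-10 data): label 7, red plane 10, green 20, blue 30
plane = IMAGE_HEIGHT * IMAGE_WIDTH
fake_record = bytes([7]) + bytes([10]) * plane + bytes([20]) * plane + bytes([30]) * plane
label, pixel = parse_record(fake_record)
print(label, pixel(0, 0, 0), pixel(5, 9, 1), pixel(31, 31, 2))
```

This is why the recipe reshapes to [num_channels, image_height, image_width] first and only then transposes to height, width, channels.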

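5. Next, we'll set up the data directory and the URL to download the CIFAR-10 images, if we don't have them already:

[mw_shl_code=python,true]data_dir = 'temp'
if not os.path.exists(data_dir):
    os.makedirs(data_dir)
cifar10_url = 'http://www.cs.toronto.edu/~kriz/cifar-10-binary.tar.gz'
data_file = os.path.join(data_dir, 'cifar-10-binary.tar.gz')

# The original passes a `progress` hook without defining it;
# this minimal reporting hook is an assumption added so the call runs.
def progress(block_num, block_size, total_size):
    if total_size > 0:
        percent = min(100., 100. * block_num * block_size / total_size)
        sys.stdout.write('\rDownloading: {:.1f}%'.format(percent))
        sys.stdout.flush()

if not os.path.isfile(data_file):
    # Download file
    filepath, _ = urllib.request.urlretrieve(cifar10_url, data_file, progress)
    # Extract file
    tarfile.open(filepath, 'r:gz').extractall(data_dir)[/mw_shl_code]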

6. We'll set up the record reader and return a randomly distorted image with the following read_cifar_files() function. First, we declare a record reader object that reads a fixed number of bytes. After we read from the image queue, we split apart the image and the label. Finally, we randomly distort the image with TensorFlow's built-in image modification functions:

[mw_shl_code=python,true]def read_cifar_files(filename_queue, distort_images=True):
    reader = tf.FixedLengthRecordReader(record_bytes=record_length)
    key, record_string = reader.read(filename_queue)
    record_bytes = tf.decode_raw(record_string, tf.uint8)
    # Extract label
    image_label = tf.cast(tf.slice(record_bytes, [0], [1]), tf.int32)
    # Extract image
    image_extracted = tf.reshape(tf.slice(record_bytes, [1], [image_vec_length]),
                                 [num_channels, image_height, image_width])
    # Reshape image
    image_uint8image = tf.transpose(image_extracted, [1, 2, 0])
    reshaped_image = tf.cast(image_uint8image, tf.float32)
    # Crop image (this call takes the target height, then the width, and crops centrally)
    final_image = tf.image.resize_image_with_crop_or_pad(reshaped_image, crop_height, crop_width)
    if distort_images:
        # Randomly flip the image horizontally, change the brightness and contrast
        final_image = tf.image.random_flip_left_right(final_image)
        final_image = tf.image.random_brightness(final_image, max_delta=63)
        final_image = tf.image.random_contrast(final_image, lower=0.2, upper=1.8)
    # Normalize whitening (renamed tf.image.per_image_standardization in TensorFlow 1.0)
    final_image = tf.image.per_image_whitening(final_image)
    return(final_image, image_label)[/mw_shl_code]
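The final whitening step can be illustrated in plain Python. This is a sketch under the assumption that whitening means subtracting the image mean and dividing by the standard deviation, with the deviation floored at 1/sqrt(N) as TensorFlow documents for its per-image standardization; the function below is a stand-in operating on a flat list, not the TensorFlow op itself.

```python
import math

def per_image_whitening(pixels):
    """Standardize a flat list of pixel values to zero mean and unit variance."""
    n = len(pixels)
    mean = sum(pixels) / n
    variance = sum((p - mean) ** 2 for p in pixels) / n
    # Floor the standard deviation at 1/sqrt(n) so uniform images do not divide by zero
    adjusted_std = max(math.sqrt(variance), 1.0 / math.sqrt(n))
    return [(p - mean) / adjusted_std for p in pixels]

whitened = per_image_whitening([0.0, 50.0, 100.0, 250.0])
print(whitened)

# A constant image has zero variance; the floor keeps the result finite (all zeros)
print(per_image_whitening([128.0] * 4))
```

Because the brightness and contrast distortions run before this step, whitening removes most of their global offset and scale, so the network sees inputs on a comparable range.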

7. Now we'll declare a function that populates our image pipeline for the batch processor to use. We first set up the list of image files we want to read, and define how to read them with an input producer object created through prebuilt TensorFlow functions. The input producer is passed to the reading function from the preceding step, read_cifar_files(). We then set a batch reader on the queue, shuffle_batch():

[mw_shl_code=python,true]def input_pipeline(batch_size, train_logical=True):
    if train_logical:
        files = [os.path.join(data_dir, extract_folder, 'data_batch_{}.bin'.format(i)) for i in range(1, 6)]
    else:
        files = [os.path.join(data_dir, extract_folder, 'test_batch.bin')]
    filename_queue = tf.train.string_input_producer(files)
    image, label = read_cifar_files(filename_queue)
    min_after_dequeue = 1000
    capacity = min_after_dequeue + 3 * batch_size
    example_batch, label_batch = tf.train.shuffle_batch([image, label], batch_size, capacity, min_after_dequeue)
    return(example_batch, label_batch)[/mw_shl_code]

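It is important to set min_after_dequeue properly. This parameter sets the minimum size of the image buffer that batches are sampled from. The official TensorFlow documentation recommends setting it to (#threads + error margin) * batch_size. A larger value gives more uniform shuffling, because samples are drawn from a larger set of data in the queue, but it also uses more memory.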
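The effect of the buffer size on shuffling can be simulated in a few lines. The generator below is a simplified, single-threaded model of a shuffle queue, written for illustration only: it ignores capacity and threading, and `shuffle_buffer` is a hypothetical name rather than a TensorFlow function.

```python
import random

def shuffle_buffer(items, min_after_dequeue, seed=0):
    """Yield items in a locally shuffled order using a sampling buffer."""
    rng = random.Random(seed)
    buffer = []
    for item in items:
        buffer.append(item)
        # Only sample once more than min_after_dequeue elements are waiting
        if len(buffer) > min_after_dequeue:
            yield buffer.pop(rng.randrange(len(buffer)))
    # Input exhausted: drain the remaining elements in random order
    while buffer:
        yield buffer.pop(rng.randrange(len(buffer)))

def max_displacement(order):
    """Largest distance any element moved from its original position."""
    return max(abs(position - value) for position, value in enumerate(order))

for size in (0, 5, 50):
    order = list(shuffle_buffer(range(200), min_after_dequeue=size))
    print('min_after_dequeue =', size, 'max displacement =', max_displacement(order))
```

With a buffer of zero the input order is preserved; as the buffer grows, elements can travel further from their original position, at the cost of holding more of them in memory.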

8. Next, we can declare our model function. The model has two convolutional layers followed by three fully connected layers. To make variable declaration easier, we start by declaring two variable helper functions. The two convolutional layers create 64 features each. The first fully connected layer connects the second convolutional layer to 384 hidden nodes. The second fully connected layer connects those 384 hidden nodes to 192 hidden nodes. The final layer connects the 192 nodes to the 10 output classes we are trying to predict. See the inline comments marked with #:

[mw_shl_code=python,true]def cifar_cnn_model(input_images, batch_size, train_logical=True):
    def truncated_normal_var(name, shape, dtype):
        return(tf.get_variable(name=name, shape=shape, dtype=dtype,
                               initializer=tf.truncated_normal_initializer(stddev=0.05)))

    def zero_var(name, shape, dtype):
        return(tf.get_variable(name=name, shape=shape, dtype=dtype,
                               initializer=tf.constant_initializer(0.0)))

    # First Convolutional Layer
    with tf.variable_scope('conv1') as scope:
        # Conv_kernel is 5x5 for all 3 colors and we will create 64 features
        conv1_kernel = truncated_normal_var(name='conv_kernel1', shape=[5, 5, 3, 64], dtype=tf.float32)
        # We convolve across the image with a stride size of 1
        conv1 = tf.nn.conv2d(input_images, conv1_kernel, [1, 1, 1, 1], padding='SAME')
        # Initialize and add the bias term
        conv1_bias = zero_var(name='conv_bias1', shape=[64], dtype=tf.float32)
        conv1_add_bias = tf.nn.bias_add(conv1, conv1_bias)
        # ReLU element wise
        relu_conv1 = tf.nn.relu(conv1_add_bias)
    # Max Pooling
    pool1 = tf.nn.max_pool(relu_conv1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME', name='pool_layer1')
    # Local Response Normalization
    norm1 = tf.nn.lrn(pool1, depth_radius=5, bias=2.0, alpha=1e-3, beta=0.75, name='norm1')

    # Second Convolutional Layer
    with tf.variable_scope('conv2') as scope:
        # Conv kernel is 5x5, across all prior 64 features and we create 64 more features
        conv2_kernel = truncated_normal_var(name='conv_kernel2', shape=[5, 5, 64, 64], dtype=tf.float32)
        # Convolve filter across prior output with stride size of 1
        conv2 = tf.nn.conv2d(norm1, conv2_kernel, [1, 1, 1, 1], padding='SAME')
        # Initialize and add the bias
        conv2_bias = zero_var(name='conv_bias2', shape=[64], dtype=tf.float32)
        conv2_add_bias = tf.nn.bias_add(conv2, conv2_bias)
        # ReLU element wise
        relu_conv2 = tf.nn.relu(conv2_add_bias)
    # Max Pooling
    pool2 = tf.nn.max_pool(relu_conv2, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME', name='pool_layer2')
    # Local Response Normalization (parameters from paper)
    norm2 = tf.nn.lrn(pool2, depth_radius=5, bias=2.0, alpha=1e-3, beta=0.75, name='norm2')

    # Reshape output into a single matrix for multiplication for the fully connected layers
    reshaped_output = tf.reshape(norm2, [batch_size, -1])
    reshaped_dim = reshaped_output.get_shape()[1].value

    # First Fully Connected Layer
    with tf.variable_scope('full1') as scope:
        # Fully connected layer will have 384 outputs.
        full_weight1 = truncated_normal_var(name='full_mult1', shape=[reshaped_dim, 384], dtype=tf.float32)
        full_bias1 = zero_var(name='full_bias1', shape=[384], dtype=tf.float32)
        full_layer1 = tf.nn.relu(tf.add(tf.matmul(reshaped_output, full_weight1), full_bias1))

    # Second Fully Connected Layer
    with tf.variable_scope('full2') as scope:
        # Second fully connected layer has 192 outputs.
        full_weight2 = truncated_normal_var(name='full_mult2', shape=[384, 192], dtype=tf.float32)
        full_bias2 = zero_var(name='full_bias2', shape=[192], dtype=tf.float32)
        full_layer2 = tf.nn.relu(tf.add(tf.matmul(full_layer1, full_weight2), full_bias2))

    # Final Fully Connected Layer -> 10 categories for output (num_targets)
    with tf.variable_scope('full3') as scope:
        # Final fully connected layer has 10 (num_targets) outputs.
        full_weight3 = truncated_normal_var(name='full_mult3', shape=[192, num_targets], dtype=tf.float32)
        full_bias3 = zero_var(name='full_bias3', shape=[num_targets], dtype=tf.float32)
        final_output = tf.add(tf.matmul(full_layer2, full_weight3), full_bias3)

    return(final_output)[/mw_shl_code]

Our local response normalization parameters are taken from the paper referenced in See also (3).
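It can help to trace the tensor sizes through the model by hand. The sketch below assumes the standard rule that a 'SAME'-padded convolution or pooling op produces ceil(size / stride) outputs per spatial dimension, and that local response normalization leaves the shape unchanged; `same_padding_output` is an illustrative helper, not a TensorFlow function.

```python
import math

def same_padding_output(size, stride):
    """Spatial output size of a 'SAME'-padded convolution or pooling op."""
    return math.ceil(size / stride)

size = 24  # crop_height == crop_width == 24
for layer in ('conv1', 'conv2'):
    size = same_padding_output(size, stride=1)  # 5x5 convolution, stride 1
    size = same_padding_output(size, stride=2)  # 3x3 max pool, stride 2
    print(layer, 'block output:', size, 'x', size, 'x 64')

# Flattened width seen by the first fully connected layer
reshaped_dim = size * size * 64
print('reshaped_dim =', reshaped_dim)
```

Under these assumptions the 24x24 crop shrinks to 12x12 and then 6x6, so the first fully connected weight matrix has shape [2304, 384].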

9. Now we'll create the loss function. We use softmax cross entropy because a picture belongs to exactly one category, so the output should be a probability distribution over the ten targets:

[mw_shl_code=python,true]def cifar_loss(logits, targets):
    # Get rid of extra dimensions and cast targets into integers
    targets = tf.squeeze(tf.cast(targets, tf.int32))
    # Calculate cross entropy from logits and targets
    # (keyword arguments avoid depending on the positional order)
    cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=logits, labels=targets)
    # Take the average loss across batch size
    cross_entropy_mean = tf.reduce_mean(cross_entropy)
    return(cross_entropy_mean)[/mw_shl_code]
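For intuition, the same loss can be computed by hand on small lists. This is a plain-Python sketch of sparse softmax cross entropy, assuming integer class labels; the function names are illustrative and the code is not the TensorFlow implementation.

```python
import math

def sparse_softmax_cross_entropy(logits, target):
    """Cross entropy between softmax(logits) and an integer class label."""
    shift = max(logits)  # subtract the max for numerical stability
    log_sum_exp = shift + math.log(sum(math.exp(z - shift) for z in logits))
    return log_sum_exp - logits[target]

def mean_loss(batch_logits, batch_targets):
    """Average the per-example losses across the batch."""
    losses = [sparse_softmax_cross_entropy(l, t)
              for l, t in zip(batch_logits, batch_targets)]
    return sum(losses) / len(losses)

uniform = [0.0] * 10      # an untrained model that has no preference
confident = [0.0] * 10
confident[3] = 10.0       # a model that strongly prefers class 3
print(sparse_softmax_cross_entropy(uniform, 3))
print(sparse_softmax_cross_entropy(confident, 3))
print(mean_loss([uniform, confident], [3, 3]))
```

A model with no preference among ten classes scores ln(10), about 2.30, which is a useful reference point for the loss at the start of training.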

10. Next, we declare our training step. The learning rate decreases as an exponential step function:

[mw_shl_code=python,true]def train_step(loss_value, generation_num):
    # Our learning rate is an exponential decay (stepped down)
    model_learning_rate = tf.train.exponential_decay(learning_rate, generation_num,
                                                     num_gens_to_wait, lr_decay, staircase=True)
    # Create optimizer
    my_optimizer = tf.train.GradientDescentOptimizer(model_learning_rate)
    # Initialize train step; passing global_step increments generation_num on
    # every update, without which the learning rate would never decay
    train_step = my_optimizer.minimize(loss_value, global_step=generation_num)
    return(train_step)[/mw_shl_code]

11. We also need an accuracy function that calculates the accuracy across a batch of images. We input the logits and the target vector, and output the averaged accuracy. We can then use this for both the training and the test batches:

[mw_shl_code=python,true]def accuracy_of_batch(logits, targets):
    # Make sure targets are integers and drop extra dimensions
    targets = tf.squeeze(tf.cast(targets, tf.int32))
    # Get predicted values by finding which logit is the greatest
    batch_predictions = tf.cast(tf.argmax(logits, 1), tf.int32)
    # Check if they are equal across the batch
    predicted_correctly = tf.equal(batch_predictions, targets)
    # Average the 1's and 0's (True's and False's) across the batch size
    accuracy = tf.reduce_mean(tf.cast(predicted_correctly, tf.float32))
    return(accuracy)[/mw_shl_code]
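The same argmax-and-compare logic can be checked on a toy batch. The sketch below uses plain Python lists in place of tensors, and the logits are made-up numbers chosen for illustration.

```python
def accuracy_of_batch(batch_logits, batch_targets):
    """Fraction of rows whose largest logit sits at the target index."""
    predictions = [max(range(len(row)), key=row.__getitem__) for row in batch_logits]
    correct = [int(p == t) for p, t in zip(predictions, batch_targets)]
    return sum(correct) / len(correct)

toy_logits = [
    [0.1, 2.0, -1.0],   # predicts class 1
    [3.0, 0.2, 0.1],    # predicts class 0
    [0.0, 0.5, 0.4],    # predicts class 1
    [1.0, 1.5, 2.5],    # predicts class 2
]
toy_targets = [1, 0, 2, 2]
print(accuracy_of_batch(toy_logits, toy_targets))
```

Because each evaluation uses a single batch of 128 images, the reported test accuracy can only move in steps of 1/128; for example, 103 correct predictions print as 80.47% and 107 as 83.59%.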

12. Now that we have an input_pipeline() function, we can initialize both the training image pipeline and the test image pipeline:

[mw_shl_code=python,true]images, targets = input_pipeline(batch_size, train_logical=True)
test_images, test_targets = input_pipeline(batch_size, train_logical=False)[/mw_shl_code]

13. Next, we'll initialize the model for the training output and the test output. Note that we must call scope.reuse_variables() after we create the training model so that, when we declare the model for the test network, it uses the same model parameters:

[mw_shl_code=python,true]with tf.variable_scope('model_definition') as scope:
    # Declare the training network model
    model_output = cifar_cnn_model(images, batch_size)
    # Use same variables within scope
    scope.reuse_variables()
    # Declare test model output
    test_output = cifar_cnn_model(test_images, batch_size)[/mw_shl_code]

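14. We can now initialize our loss and test accuracy functions. Then we'll declare the generation variable. This variable needs to be declared as non-trainable and passed to our training function, which uses it in the exponential decay calculation for the learning rate:

[mw_shl_code=python,true]loss = cifar_loss(model_output, targets)
accuracy = accuracy_of_batch(test_output, test_targets)
generation_num = tf.Variable(0, trainable=False)
train_op = train_step(loss, generation_num)[/mw_shl_code]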

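15. We'll now initialize all of the model's variables and then start the image pipeline by running the TensorFlow function start_queue_runners(). When we run the training or test model output, the pipeline feeds in a batch of images in place of a feed dictionary:

[mw_shl_code=python,true]# tf.initialize_all_variables() was later deprecated in favor of tf.global_variables_initializer()
init = tf.initialize_all_variables()
sess.run(init)
tf.train.start_queue_runners(sess=sess)[/mw_shl_code]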

16. We now loop through our training generations, saving the training loss and the test accuracy:

[mw_shl_code=python,true]train_loss = []
test_accuracy = []
for i in range(generations):
    _, loss_value = sess.run([train_op, loss])
    if (i+1) % output_every == 0:
        train_loss.append(loss_value)
        output = 'Generation {}: Loss = {:.5f}'.format((i+1), loss_value)
        print(output)
    if (i+1) % eval_every == 0:
        [temp_accuracy] = sess.run([accuracy])
        test_accuracy.append(temp_accuracy)
        acc_output = ' --- Test Accuracy = {:.2f}%.'.format(100.*temp_accuracy)
        print(acc_output)[/mw_shl_code]

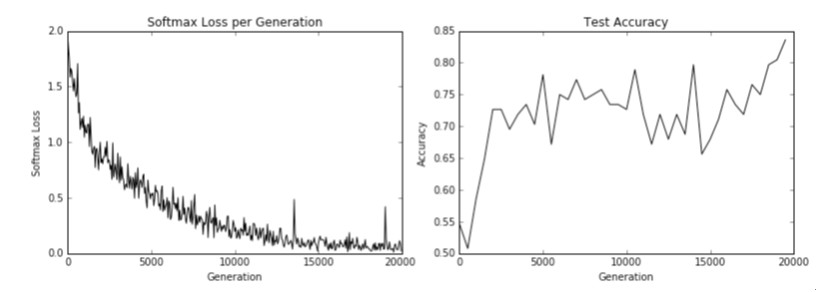

17. This results in the following output:

[mw_shl_code=python,true]Generation 19500: Loss = 0.04461
 --- Test Accuracy = 80.47%.
Generation 19550: Loss = 0.01171
Generation 19600: Loss = 0.06911
Generation 19650: Loss = 0.08629
Generation 19700: Loss = 0.05296
Generation 19750: Loss = 0.03462
Generation 19800: Loss = 0.03182
Generation 19850: Loss = 0.07092
Generation 19900: Loss = 0.11342
Generation 19950: Loss = 0.08751
Generation 20000: Loss = 0.02228
 --- Test Accuracy = 83.59%.[/mw_shl_code]

18. Finally, here is some matplotlib code that plots the loss and the test accuracy over the course of training:

[mw_shl_code=python,true]eval_indices = range(0, generations, eval_every)
output_indices = range(0, generations, output_every)

# Plot loss over time
plt.plot(output_indices, train_loss, 'k-')
plt.title('Softmax Loss per Generation')
plt.xlabel('Generation')
plt.ylabel('Softmax Loss')
plt.show()

# Plot accuracy over time
plt.plot(eval_indices, test_accuracy, 'k-')
plt.title('Test Accuracy')
plt.xlabel('Generation')
plt.ylabel('Accuracy')
plt.show()[/mw_shl_code]

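Figure 5: The training loss is on the left and the test accuracy is on the right. For the CIFAR-10 image recognition CNN, we were able to achieve a model that reaches around 75% accuracy on the test set.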

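How it works…

After we downloaded the CIFAR-10 data, we established an image pipeline instead of using a feed dictionary. For more information on the image pipeline, please see the official TensorFlow CIFAR-10 tutorial. We used this training and test pipeline to try to predict the correct category of the images. By the end, the model had achieved around 75% accuracy on the test set.

See also

For more information about the CIFAR-10 dataset, please see Learning Multiple Layers of Features from Tiny Images, Alex Krizhevsky, 2009. https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf

To see the original TensorFlow code, visit https://github.com/tensorflow/te ... odels/image/cifar10

For more on local response normalization, please see ImageNet Classification with Deep Convolutional Neural Networks, Krizhevsky, A., et al., 2012. http://paper.nips.cc/paper/4824- ... nal-neural-networks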


Original text:

Implementing an Advanced CNN

It is important to be able to extend CNN models for image recognition so that we understand how to increase the depth of the network. This may increase the accuracy of our predictions if we have enough data. Extending the depth of CNN networks is done in a standard fashion: we just repeat the convolution, maxpool, ReLU series until we are satisfied with the depth. Many of the more accurate image recognition networks operate in this fashion.

Getting ready

In this recipe, we will implement a more advanced method of reading image data and use a larger CNN to do image recognition on the CIFAR10 dataset (https://www.cs.toronto.edu/~kriz/cifar.html). This dataset has 60,000 32x32 images that fall into exactly one of ten possible classes. The potential classes for the images are airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck. You can also refer to the first bullet point of the See also section.

Most image datasets will be too large to fit into memory. What we can do with TensorFlow is set up an image pipeline to read in a batch at a time from a file. We do this by essentially setting up an image reader and then creating a batch queue that operates on the image reader.

Also, with image recognition data, it is common to randomly perturb the image before sending it through for training. Here, we will randomly crop, flip, and change the brightness.

This recipe is an adapted version of the official TensorFlow CIFAR-10 tutorial, which is available under the See also section at the end of this chapter. We have condensed the tutorial into one script and will go through it line by line and explain all the code that is necessary. We also revert some constants and parameters to the original cited paper values, which we will point out in the appropriate steps.

How to do it…

1、 To start with, we load the necessary libraries and start a graph session:

import os
import sys
import tarfile
import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
from six.moves import urllib

sess = tf.Session()

2、 Now we'll declare some of the model parameters. Our batch size will be 128 (for train and test). We will output a status every 50 generations and run for a total of 20,000 generations. Every 500 generations, we'll evaluate on a batch of the test data. We'll then declare some image parameters, height and width, and what size the random cropped images will take. There are three channels (red, green, and blue), and we have ten different targets. Then, we'll declare where we will store the data and image batches from the queue:

batch_size = 128
output_every = 50
generations = 20000
eval_every = 500
image_height = 32
image_width = 32
crop_height = 24
crop_width = 24
num_channels = 3
num_targets = 10
data_dir = 'temp'
extract_folder = 'cifar-10-batches-bin'

3、 It is recommended to lower the learning rate as we progress towards a good model, so we will exponentially decrease the learning rate: the initial learning rate will be set at 0.1, and we will multiply it by 0.9 (a 10% reduction) every 250 generations. The exact formula is 0.1 * 0.9^(x/250), where x is the current generation number. The default is for this to continually decrease, but TensorFlow does accept a staircase argument which only updates the learning rate at discrete intervals, here once every 250 generations:

learning_rate = 0.1
lr_decay = 0.9
num_gens_to_wait = 250.

4、 Now we'll set up parameters so that we can read in the binary CIFAR-10 images:

image_vec_length = image_height * image_width * num_channels
record_length = 1 + image_vec_length

5、 Next, we'll set up the data directory and the URL to download the CIFAR-10 images, if we don't have them already:

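data_dir = 'temp'
if not os.path.exists(data_dir):
    os.makedirs(data_dir)
cifar10_url = 'http://www.cs.toronto.edu/~kriz/cifar-10-binary.tar.gz'
data_file = os.path.join(data_dir, 'cifar-10-binary.tar.gz')

# The original passes a `progress` hook without defining it;
# this minimal reporting hook is an assumption added so the call runs.
def progress(block_num, block_size, total_size):
    if total_size > 0:
        percent = min(100., 100. * block_num * block_size / total_size)
        sys.stdout.write('\rDownloading: {:.1f}%'.format(percent))
        sys.stdout.flush()

if not os.path.isfile(data_file):
    # Download file
    filepath, _ = urllib.request.urlretrieve(cifar10_url, data_file, progress)
    # Extract file
    tarfile.open(filepath, 'r:gz').extractall(data_dir)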

6、 We'll set up the record reader and return a randomly distorted image with the following read_cifar_files() function. First, we need to declare a record reader object that will read in a fixed length of bytes. After we read the image queue, we'll split apart the image and label. Finally, we will randomly distort the image with TensorFlow's built in image modification functions:

def read_cifar_files(filename_queue, distort_images=True):
    reader = tf.FixedLengthRecordReader(record_bytes=record_length)
    key, record_string = reader.read(filename_queue)
    record_bytes = tf.decode_raw(record_string, tf.uint8)
    # Extract label
    image_label = tf.cast(tf.slice(record_bytes, [0], [1]), tf.int32)
    # Extract image
    image_extracted = tf.reshape(tf.slice(record_bytes, [1], [image_vec_length]),
                                 [num_channels, image_height, image_width])
    # Reshape image
    image_uint8image = tf.transpose(image_extracted, [1, 2, 0])
    reshaped_image = tf.cast(image_uint8image, tf.float32)
    # Crop image (this call takes the target height, then the width, and crops centrally)
    final_image = tf.image.resize_image_with_crop_or_pad(reshaped_image, crop_height, crop_width)
    if distort_images:
        # Randomly flip the image horizontally, change the brightness and contrast
        final_image = tf.image.random_flip_left_right(final_image)
        final_image = tf.image.random_brightness(final_image, max_delta=63)
        final_image = tf.image.random_contrast(final_image, lower=0.2, upper=1.8)
    # Normalize whitening (renamed tf.image.per_image_standardization in TensorFlow 1.0)
    final_image = tf.image.per_image_whitening(final_image)
    return(final_image, image_label)

7、 Now we'll declare a function that will populate our image pipeline for the batch processor to use. We first need to set up the file list of images we want to read through, and to define how to read them with an input producer object, created through prebuilt TensorFlow functions. The input producer can be passed into the reading function that we created in the preceding step, read_cifar_files(). We'll then set a batch reader on the queue, shuffle_batch():

def input_pipeline(batch_size, train_logical=True):
    if train_logical:
        files = [os.path.join(data_dir, extract_folder, 'data_batch_{}.bin'.format(i)) for i in range(1, 6)]
    else:
        files = [os.path.join(data_dir, extract_folder, 'test_batch.bin')]
    filename_queue = tf.train.string_input_producer(files)
    image, label = read_cifar_files(filename_queue)
    min_after_dequeue = 1000
    capacity = min_after_dequeue + 3 * batch_size
    example_batch, label_batch = tf.train.shuffle_batch([image, label], batch_size, capacity, min_after_dequeue)
    return(example_batch, label_batch)

It is important to set the min_after_dequeue properly. This parameter is responsible for setting the minimum size of an image buffer for sampling. The official TensorFlow documentation recommends setting it to (#threads + error margin)*batch_size. Note that setting it to a larger size results in more uniform shuffling, as it is shuffling from a larger set of data in the queue, but that more memory will also be used in the process.

8、 Next, we can declare our model function. The model we will use has two convolutional layers, followed by three fully connected layers. To make variable declaration easier, we'll start by declaring two variable functions. The two convolutional layers will create 64 features each. The first fully connected layer will connect the 2nd convolutional layer with 384 hidden nodes. The second fully connected operation will connect those 384 hidden nodes to 192 hidden nodes. The final hidden layer operation will then connect the 192 nodes to the 10 output classes we are trying to predict. See the following inline comments marked with #:

def cifar_cnn_model(input_images, batch_size, train_logical=True):
    def truncated_normal_var(name, shape, dtype):
        return(tf.get_variable(name=name, shape=shape, dtype=dtype,
                               initializer=tf.truncated_normal_initializer(stddev=0.05)))

    def zero_var(name, shape, dtype):
        return(tf.get_variable(name=name, shape=shape, dtype=dtype,
                               initializer=tf.constant_initializer(0.0)))

    # First Convolutional Layer
    with tf.variable_scope('conv1') as scope:
        # Conv_kernel is 5x5 for all 3 colors and we will create 64 features
        conv1_kernel = truncated_normal_var(name='conv_kernel1', shape=[5, 5, 3, 64], dtype=tf.float32)
        # We convolve across the image with a stride size of 1
        conv1 = tf.nn.conv2d(input_images, conv1_kernel, [1, 1, 1, 1], padding='SAME')
        # Initialize and add the bias term
        conv1_bias = zero_var(name='conv_bias1', shape=[64], dtype=tf.float32)
        conv1_add_bias = tf.nn.bias_add(conv1, conv1_bias)
        # ReLU element wise
        relu_conv1 = tf.nn.relu(conv1_add_bias)
    # Max Pooling
    pool1 = tf.nn.max_pool(relu_conv1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME', name='pool_layer1')
    # Local Response Normalization
    norm1 = tf.nn.lrn(pool1, depth_radius=5, bias=2.0, alpha=1e-3, beta=0.75, name='norm1')

    # Second Convolutional Layer
    with tf.variable_scope('conv2') as scope:
        # Conv kernel is 5x5, across all prior 64 features and we create 64 more features
        conv2_kernel = truncated_normal_var(name='conv_kernel2', shape=[5, 5, 64, 64], dtype=tf.float32)
        # Convolve filter across prior output with stride size of 1
        conv2 = tf.nn.conv2d(norm1, conv2_kernel, [1, 1, 1, 1], padding='SAME')
        # Initialize and add the bias
        conv2_bias = zero_var(name='conv_bias2', shape=[64], dtype=tf.float32)
        conv2_add_bias = tf.nn.bias_add(conv2, conv2_bias)
        # ReLU element wise
        relu_conv2 = tf.nn.relu(conv2_add_bias)
    # Max Pooling
    pool2 = tf.nn.max_pool(relu_conv2, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME', name='pool_layer2')
    # Local Response Normalization (parameters from paper)
    norm2 = tf.nn.lrn(pool2, depth_radius=5, bias=2.0, alpha=1e-3, beta=0.75, name='norm2')

    # Reshape output into a single matrix for multiplication for the fully connected layers
    reshaped_output = tf.reshape(norm2, [batch_size, -1])
    reshaped_dim = reshaped_output.get_shape()[1].value

    # First Fully Connected Layer
    with tf.variable_scope('full1') as scope:
        # Fully connected layer will have 384 outputs.
        full_weight1 = truncated_normal_var(name='full_mult1', shape=[reshaped_dim, 384], dtype=tf.float32)
        full_bias1 = zero_var(name='full_bias1', shape=[384], dtype=tf.float32)
        full_layer1 = tf.nn.relu(tf.add(tf.matmul(reshaped_output, full_weight1), full_bias1))

    # Second Fully Connected Layer
    with tf.variable_scope('full2') as scope:
        # Second fully connected layer has 192 outputs.
        full_weight2 = truncated_normal_var(name='full_mult2', shape=[384, 192], dtype=tf.float32)
        full_bias2 = zero_var(name='full_bias2', shape=[192], dtype=tf.float32)
        full_layer2 = tf.nn.relu(tf.add(tf.matmul(full_layer1, full_weight2), full_bias2))

    # Final Fully Connected Layer -> 10 categories for output (num_targets)
    with tf.variable_scope('full3') as scope:
        # Final fully connected layer has 10 (num_targets) outputs.
        full_weight3 = truncated_normal_var(name='full_mult3', shape=[192, num_targets], dtype=tf.float32)
        full_bias3 = zero_var(name='full_bias3', shape=[num_targets], dtype=tf.float32)
        final_output = tf.add(tf.matmul(full_layer2, full_weight3), full_bias3)

    return(final_output)

Our local response normalization parameters are taken from the paper and are referenced in See also (3).

9、 Now we'll create the loss function. We will use the softmax function because a picture can only take on exactly one category, so the output should be a probability distribution over the ten targets:

def cifar_loss(logits, targets):
    # Get rid of extra dimensions and cast targets into integers
    targets = tf.squeeze(tf.cast(targets, tf.int32))
    # Calculate cross entropy from logits and targets
    # (keyword arguments avoid depending on the positional order)
    cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=logits, labels=targets)
    # Take the average loss across batch size
    cross_entropy_mean = tf.reduce_mean(cross_entropy)
    return(cross_entropy_mean)

10、 Next, we declare our training step. The learning rate will decrease in an exponential step function:

def train_step(loss_value, generation_num):
    # Our learning rate is an exponential decay (stepped down)
    model_learning_rate = tf.train.exponential_decay(learning_rate, generation_num,
                                                     num_gens_to_wait, lr_decay, staircase=True)
    # Create optimizer
    my_optimizer = tf.train.GradientDescentOptimizer(model_learning_rate)
    # Initialize train step; passing global_step increments generation_num on
    # every update, without which the learning rate would never decay
    train_step = my_optimizer.minimize(loss_value, global_step=generation_num)
    return(train_step)

11、 We must also have an accuracy function that calculates the accuracy across a batch of images. We'll input the logits and target vectors, and output an averaged accuracy. We can then use this for both the train and test batches:

def accuracy_of_batch(logits, targets):
    # Make sure targets are integers and drop extra dimensions
    targets = tf.squeeze(tf.cast(targets, tf.int32))
    # Get predicted values by finding which logit is the greatest
    batch_predictions = tf.cast(tf.argmax(logits, 1), tf.int32)
    # Check if they are equal across the batch
    predicted_correctly = tf.equal(batch_predictions, targets)
    # Average the 1's and 0's (True's and False's) across the batch size
    accuracy = tf.reduce_mean(tf.cast(predicted_correctly, tf.float32))
    return(accuracy)

12、Now that we have an input_pipeline() function, we can initialize both the training image pipeline and the test image pipeline:

images, targets = input_pipeline(batch_size, train_logical=True)
test_images, test_targets = input_pipeline(batch_size, train_logical=False)

13、 Next, we'll initialize the model for the training output and the test output. It is important to note that we must declare scope.reuse_variables() after we create the training model so that, when we declare the model for the test network, it will use the same model parameters:

with tf.variable_scope('model_definition') as scope:
    # Declare the training network model
    model_output = cifar_cnn_model(images, batch_size)
    # Use same variables within scope
    scope.reuse_variables()
    # Declare test model output
    test_output = cifar_cnn_model(test_images, batch_size)

14、 We can now initialize our loss and test accuracy functions. Then we'll declare the generation variable. This variable needs to be declared as non-trainable, and passed to our training function that uses it in the learning rate exponential decay calculation:

loss = cifar_loss(model_output, targets)
accuracy = accuracy_of_batch(test_output, test_targets)
generation_num = tf.Variable(0, trainable=False)
train_op = train_step(loss, generation_num)

15、 We'll now initialize all of the model's variables and then start the image pipeline by running the TensorFlow function, start_queue_runners(). When we start the train or test model output, the pipeline will feed in a batch of images in place of a feed dictionary:

# tf.initialize_all_variables() was later deprecated in favor of tf.global_variables_initializer()
init = tf.initialize_all_variables()
sess.run(init)
tf.train.start_queue_runners(sess=sess)

16、 We now loop through our training generations and save the training loss and the test accuracy:

train_loss = []
test_accuracy = []
for i in range(generations):
    _, loss_value = sess.run([train_op, loss])
    if (i+1) % output_every == 0:
        train_loss.append(loss_value)
        output = 'Generation {}: Loss = {:.5f}'.format((i+1), loss_value)
        print(output)
    if (i+1) % eval_every == 0:
        [temp_accuracy] = sess.run([accuracy])
        test_accuracy.append(temp_accuracy)
        acc_output = ' --- Test Accuracy = {:.2f}%.'.format(100.*temp_accuracy)
        print(acc_output)

17、This results in the following output:

Generation 19500: Loss = 0.04461
 --- Test Accuracy = 80.47%.
Generation 19550: Loss = 0.01171
Generation 19600: Loss = 0.06911
Generation 19650: Loss = 0.08629
Generation 19700: Loss = 0.05296
Generation 19750: Loss = 0.03462
Generation 19800: Loss = 0.03182
Generation 19850: Loss = 0.07092
Generation 19900: Loss = 0.11342
Generation 19950: Loss = 0.08751
Generation 20000: Loss = 0.02228
 --- Test Accuracy = 83.59%.

18、Finally, here is some matplotlib code that will plot the loss and test accuracy over the course of the training:

eval_indices = range(0, generations, eval_every)
output_indices = range(0, generations, output_every)

# Plot loss over time
plt.plot(output_indices, train_loss, 'k-')
plt.title('Softmax Loss per Generation')
plt.xlabel('Generation')
plt.ylabel('Softmax Loss')
plt.show()

# Plot accuracy over time
plt.plot(eval_indices, test_accuracy, 'k-')
plt.title('Test Accuracy')
plt.xlabel('Generation')
plt.ylabel('Accuracy')
plt.show()

Figure 5: The training loss is on the left and the test accuracy is on the right. For the CIFAR-10 image recognition CNN, we were able to achieve a model that reaches around 75% accuracy on the test set.

How it works…

After we downloaded the CIFAR-10 data, we established an image pipeline instead of using a feed dictionary. For more information on the image pipeline, please see the official TensorFlow CIFAR-10 tutorials. We used this train and test pipeline to try to predict the correct category of the images. By the end, the model had achieved around 75% accuracy on the test set.

See also

For more information about the CIFAR-10 dataset, please see Learning Multiple Layers of Features from Tiny Images, Alex Krizhevsky, 2009. https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf

To see original TensorFlow code, visit https://github.com/tensorflow/tensorflow/tree/r0.11/tensorflow/models/image/cifar10

For more on local response normalization, please see ImageNet Classification with Deep Convolutional Neural Networks, Krizhevsky, A., et al., 2012. http://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks
