Last edited by levycui on 2019-12-18 19:57

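Questions covered:

1. How does the MultiRNNCell() function, which accepts a list of RNN cells, make multiple layers easy to implement?

2. How do you create a multi-layer RNN model with TensorFlow's MultiRNNCell() function?

3. How can RNN sequences be trained to predict other sequences of variable length?

4. How is the get_batch() function of TensorFlow's sequence-to-sequence model used?

Previous: TensorFlow ML Cookbook, Chapter 9, Section 2: Implementing an LSTM Model

Stacking multiple LSTM layers

Just as we can increase the depth of neural networks or CNNs, we can increase the depth of RNNs. In this recipe, we apply a three-layer-deep LSTM to improve our Shakespeare language generation.

Getting ready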

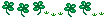

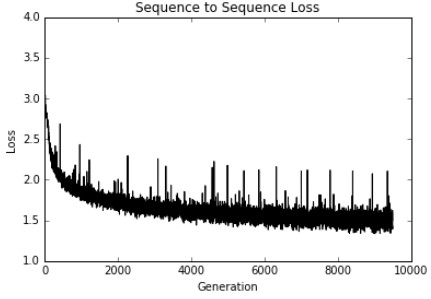

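We can increase the depth of recurrent neural networks by stacking them on top of each other. Essentially, we take the outputs of one network and feed them into another network as its inputs. To get an idea of how this works for just two layers, see Figure 5:

Figure 5: In these figures, we have extended the one-layer RNNs to have two layers. For the original one-layer versions, see the figures in the prior chapter introduction.

TensorFlow makes multiple layers easy to implement with the MultiRNNCell() function, which accepts a list of RNN cells. Because of this, a multi-layer RNN can be created from one cell in Python with MultiRNNCell([rnn_cell] * num_layers).

For this recipe, we perform the same Shakespeare prediction as in the previous recipe, with two changes. The first is a three-layer stacked LSTM model instead of a single layer. The second is character-level prediction instead of word-level prediction. Character-level prediction greatly reduces our potential vocabulary, to only 40 characters (26 letters, 10 digits, 1 whitespace character, and 3 special characters).

How to do it…

Rather than relisting all of the same code, we illustrate where the code in this section differs from the previous section. For the full code, see the GitHub repository at https://github.com/nfmcclure/tensorflow_cookbook: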

1. First, we set the number of layers for the model. We put this parameter at the beginning of the script, with the other model parameters:

[mw_shl_code=python,true]num_layers = 3
min_word_freq = 5
rnn_size = 128
epochs = 10[/mw_shl_code]

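2. The first major change is that we load, process, and feed the text by characters, not by words. To do this, after cleaning the text, we separate the whole text into characters with Python's list() function:

[mw_shl_code=python,true]# Assumes `re` is imported and that `punctuation` and `s_text` are
# defined earlier in the script, as in the previous recipe.
s_text = re.sub(r'[{}]'.format(punctuation), ' ', s_text)
s_text = re.sub(r'\s+', ' ', s_text).strip().lower()
# Split up by characters
char_list = list(s_text)[/mw_shl_code]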
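To make the character-level split concrete, here is a minimal, self-contained sketch. The sample sentence, the use of `string.punctuation`, and the names `sample_text`, `char2ix`, and `encoded` are illustrative assumptions and are not taken from the recipe's script.

```python
import re
import string

# Hypothetical sample text standing in for the Shakespeare corpus.
sample_text = "To be, or not to be:\n  that is the question."

# Replace punctuation with spaces, collapse whitespace, and lowercase.
cleaned = re.sub(r'[{}]'.format(re.escape(string.punctuation)), ' ', sample_text)
cleaned = re.sub(r'\s+', ' ', cleaned).strip().lower()

# Split the cleaned text into individual characters.
char_list = list(cleaned)

# Map each distinct character to an integer index (sorted for reproducibility).
char2ix = {ch: ix for ix, ch in enumerate(sorted(set(char_list)))}
encoded = [char2ix[ch] for ch in char_list]

print(cleaned)
print(len(char2ix), 'distinct characters')
```

Because the vocabulary is built from single characters, it stays very small no matter how large the corpus is, which is the point the recipe makes about character-level prediction.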

3. We now need to change our LSTM model to have multiple layers. We take in the num_layers variable and create a multi-layer RNN model with TensorFlow's MultiRNNCell() function, as shown here:

[mw_shl_code=python,true]class LSTM_Model():
    def __init__(self, rnn_size, num_layers, batch_size, learning_rate,
                 training_seq_len, vocab_size, infer_sample=False):
        self.rnn_size = rnn_size
        self.num_layers = num_layers
        self.vocab_size = vocab_size
        self.infer_sample = infer_sample
        self.learning_rate = learning_rate
        …
        self.lstm_cell = tf.nn.rnn_cell.BasicLSTMCell(rnn_size)
        # Stack num_layers copies of the cell into one multi-layer cell
        self.lstm_cell = tf.nn.rnn_cell.MultiRNNCell([self.lstm_cell] * self.num_layers)
        self.initial_state = self.lstm_cell.zero_state(self.batch_size, tf.float32)
        self.x_data = tf.placeholder(tf.int32, [self.batch_size, self.training_seq_len])
        self.y_output = tf.placeholder(tf.int32, [self.batch_size, self.training_seq_len])[/mw_shl_code]

Note that TensorFlow's MultiRNNCell() function accepts a list of RNN cells. In this project, the RNN layers are all the same, but you can pass a list of whichever RNN layers you would like to stack on top of each other.
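The idea of passing a list of cells can be sketched without TensorFlow. The toy classes below (`TinyRNNCell` and `StackedCell`, both hypothetical names) are not TensorFlow's implementation. They only illustrate, under simplifying assumptions (scalar inputs and states, fixed weights), how each layer's output becomes the next layer's input and how the stacked state is a tuple of per-layer states.

```python
import math


class TinyRNNCell:
    """A toy scalar recurrent cell: h_new = tanh(w_x * x + w_h * h)."""

    def __init__(self, w_x, w_h):
        self.w_x = w_x
        self.w_h = w_h

    def zero_state(self):
        return 0.0

    def __call__(self, x, h):
        h_new = math.tanh(self.w_x * x + self.w_h * h)
        # For this simple cell, the output is the new hidden state.
        return h_new, h_new


class StackedCell:
    """Chains a list of cells: each layer's output is the next layer's input."""

    def __init__(self, cells):
        self.cells = list(cells)

    def zero_state(self):
        return tuple(cell.zero_state() for cell in self.cells)

    def __call__(self, x, states):
        new_states = []
        layer_input = x
        for cell, state in zip(self.cells, states):
            layer_input, new_state = cell(layer_input, state)
            new_states.append(new_state)
        return layer_input, tuple(new_states)


# One separate cell object per layer, so each layer has its own weights.
num_layers = 3
stack = StackedCell([TinyRNNCell(0.5, 0.1 * (i + 1)) for i in range(num_layers)])

state = stack.zero_state()
outputs = []
for x in [1.0, 0.0, -1.0]:
    out, state = stack(x, state)
    outputs.append(out)

print(outputs)
print(len(state), 'per-layer states')
```

Since the stack only requires each element to behave like a cell, the list could just as well mix different cell types, which mirrors the note above about stacking whichever layers you like.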

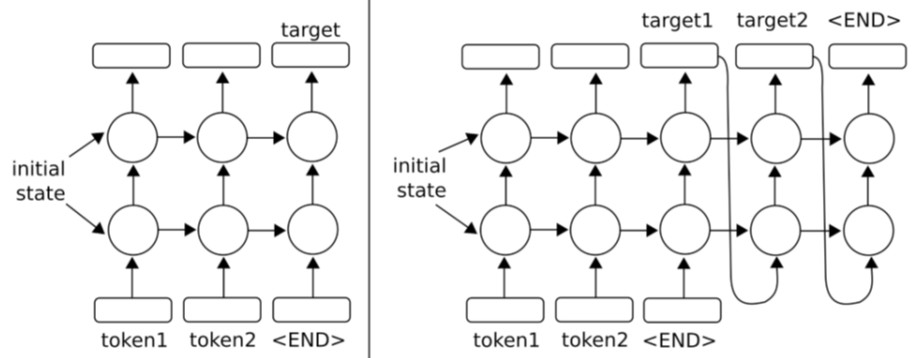

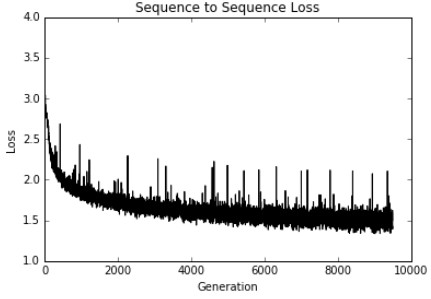

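4. Finally, here is how we plot the training loss over the generations:

[mw_shl_code=python,true]plt.plot(train_loss, 'k-')
plt.title('Sequence to Sequence Loss')
plt.xlabel('Generation')
plt.ylabel('Loss')
plt.show()[/mw_shl_code]

Figure 6: A plot of the training loss versus generations for the multi-layer LSTM Shakespeare model.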

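How it works…

TensorFlow makes it easy to extend an RNN layer to multiple layers with just a list of RNN cells. For this recipe, we used the same Shakespeare data as in the previous recipe, but processed it by characters instead of words. We fed it through a three-layer LSTM model to generate Shakespeare text. After only 10 epochs, the model was able to generate archaic, English-like words.

Creating sequence-to-sequence models

Since every RNN unit we use also has an output, we can train RNN sequences to predict other sequences of variable length. In this recipe, we take advantage of this fact to create an English-to-German translation model.

Getting ready

For this recipe, we attempt to build a language translation model that translates from English to German.

TensorFlow has a built-in model class for sequence-to-sequence training. We illustrate how to train and use it on downloaded English-German sentences. The data comes from a compiled ZIP file at http://www.manythings.org/, which compiles data from the Tatoeba Project (http://tatoeba.org/home). The data consists of tab-delimited English-German sentence pairs. For example, a row might contain hello. followed by a tab character (\t) and hallo. The data contains thousands of sentences of various lengths.

How to do it…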

1. We start by loading the necessary libraries and starting a graph session:

[mw_shl_code=python,true]import os
import string
import requests
import io
import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf
from zipfile import ZipFile
from collections import Counter
# Import path as used in the recipe (an early TensorFlow layout)
from tensorflow.models.rnn.translate import seq2seq_model
sess = tf.Session()[/mw_shl_code]

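2. Now we set the model parameters. We set the learning rate to 0.1 and decay it by 1% every 100 generations, which helps fine-tune the model in later generations. We also set a cut-off for the maximum gradient size. We use an RNN size of 500 and limit the English and German vocabularies to the 10,000 most frequent words. We lowercase both vocabularies and remove punctuation. We also normalize the German vocabulary so that the umlauts (ä, ë, ï, ö, and ü) and eszetts (ß) no longer appear. In the code in step 4, this is done by encoding the text to ASCII with errors='ignore', which drops these characters:

[mw_shl_code=python,true]learning_rate = 0.1
lr_decay_rate = 0.99
lr_decay_every = 100
max_gradient = 5.0
batch_size = 50
num_layers = 3
rnn_size = 500
layer_size = 512
generations = 10000
vocab_size = 10000
save_every = 1000
eval_every = 500
output_every = 50
punct = string.punctuation
data_dir = 'temp'
data_file = 'eng_ger.txt'
model_path = 'seq2seq_model'
full_model_dir = os.path.join(data_dir, model_path)[/mw_shl_code]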
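As a quick check on what "decay by 1% every 100 generations" means over a full run, the sketch below computes the learning rate implied by these parameters. The helper name `decayed_learning_rate` is hypothetical. It assumes the decay multiplies the rate by `lr_decay_rate` once per completed block of `lr_decay_every` generations, which matches how the training loop later applies the decay op.

```python
learning_rate = 0.1
lr_decay_rate = 0.99
lr_decay_every = 100
generations = 10000


def decayed_learning_rate(generation):
    """Learning rate in effect after `generation` completed training steps."""
    num_decays = generation // lr_decay_every
    return learning_rate * lr_decay_rate ** num_decays


for gen in (0, 100, 1000, generations):
    print(gen, round(decayed_learning_rate(gen), 5))
```

Under these assumptions the rate is multiplied by 0.99 a total of 100 times, so it ends at a bit more than a third of its starting value.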

3. We now set up three English phrases to test the translation model and see how it is performing:

[mw_shl_code=python,true]test_english = ['hello where is my computer',
                'the quick brown fox jumped over the lazy dog',
                'is it going to rain tomorrow'][/mw_shl_code]

4. Next, we create the model directories. We then check whether the data has already been downloaded, and either download and save it or load it from the file:

[mw_shl_code=python,true]if not os.path.exists(full_model_dir):
    os.makedirs(full_model_dir)
# Make data directory
if not os.path.exists(data_dir):
    os.makedirs(data_dir)
print('Loading English-German Data')
# Check for data, if it doesn't exist, download it and save it
if not os.path.isfile(os.path.join(data_dir, data_file)):
    print('Data not found, downloading Eng-Ger sentences from www.manythings.org')
    sentence_url = 'http://www.manythings.org/anki/deu-eng.zip'
    r = requests.get(sentence_url)
    z = ZipFile(io.BytesIO(r.content))
    file = z.read('deu.txt')
    # Format Data
    eng_ger_data = file.decode()
    # Drop any non-ASCII characters (umlauts, eszett, and so on)
    eng_ger_data = eng_ger_data.encode('ascii', errors='ignore')
    eng_ger_data = eng_ger_data.decode().split('\n')
    # Write to file
    with open(os.path.join(data_dir, data_file), 'w') as out_conn:
        for sentence in eng_ger_data:
            out_conn.write(sentence + '\n')
else:
    eng_ger_data = []
    with open(os.path.join(data_dir, data_file), 'r') as in_conn:
        for row in in_conn:
            eng_ger_data.append(row[:-1])[/mw_shl_code]
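The effect of the ASCII encoding step on German text can be seen in a small sketch. `drop_non_ascii` mirrors the `encode('ascii', errors='ignore')` call above. `transliterate_german` and its mapping table are a hypothetical alternative that is not part of the recipe, shown only to contrast dropping characters with spelling them out.

```python
def drop_non_ascii(text):
    """Mirror the recipe's approach: silently discard non-ASCII characters."""
    return text.encode('ascii', errors='ignore').decode()


# Hypothetical alternative: spell out German special characters instead.
TRANSLITERATION = {'ä': 'ae', 'ö': 'oe', 'ü': 'ue', 'ß': 'ss',
                   'Ä': 'Ae', 'Ö': 'Oe', 'Ü': 'Ue'}


def transliterate_german(text):
    """Replace umlauts and eszett with ASCII spellings, then drop the rest."""
    for char, replacement in TRANSLITERATION.items():
        text = text.replace(char, replacement)
    return drop_non_ascii(text)


sample = 'Die Straße ist schön'
print(drop_non_ascii(sample))        # Die Strae ist schn
print(transliterate_german(sample))  # Die Strasse ist schoen
```

Dropping characters keeps the pipeline simple but merges distinct words. Transliteration keeps them distinguishable, at the cost of a slightly larger preprocessing step.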

5. We process the data by removing punctuation, splitting the English and German sentences, and lowercasing everything:

[mw_shl_code=python,true]eng_ger_data = [''.join(char for char in sent if char not in punct) for sent in eng_ger_data]
# Split each sentence by tabs
eng_ger_data = [x.split('\t') for x in eng_ger_data if len(x)>=1]
[english_sentence, german_sentence] = [list(x) for x in zip(*eng_ger_data)]
english_sentence = [x.lower().split() for x in english_sentence]
german_sentence = [x.lower().split() for x in german_sentence][/mw_shl_code]

6. Now we create the English and German vocabularies, picking the most common words in each up to a vocabulary size of 10,000 (index 0 is reserved for unknown words). All remaining words are labeled 0, for unknown. This is sometimes not a limiting factor, since most of the infrequent words are proper nouns (names and places):

[mw_shl_code=python,true]all_english_words = [word for sentence in english_sentence for word in sentence]
all_english_counts = Counter(all_english_words)
# -1 because 0=unknown is also in there
eng_word_keys = [x[0] for x in all_english_counts.most_common(vocab_size-1)]
eng_vocab2ix = dict(zip(eng_word_keys, range(1,vocab_size)))
eng_ix2vocab = {val:key for key, val in eng_vocab2ix.items()}
english_processed = []
for sent in english_sentence:
    temp_sentence = []
    for word in sent:
        try:
            temp_sentence.append(eng_vocab2ix[word])
        except KeyError:
            temp_sentence.append(0)
    english_processed.append(temp_sentence)
all_german_words = [word for sentence in german_sentence for word in sentence]
all_german_counts = Counter(all_german_words)
ger_word_keys = [x[0] for x in all_german_counts.most_common(vocab_size-1)]
ger_vocab2ix = dict(zip(ger_word_keys, range(1,vocab_size)))
ger_ix2vocab = {val:key for key, val in ger_vocab2ix.items()}
german_processed = []
for sent in german_sentence:
    temp_sentence = []
    for word in sent:
        try:
            temp_sentence.append(ger_vocab2ix[word])
        except KeyError:
            temp_sentence.append(0)
    german_processed.append(temp_sentence)[/mw_shl_code]
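The same vocabulary logic is applied twice above, once per language. A compact sketch of the idea on a toy corpus follows. The helper names `build_vocab` and `encode` and the three sample sentences are assumptions made for illustration, and `dict.get` is swapped in for the try/except lookup.

```python
from collections import Counter


def build_vocab(tokenized_sentences, vocab_size):
    """Return (word -> index, index -> word), reserving index 0 for unknowns."""
    counts = Counter(word for sent in tokenized_sentences for word in sent)
    # Keep vocab_size - 1 real words, because index 0 means "unknown".
    keys = [word for word, _ in counts.most_common(vocab_size - 1)]
    vocab2ix = {word: ix for ix, word in enumerate(keys, start=1)}
    ix2vocab = {ix: word for word, ix in vocab2ix.items()}
    return vocab2ix, ix2vocab


def encode(sentence, vocab2ix):
    """Convert a list of words to indices, using 0 for out-of-vocabulary words."""
    return [vocab2ix.get(word, 0) for word in sentence]


sentences = [['the', 'dog', 'runs'], ['the', 'cat', 'runs'], ['the', 'fox']]
vocab2ix, ix2vocab = build_vocab(sentences, vocab_size=3)
print(vocab2ix)                                   # {'the': 1, 'runs': 2}
print(encode(['the', 'fox', 'runs'], vocab2ix))   # [1, 0, 2]
```

With a vocabulary size of 3, only the two most frequent words get their own index, and every other word collapses to 0.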

7. We also have to process the test sentences, converting the words in the sentences we chose into vocabulary indices:

[mw_shl_code=python,true]test_data = []
for sentence in test_english:
    temp_sentence = []
    for word in sentence.split(' '):
        try:
            temp_sentence.append(eng_vocab2ix[word])
        except KeyError:
            # Use '0' if the word isn't in our vocabulary
            temp_sentence.append(0)
    test_data.append(temp_sentence)[/mw_shl_code]

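8. Since some sentences are very short and some are very long, we want to create separate models for sentences of different lengths. One reason is to minimize the effect of the padding character on shorter sentences. One way to do this is to group the data into buckets of similar size. We chose the following cut-offs so that each bucket holds a similar number of sentences:

[mw_shl_code=python,true]x_maxs = [5, 7, 11, 50]
y_maxs = [10, 12, 17, 60]
buckets = [x for x in zip(x_maxs, y_maxs)]
bucketed_data = [[] for _ in range(len(x_maxs))]
for eng, ger in zip(english_processed, german_processed):
    # enumerate() supplies the bucket index ix alongside each (x_max, y_max) pair
    for ix, (x_max, y_max) in enumerate(buckets):
        if (len(eng) <= x_max) and (len(ger) <= y_max):
            bucketed_data[ix].append([eng, ger])
            break[/mw_shl_code]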
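The bucket assignment can be tried in isolation on made-up sentence pairs. In this sketch the helper names `find_bucket` and `bucket_pairs` and the sample pairs are hypothetical. Pairs that fit no bucket are simply skipped, which is also what the loop above does when no bucket matches.

```python
x_maxs = [5, 7, 11, 50]
y_maxs = [10, 12, 17, 60]
buckets = list(zip(x_maxs, y_maxs))


def find_bucket(source_len, target_len):
    """Return the index of the first bucket that fits, or None if none does."""
    for ix, (x_max, y_max) in enumerate(buckets):
        if source_len <= x_max and target_len <= y_max:
            return ix
    return None


def bucket_pairs(pairs):
    """Group (source, target) index lists by the smallest bucket that fits."""
    bucketed = [[] for _ in buckets]
    for source, target in pairs:
        ix = find_bucket(len(source), len(target))
        if ix is not None:
            bucketed[ix].append([source, target])
    return bucketed


pairs = [
    ([1, 2, 3], [4, 5]),      # fits the first bucket
    ([1] * 6, [2] * 11),      # source too long for bucket 0
    ([1] * 4, [2] * 15),      # target too long for buckets 0 and 1
    ([1] * 51, [2] * 3),      # source too long for every bucket
]
bucketed = bucket_pairs(pairs)
print([len(b) for b in bucketed])  # [1, 1, 1, 0]
```

Both lengths must fit, so a short English sentence with a long German translation still moves to a larger bucket.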

9. Now we use TensorFlow's built-in sequence-to-sequence model with our parameters to write a function that creates both a trainable model and a testable model sharing the same variables:

[mw_shl_code=python,true]def translation_model(sess, input_vocab_size, output_vocab_size,
                      buckets, rnn_size, num_layers, max_gradient,
                      learning_rate, lr_decay_rate, forward_only):
    model = seq2seq_model.Seq2SeqModel(
        input_vocab_size,
        output_vocab_size,
        buckets,
        rnn_size,
        num_layers,
        max_gradient,
        batch_size,  # read from the script-level parameter
        learning_rate,
        lr_decay_rate,
        forward_only=forward_only,
        dtype=tf.float32)
    return model[/mw_shl_code]

10. We set a variable scope and create our trainable model, then tell TensorFlow to reuse the variables in that scope and create the test model, which has a batch size of 1:

[mw_shl_code=python,true]input_vocab_size = vocab_size
output_vocab_size = vocab_size
with tf.variable_scope('translate_model') as scope:
    translate_model = translation_model(sess, vocab_size, vocab_size,
                                        buckets, rnn_size, num_layers,
                                        max_gradient, learning_rate,
                                        lr_decay_rate, False)
    # Reuse the variables for the test model
    scope.reuse_variables()
    test_model = translation_model(sess, vocab_size, vocab_size,
                                   buckets, rnn_size, num_layers,
                                   max_gradient, learning_rate,
                                   lr_decay_rate, True)
    test_model.batch_size = 1[/mw_shl_code]

11. Next, we initialize the variables in the model:

[mw_shl_code=python,true]init = tf.initialize_all_variables()
sess.run(init)[/mw_shl_code]

12. Finally, we train our sequence-to-sequence model by calling the step function for every generation. TensorFlow's sequence-to-sequence model has a get_batch() function that retrieves a batch of sentences from a given bucket index. We also decay the learning rate, save the model, and evaluate on the test sentences when appropriate:

[mw_shl_code=python,true]train_loss = []
for i in range(generations):
    rand_bucket_ix = np.random.choice(len(bucketed_data))
    model_outputs = translate_model.get_batch(bucketed_data, rand_bucket_ix)
    encoder_inputs, decoder_inputs, target_weights = model_outputs
    # Get the (gradient norm, loss, and outputs)
    _, step_loss, _ = translate_model.step(sess, encoder_inputs, decoder_inputs,
                                           target_weights, rand_bucket_ix, False)
    # Output status
    if (i+1) % output_every == 0:
        train_loss.append(step_loss)
        print('Gen #{} out of {}. Loss: {:.4}'.format(i+1, generations, step_loss))
    # Check if we should decay the learning rate
    if (i+1) % lr_decay_every == 0:
        sess.run(translate_model.learning_rate_decay_op)
    # Save model
    if (i+1) % save_every == 0:
        print('Saving model to {}.'.format(full_model_dir))
        model_save_path = os.path.join(full_model_dir, "eng_ger_translation.ckpt")
        translate_model.saver.save(sess, model_save_path, global_step=i)
    # Eval on test set
    if (i+1) % eval_every == 0:
        for ix, sentence in enumerate(test_data):
            # Find which bucket sentence goes in
            bucket_id = next(index for index, val in enumerate(x_maxs) if val >= len(sentence))
            # Get RNN model outputs
            encoder_inputs, decoder_inputs, target_weights = test_model.get_batch(
                {bucket_id: [(sentence, [])]}, bucket_id)
            # Get logits
            _, test_loss, output_logits = test_model.step(sess, encoder_inputs, decoder_inputs,
                                                          target_weights, bucket_id, True)
            ix_output = [int(np.argmax(logit, axis=1)) for logit in output_logits]
            # If there is a 0 symbol in outputs end the output there.
            ix_output = ix_output[0:[pos for pos, x in enumerate(ix_output+[0]) if x==0][0]]
            # Get german words from indices
            test_german = [ger_ix2vocab[x] for x in ix_output]
            print('English: {}'.format(test_english[ix]))
            print('German: {}'.format(test_german))[/mw_shl_code]
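The last part of the evaluation, turning logits into words and cutting the output at the first 0, can be sketched without a trained model. The function name `greedy_decode`, the tiny vocabulary, and the logit values below are made up for illustration. The sketch also assumes one flat list of scores per time step, whereas the recipe takes the argmax over a batch of size 1.

```python
def greedy_decode(output_logits, ix2vocab, stop_ix=0):
    """Pick the highest-scoring index at each step and stop at `stop_ix`."""
    words = []
    for logits in output_logits:
        best_ix = max(range(len(logits)), key=lambda i: logits[i])
        if best_ix == stop_ix:
            break
        words.append(ix2vocab[best_ix])
    return words


# Hypothetical index-to-word table and per-step scores over 4 indices.
ix2vocab = {1: 'wo', 2: 'ist', 3: 'mein'}
logits = [
    [0.1, 2.0, 0.3, 0.2],   # argmax 1 -> 'wo'
    [0.0, 0.1, 1.5, 0.4],   # argmax 2 -> 'ist'
    [0.2, 0.1, 0.3, 0.9],   # argmax 3 -> 'mein'
    [3.0, 0.1, 0.2, 0.1],   # argmax 0 -> stop
    [0.0, 0.0, 5.0, 0.0],   # never reached
]
print(greedy_decode(logits, ix2vocab))  # ['wo', 'ist', 'mein']
```

Stopping at the first 0 keeps trailing filler out of the printed translation, at the cost of truncating any sentence in which an unknown word (also index 0) is predicted early.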

13. This results in the following output:

[mw_shl_code=python,true]Gen #0 out of 10000. Loss: 7.377
Gen #9800 out of 10000. Loss: 3.875
Gen #9850 out of 10000. Loss: 3.748
Gen #9900 out of 10000. Loss: 3.615
Gen #9950 out of 10000. Loss: 3.889
Gen #10000 out of 10000. Loss: 3.071
Saving model to temp/seq2seq_model.
English: hello where is my computer
German: ['wo', 'ist', 'mein', 'ist']
English: the quick brown fox jumped over the lazy dog
German: ['die', 'alte', 'ist', 'von', 'mit', 'hund', 'zu']
English: is it going to rain tomorrow
German: ['ist', 'es', 'morgen', 'kommen'][/mw_shl_code]

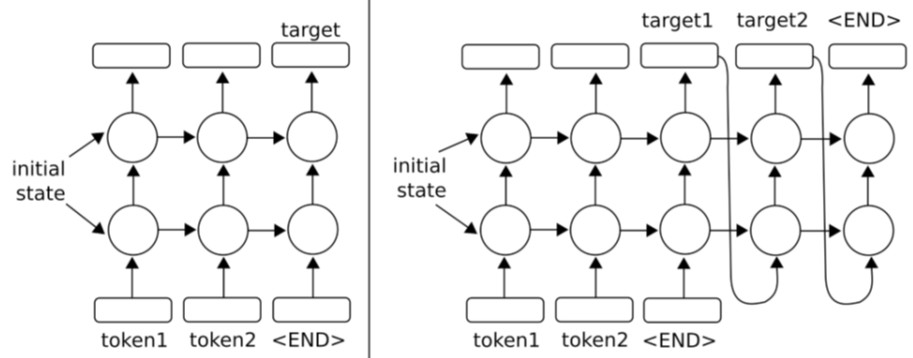

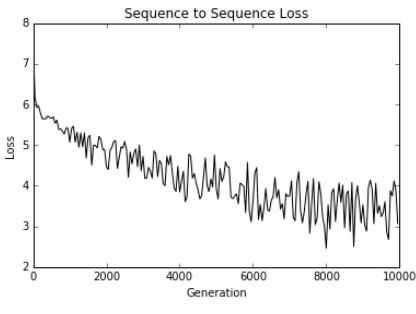

14. Here is the matplotlib code to plot the training loss that we saved at regular intervals:

[mw_shl_code=python,true]loss_generations = [i for i in range(generations) if i%output_every==0]
plt.plot(loss_generations, train_loss, 'k-')
plt.title('Sequence to Sequence Loss')
plt.xlabel('Generation')
plt.ylabel('Loss')
plt.show()[/mw_shl_code]

Figure 7: The training loss over the course of 10,000 generations of training the English-German sequence-to-sequence model.

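How it works…

For this recipe, we used TensorFlow's built-in sequence-to-sequence model to translate from English to German.

Since we did not get perfect translations of our test sentences, there is room for improvement. If we trained for longer, and possibly combined some buckets (giving more training data in each bucket), we might be able to improve our translations.

There's more…

Other similar bilingual sentence datasets are hosted on the manythings website (http://www.manythings.org/anki/). Feel free to substitute any language dataset that appeals to you.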


