2015-09-21 22:08:46,148 INFO [Thread-7]: hdfs.DFSClient (DFSOutputStream.java:createBlockOutputStream(1378)) - Exception in createBlockOutputStream

java.net.NoRouteToHostException: No route to host

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:599)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:529)

at org.apache.hadoop.hdfs.DFSOutputStream.createSocketForPipeline(DFSOutputStream.java:1516)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.createBlockOutputStream(DFSOutputStream.java:1318)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1272)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:525)

2015-09-21 22:08:46,150 INFO [Thread-7]: hdfs.DFSClient (DFSOutputStream.java:nextBlockOutputStream(1275)) - Abandoning BP-1942161036-127.0.0.1-1422629557699:blk_1073741826_1002

2015-09-21 22:08:46,163 INFO [Thread-7]: hdfs.DFSClient (DFSOutputStream.java:nextBlockOutputStream(1278)) - Excluding datanode 10.1.65.122:50010

2015-09-21 22:08:46,192 WARN [Thread-7]: hdfs.DFSClient (DFSOutputStream.java:run(627)) - DataStreamer Exception

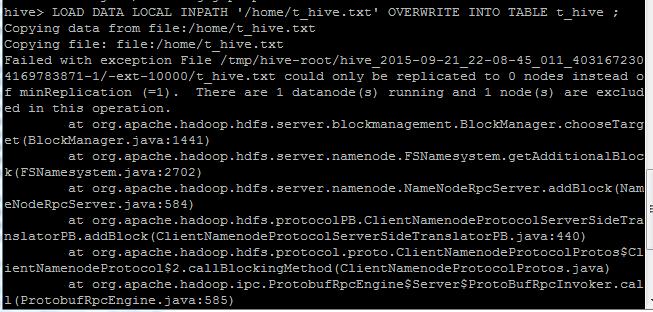

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /tmp/hive-root/hive_2015-09-21_22-08-45_011_4031672304169783871-1/-ext-10000/t_hive.txt could only be replicated to 0 nodes instead of minReplication (=1). There are 1 datanode(s) running and 1 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget(BlockManager.java:1441)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2702)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:584)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:440)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:585)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:928)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2013)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2009)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:396)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1556)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2007)

at org.apache.hadoop.ipc.Client.call(Client.java:1410)

at org.apache.hadoop.ipc.Client.call(Client.java:1363)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:206)

at com.sun.proxy.$Proxy18.addBlock(Unknown Source)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:190)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:103)

at com.sun.proxy.$Proxy18.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:361)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.locateFollowingBlock(DFSOutputStream.java:1439)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1261)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:525)

2015-09-21 22:08:46,193 ERROR [main]: exec.Task (SessionState.java:printError(545)) - Failed with exception File /tmp/hive-root/hive_2015-09-21_22-08-45_011_4031672304169783871-1/-ext-10000/t_hive.txt could only be replicated to 0 nodes instead of minReplication (=1). There are 1 datanode(s) running and 1 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget(BlockManager.java:1441)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2702)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:584)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:440)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:585)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:928)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2013)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2009)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:396)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1556)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2007)

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /tmp/hive-root/hive_2015-09-21_22-08-45_011_4031672304169783871-1/-ext-10000/t_hive.txt could only be replicated to 0 nodes instead of minReplication (=1). There are 1 datanode(s) running and 1 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget(BlockManager.java:1441)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2702)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:584)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:440)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:585)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:928)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2013)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2009)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:396)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1556)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2007)

at org.apache.hadoop.ipc.Client.call(Client.java:1410)

at org.apache.hadoop.ipc.Client.call(Client.java:1363)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:206)

at com.sun.proxy.$Proxy18.addBlock(Unknown Source)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:190)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:103)

at com.sun.proxy.$Proxy18.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:361)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.locateFollowingBlock(DFSOutputStream.java:1439)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1261)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:525)

2015-09-21 22:08:46,211 ERROR [main]: ql.Driver (SessionState.java:printError(545)) - FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.CopyTask

2015-09-21 22:08:46,211 INFO [main]: log.PerfLogger (PerfLogger.java:PerfLogEnd(135)) - </PERFLOG method=Driver.execute start=1442844525888 end=1442844526211 duration=323 from=org.apache.hadoop.hive.ql.Driver>

2015-09-21 22:08:46,211 INFO [main]: log.PerfLogger (PerfLogger.java:PerfLogBegin(108)) - <PERFLOG method=releaseLocks from=org.apache.hadoop.hive.ql.Driver>

2015-09-21 22:08:46,212 INFO [main]: log.PerfLogger (PerfLogger.java:PerfLogEnd(135)) - </PERFLOG method=releaseLocks start=1442844526211 end=1442844526212 duration=1 from=org.apache.hadoop.hive.ql.Driver>

2015-09-21 22:08:46,242 INFO [main]: log.PerfLogger (PerfLogger.java:PerfLogBegin(108)) - <PERFLOG method=releaseLocks from=org.apache.hadoop.hive.ql.Driver>

2015-09-21 22:08:46,242 INFO [main]: log.PerfLogger (PerfLogger.java:PerfLogEnd(135)) - </PERFLOG method=releaseLocks start=1442844526242 end=1442844526242 duration=0 from=org.apache.hadoop.hive.ql.Driver>

尝试了好久还是不行,在线求解

|

/2

/2