Ceph and OpenStack Integration Guide

The Ceph cluster has two nodes, and OpenStack acts as the Ceph client.

ceph-node1 (admin node) devstack ubuntu12.04 192.168.88.15

ceph-node2 compute ubuntu12.04 192.168.88.16

cephclient openstack centos6.4 192.168.88.18

Reference: http://ceph.com/docs/master/start/ (the official Ceph quick-start installation guide)

Pre-installation preparation

1 Ceph node setup

1.1 Create a user on each Ceph node.

ssh user@ceph-server

sudo useradd -d /home/ceph -m ceph

sudo passwd ceph

1.2 Give the user passwordless sudo (root) privileges on each Ceph node

echo "ceph ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/ceph

sudo chmod 0440 /etc/sudoers.d/ceph
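
The two commands above can also be staged in a temporary file first, so that a typo never lands directly in /etc/sudoers.d. The following is only a sketch: the function name stage_sudoers and the staging approach are illustrative and do not come from the Ceph documentation.

```shell
#!/bin/sh
# Hypothetical helper: write a NOPASSWD sudoers fragment for one user to a
# staging file with mode 0440. Nothing is installed into /etc/sudoers.d here.
stage_sudoers() {
    user="$1"       # account that ceph-deploy logs in as, e.g. "ceph"
    out="$2"        # staging file path
    rm -f "$out"    # a previous read-only copy would block the redirect
    printf '%s ALL = (root) NOPASSWD:ALL\n' "$user" > "$out"
    chmod 0440 "$out"
}

# Example (check the syntax, then copy the file into place with sudo):
#   stage_sudoers ceph /tmp/ceph.sudoers
#   sudo visudo -cf /tmp/ceph.sudoers && sudo cp /tmp/ceph.sudoers /etc/sudoers.d/ceph
```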

1.3 Set up passwordless SSH from the ceph-deploy admin node to each Ceph node. Leave the passphrase empty.

ssh-keygen

Generating public/private key pair.

Enter file in which to save the key (/ceph-client/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /ceph-client/.ssh/id_rsa.

Your public key has been saved in /ceph-client/.ssh/id_rsa.pub.

1.4 Copy the public key to each Ceph node

ssh-copy-id ceph@ceph-server
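
Because the login user on the Ceph nodes (ceph) may differ from the local user, it can help to record the user name per host in ~/.ssh/config so that a plain "ssh ceph-node1" logs in as ceph. A minimal sketch, using the node names from this document; ssh_config_stanzas is a hypothetical helper name.

```shell
#!/bin/sh
# Print one ~/.ssh/config stanza per host so that "ssh <host>" logs in as
# the given user without typing user@host each time.
ssh_config_stanzas() {
    user="$1"; shift
    for host in "$@"; do
        printf 'Host %s\n    Hostname %s\n    User %s\n' "$host" "$host" "$user"
    done
}

# Example: append the stanzas for both Ceph nodes.
#   ssh_config_stanzas ceph ceph-node1 ceph-node2 >> ~/.ssh/config
```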

2 Ceph deployment setup

2.1 Add the release key

wget -q -O- 'https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/release.asc' | sudo apt-key add -

2.2 Add the Ceph package repository on the ceph-deploy admin node

echo deb http://ceph.com/debian-dumpling/ $(lsb_release -sc) main | sudo tee /etc/apt/sources.list.d/ceph.list

(dumpling is the codename of the Ceph release)
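
The repository line has two variable parts: the Ceph release codename and the distribution codename reported by lsb_release. A small sketch that builds the line from both, so another release can be swapped in; the function name ceph_apt_line is made up for illustration.

```shell
#!/bin/sh
# Build the APT source line for a given Ceph release and distro codename.
ceph_apt_line() {
    release="$1"    # Ceph release codename, e.g. dumpling
    codename="$2"   # distro codename, e.g. the output of `lsb_release -sc`
    printf 'deb http://ceph.com/debian-%s/ %s main\n' "$release" "$codename"
}

# Example:
#   ceph_apt_line dumpling "$(lsb_release -sc)" | sudo tee /etc/apt/sources.list.d/ceph.list
```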

2.3 Update your repository and install ceph-deploy

sudo apt-get update

sudo apt-get install ceph-deploy

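ceph-deploy generates several configuration files while it deploys the Ceph cluster, so it is best to create a dedicated directory for them

mkdir my-cluster

cd my-cluster

Important: if you are logged in as a different user, do not run ceph-deploy with sudo or as root, because it will then be unable to issue the sudo commands it needs on the remote hosts.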

1. Create a cluster

If the deployment fails, you can reset and start over with the following commands

ceph-deploy purgedata {ceph-node} [{ceph-node}]

ceph-deploy forgetkeys

Run the deployment with ceph-deploy on the admin node

1.1 Create a cluster

ceph-deploy new {ceph-node}

ceph-deploy new ceph-node1

1.2 Install Ceph

ceph-deploy install {ceph-node} [{ceph-node} ...]

ceph-deploy install ceph-node1 ceph-node2

1.3 Add a Ceph monitor

ceph-deploy mon create {ceph-node}

ceph-deploy mon create ceph-node1

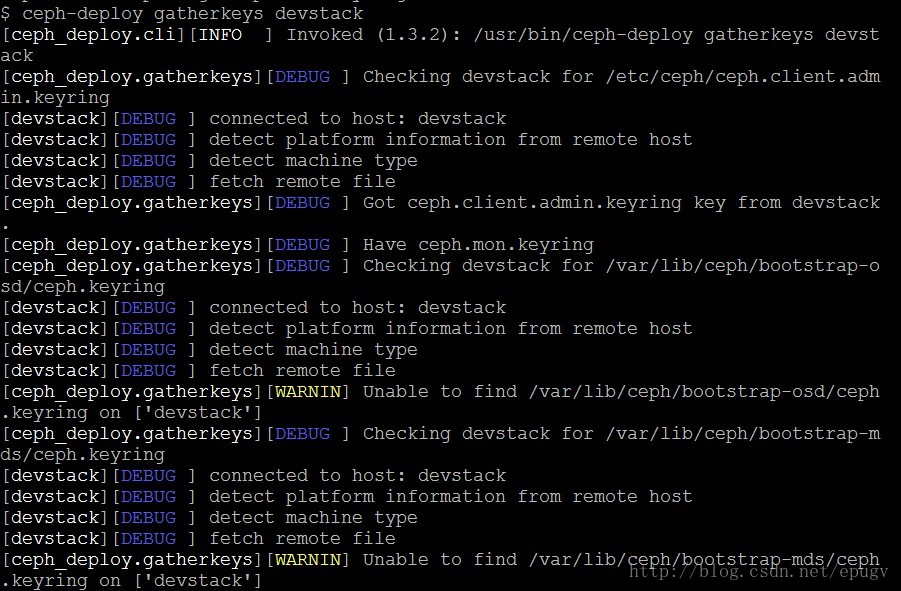

1.4 Gather the keys

ceph-deploy gatherkeys {ceph-node}

ceph-deploy gatherkeys ceph-node1

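This step may produce an error.

If it does, copy the bootstrap keyrings (/var/lib/ceph/bootstrap-mds/ceph.keyring and /var/lib/ceph/bootstrap-osd/ceph.keyring) over from another node

scp /var/lib/ceph/bootstrap-mds/ceph.keyring root@xxx:/var/lib/ceph/bootstrap-mds/

scp /var/lib/ceph/bootstrap-osd/ceph.keyring root@xxx:/var/lib/ceph/bootstrap-osd/

1.5 Add two OSDs. Plain directories are used here; placing each OSD on its own partition is better.

ssh ceph-node2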

sudo mkdir /tmp/osd0

exit

ssh ceph-node1

sudo mkdir /tmp/osd1

exit

1.6 Prepare the OSDs

ceph-deploy osd prepare {ceph-node}:/path/to/directory

ceph-deploy osd prepare ceph-node2:/tmp/osd0 ceph-node1:/tmp/osd1

The following error may occur:

openstack@monitor3:~/cluster1$ ceph-deploy -v osd prepare ceph1:sde:/dev/sdb

[ceph_deploy.cli][INFO ] Invoked (1.3): /usr/bin/ceph-deploy -v osd prepare ceph1:sde:/dev/sdb

[ceph_deploy.osd][DEBUG ] Preparing cluster ceph disks ceph1:/dev/sde:/dev/sdb

[ceph1][DEBUG ] connected to host: ceph1

[ceph1][DEBUG ] detect platform information from remote host

[ceph1][DEBUG ] detect machine type

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 12.04 precise

[ceph_deploy.osd][DEBUG ] Deploying osd to ceph1

[ceph1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph1][WARNIN] osd keyring does not exist yet, creating one

[ceph_deploy.osd][ERROR ] OSError: [Errno 2] No such file or directory

[ceph_deploy][ERROR ] GenericError: Failed to create 1 OSDs

Step 2 - We then ran the following commands:

Deploy-node : ceph-deploy uninstall ceph1

Ceph1-node : sudo rm -fr /etc/ceph/*

Deploy-node : ceph-deploy gatherkeys ceph1

Deploy-node : ceph-deploy -v install ceph1

1.7 Activate the OSDs

ceph-deploy osd activate {ceph-node}:/path/to/directory

ceph-deploy osd activate ceph-node2:/tmp/osd0 ceph-node1:/tmp/osd1
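
Steps 1.5 to 1.7 repeat the same host:directory pairs three times. The sketch below prints the whole command sequence from a single list so it can be reviewed before anything runs. osd_plan is a hypothetical helper that only echoes commands, and it uses mkdir -p (rather than plain mkdir) so that re-running it is harmless.

```shell
#!/bin/sh
# Print the mkdir / prepare / activate commands for a list of HOST:DIR pairs.
# Nothing is executed; pipe the output to sh only after reviewing it.
osd_plan() {
    for pair in "$@"; do
        host=${pair%%:*}    # text before the first colon
        dir=${pair#*:}      # text after the first colon
        printf 'ssh %s sudo mkdir -p %s\n' "$host" "$dir"
    done
    printf 'ceph-deploy osd prepare %s\n' "$*"
    printf 'ceph-deploy osd activate %s\n' "$*"
}

# Example:
#   osd_plan ceph-node2:/tmp/osd0 ceph-node1:/tmp/osd1
```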

1.8 Copy the configuration file and the admin key to the admin node and your Ceph nodes

ceph-deploy admin {ceph-node}

ceph-deploy admin admin-node ceph-node1 ceph-node2

1.9 Check the cluster health

ceph health
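
Right after activation the cluster may need a little time before it reports HEALTH_OK, so a polling loop can be handier than re-typing the command. This is a sketch under that assumption; wait_for_health_ok is a hypothetical name, and the health command is passed in as arguments so the loop can be tried without a cluster.

```shell
#!/bin/sh
# Poll a health command until it reports HEALTH_OK or the attempts run out.
# In real use the command would be "ceph health".
wait_for_health_ok() {
    attempts="$1"; delay="$2"; shift 2
    status=""
    i=0
    while [ "$i" -lt "$attempts" ]; do
        status=$("$@" 2>/dev/null)
        case "$status" in
            HEALTH_OK*) echo "$status"; return 0 ;;
        esac
        i=$((i + 1))
        sleep "$delay"
    done
    echo "last status: $status" >&2
    return 1
}

# Example: check every 5 seconds, up to 12 times.
#   wait_for_health_ok 12 5 ceph health
```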

2. Expand your cluster

2.1 Add an OSD

ssh ceph-node2

sudo mkdir /tmp/osd2

exit

2.2 Prepare the OSD

ceph-deploy osd prepare {ceph-node}:/path/to/directory

ceph-deploy osd prepare ceph-node2:/tmp/osd2

2.3 Activate the OSD

ceph-deploy osd activate {ceph-node}:/path/to/directory

ceph-deploy osd activate ceph-node2:/tmp/osd2

3. Add a metadata server

3.1 To use the CephFS file system you need at least one metadata server. Run the following to create one

ceph-deploy mds create {ceph-node}

ceph-deploy mds create ceph-node1

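4. Add a monitor

A Ceph storage cluster needs at least one Ceph monitor. For high availability, a cluster usually runs several monitors, so that the failure of a single monitor does not stop the whole cluster from working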

ceph-deploy mon create {ceph-node}

ceph-deploy mon create ceph-node2
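
One caveat is worth checking with arithmetic: Ceph monitors need a strict majority to form a quorum, so the number of monitors determines how many failures can actually be tolerated. A small sketch; the function names are made up for illustration.

```shell
#!/bin/sh
# Monitors needed for a quorum (strict majority) out of N monitors.
mon_quorum() { echo $(( $1 / 2 + 1 )); }

# Monitor failures the cluster can tolerate while keeping a quorum.
mon_tolerated_failures() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 5; do
    echo "monitors=$n quorum=$(mon_quorum "$n") tolerated_failures=$(mon_tolerated_failures "$n")"
done
```

With two monitors the tolerated failures are still 0, which is why an odd number such as 3 is the usual choice.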

At this point a simple Ceph cluster is ready, and Ceph can now be integrated into OpenStack

Reference: http://ceph.com/docs/master/rbd/rbd-openstack/

1. Create the pools

ceph osd pool create volumes 128

ceph osd pool create images 128
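
The 128 in the commands above is the placement-group count of each pool. A rule of thumb commonly quoted for a cluster-wide total is (number of OSDs × 100) / replica count, rounded up to the next power of two. The sketch below only implements that arithmetic for rough estimates; the function name is hypothetical, and the replica count must be supplied by you rather than read from the cluster.

```shell
#!/bin/sh
# Estimate a total placement-group count: (OSDs * 100) / replicas,
# rounded up to the next power of two.
suggest_pg_count() {
    osds="$1"; replicas="$2"
    target=$(( (osds * 100 + replicas - 1) / replicas ))   # ceiling division
    pg=1
    while [ "$pg" -lt "$target" ]; do
        pg=$((pg * 2))
    done
    echo "$pg"
}

# Example: suggest_pg_count 2 2   (two OSDs, two replicas)
```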

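2. Configure the OpenStack Ceph clients

The nodes that run glance-api and cinder-volume act as Ceph clients, and each of them needs a ceph.conf

ssh {your-openstack-server} sudo tee /etc/ceph/ceph.conf </etc/ceph/ceph.conf

Change the owner and group of ceph.conf to the current user on the OpenStack server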

3. Install the Ceph client packages

Required on the cinder-volume and glance-api nodes

sudo yum install python-ceph

cinder-volume also needs the client command line tools:

sudo yum install ceph

4. Set up Ceph client authentication

ceph auth get-or-create client.volumes mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=volumes, allow rx pool=images'

ceph auth get-or-create client.images mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=images'

5. Add the keyrings for client.volumes and client.images to the appropriate nodes and change their ownership

ceph auth get-or-create client.images | ssh {your-glance-api-server} sudo tee /etc/ceph/ceph.client.images.keyring

ssh {your-glance-api-server} sudo chown glance:glance /etc/ceph/ceph.client.images.keyring

ceph auth get-or-create client.volumes | ssh {your-volume-server} sudo tee /etc/ceph/ceph.client.volumes.keyring

ssh {your-volume-server} sudo chown cinder:cinder /etc/ceph/ceph.client.volumes.keyring
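
The four commands in step 5 follow one pattern per client: export the key, write it to /etc/ceph/ceph.client.NAME.keyring on the target host, and hand ownership to the service user. The sketch below prints those commands for review. keyring_plan is a hypothetical helper, and the host names in the example are placeholders.

```shell
#!/bin/sh
# Print the two commands that install a client's keyring on a remote host.
keyring_plan() {
    client="$1"   # Ceph client name without the "client." prefix, e.g. images
    host="$2"     # host that runs the OpenStack service
    owner="$3"    # service user and group, e.g. glance:glance
    path="/etc/ceph/ceph.client.$client.keyring"
    printf 'ceph auth get-or-create client.%s | ssh %s sudo tee %s\n' "$client" "$host" "$path"
    printf 'ssh %s sudo chown %s %s\n' "$host" "$owner" "$path"
}

# Example (placeholder host names):
#   keyring_plan images glance-host glance:glance
#   keyring_plan volumes cinder-host cinder:cinder
```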

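6. Hosts running nova-compute do not need the keyring. Instead, they store the secret key in libvirt. Copy the key to the compute host first

ceph auth get-key client.volumes | ssh {your-compute-host} tee client.volumes.key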

7. On the compute node, add the secret key to libvirt

cat > secret.xml <<EOF

<secret ephemeral='no' private='no'>

<usage type='ceph'>

<name>client.volumes secret</name>

</usage>

</secret>

EOF

sudo virsh secret-define --file secret.xml

<uuid of secret is output here>

sudo virsh secret-set-value --secret {uuid of secret} --base64 $(cat client.volumes.key) && rm client.volumes.key secret.xml
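
Copying the UUID by hand between the two virsh commands is error-prone. The sketch below pulls the first UUID-shaped string out of whatever text it is given, assuming virsh secret-define prints the new UUID somewhere in its output; extract_uuid is a hypothetical helper name.

```shell
#!/bin/sh
# Extract the first UUID found on stdin, e.g. from `virsh secret-define` output.
extract_uuid() {
    grep -Eo '[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}' | head -n 1
}

# Example (assumes secret.xml and client.volumes.key exist as in step 7):
#   uuid=$(sudo virsh secret-define --file secret.xml | extract_uuid)
#   sudo virsh secret-set-value --secret "$uuid" --base64 "$(cat client.volumes.key)"
```

Keeping the value in a variable also makes it easy to reuse as rbd_secret_uuid in the Cinder configuration.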

8. Configure Cinder

volume_driver=cinder.volume.drivers.rbd.RBDDriver

rbd_pool=volumes

glance_api_version=2

rbd_user=volumes

rbd_secret_uuid={uuid of secret}

rbd_ceph_conf=/etc/ceph/ceph.conf
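
A missing option here usually only shows up later as a failed volume operation, so a quick check of the configuration file before restarting can save time. A minimal sketch: check_rbd_options is a hypothetical helper, and /etc/cinder/cinder.conf in the example is the usual location but is an assumption about your installation.

```shell
#!/bin/sh
# Report which RBD-related options are missing from a cinder.conf-style file.
# Prints one missing key per line; returns non-zero if any are missing.
check_rbd_options() {
    conf="$1"
    missing=0
    for key in volume_driver rbd_pool rbd_user rbd_secret_uuid glance_api_version; do
        if ! grep -Eq "^[[:space:]]*$key[[:space:]]*=" "$conf"; then
            echo "$key"
            missing=1
        fi
    done
    return "$missing"
}

# Example: check_rbd_options /etc/cinder/cinder.conf
```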

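9. Restart the services

10. With Ubuntu as the client, creating and attaching volumes works without problems, but Red Hat-family systems fail. The reason is that Red Hat needs a special qemu build that supports rbd.

Reference: http://openstack.redhat.com/Using_Ceph_for_Block_Storage_with_RDO

11. Workaround for CentOS 6.4 as the Ceph client

Download the qemu-img and qemu-kvm packages from http://ceph.com/packages/ceph-extras/rpm/centos6.3/x86_64/ and use them to replace the packages installed on your system.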
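
To confirm that the replacement packages took effect, one option is to look for rbd among the formats that qemu-img reports. The sketch assumes that this qemu-img build prints a "Supported formats:" line in its help text; supports_rbd is a hypothetical helper that reads that text on stdin.

```shell
#!/bin/sh
# Read `qemu-img --help` output on stdin and succeed if "rbd" is listed
# on the "Supported formats:" line.
supports_rbd() {
    grep '^Supported formats:' | tr ' ' '\n' | grep -qx 'rbd'
}

# Example:
#   if qemu-img --help | supports_rbd; then echo "rbd supported"; else echo "no rbd support"; fi
```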
