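Guiding questions:

1. How do you configure Maven's localRepository?

2. How do you compile Hive?

3. How do you use the compiled result?

Software versions:

Hive 1.2.1, Eclipse 4.5, Maven 3.2, JDK 1.7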

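Software preparation:

Hive:

Environment preparation:

(1) An installed Hadoop cluster (pseudo-distributed mode also works);

(2) A Maven environment on Linux. (Note that building Hive with Maven does not work on Windows, because the build needs bash, so just build Hive on Linux.)

0. Before compiling, it is recommended to configure Maven's localRepository and also set up mirrors as follows (the OSChina Maven mirror, which is faster than overseas repositories):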

[mw_shl_code=xml,true]<mirror>
    <id>nexus-osc</id>
    <mirrorOf>central</mirrorOf>
    <name>Nexus osc</name>
    <url>http://maven.oschina.net/content/groups/public/</url>
</mirror>
<mirror>
    <id>nexus-osc-thirdparty</id>
    <mirrorOf>thirdparty</mirrorOf>
    <name>Nexus osc thirdparty</name>
    <url>http://maven.oschina.net/content ... dparty/</url>
</mirror>[/mw_shl_code]
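The mirrors above go inside the `<mirrors>` element of Maven's `settings.xml` (usually `~/.m2/settings.xml`), while the local repository is set with a top-level `<localRepository>` element; when that element is absent, Maven uses `~/.m2/repository`. As a quick pre-build check, here is a minimal Java sketch (the class name `MavenSettingsCheck` is hypothetical; it does not expand `${...}` properties and ignores the global `conf/settings.xml`) that reports which local repository and mirrors a settings file declares:

```java
import java.io.ByteArrayInputStream;
import java.io.File;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper: reports what a Maven settings.xml declares (no ${...} interpolation).
final class MavenSettingsCheck {

    static Document parse(String xml) {
        try {
            return DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("not a parsable settings.xml", e);
        }
    }

    // Text of the first <tag> below parent, or "" if there is none.
    private static String text(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? "" : nodes.item(0).getTextContent().trim();
    }

    // <localRepository> if set, otherwise Maven's default ${user.home}/.m2/repository.
    static String localRepository(Document doc) {
        String value = text(doc.getDocumentElement(), "localRepository");
        if (!value.isEmpty()) return value;
        return System.getProperty("user.home") + File.separator + ".m2" + File.separator + "repository";
    }

    // One "id (mirrorOf) -> url" entry per <mirror> element.
    static List<String> mirrors(Document doc) {
        List<String> result = new ArrayList<>();
        NodeList nodes = doc.getElementsByTagName("mirror");
        for (int i = 0; i < nodes.getLength(); i++) {
            Element m = (Element) nodes.item(i);
            result.add(text(m, "id") + " (" + text(m, "mirrorOf") + ") -> " + text(m, "url"));
        }
        return result;
    }

    public static void main(String[] args) throws Exception {
        String path = args.length > 0 ? args[0]
                : System.getProperty("user.home") + File.separator + ".m2" + File.separator + "settings.xml";
        Document doc = parse(new String(Files.readAllBytes(Paths.get(path)), StandardCharsets.UTF_8));
        System.out.println("localRepository: " + localRepository(doc));
        for (String mirror : mirrors(doc)) System.out.println("mirror: " + mirror);
    }
}
```

An empty mirror list in the output means the OSChina mirror entries were not picked up from that file.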

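1. Compile Hive: download the Hive 1.2.1 source, extract it to a directory on Linux, change into the extracted source directory, and build with the following commands:

(1) mvn clean install -DskipTests -Phadoop-2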

[mw_shl_code=text,true][INFO] Reactor Summary:
[INFO]
[INFO] Hive ............................................... SUCCESS [ 4.457 s]
[INFO] Hive Shims Common .................................. SUCCESS [ 5.047 s]
[INFO] Hive Shims 0.20S ................................... SUCCESS [ 2.017 s]
[INFO] Hive Shims 0.23 .................................... SUCCESS [ 7.157 s]
[INFO] Hive Shims Scheduler ............................... SUCCESS [ 1.796 s]
[INFO] Hive Shims ......................................... SUCCESS [ 1.674 s]
[INFO] Hive Common ........................................ SUCCESS [ 5.711 s]
[INFO] Hive Serde ......................................... SUCCESS [ 7.577 s]
[INFO] Hive Metastore ..................................... SUCCESS [ 18.044 s]
[INFO] Hive Ant Utilities ................................. SUCCESS [ 1.373 s]
[INFO] Spark Remote Client ................................ SUCCESS [ 10.962 s]
[INFO] Hive Query Language ................................ SUCCESS [05:12 min]
[INFO] Hive Service ....................................... SUCCESS [ 42.408 s]
[INFO] Hive Accumulo Handler .............................. SUCCESS [01:40 min]
[INFO] Hive JDBC .......................................... SUCCESS [ 9.021 s]
[INFO] Hive Beeline ....................................... SUCCESS [ 12.194 s]
[INFO] Hive CLI ........................................... SUCCESS [ 12.576 s]
[INFO] Hive Contrib ....................................... SUCCESS [ 3.031 s]
[INFO] Hive HBase Handler ................................. SUCCESS [01:54 min]
[INFO] Hive HCatalog ...................................... SUCCESS [ 28.797 s]
[INFO] Hive HCatalog Core ................................. SUCCESS [ 5.609 s]
[INFO] Hive HCatalog Pig Adapter .......................... SUCCESS [ 23.254 s]
[INFO] Hive HCatalog Server Extensions .................... SUCCESS [01:15 min]
[INFO] Hive HCatalog Webhcat Java Client .................. SUCCESS [ 2.036 s]
[INFO] Hive HCatalog Webhcat .............................. SUCCESS [ 49.390 s]
[INFO] Hive HCatalog Streaming ............................ SUCCESS [ 4.387 s]
[INFO] Hive HWI ........................................... SUCCESS [ 1.768 s]
[INFO] Hive ODBC .......................................... SUCCESS [ 1.053 s]
[INFO] Hive Shims Aggregator .............................. SUCCESS [ 0.111 s]
[INFO] Hive TestUtils ..................................... SUCCESS [ 0.550 s]
[INFO] Hive Packaging ..................................... SUCCESS [ 3.195 s]
[INFO] ------------------------------------------------------------------------
[INFO] BUILD SUCCESS
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 14:31 min
[INFO] Finished at: 2015-10-15T05:25:11-07:00
[INFO] Final Memory: 89M/416M[/mw_shl_code]
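With around thirty modules and a build of about 14.5 minutes, it is easier to scan the Reactor Summary programmatically for any module that did not report SUCCESS. A minimal sketch, assuming the log layout shown above (`[INFO] <module> ..... STATUS [ time]`); the class name is hypothetical, and other Maven versions may format the summary differently:

```java
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: extracts module statuses from a Maven "Reactor Summary".
final class ReactorSummary {

    // e.g. "[INFO] Hive Shims 0.20S ....... SUCCESS [ 2.017 s]" -> ("Hive Shims 0.20S", "SUCCESS")
    private static final Pattern MODULE_LINE =
            Pattern.compile("^\\[INFO\\] (.+?) \\.{2,} (SUCCESS|FAILURE|SKIPPED)\\b");

    static Map<String, String> parse(List<String> logLines) {
        Map<String, String> status = new LinkedHashMap<>();
        for (String line : logLines) {
            Matcher m = MODULE_LINE.matcher(line.trim());
            if (m.find()) status.put(m.group(1), m.group(2));
        }
        return status;
    }

    // Modules whose status is anything other than SUCCESS, in build order.
    static List<String> notSuccessful(Map<String, String> status) {
        List<String> bad = new ArrayList<>();
        for (Map.Entry<String, String> e : status.entrySet()) {
            if (!"SUCCESS".equals(e.getValue())) bad.add(e.getKey());
        }
        return bad;
    }

    public static void main(String[] args) throws Exception {
        // Usage: mvn clean install -DskipTests -Phadoop-2 | tee build.log ; java ReactorSummary build.log
        List<String> lines = Files.readAllLines(Paths.get(args[0]), StandardCharsets.UTF_8);
        Map<String, String> status = parse(lines);
        System.out.println(status.size() + " modules, not successful: " + notSuccessful(status));
    }
}
```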

(2) Clean the related output: mvn eclipse:clean

(3) Generate Eclipse projects:

mvn eclipse:eclipse -DdownloadSources -DdownloadJavadocs -Phadoop-2

[mw_shl_code=text,true][INFO] Reactor Summary:
[INFO]
[INFO] Hive ............................................... SUCCESS [ 7.396 s]
[INFO] Hive Shims Common .................................. SUCCESS [ 3.983 s]
[INFO] Hive Shims 0.20S ................................... SUCCESS [ 2.734 s]
[INFO] Hive Shims 0.23 .................................... SUCCESS [ 16.801 s]
[INFO] Hive Shims Scheduler ............................... SUCCESS [ 2.143 s]
[INFO] Hive Shims ......................................... SUCCESS [ 1.958 s]
[INFO] Hive Common ........................................ SUCCESS [ 4.495 s]
[INFO] Hive Serde ......................................... SUCCESS [ 6.760 s]
[INFO] Hive Metastore ..................................... SUCCESS [ 3.512 s]
[INFO] Hive Ant Utilities ................................. SUCCESS [ 0.252 s]
[INFO] Spark Remote Client ................................ SUCCESS [ 6.719 s]
[INFO] Hive Query Language ................................ SUCCESS [ 7.988 s]
[INFO] Hive Service ....................................... SUCCESS [ 55.204 s]
[INFO] Hive Accumulo Handler .............................. SUCCESS [11:49 min]
[INFO] Hive JDBC .......................................... SUCCESS [ 1.607 s]
[INFO] Hive Beeline ....................................... SUCCESS [35:22 min]
[INFO] Hive CLI ........................................... SUCCESS [01:28 min]
[INFO] Hive Contrib ....................................... SUCCESS [ 1.797 s]
[INFO] Hive HBase Handler ................................. SUCCESS [10:35 min]
[INFO] Hive HCatalog ...................................... SUCCESS [ 5.775 s]
[INFO] Hive HCatalog Core ................................. SUCCESS [01:23 min]
[INFO] Hive HCatalog Pig Adapter .......................... SUCCESS [01:10 min]
[INFO] Hive HCatalog Server Extensions .................... SUCCESS [07:20 min]
[INFO] Hive HCatalog Webhcat Java Client .................. SUCCESS [ 1.968 s]
[INFO] Hive HCatalog Webhcat .............................. SUCCESS [01:53 min]
[INFO] Hive HCatalog Streaming ............................ SUCCESS [ 2.089 s]
[INFO] Hive HWI ........................................... SUCCESS [ 1.816 s]
[INFO] Hive ODBC .......................................... SUCCESS [ 1.284 s]
[INFO] Hive Shims Aggregator .............................. SUCCESS [ 0.064 s]
[INFO] Hive TestUtils ..................................... SUCCESS [ 2.947 s]
[INFO] Hive Packaging ..................................... SUCCESS [ 2.837 s]
[INFO] ------------------------------------------------------------------------
[INFO] BUILD SUCCESS
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 01:13 h
[INFO] Finished at: 2015-10-15T19:53:05-07:00
[INFO] Final Memory: 68M/306M[/mw_shl_code]

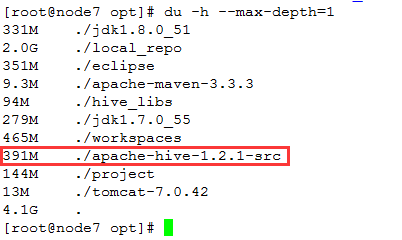

This step is fairly slow; after compilation the files total about 391 MB.

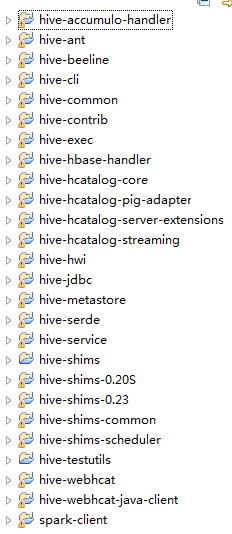

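2. Importing the compiled projects into Eclipse

There are two cases here: importing into Eclipse on Windows and importing into Eclipse on Linux. (Most people work on Windows machines, so Windows is preferable if it works.)

2.1 Importing the projects into Eclipse on Windows

Open Eclipse, right-click -> Import, and select the compiled folder (the compiled files must first be copied to the Windows machine); you will then see the following screen:

Of course, there will be some errors, for example jdk/tools.jar not being found. This is because the JDK used for the build differs from the JDK on Windows; adjusting the JDK setting fixes it.

(1) Run CliDriver in the hive-cli project. (The Hive services must be started first: hive --service metastore & and hive --service hiveserver2 &.)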
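Before launching CliDriver it is worth confirming that both services actually came up. A minimal sketch with a hypothetical class name, assuming the metastore listens on 9083 (the port in the `thrift://192.168.0.100:9083` URI that appears in a log later in this post) and HiveServer2 on its default port 10000. It only tests whether a TCP connection can be opened, not whether the service is healthy:

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: checks that the metastore and HiveServer2 ports accept connections.
final class HiveServiceCheck {

    // True if a TCP connection to host:port succeeds within timeoutMs milliseconds.
    static boolean isListening(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String host = args.length > 0 ? args[0] : "localhost";
        // Assumed ports: 9083 for the metastore Thrift service, 10000 for HiveServer2.
        System.out.println("metastore   " + host + ":9083  " + (isListening(host, 9083, 2000) ? "up" : "DOWN"));
        System.out.println("hiveserver2 " + host + ":10000 " + (isListening(host, 10000, 2000) ? "up" : "DOWN"));
    }
}
```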

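Running CliDriver fails immediately with an error saying, roughly, that "hive-site.xml" is not in the classpath. To fix it:

Open the target/test-classes directory of the hive-common project on the local disk,

and edit the core-site.xml and hive-site.xml in it, taking the values from your Hadoop cluster and Hive configuration.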
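Both files are read as classpath resources (Hive's HiveConf looks up `hive-site.xml`, and Hadoop's Configuration loads `core-site.xml`), so the fix presumably works because hive-common's target/test-classes ends up on the CliDriver launch classpath. A minimal JDK-only sketch with a hypothetical class name, meant to be run with the same Eclipse classpath as CliDriver, that prints which copy of each file is found first:

```java
import java.net.URL;

// Hypothetical helper: shows which copy of each config file the classpath provides.
final class ClasspathConfigCheck {

    // URL of the first matching resource on the classpath, or null if there is none.
    static URL locate(String resource) {
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        if (loader == null) loader = ClasspathConfigCheck.class.getClassLoader();
        return loader.getResource(resource);
    }

    public static void main(String[] args) {
        String[] files = args.length > 0 ? args
                : new String[] {"hive-site.xml", "core-site.xml", "hdfs-site.xml"};
        for (String file : files) {
            URL url = locate(file);
            System.out.println(file + " -> " + (url == null ? "NOT on classpath" : url));
        }
    }
}
```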

Then run CliDriver again; it now reports the following error:

[mw_shl_code=text,true]Exception in thread "main" java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException: Server IPC version 9 cannot communicate with client version 4
	at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:522)
	at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:677)
	at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:621)[/mw_shl_code]

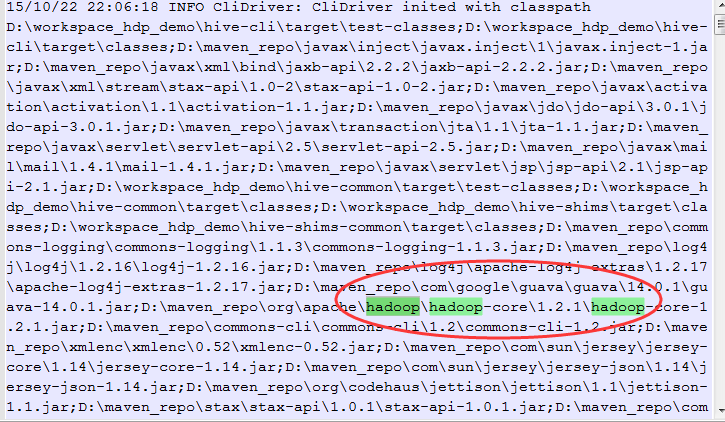

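This is a version mismatch: IPC version 9 is the protocol spoken by the Hadoop 2 server, while version 4 is what a Hadoop 1 client speaks. Printing the Java classpath shows that the Hadoop 1 jars come before the Hadoop 2 jars.

That is certainly a problem: the cluster runs Hadoop 2, but the code uses Hadoop 1, so this error is no surprise. So where does the problem come from?

Inspecting the hive-shims-common project shows that it depends only on Hadoop 1, not on Hadoop 2, and the hive-cli project depends on hive-shims-common. That explains why the Hadoop 1 jars precede the Hadoop 2 jars on the Java classpath.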
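The classpath inspection described above can be scripted. A minimal sketch with a hypothetical class name, assuming the usual jar names (`hadoop-core-1.x.jar` for Hadoop 1, `hadoop-common-2.x.jar` for Hadoop 2): it walks `java.class.path` in order and reports which Hadoop line comes first, since the class loader takes a class from the first jar on the path that contains it:

```java
import java.io.File;
import java.util.regex.Pattern;

// Hypothetical helper: which Hadoop major line comes first on a classpath string?
final class HadoopJarOrder {

    static String firstHadoopLine(String classpath) {
        for (String entry : classpath.split(Pattern.quote(File.pathSeparator))) {
            String name = new File(entry).getName();
            if (!name.endsWith(".jar")) continue;
            // Hadoop 1 ships hadoop-core-1.x.jar; Hadoop 2 splits it into hadoop-common-2.x.jar and others.
            if (name.startsWith("hadoop-core-1.")) return "Hadoop 1: " + entry;
            if (name.startsWith("hadoop-common-2.")) return "Hadoop 2: " + entry;
        }
        return "no Hadoop jar found";
    }

    public static void main(String[] args) {
        // Run with the same launch configuration as CliDriver to inspect its classpath.
        System.out.println(firstHadoopLine(System.getProperty("java.class.path")));
    }
}
```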

此路不通!

(2)运行hive-beeline工程的BeeLine

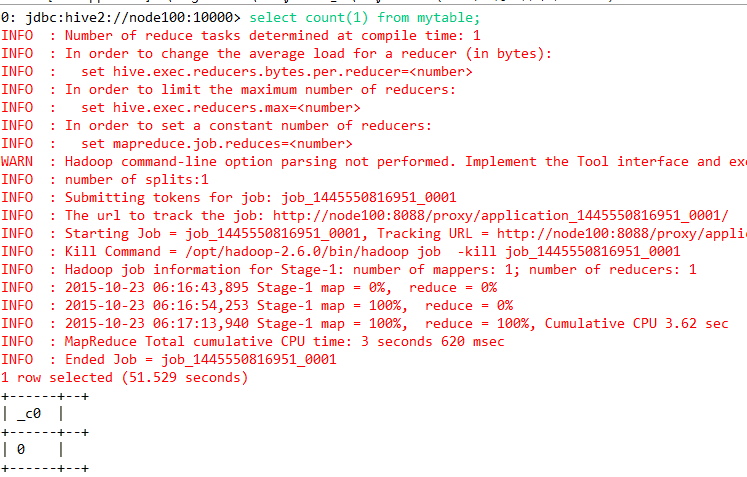

直接运行,进入beeline交互式命令终端,如下图:

发现是可以连接hive的,比如mr查询:

但是这个不可以调试,即使用debug模式,仍然不能调试。

所以对于阅读源码,查看调用关系来说这种模式也不是很好。
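BeeLine is a JDBC client: `!connect jdbc:hive2://host:10000` opens a connection to HiveServer2 through the Hive JDBC driver `org.apache.hive.jdbc.HiveDriver`. Because statements travel over Thrift, the query is parsed and executed inside the HiveServer2 process rather than in the JVM running BeeLine, which is a likely reason breakpoints in the query code are not hit from a BeeLine launch. A minimal sketch of the same connection in plain Java, assuming HiveServer2 on its default port 10000 at the host from the metastore URI later in this post, the `default` database, and a hypothetical user `hadoop`; hive-jdbc and its dependencies must be on the classpath at run time:

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

// Hypothetical sketch of what BeeLine's !connect does: a plain JDBC session against HiveServer2.
final class HiveJdbcSketch {

    static String jdbcUrl(String host, int port, String database) {
        return "jdbc:hive2://" + host + ":" + port + "/" + database;
    }

    public static void main(String[] args) throws ClassNotFoundException, SQLException {
        // Assumed values: adjust host, user and query for your cluster.
        String url = jdbcUrl(args.length > 0 ? args[0] : "192.168.0.100", 10000, "default");
        Class.forName("org.apache.hive.jdbc.HiveDriver"); // provided by the hive-jdbc jar
        try (Connection conn = DriverManager.getConnection(url, "hadoop", "");
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery("SHOW TABLES")) {
            while (rs.next()) System.out.println(rs.getString(1));
        }
    }
}
```

To debug the query path itself, the breakpoints would have to be set in the HiveServer2 JVM rather than in this client.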

2.2 Importing the projects into Eclipse on Linux

The import works the same way as on Windows.

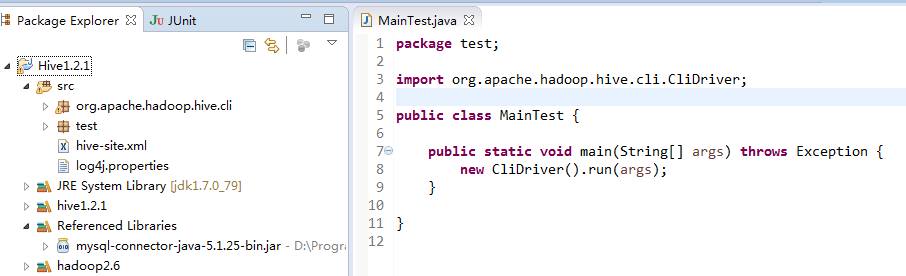

3. 直接新建工程,使用编译后的hive jar包(此处的jar包不是指自己编译的,而是官网直接提供的),就apache-hive-1.2.1-bin.tar.gz文件。

3.1 在windows的eclipse中新建hive工程

同时新建一个类,如上图所示。

这里还需要注意:

a. 引入编译后的hive的lib包的所有jar包;

b. 引入MySQL的连接jar包;

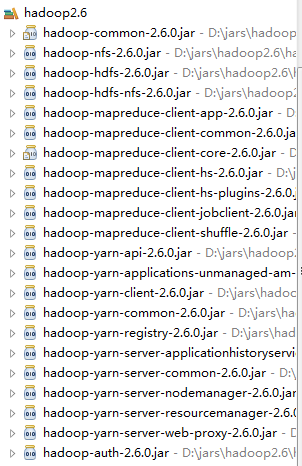

c. 引入hadoop的相关jar包

(1)写一个测试程序,调用CliDriver,出现下面的错误:

[mw_shl_code=text,true]2015-10-22 22:25:19,837 INFO [main] (HiveMetaStoreClient.java:376) - Trying to connect to metastore with URI thrift://192.168.0.100:9083
Exception in thread "main" java.lang.RuntimeException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient
	at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:522)
	at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:662)
	at test.MainTest.main(MainTest.java:8)
Caused by: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient
	at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1523)
	at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:86)
	at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:132)
	at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:104)
	at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3005)
	at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3024)
	at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:503)
	... 2 more
Caused by: java.lang.reflect.InvocationTargetException
	at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
	at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)
	at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
	at java.lang.reflect.Constructor.newInstance(Constructor.java:526)
	at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1521)
	... 8 more
Caused by: java.lang.NullPointerException
	at java.lang.ProcessBuilder.start(ProcessBuilder.java:1010)
	at org.apache.hadoop.util.Shell.runCommand(Shell.java:482)
	at org.apache.hadoop.util.Shell.run(Shell.java:455)
	at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:715)
	at org.apache.hadoop.util.Shell.execCommand(Shell.java:808)
	at org.apache.hadoop.util.Shell.execCommand(Shell.java:791)
	at org.apache.hadoop.security.ShellBasedUnixGroupsMapping.getUnixGroups(ShellBasedUnixGroupsMapping.java:84)
	at org.apache.hadoop.security.ShellBasedUnixGroupsMapping.getGroups(ShellBasedUnixGroupsMapping.java:52)
	at org.apache.hadoop.security.JniBasedUnixGroupsMappingWithFallback.getGroups(JniBasedUnixGroupsMappingWithFallback.java:51)
	at org.apache.hadoop.security.Groups.getGroups(Groups.java:176)
	at org.apache.hadoop.security.UserGroupInformation.getGroupNames(UserGroupInformation.java:1488)
	at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.open(HiveMetaStoreClient.java:436)
	at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:236)
	at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:74)
	... 13 more[/mw_shl_code]

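After tracing the problem, it turned out to be caused by Hadoop on Windows not having winutils.exe configured, which is what the very first error message said:

[mw_shl_code=text,true]Could not locate executable D:\jars\hadoop2.6\hadoop-2.6.0\bin\winutils.exe in the Hadoop binaries.[/mw_shl_code]

(If you only submit MR jobs from Windows, this can be left unconfigured. But a check on Hive's code path needs it when the query is submitted from Windows, and without it a NullPointerException is thrown: in the trace above, HiveMetaStoreClient.open looks up the user's groups through Hadoop's ShellBasedUnixGroupsMapping, which has no winutils.exe to run.) The fix for this error is easy to find online, so I won't cover it here, and I did not pursue this route any further.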
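On Windows, Hadoop's `Shell` class looks for `bin\winutils.exe` under the directory given by the `hadoop.home.dir` system property, falling back to the `HADOOP_HOME` environment variable, and it resolves this once when the class is loaded, so the property must be set before any Hadoop class is touched. A minimal sketch, assuming you have obtained a winutils.exe matching your Hadoop version and placed it under `<hadoop home>\bin` (the class and method names are hypothetical): it resolves the Hadoop home in the same order and fails fast with a clear message instead of the NullPointerException above:

```java
import java.io.File;

// Hypothetical pre-flight check to run before starting CliDriver on Windows.
final class WinutilsCheck {

    // Same lookup order as Hadoop's Shell: -Dhadoop.home.dir first, then HADOOP_HOME.
    static String hadoopHome() {
        String home = System.getProperty("hadoop.home.dir");
        if (home == null || home.isEmpty()) home = System.getenv("HADOOP_HOME");
        return home;
    }

    // The winutils.exe file under <home>/bin if it exists, otherwise null.
    static File winutils(String home) {
        if (home == null) return null;
        File exe = new File(new File(home, "bin"), "winutils.exe");
        return exe.isFile() ? exe : null;
    }

    public static void main(String[] args) {
        // Example, using the path from the error message above (must run before any Hadoop class loads):
        // System.setProperty("hadoop.home.dir", "D:\\jars\\hadoop2.6\\hadoop-2.6.0");
        boolean windows = System.getProperty("os.name").toLowerCase().startsWith("windows");
        String home = hadoopHome();
        if (windows && winutils(home) == null) {
            throw new IllegalStateException("winutils.exe not found under " + home
                    + "\\bin; set -Dhadoop.home.dir or HADOOP_HOME");
        }
        System.out.println("Hadoop home: " + home);
    }
}
```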

(2) Write a program that runs BeeLine; this was not tested.

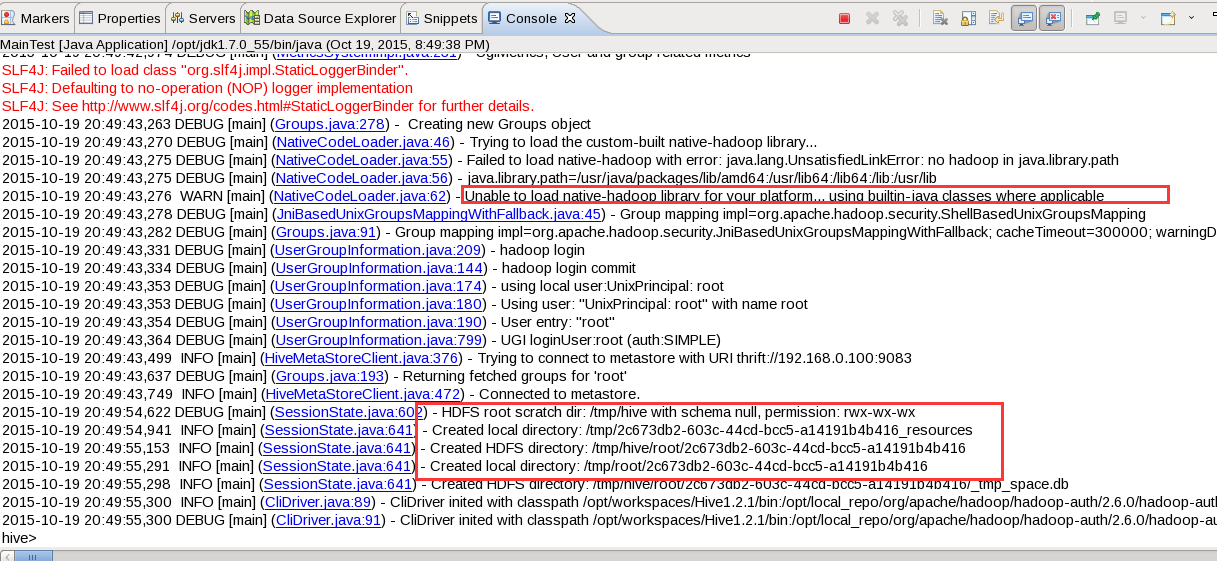

3.2 linux的eclipse新建hive工程:

建立的工程和windows并无二致,如下:

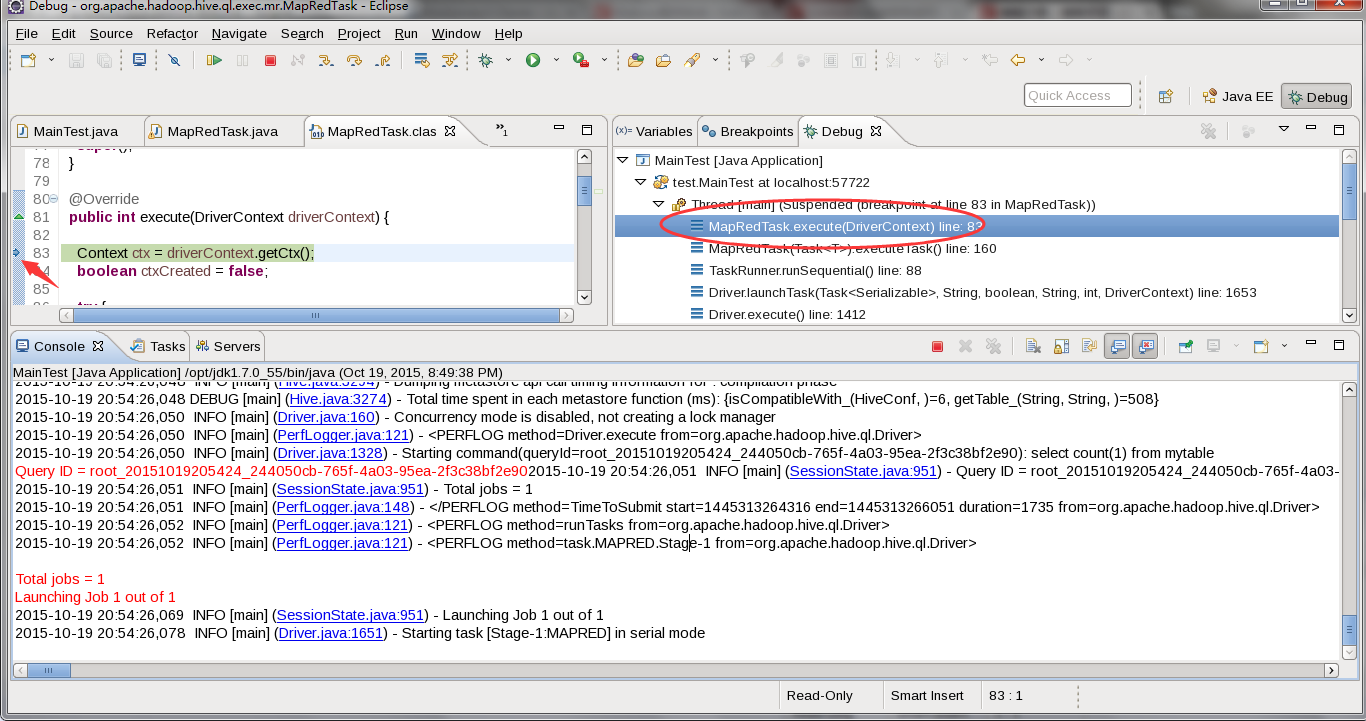

同样编写程序调用CliDriver,Debug运行,如下所示(需要注意我在这里并没有配置hadoop相关目录):

做一个查询,看是否可以启动debug模式:

这里看到的确是进入了debug模式。
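The test program itself can be very small: the stack trace earlier shows `test.MainTest.main` calling `CliDriver.run`. A minimal sketch of such a driver follows. It loads `org.apache.hadoop.hive.cli.CliDriver` by name only so that the sketch compiles without the Hive jars; in the Eclipse project, with the jars on the build path, you would simply write `new CliDriver().run(args)`. Calling `run` rather than `CliDriver.main` avoids the `System.exit` in `main`, so the debug session is not cut off when the CLI returns. The `--hiveconf` metastore URI (taken from the log above) and the `-e` query with its hypothetical table `some_table` are example values:

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;

// Hypothetical debug driver; in the Eclipse project this is just `new CliDriver().run(args)`.
final class MainTest {

    static final String CLI_DRIVER = "org.apache.hadoop.hive.cli.CliDriver";

    // CLI arguments: override the metastore URI and run a single query with -e.
    static String[] cliArgs(String metastoreUri, String query) {
        return new String[] {"--hiveconf", "hive.metastore.uris=" + metastoreUri, "-e", query};
    }

    // Calls CliDriver.run(String[]); unlike CliDriver.main, this does not call System.exit().
    static int runCli(String[] args) throws Exception {
        Class<?> cls = Class.forName(CLI_DRIVER);
        Object driver = cls.getDeclaredConstructor().newInstance();
        Method run = cls.getMethod("run", String[].class);
        try {
            return (Integer) run.invoke(driver, (Object) args);
        } catch (InvocationTargetException e) {
            // Rethrow Hive's own exception instead of the reflection wrapper.
            Throwable cause = e.getCause();
            if (cause instanceof Exception) throw (Exception) cause;
            if (cause instanceof Error) throw (Error) cause;
            throw e;
        }
    }

    public static void main(String[] args) throws Exception {
        // Set breakpoints (e.g. in CliDriver.processCmd) before launching in Debug mode.
        String[] cli = args.length > 0 ? args
                : cliArgs("thrift://192.168.0.100:9083", "select count(*) from some_table");
        System.out.println("CliDriver returned " + runCli(cli));
    }
}
```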

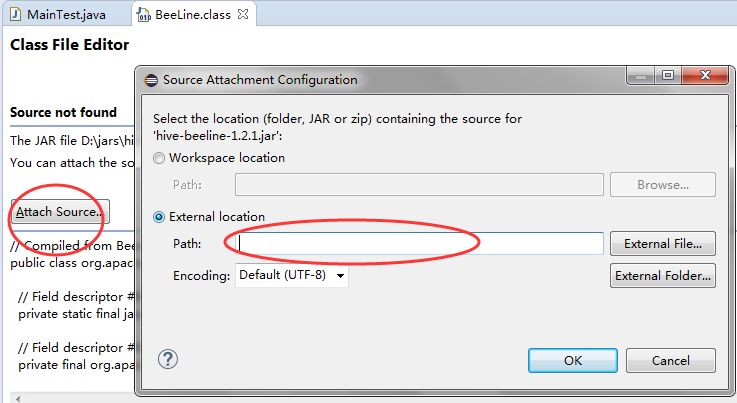

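How do you attach the source? See the figure below.

4. Summary:

(1) With Hive 1.2.1, the version obtained by compiling the source does not currently support debugging against Hadoop 2 (judging from the results of my own work);

(2) To debug the Hive 1.2.1 source in Eclipse, create a new project and add the officially built Hive jars and the Hadoop jars. Note that debugging should generally be done on Linux; to debug on Windows you need to install winutils.exe.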
