Last edited by Kevin517 on 2016-10-15 20:30

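Problem: In Eclipse I use "Upload files to DFS". The upload reports success, but when I open the file in Eclipse it is empty. Running the word count example therefore produces no results.

Environment:

Two cloud hosts on a cloud platform, master and slaver, form the NameNode and DataNode cluster.

The cloud platform uses a GRE network. The internal network is 10.0.0.0/24; the external network is 192.168.200.0/24.

The two nodes communicate over the internal network (10.0.0.0/24). The Windows machine connects through the cloud hosts' floating IPs (192.168.200.0/24).

The Hadoop version is 2.7.0.

IP addresses: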

| Host | Floating IP | Internal IP |
| --- | --- | --- |
| master | 192.168.200.105 | 10.0.0.7 |
| slaver | 192.168.200.106 | 10.0.0.8 |
| windows 7 (Kevin) | 192.168.200.5 | |
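To make the addressing concrete, here is a minimal sketch that sorts the addresses listed above into the two networks described in the post. The labels `internal` and `external` and the helper `classify` are illustrative names only, not part of Hadoop or the cloud platform.

```python
import ipaddress

# The two networks described in the post; the labels are illustrative.
NETWORKS = {
    "internal": ipaddress.ip_network("10.0.0.0/24"),
    "external": ipaddress.ip_network("192.168.200.0/24"),
}


def classify(address: str) -> str:
    """Return the label of the network that contains address, or 'unknown'."""
    ip = ipaddress.ip_address(address)
    for label, network in NETWORKS.items():
        if ip in network:
            return label
    return "unknown"


if __name__ == "__main__":
    hosts = [
        ("master (floating)", "192.168.200.105"),
        ("master (internal)", "10.0.0.7"),
        ("slaver (floating)", "192.168.200.106"),
        ("slaver (internal)", "10.0.0.8"),
        ("windows 7 (Kevin)", "192.168.200.5"),
    ]
    for name, addr in hosts:
        print(f"{name:20} {addr:16} {classify(addr)}")
```

The Windows machine only has an address on the external network. Whether it can also route to 10.0.0.0/24 is not stated in the post, so that is something to verify rather than assume.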

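The cluster was set up as the root user, and Hadoop is started as root.

The cluster starts normally: the processes on both nodes start successfully, and the logs show no errors.

From Windows, 192.168.200.105:50070 opens normally in the browser, and the NameNode and DataNode information looks correct.

From Windows, 192.168.200.105:8088 also opens normally, and YARN looks healthy.

HDFS operations on master work normally.

In addition, running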

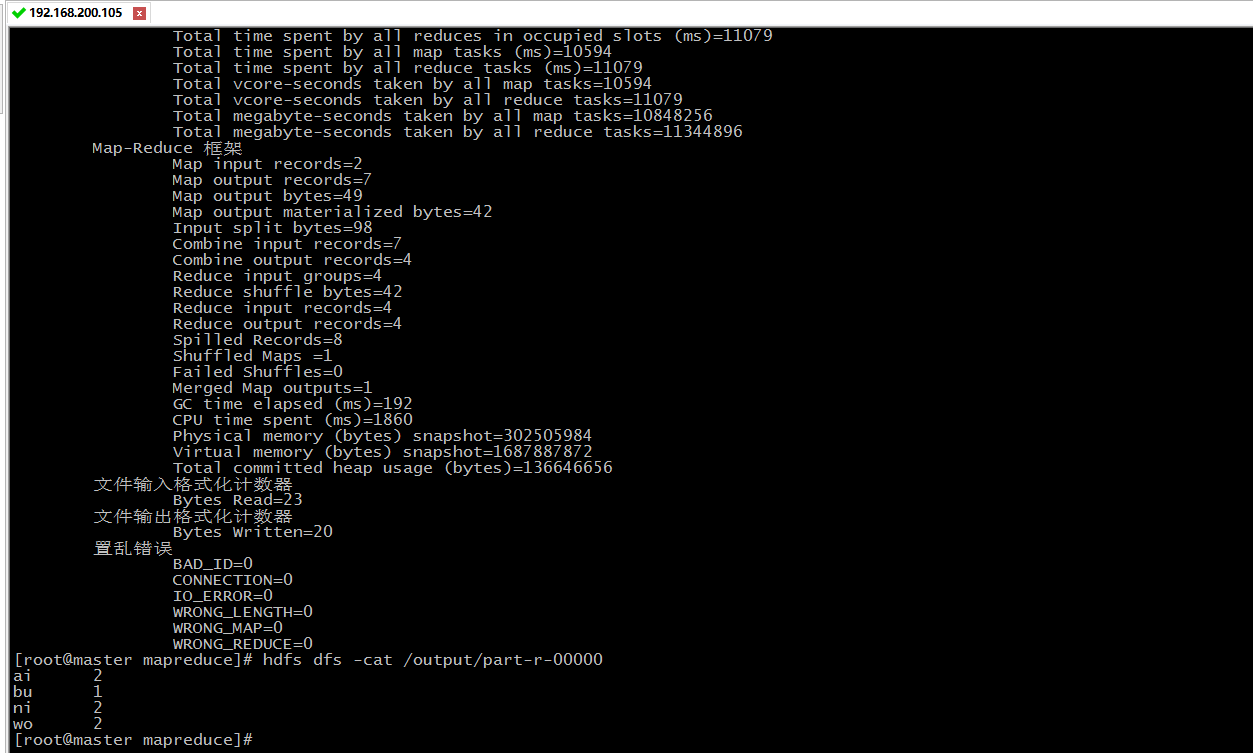

[root@master mapreduce]# hadoop jar hadoop-mapreduce-examples-2.7.0.jar wordcount /input /output runs normally and produces the expected output.

MapReduce

================================================================

Problem description:

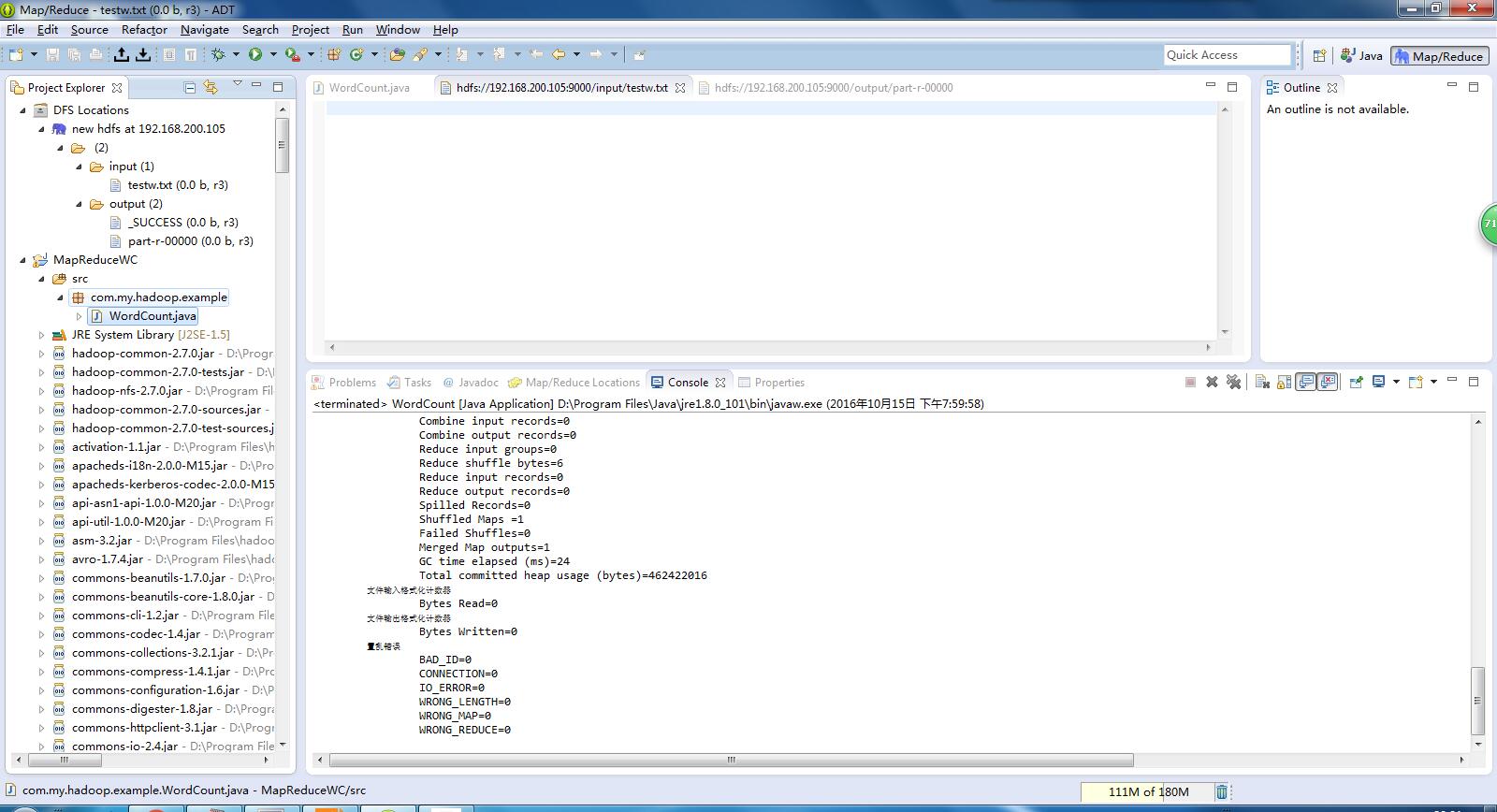

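The Hadoop development environment in Eclipse on Windows was set up successfully.

However, after creating the input folder, uploading a file also reports success, but the uploaded file has no content.

I then ran the example. The console printed the normal progress output with no errors, and a result was written.

Because the uploaded file is empty, the result is empty as well.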

WordCount

The screenshot above shows the successful run.

======================================================

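Below is the NameNode log (hadoop-root-namenode-master.log) from when I re-uploaded a file to the input/ directory.

It covers two time periods.

The following is the log from running the example.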

2016-10-15 07:52:49,692 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:05,544 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:05,545 INFO org.apache.hadoop.ipc.Server: IPC Server handler 6 on 9000, call org.apache.hadoop.hdfs.protocol.ClientProtocol.getBlockLocations from 192.168.100.8:8819 Call#7 Retry#0: java.io.FileNotFoundException: File does not exist: /input/wordtest.txt

2016-10-15 07:59:17,400 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:18,082 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:18,084 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:19,813 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:30,958 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:31,146 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:31,168 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:31,439 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:31,440 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: Number of transactions: 16 Total time for transactions(ms): 1 Number of transactions batched in Syncs: 0 Number of syncs: 10 SyncTimes(ms): 412

2016-10-15 07:59:31,790 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:31,840 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:32,028 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:32,074 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:32,084 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /output/_temporary/0/_temporary/attempt_local29653720_0001_r_000000_0/part-r-00000 is closed by DFSClient_NONMAPREDUCE_-1631979872_1

2016-10-15 07:59:32,088 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:32,092 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:32,093 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:32,096 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:32,110 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:32,112 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:32,113 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:32,115 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:32,118 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:32,130 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:32,138 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:32,145 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:32,151 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /output/_SUCCESS is closed by DFSClient_NONMAPREDUCE_-1631979872_1

2016-10-15 07:59:42,822 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:43,665 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:43,667 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:43,667 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:43,668 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:43,668 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:43,669 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:43,669 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:43,670 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:43,671 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 07:59:43,672 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 08:00:26,501 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 08:00:28,220 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

The following is the log from re-uploading the file.

2016-10-15 08:04:32,059 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 08:04:32,060 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: Number of transactions: 26 Total time for transactions(ms): 1 Number of transactions batched in Syncs: 0 Number of syncs: 18 SyncTimes(ms): 482

2016-10-15 08:04:32,099 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 08:04:39,188 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 08:04:39,214 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 08:04:39,215 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741843_1019{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-20957220-9bf2-463a-88aa-88ed2446fd42:NORMAL:10.0.0.7:50010|RBW], ReplicaUC[[DISK]DS-a461d256-df88-473f-b0f8-08c7221a794b:NORMAL:10.0.0.8:50010|RBW]]} for /input/word.txt

2016-10-15 08:05:00,252 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 08:05:00,280 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 08:05:00,281 WARN org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy: Failed to place enough replicas, still in need of 1 to reach 2 (unavailableStorages=[], storagePolicy=BlockStoragePolicy{HOT:7, storageTypes=[DISK], creationFallbacks=[], replicationFallbacks=[ARCHIVE]}, newBlock=true) For more information, please enable DEBUG log level on org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy

2016-10-15 08:05:00,281 WARN org.apache.hadoop.hdfs.protocol.BlockStoragePolicy: Failed to place enough replicas: expected size is 1 but only 0 storage types can be selected (replication=2, selected=[], unavailable=[DISK], removed=[DISK], policy=BlockStoragePolicy{HOT:7, storageTypes=[DISK], creationFallbacks=[], replicationFallbacks=[ARCHIVE]})

2016-10-15 08:05:00,281 WARN org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy: Failed to place enough replicas, still in need of 1 to reach 2 (unavailableStorages=[DISK], storagePolicy=BlockStoragePolicy{HOT:7, storageTypes=[DISK], creationFallbacks=[], replicationFallbacks=[ARCHIVE]}, newBlock=true) All required storage types are unavailable: unavailableStorages=[DISK], storagePolicy=BlockStoragePolicy{HOT:7, storageTypes=[DISK], creationFallbacks=[], replicationFallbacks=[ARCHIVE]}

2016-10-15 08:05:00,282 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741844_1020{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-a461d256-df88-473f-b0f8-08c7221a794b:NORMAL:10.0.0.8:50010|RBW]]} for /input/word.txt

2016-10-15 08:05:21,298 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 08:05:21,320 WARN org.apache.hadoop.security.UserGroupInformation: No groups available for user Kevin

2016-10-15 08:05:21,321 WARN org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy: Failed to place enough replicas, still in need of 2 to reach 2 (unavailableStorages=[], storagePolicy=BlockStoragePolicy{HOT:7, storageTypes=[DISK], creationFallbacks=[], replicationFallbacks=[ARCHIVE]}, newBlock=true) For more information, please enable DEBUG log level on org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy

2016-10-15 08:05:21,321 WARN org.apache.hadoop.hdfs.protocol.BlockStoragePolicy: Failed to place enough replicas: expected size is 2 but only 0 storage types can be selected (replication=2, selected=[], unavailable=[DISK], removed=[DISK, DISK], policy=BlockStoragePolicy{HOT:7, storageTypes=[DISK], creationFallbacks=[], replicationFallbacks=[ARCHIVE]})

2016-10-15 08:05:21,321 WARN org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy: Failed to place enough replicas, still in need of 2 to reach 2 (unavailableStorages=[DISK], storagePolicy=BlockStoragePolicy{HOT:7, storageTypes=[DISK], creationFallbacks=[], replicationFallbacks=[ARCHIVE]}, newBlock=true) All required storage types are unavailable: unavailableStorages=[DISK], storagePolicy=BlockStoragePolicy{HOT:7, storageTypes=[DISK], creationFallbacks=[], replicationFallbacks=[ARCHIVE]}

2016-10-15 08:05:21,321 INFO org.apache.hadoop.ipc.Server: IPC Server handler 4 on 9000, call org.apache.hadoop.hdfs.protocol.ClientProtocol.addBlock from 192.168.100.8:9029 Call#33 Retry#0

java.io.IOException: File /input/word.txt could only be replicated to 0 nodes instead of minReplication (=1). There are 2 datanode(s) running and 2 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:1550)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:3067)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:722)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:492)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:969)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2049)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2045)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2043)

[root@master logs]#
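When reading a log like this, it can help to pull out which DataNode addresses the NameNode selected for each block. The sketch below does that for the `BLOCK* allocate` lines. The regular expression is written against the line format shown in this log only, and `replica_targets` is a hypothetical helper name.

```python
import re

# Matches the "ip:port" that follows ":NORMAL:" in each ReplicaUC entry,
# e.g. "...:NORMAL:10.0.0.7:50010|RBW]". Written against the format seen
# in this log, not a general NameNode log grammar.
REPLICA_RE = re.compile(r":NORMAL:(\d{1,3}(?:\.\d{1,3}){3}):(\d+)\|")

# The first allocation line from the log above, copied verbatim.
SAMPLE = (
    "2016-10-15 08:04:39,215 INFO org.apache.hadoop.hdfs.StateChange: "
    "BLOCK* allocate blk_1073741843_1019{UCState=UNDER_CONSTRUCTION, "
    "truncateBlock=null, primaryNodeIndex=-1, replicas=["
    "ReplicaUC[[DISK]DS-20957220-9bf2-463a-88aa-88ed2446fd42:NORMAL:10.0.0.7:50010|RBW], "
    "ReplicaUC[[DISK]DS-a461d256-df88-473f-b0f8-08c7221a794b:NORMAL:10.0.0.8:50010|RBW]]} "
    "for /input/word.txt"
)


def replica_targets(log_line: str) -> list:
    """Return (ip, port) pairs for every replica target in one log line."""
    return [(ip, int(port)) for ip, port in REPLICA_RE.findall(log_line)]


if __name__ == "__main__":
    for ip, port in replica_targets(SAMPLE):
        print(f"{ip}:{port}")
```

For the first allocation, both targets are internal addresses on port 50010. The log does not show whether the client at 192.168.100.8 can open connections to them, so this only narrows down where to look.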

Below is the HDFS directory listing after the re-upload:

[root@master ~]# hdfs dfs -ls /

Found 1 items

drwxr-xr-x - Kevin supergroup 0 2016-10-15 08:23 /input

[root@master ~]# hdfs dfs -ls /input

Found 1 items

-rw-r--r-- 3 Kevin supergroup 0 2016-10-15 08:23 /input/word.txt

[root@master ~]#
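To check for the same symptom across many files, the listing can be scanned for zero-length regular files. This is a rough sketch that assumes the column order seen above (permissions, replication, owner, group, size, date, time, path). `LsEntry`, `parse_ls_line`, and `empty_files` are hypothetical names.

```python
from typing import NamedTuple


class LsEntry(NamedTuple):
    permissions: str
    replication: str  # "-" for directories
    owner: str
    group: str
    size: int
    modified: str
    path: str


def parse_ls_line(line: str) -> LsEntry:
    # Split into at most 8 fields so a path containing spaces stays intact.
    perms, repl, owner, group, size, date, time, path = line.split(None, 7)
    return LsEntry(perms, repl, owner, group, int(size), f"{date} {time}", path)


def empty_files(lines):
    """Yield paths of regular files (not directories) whose size is 0."""
    for line in lines:
        # Skip blank lines and the "Found N items" header.
        if not line.strip() or line.startswith("Found "):
            continue
        entry = parse_ls_line(line)
        if not entry.permissions.startswith("d") and entry.size == 0:
            yield entry.path


if __name__ == "__main__":
    listing = [
        "Found 1 items",
        "-rw-r--r-- 3 Kevin supergroup 0 2016-10-15 08:23 /input/word.txt",
    ]
    for path in empty_files(listing):
        print("empty:", path)
```

Directories also show a size of 0 in this listing, which is why the permission string is checked before a path is reported.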

===================================================

I hope someone can help analyze this. Many thanks.
