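Guiding questions:

1. How do we work with matrices?

2. How do we add and subtract in TensorFlow?

3. How do we perform standard operations on tensors?

4. What math functions does TensorFlow provide?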

Previous post: TensorFlow ML cookbook, Chapter 1, Sections 3 and 4: Tensors

Working with Matrices

Understanding how TensorFlow works with matrices is very important to understanding how data flows through computational graphs.

Getting ready

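Many algorithms depend on matrix operations. TensorFlow gives us easy-to-use operations to perform such matrix calculations. For all of the following examples, we can create a graph session by running the following code: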

[mw_shl_code=python,true]import numpy as np  # needed for np.array() in the examples below
import tensorflow as tf

sess = tf.Session() [/mw_shl_code]

How to do it…

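1. Creating matrices: We can create two-dimensional matrices from numpy arrays or nested lists, as described in the earlier section on tensors. We can also use the tensor creation functions and specify a two-dimensional shape for functions such as zeros(), ones(), truncated_normal(), and so on. TensorFlow also allows us to create a diagonal matrix from a one-dimensional array or list with the function diag(), as follows: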

[mw_shl_code=python,true]identity_matrix = tf.diag([1.0, 1.0, 1.0])
A = tf.truncated_normal([2, 3])
B = tf.fill([2,3], 5.0)
C = tf.random_uniform([3,2])
D = tf.convert_to_tensor(np.array([[1., 2., 3.],[-3., -7., -1.],[0., 5., -2.]]))

print(sess.run(identity_matrix))
# [[ 1. 0. 0.]
#  [ 0. 1. 0.]
#  [ 0. 0. 1.]]

print(sess.run(A))
# [[ 0.96751703 0.11397751 -0.3438891 ]
#  [-0.10132604 -0.8432678 0.29810596]]

print(sess.run(B))
# [[ 5. 5. 5.]
#  [ 5. 5. 5.]]

print(sess.run(C))
# [[ 0.33184157 0.08907614]
#  [ 0.53189191 0.67605299]
#  [ 0.95889051 0.67061249]]

print(sess.run(D))
# [[ 1. 2. 3.]
#  [-3. -7. -1.]
#  [ 0. 5. -2.]]
[/mw_shl_code]
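For readers who want to see what these constructors produce without running TensorFlow, here is a plain-Python sketch. The helpers diag(), fill(), and truncated_normal() below are hypothetical stand-ins written for illustration, not TensorFlow code. The truncation rule (redraw any sample more than two standard deviations from the mean) follows TensorFlow's documented behavior for truncated_normal(), which is why every entry of A above stays small.

```python
import random


def diag(values):
    """Square matrix with `values` on the main diagonal and zeros elsewhere."""
    n = len(values)
    return [[values[i] if i == j else 0.0 for j in range(n)] for i in range(n)]


def fill(shape, value):
    """Matrix of the given [rows, cols] shape with every entry set to `value`."""
    rows, cols = shape
    return [[value] * cols for _ in range(rows)]


def truncated_normal(shape, mean=0.0, stddev=1.0, rng=random):
    """Sample a [rows, cols] matrix from a normal distribution, redrawing any
    value that lies more than two standard deviations from the mean."""
    rows, cols = shape
    out = []
    for _ in range(rows):
        row = []
        while len(row) < cols:
            x = rng.gauss(mean, stddev)
            if abs(x - mean) <= 2 * stddev:  # keep only samples inside the band
                row.append(x)
        out.append(row)
    return out


print(diag([1.0, 1.0, 1.0]))  # [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(fill([2, 3], 5.0))      # [[5.0, 5.0, 5.0], [5.0, 5.0, 5.0]]
print(truncated_normal([2, 3]))  # random, but every entry lies in [-2, 2]
```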

Note that if we were to run sess.run(C) again, the random values would be regenerated and we would end up with different values.

2. Addition and subtraction use the + and - operators, and matrix multiplication uses matmul():

[mw_shl_code=python,true]print(sess.run(A+B))
# [[ 4.61596632 5.39771316 4.4325695 ]
#  [ 3.26702736 5.14477345 4.98265553]]

print(sess.run(B-B))
# [[ 0. 0. 0.]
#  [ 0. 0. 0.]]

# Multiplication
print(sess.run(tf.matmul(B, identity_matrix)))
# [[ 5. 5. 5.]
#  [ 5. 5. 5.]] [/mw_shl_code]
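The + and - operators above work entry by entry. A minimal plain-Python sketch of that element-wise behavior follows; the helper elementwise() is hypothetical, it assumes both matrices have exactly the same shape, and it ignores the broadcasting rules TensorFlow also supports. The values used for A are made up, since the real A is random.

```python
import operator


def elementwise(op, a, b):
    """Apply a binary op entry by entry to two matrices of the same shape."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("shapes must match for element-wise operations")
    return [[op(x, y) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


B = [[5.0, 5.0, 5.0], [5.0, 5.0, 5.0]]
A = [[0.5, -1.0, 2.0], [0.0, 1.5, -0.5]]  # made-up stand-in for the random A

print(elementwise(operator.add, A, B))  # [[5.5, 4.0, 7.0], [5.0, 6.5, 4.5]]
print(elementwise(operator.sub, B, B))  # [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
```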

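3. In addition, matmul() has arguments that specify whether to transpose either argument before multiplying (transpose_a, transpose_b) and whether each matrix is sparse (a_is_sparse, b_is_sparse).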
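As a sketch of what the transpose flags mean, the plain-Python matmul() below (a hypothetical helper that only borrows the keyword names transpose_a and transpose_b from tf.matmul()) transposes an argument before multiplying when its flag is set. The sparsity flags are left out because they are an optimization hint for matrices with many zeros and do not change the result.

```python
def transpose(m):
    """Swap rows and columns: an [r, c] matrix becomes [c, r]."""
    return [list(col) for col in zip(*m)]


def matmul(a, b, transpose_a=False, transpose_b=False):
    """Matrix product op(a) @ op(b), where op() optionally transposes."""
    if transpose_a:
        a = transpose(a)
    if transpose_b:
        b = transpose(b)
    # Inner dimensions must agree: columns of a == rows of b.
    if any(len(row) != len(b) for row in a):
        raise ValueError("inner dimensions must match")
    cols = list(zip(*b))  # columns of b
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


B = [[5.0, 5.0, 5.0], [5.0, 5.0, 5.0]]
I = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(matmul(B, I))  # [[5.0, 5.0, 5.0], [5.0, 5.0, 5.0]]

C = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # shape [3, 2]
# C^T @ C is [2, 3] @ [3, 2] -> [2, 2]
print(matmul(C, C, transpose_a=True))  # [[35.0, 44.0], [44.0, 56.0]]
```

Setting transpose_a=True gives the same result as calling transpose() on the first argument yourself; the flag just saves the explicit step.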

4. Transpose a matrix as follows:

[mw_shl_code=python,true]print(sess.run(tf.transpose(C)))
# [[ 0.67124544 0.26766731 0.99068872]
#  [ 0.25006068 0.86560275 0.58411312]] [/mw_shl_code]

5. Again, note that running C re-samples it, so these values differ from the ones printed earlier.

6. For the determinant, use the following:

[mw_shl_code=python,true]print(sess.run(tf.matrix_determinant(D)))
# -38.0 [/mw_shl_code]
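To check this value by hand, here is cofactor expansion along the first row in plain Python. It is a textbook method chosen for readability, not how TensorFlow computes matrix_determinant(), and it is only practical for small matrices.

```python
def determinant(m):
    """Determinant by cofactor expansion along the first row."""
    n = len(m)
    if n == 1:
        return m[0][0]
    total = 0.0
    for j in range(n):
        # Minor: drop row 0 and column j, then recurse.
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * m[0][j] * determinant(minor)
    return total


D = [[1.0, 2.0, 3.0], [-3.0, -7.0, -1.0], [0.0, 5.0, -2.0]]
print(determinant(D))  # -38.0  (= 1*19 - 2*6 + 3*(-15))
```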

Inverse:

[mw_shl_code=python,true]print(sess.run(tf.matrix_inverse(D)))
# [[-0.5 -0.5 -0.5 ]
#  [ 0.15789474 0.05263158 0.21052632]
#  [ 0.39473684 0.13157895 0.02631579]][/mw_shl_code]

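Note that the inverse method is based on the Cholesky decomposition if the matrix is symmetric positive definite, and on the LU decomposition otherwise.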
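The inverse printed above can be reproduced in plain Python. The sketch below swaps in Gauss-Jordan elimination with partial pivoting, a simpler relative of the LU approach, and is not TensorFlow's implementation; inverse() is a hypothetical helper.

```python
def inverse(m):
    """Invert a square matrix with Gauss-Jordan elimination and partial pivoting."""
    n = len(m)
    # Augment [m | I]; reducing the left half to I turns the right half into m^-1.
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        # Partial pivoting: move the row with the largest entry in this column up.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


D = [[1.0, 2.0, 3.0], [-3.0, -7.0, -1.0], [0.0, 5.0, -2.0]]
for row in inverse(D):
    print([round(x, 8) for x in row])
# [-0.5, -0.5, -0.5]
# [0.15789474, 0.05263158, 0.21052632]
# [0.39473684, 0.13157895, 0.02631579]
```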

7. Decompositions: For the Cholesky decomposition, use the following:

[mw_shl_code=python,true]print(sess.run(tf.cholesky(identity_matrix)))
# [[ 1. 0. 0.]
#  [ 0. 1. 0.]
#  [ 0. 0. 1.]] [/mw_shl_code]
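The Cholesky factor of a symmetric positive definite matrix is the lower-triangular L for which L times its transpose equals the input, so the factor of the identity is the identity itself. A plain-Python sketch of the row-by-row (Cholesky-Banachiewicz) recurrence shows this, along with a small 2x2 example; cholesky() here is a hypothetical helper, not TensorFlow's code.

```python
import math


def cholesky(a):
    """Return lower-triangular L with L @ L^T == a (a symmetric positive definite)."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                d = a[i][i] - s
                if d <= 0:
                    raise ValueError("matrix is not positive definite")
                L[i][j] = math.sqrt(d)
            else:
                L[i][j] = (a[i][j] - s) / L[j][j]
    return L


identity = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
print(cholesky(identity))                   # the identity is its own Cholesky factor
print(cholesky([[4.0, 2.0], [2.0, 3.0]]))   # [[2.0, 0.0], [1.0, 1.4142135623730951]]
```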

8. For eigenvalues and eigenvectors, use the following code:

[mw_shl_code=python,true]print(sess.run(tf.self_adjoint_eig(D)))
# [[-10.65907521 -0.22750691 2.88658212]
#  [ 0.21749542 0.63250104 -0.74339638]
#  [ 0.84526515 0.2587998 0.46749277]
#  [ -0.4880805 0.73004459 0.47834331]] [/mw_shl_code]

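Note that self_adjoint_eig() outputs the eigenvalues in the first row and the eigenvectors in the remaining rows, with each eigenvector stored as a column of that lower block. In mathematics, this is known as the eigendecomposition of a matrix. The function assumes a self-adjoint (symmetric) input and reads only the lower triangle; since D is not symmetric, these are the eigenvalues and eigenvectors of the symmetric matrix formed from D's lower triangle.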
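Because self_adjoint_eig() reads only the lower triangle of its input, its eigenvalues can be cross-checked by building the symmetric matrix S from D's lower triangle and diagonalizing S. The plain-Python sketch below uses the classic cyclic Jacobi rotation method, swapped in for readability; TensorFlow's own solver may work differently, and both helper names are hypothetical.

```python
import math


def lower_symmetric(m):
    """Symmetric matrix built from the lower triangle of m (upper triangle ignored)."""
    n = len(m)
    return [[m[max(i, j)][min(i, j)] for j in range(n)] for i in range(n)]


def jacobi_eigenvalues(a, max_sweeps=50, tol=1e-20):
    """Eigenvalues of a real symmetric matrix via cyclic Jacobi rotations."""
    n = len(a)
    a = [row[:] for row in a]
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # Rotation angle chosen so that the new a[p][q] becomes zero.
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                # A <- A J (update columns p and q) ...
                for k in range(n):
                    akp, akq = a[k][p], a[k][q]
                    a[k][p] = c * akp - s * akq
                    a[k][q] = s * akp + c * akq
                # ... then A <- J^T A (update rows p and q).
                for k in range(n):
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k] = c * apk - s * aqk
                    a[q][k] = s * apk + c * aqk
    return sorted(a[i][i] for i in range(n))


D = [[1.0, 2.0, 3.0], [-3.0, -7.0, -1.0], [0.0, 5.0, -2.0]]
S = lower_symmetric(D)
print(S)                      # [[1.0, -3.0, 0.0], [-3.0, -7.0, 5.0], [0.0, 5.0, -2.0]]
print(jacobi_eigenvalues(S))  # approx. [-10.659075, -0.227507, 2.886582]
```

The eigenvalues match the first row of the TensorFlow output, and their sum equals the trace of S (-8).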

How it works…

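TensorFlow provides all the tools we need to start doing numerical computations and to add those computations to our graphs. This notation might seem quite heavy for simple matrix operations. Remember that we are adding these operations to the graph and telling TensorFlow which tensors to run through them. While this might seem verbose now, it will help us understand the notation in later chapters, where this style of computation makes our goals easier to accomplish.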
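The idea of first adding operations to a graph and only later running tensors through them can be shown with a toy model. The Node class, constant(), and run() below are hypothetical and far simpler than TensorFlow's graph; they only illustrate that building an expression and evaluating it are separate steps, with run() playing the role sess.run() plays in the examples.

```python
class Node:
    """A toy graph node: an operation name plus its input nodes."""

    def __init__(self, op, *inputs, value=None):
        self.op = op
        self.inputs = inputs
        self.value = value

    def __add__(self, other):
        return Node("add", self, other)  # records the op, computes nothing

    def __mul__(self, other):
        return Node("mul", self, other)


def constant(value):
    return Node("const", value=value)


def run(node):
    """Evaluate a node by recursively evaluating its inputs first."""
    if node.op == "const":
        return node.value
    args = [run(n) for n in node.inputs]
    if node.op == "add":
        return args[0] + args[1]
    if node.op == "mul":
        return args[0] * args[1]
    raise ValueError(f"unknown op {node.op!r}")


a = constant(3.0)
b = constant(4.0)
c = a * b + a   # builds a small graph; nothing is computed yet
print(c.op)     # add
print(run(c))   # 15.0
```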

Declaring Operations

Now we must learn about the other operations we can add to a TensorFlow graph.

Getting ready

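Besides the standard arithmetic operations, TensorFlow provides more operations that we should be aware of, and we need to know how to use them before proceeding. Again, we can create a graph session by running the following code: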

[mw_shl_code=python,true]import tensorflow as tf

sess = tf.Session()[/mw_shl_code]

How to do it…

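TensorFlow has the standard operations on tensors: add(), sub(), mul(), and div(). (From TensorFlow 1.0 on, sub() and mul() are named subtract() and multiply().)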

Note that, unless specified otherwise, all of the operations in this section evaluate their inputs element-wise:

1. TensorFlow provides some variations of div() and related functions.

2. It is worth mentioning that div() returns the same type as its inputs.

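This means that if the inputs are integers, it really returns the floor of the division (akin to Python 2). To get the Python 3 behavior, which casts integers to floats before dividing and always returns a float, TensorFlow provides the truediv() function, as shown here: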

[mw_shl_code=python,true]print(sess.run(tf.div(3,4)))
# 0

print(sess.run(tf.truediv(3,4)))
# 0.75[/mw_shl_code]
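The Python 2 versus Python 3 distinction mentioned above can be seen directly in plain Python 3, which keeps integer (floor) division and true division as separate operators. This is ordinary Python, not TensorFlow.

```python
floor_result = 3 // 4  # integer division, like tf.div() on integer inputs
true_result = 3 / 4    # true division, like tf.truediv()

print(floor_result, type(floor_result).__name__)  # 0 int
print(true_result, type(true_result).__name__)    # 0.75 float
```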

3. If we have floats and want integer division, we can use the floordiv() function.

Note that this still returns a float, but rounded down to the nearest integer. The function is shown as follows:

[mw_shl_code=python,true]print(sess.run(tf.floordiv(3.0,4.0)))
# 0.0[/mw_shl_code]

4. Another important function is mod(), which returns the remainder after division. It is shown as follows:

[mw_shl_code=python,true]print(sess.run(tf.mod(22.0, 5.0)))
# 2.0[/mw_shl_code]
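Floor division and the remainder are tied together by the identity x == (x // y) * y + x % y. The plain-Python snippet below checks it for the values used above and shows that floor division rounds toward negative infinity, not toward zero. TensorFlow documents floordiv() as rounding toward the most negative integer as well; whether mod() uses the same sign convention for negative inputs can depend on the TensorFlow version, so verify it before relying on it.

```python
import math

x, y = 22.0, 5.0
q = x // y   # floor division on floats: 4.0, still a float
r = x % y    # remainder: 2.0
print(q, r)  # 4.0 2.0
assert q * y + r == x

# With a negative operand, // rounds toward negative infinity and
# % takes the sign of the divisor, so the identity above still holds.
print(-7.0 // 2.0, -7.0 % 2.0)             # -4.0 1.0
print(math.floor(3.0 / 4.0), 3.0 // 4.0)   # 0 0.0
```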

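5. The cross product of two tensors is computed with the cross() function.

Remember that the cross product is only defined for two three-dimensional vectors, so cross() only accepts two three-dimensional tensors. The function is shown as follows: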

[mw_shl_code=python,true]print(sess.run(tf.cross([1., 0., 0.], [0., 1., 0.])))
# [ 0. 0. 1.0][/mw_shl_code]
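Written out by components, the cross product of u and v is (u2 v3 - u3 v2, u3 v1 - u1 v3, u1 v2 - u2 v1). A plain-Python version (a hypothetical helper, not tf.cross()) reproduces the result above and shows that swapping the arguments flips the sign.

```python
def cross(u, v):
    """Cross product of two 3-element vectors."""
    if len(u) != 3 or len(v) != 3:
        raise ValueError("the cross product is only defined for 3-D vectors")
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]


print(cross([1., 0., 0.], [0., 1., 0.]))  # [0.0, 0.0, 1.0]
print(cross([0., 1., 0.], [1., 0., 0.]))  # [0.0, 0.0, -1.0]  (order matters)
```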

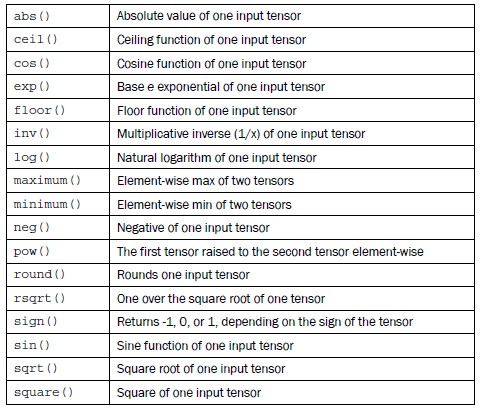

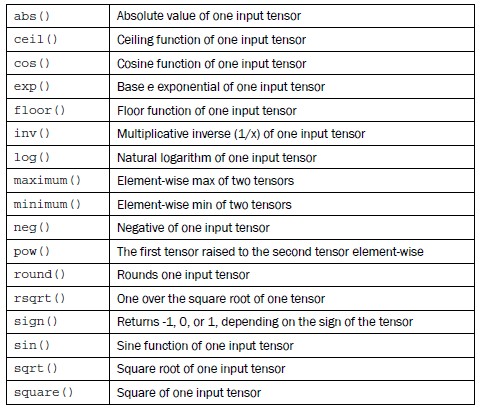

6. Here is a compact list of the more common math functions. All of these functions operate element-wise.

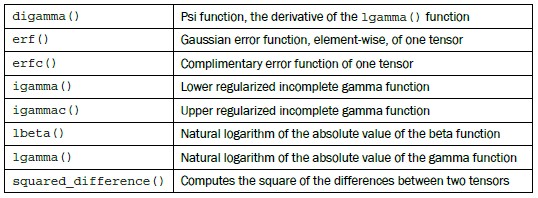

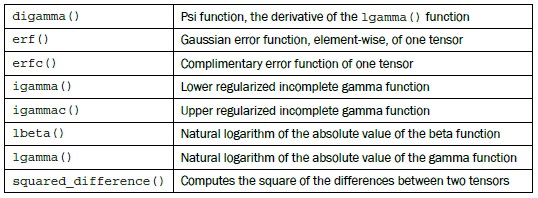

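7. Specialty mathematical functions: There are some special math functions used in machine learning that are worth mentioning, and TensorFlow has built-in functions for them. Again, unless specified otherwise, these functions operate element-wise: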

How it works…

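It is important to know which functions are available for us to add to our computational graphs.

Mostly, we will be concerned with the preceding functions. We can also generate many different custom functions as compositions of them, as follows: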

[mw_shl_code=python,true]# Tangent function (tan(pi/4)=1)
print(sess.run(tf.div(tf.sin(3.1416/4.), tf.cos(3.1416/4.))))
# 1.0[/mw_shl_code]
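Because 3.1416 only approximates π, the ratio sin/cos is close to 1 rather than exactly 1. The same composition with Python's math module makes the size of that error visible; this is plain Python, not TensorFlow.

```python
import math

x = 3.1416 / 4.0
tan_via_ratio = math.sin(x) / math.cos(x)  # tangent built from sin and cos
print(tan_via_ratio)                             # very close to, but not exactly, 1.0
print(math.isclose(tan_via_ratio, math.tan(x)))  # True
print(abs(tan_via_ratio - 1.0) < 1e-5)           # True
```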

There's more…

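If we wish to add operations to our graphs that are not listed here, we must build them from the preceding functions. Here is an example of an operation not listed previously that we can add to our graph. We choose to add a custom polynomial function, 3x^2 - x + 10: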

[mw_shl_code=python,true]def custom_polynomial(value):
    # Computes 3 * value^2 - value + 10
    # (tf.sub was renamed tf.subtract in TensorFlow 1.0)
    return(tf.sub(3 * tf.square(value), value) + 10)

print(sess.run(custom_polynomial(11)))
# 362[/mw_shl_code]
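As a check on the TensorFlow result, the same polynomial can be evaluated in plain Python; at x = 11 it gives 3 * 121 - 11 + 10 = 362. The function name mirrors the example above, but this version does not use TensorFlow.

```python
def custom_polynomial(value):
    """Plain-Python version of 3 * value**2 - value + 10."""
    return 3 * value ** 2 - value + 10


print(custom_polynomial(11))                         # 362
print([custom_polynomial(x) for x in range(-2, 3)])  # [24, 14, 10, 12, 20]
```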

Original text:

Working with Matrices

Understanding how TensorFlow works with matrices is very important to understanding the flow of data through computational graphs.

Getting ready

Many algorithms depend on matrix operations. TensorFlow gives us easy-to-use operations to perform such matrix calculations. For all of the following examples, we can create a graph session by running the following code:

import numpy as np

import tensorflow as tf

sess = tf.Session()

How to do it…

1. Creating matrices: We can create two-dimensional matrices from numpy arrays or nested lists, as we described in the earlier section on tensors. We can also use the tensor creation functions and specify a two-dimensional shape for functions such as zeros(), ones(), truncated_normal(), and so on. TensorFlow also allows us to create a diagonal matrix from a one-dimensional array or list with the function diag(), as follows:

identity_matrix = tf.diag([1.0, 1.0, 1.0])

A = tf.truncated_normal([2, 3])

B = tf.fill([2,3], 5.0)

C = tf.random_uniform([3,2])

D = tf.convert_to_tensor(np.array([[1., 2., 3.],[-3., -7., -1.],[0., 5., -2.]]))

print(sess.run(identity_matrix))

[[ 1. 0. 0.]

[ 0. 1. 0.]

[ 0. 0. 1.]]

print(sess.run(A))

[[ 0.96751703 0.11397751 -0.3438891 ]

[-0.10132604 -0.8432678 0.29810596]]

print(sess.run(B))

[[ 5. 5. 5.]

[ 5. 5. 5.]]

print(sess.run(C))

[[ 0.33184157 0.08907614]

[ 0.53189191 0.67605299]

[ 0.95889051 0.67061249]]

print(sess.run(D))

[[ 1. 2. 3.]

[-3. -7. -1.]

[ 0. 5. -2.]]

Note that if we were to run sess.run(C) again, we would reinitialize the random variables and end up with different random values.

2. Addition and subtraction use the following operations:

print(sess.run(A+B))

[[ 4.61596632 5.39771316 4.4325695 ]

[ 3.26702736 5.14477345 4.98265553]]

print(sess.run(B-B))

[[ 0. 0. 0.]

[ 0. 0. 0.]]

Multiplication

print(sess.run(tf.matmul(B, identity_matrix)))

[[ 5. 5. 5.]

[ 5. 5. 5.]]

3. Also, the function matmul() has arguments that specify whether or not to transpose the arguments before multiplication or whether each matrix is sparse.

4. Transpose a matrix as follows:

print(sess.run(tf.transpose(C)))

[[ 0.67124544 0.26766731 0.99068872]

[ 0.25006068 0.86560275 0.58411312]]

5. Again, it is worth mentioning that reinitializing gives us different values than before.

6. For the determinant, use the following:

print(sess.run(tf.matrix_determinant(D)))

-38.0

Inverse:

print(sess.run(tf.matrix_inverse(D)))

[[-0.5 -0.5 -0.5 ]

[ 0.15789474 0.05263158 0.21052632]

[ 0.39473684 0.13157895 0.02631579]]

Note that the inverse method is based on the Cholesky decomposition if the matrix is symmetric positive definite or the LU decomposition otherwise.

7. Decompositions: For the Cholesky decomposition, use the following:

print(sess.run(tf.cholesky(identity_matrix)))

[[ 1. 0. 0.]

[ 0. 1. 0.]

[ 0. 0. 1.]]

8. For eigenvalues and eigenvectors, use the following code:

print(sess.run(tf.self_adjoint_eig(D)))

[[-10.65907521 -0.22750691 2.88658212]

[ 0.21749542 0.63250104 -0.74339638]

[ 0.84526515 0.2587998 0.46749277]

[ -0.4880805 0.73004459 0.47834331]]

Note that the function self_adjoint_eig() outputs the eigenvalues in the first row and the eigenvectors in the remaining rows, one eigenvector per column. In mathematics, this is known as the eigendecomposition of a matrix.

How it works…

TensorFlow provides all the tools for us to get started with numerical computations and adding such computations to our graphs. This notation might seem quite heavy for simple matrix operations. Remember that we are adding these operations to the graph and telling TensorFlow what tensors to run through those operations. While this might seem verbose now, it helps to understand the notations in later chapters, when this way of computation will make it easier to accomplish our goals.

Declaring Operations

Now we must learn about the other operations we can add to a TensorFlow graph.

Getting ready

Besides the standard arithmetic operations, TensorFlow provides us with more operations that we should be aware of. We need to know how to use them before proceeding. Again, we can create a graph session by running the following code:

import tensorflow as tf

sess = tf.Session()

How to do it…

TensorFlow has the standard operations on tensors: add(), sub(), mul(), and div().

Note that all of these operations in this section will evaluate the inputs element-wise unless specified otherwise:

1. TensorFlow provides some variations of div() and relevant functions.

2. It is worth mentioning that div() returns the same type as the inputs.

This means it really returns the floor of the division (akin to Python 2) if the inputs are integers. To return the Python 3 version, which casts integers into floats before dividing and always returns a float, TensorFlow provides the function truediv(), as shown as follows:

print(sess.run(tf.div(3,4)))

0

print(sess.run(tf.truediv(3,4)))

0.75

3. If we have floats and want an integer division, we can use the function floordiv().

Note that this will still return a float, but rounded down to the nearest integer. The function is shown as follows:

print(sess.run(tf.floordiv(3.0,4.0)))

0.0

4. Another important function is mod(). This function returns the remainder after the division. It is shown as follows:

print(sess.run(tf.mod(22.0, 5.0)))

2.0

5. The cross-product between two tensors is achieved by the cross() function.

Remember that the cross-product is only defined for two three-dimensional vectors, so it only accepts two three-dimensional tensors. The function is shown as follows:

print(sess.run(tf.cross([1., 0., 0.], [0., 1., 0.])))

[ 0. 0. 1.0]

6. Here is a compact list of the more common math functions. All of these functions operate elementwise.

7. Specialty mathematical functions: There are some special math functions that get used in machine learning that are worth mentioning and TensorFlow has built-in functions for them. Again, these functions operate element-wise, unless specified otherwise:

How it works…

It is important to know what functions are available to us to add to our computational graphs.

Mostly, we will be concerned with the preceding functions. We can also generate many different custom functions as compositions of the preceding functions, as follows:

# Tangent function (tan(pi/4)=1)

print(sess.run(tf.div(tf.sin(3.1416/4.), tf.cos(3.1416/4.))))

1.0

There's more…

If we wish to add other operations to our graphs that are not listed here, we must create our own from the preceding functions. Here is an example of an operation not listed previously that we can add to our graph. We choose to add a custom polynomial function, 3x^2 - x + 10:

def custom_polynomial(value):

    return(tf.sub(3 * tf.square(value), value) + 10)

print(sess.run(custom_polynomial(11)))

362
