问题导读

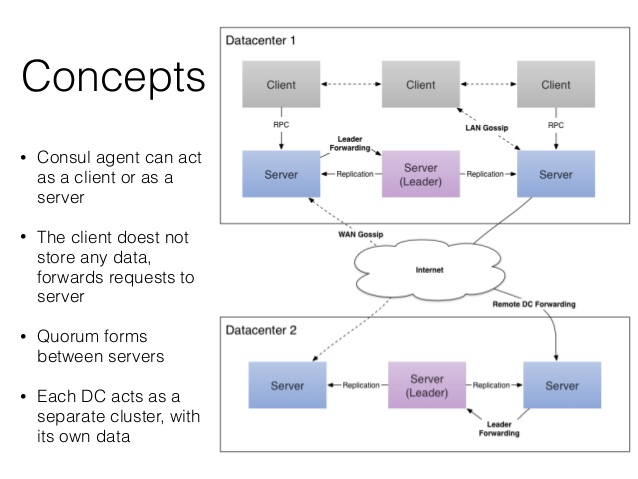

1.consul镜像的作用是什么?

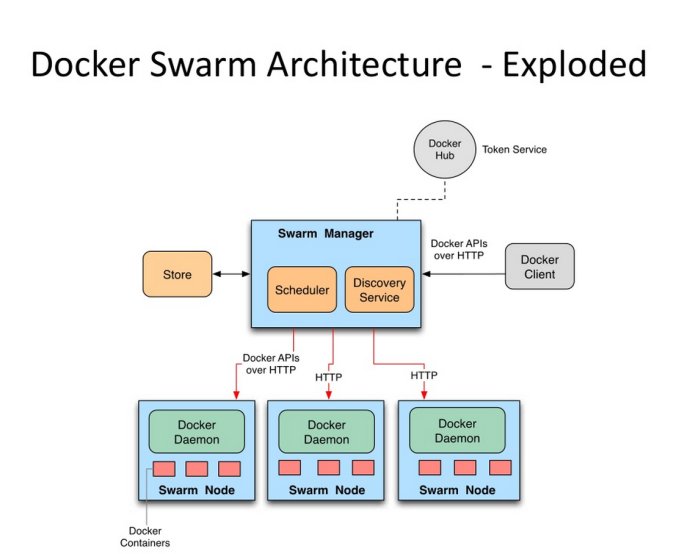

2.swarm的作用是什么?

3.如何实现管理docker节点?

Official website:

https://www.consul.io

https://www.consul.io/docs/commands/

http://demo.consul.io/

Features:

https://www.consul.io/intro/

Service Discovery

Failure Detection

Multi Datacenter

Key/Value Storage

Environment:

CentOS 7.0

consul-0.6.4

docker-engine-1.11.2

For testing on virtual machines, see "Consul cluster".

This experiment uses three containerized consul server nodes:

consul-s1.example.com(192.168.8.101)

consul-s2.example.com(192.168.8.102)

consul-s3.example.com(192.168.8.103)

swarm(manager+agent),rethinkdb,shipyard(192.168.8.254)

I. Install Docker

See "Installing Docker on CentOS 6/7".

II. Pull the consul image

docker pull progrium/consul

Note: there is currently no official consul image. The image above has the most stars and is also the third-party Docker image recommended by the consul project.

https://github.com/gliderlabs/docker-consul

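III. Configure the consul cluster

1. Create the user (on all consul nodes)

groupadd -g 1000 consul

useradd -u 100 -g 1000 -s /sbin/nologin consul

mkdir -p /opt/consul/{data,conf}

chown -R consul: /opt/consul

Note: uid 100 and gid 1000 are the uid and gid that own the files written inside the image. If the user is not created in advance, startup fails with a permission error when consul tries to write to the data directory.

2. Configure the consul servers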

consul-s1.example.com

docker run -d --restart=always \
  -p 8300:8300 \
  -p 8301:8301 \
  -p 8301:8301/udp \
  -p 8302:8302 \
  -p 8302:8302/udp \
  -p 8400:8400 \
  -p 8500:8500 \
  -p 8600:53 \
  -p 8600:53/udp \
  -h consul-s1 \
  -v /opt/consul/data:/consul/data \
  -v /opt/consul/conf:/consul/conf \
  --name=consul-s1 consul:0.6.4 \
  agent -server -bootstrap-expect=1 -ui \
  -config-dir=/consul/conf \
  -client=0.0.0.0 \
  -node=consul-s1 \
  -advertise=192.168.8.101

consul-s2.example.com

docker run -d --restart=always \
  -p 8300:8300 \
  -p 8301:8301 \
  -p 8301:8301/udp \
  -p 8302:8302 \
  -p 8302:8302/udp \
  -p 8400:8400 \
  -p 8500:8500 \
  -p 8600:53 \
  -p 8600:53/udp \
  -h consul-s2 \
  -v /opt/consul/data:/consul/data \
  -v /opt/consul/conf:/consul/conf \
  --name=consul-s2 consul:0.6.4 \
  agent -server -ui \
  -config-dir=/consul/conf \
  -client=0.0.0.0 \
  -node=consul-s2 \
  -join=192.168.8.101 \
  -advertise=192.168.8.102

consul-s3.example.com

docker run -d --restart=always \
  -p 8300:8300 \
  -p 8301:8301 \
  -p 8301:8301/udp \
  -p 8302:8302 \
  -p 8302:8302/udp \
  -p 8400:8400 \
  -p 8500:8500 \
  -p 8600:53 \
  -p 8600:53/udp \
  -h consul-s3 \
  -v /opt/consul/data:/consul/data \
  -v /opt/consul/conf:/consul/conf \
  --name=consul-s3 consul:0.6.4 \
  agent -server -ui \
  -config-dir=/consul/conf \
  -client=0.0.0.0 \
  -node=consul-s3 \
  -join=192.168.8.101 \
  -advertise=192.168.8.103
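The three server commands above differ only in the node name, the advertised address, and whether `-bootstrap-expect=1` or `-join` is passed. As a minimal sketch (the function name `consul_server_cmd` is hypothetical and not part of consul or docker), the command line can be generated from those parameters and reviewed before it is run:

```shell
#!/bin/sh
# Hypothetical helper: print the docker run command for one consul server.
# $1 = node name, $2 = advertised IP, $3 = IP to join (omit for the first server).
consul_server_cmd() {
    name=$1
    advertise=$2
    join=$3
    if [ -n "$join" ]; then
        mode="-join=$join"
    else
        mode="-bootstrap-expect=1"
    fi
    # Same flags as the commands above, printed on a single line.
    printf '%s ' \
        docker run -d --restart=always \
        -p 8300:8300 -p 8301:8301 -p 8301:8301/udp \
        -p 8302:8302 -p 8302:8302/udp \
        -p 8400:8400 -p 8500:8500 -p 8600:53 -p 8600:53/udp \
        -h "$name" \
        -v /opt/consul/data:/consul/data \
        -v /opt/consul/conf:/consul/conf \
        "--name=$name" consul:0.6.4 \
        agent -server "$mode" -ui \
        -config-dir=/consul/conf -client=0.0.0.0 \
        "-node=$name" "-advertise=$advertise"
    printf '\n'
}
```

Calling `consul_server_cmd consul-s1 192.168.8.101` prints the command for the first server, and `consul_server_cmd consul-s2 192.168.8.102 192.168.8.101` prints the one for a server that joins it. Printing instead of executing keeps the helper safe to try on any machine.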

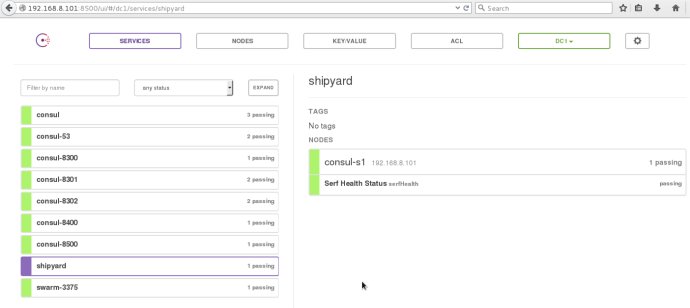

3. Check the consul cluster status (HTTP REST API)

[root@ela-master1 ~]# curl localhost:8500/v1/status/leader
"192.168.8.102:8300"

[root@ela-master1 ~]# curl localhost:8500/v1/status/peers
["192.168.8.102:8300","192.168.8.101:8300","192.168.8.103:8300"]

root@router:~# curl 192.168.8.101:8500/v1/catalog/services
{"consul":[]}

root@router:~# curl 192.168.8.101:8500/v1/catalog/nodes|json_reformat
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100   376  100   376    0     0   131k      0 --:--:-- --:--:-- --:--:--  183k
[
    {
        "Node": "consul-s1",
        "Address": "192.168.8.101",
        "TaggedAddresses": {
            "wan": "192.168.8.101"
        },
        "CreateIndex": 3,
        "ModifyIndex": 3783
    },
    {
        "Node": "consul-s2",
        "Address": "192.168.8.102",
        "TaggedAddresses": {
            "wan": "192.168.8.102"
        },
        "CreateIndex": 6,
        "ModifyIndex": 3778
    },
    {
        "Node": "consul-s3",
        "Address": "192.168.8.103",
        "TaggedAddresses": {
            "wan": "192.168.8.103"
        },
        "CreateIndex": 3782,
        "ModifyIndex": 3792
    }
]
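The status endpoints above return plain JSON, so a quick health check can be scripted. The sketch below assumes only `curl` and standard text tools; the function names and the default of 3 expected servers are illustrative choices for this lab, not part of consul.

```shell
#!/bin/sh
# Count the entries in the JSON array returned by /v1/status/peers (read from stdin).
peer_count() {
    grep -o '"[^"]*"' | wc -l | tr -d ' '
}

# Succeed only if the agent at $1 reports a leader and $2 peers (default 3).
check_cluster() {
    host=$1
    expected=${2:-3}
    leader=$(curl -s "http://$host:8500/v1/status/leader")
    peers=$(curl -s "http://$host:8500/v1/status/peers" | peer_count)
    # An empty JSON string ("") means no leader has been elected yet.
    [ -n "$leader" ] && [ "$leader" != '""' ] && [ "$peers" -eq "$expected" ]
}
```

For example, `check_cluster 192.168.8.101 && echo healthy` could be run from any machine that can reach port 8500 on that server.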

IV. Client registration

1.consul agent

consul-c1.example.com

docker run -d --restart=always \
  -p 8300:8300 \
  -p 8301:8301 \
  -p 8301:8301/udp \
  -p 8302:8302 \
  -p 8302:8302/udp \
  -p 8400:8400 \
  -p 8500:8500 \
  -p 8600:53 \
  -p 8600:53/udp \
  -h consul-c1 \
  -v /opt/consul/data:/consul/data \
  -v /opt/consul/conf:/consul/conf \
  --name=consul-c1 consul:0.6.4 \
  agent \
  -config-dir=/consul/conf \
  -client=0.0.0.0 \
  -node=consul-c1 \
  -join=192.168.8.101 \
  -advertise=192.168.8.254

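Note: services can be discovered through the consul agent's DNS interface. To test DNS:

dig @172.17.0.2 -p8600 consul-s3.service.consul SRV

By default, consul DNS names take the form "<service>.service.consul" for services and "<node>.node.consul" for nodes, where the node name is the globally unique name set with -node. This is a good fit for service clusters made up of containers spread across hosts.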
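Consul's DNS interface keeps nodes and services in separate namespaces. A minimal sketch of both kinds of lookup, assuming the default domain `consul` and the agent address 172.17.0.2 from the test above (the helper names are hypothetical):

```shell
#!/bin/sh
# Build a consul DNS name; kind is "node" or "service".
consul_dns_name() {
    kind=$1
    name=$2
    case $kind in
        node|service) printf '%s.%s.consul\n' "$name" "$kind" ;;
        *) echo "unknown kind: $kind" >&2; return 1 ;;
    esac
}

# Query the agent's DNS port. $3 is the record type (default A);
# SRV records carry the service port as well as the address.
consul_dig() {
    dig @172.17.0.2 -p 8600 "$(consul_dns_name "$1" "$2")" "${3:-A}"
}
```

For example, `consul_dig node consul-s3` looks up a server node by name, and `consul_dig service consul SRV` looks up the built-in consul service that appears in the catalog output.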

2.gliderlabs/registrator

http://artplustech.com/docker-consul-dns-registrator/

docker run -tid \
  --restart=always \
  --name=registrator \
  --net=host \
  -v /var/run/docker.sock:/tmp/docker.sock \
  gliderlabs/registrator -ip 192.168.8.254 consul://192.168.8.101:8500

Note: services can be registered simply by running the gliderlabs/registrator container, which works through the Docker socket file.
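registrator performs this registration automatically by watching the Docker socket. For comparison, a service can also be registered by hand through the agent's HTTP API (`PUT /v1/agent/service/register`). A minimal sketch, in which the service name `web`, port 80, and the helper names are made up for illustration:

```shell
#!/bin/sh
# Print a minimal service definition as JSON: $1 = service name, $2 = port.
service_json() {
    printf '{"Name": "%s", "Port": %s}\n' "$1" "$2"
}

# Register the service with the agent at $3 (default localhost:8500).
# The service is attached to the node of whichever agent receives the request.
register_service() {
    service_json "$1" "$2" |
        curl -s -X PUT --data-binary @- \
            "http://${3:-localhost:8500}/v1/agent/service/register"
}
```

After something like `register_service web 80 192.168.8.254:8500`, the new entry would be expected to show up in the `/v1/catalog/services` output used earlier.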

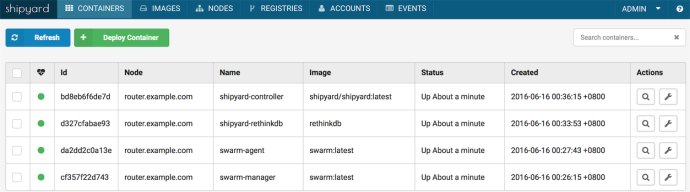

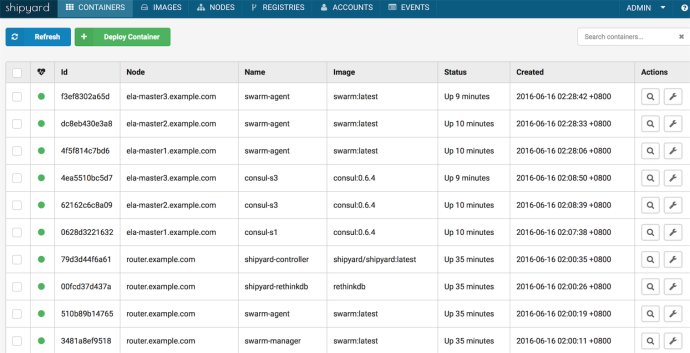

3.swarm+shipyard+rethinkdb

See "Docker GUI: Shipyard". Here swarm performs service discovery through consul's HTTP API; the other option is discovery through the consul agent's DNS service.

a.swarm manager

docker run -tid --restart=always \
  -p 3375:3375 \
  --name swarm-manager \
  swarm:latest manage --host tcp://0.0.0.0:3375 consul://192.168.8.101:8500

b.swarm agent

docker run -tid --restart=always \
  --name swarm-agent \
  swarm:latest join --addr 192.168.8.254:2375 consul://192.168.8.101:8500
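Once an agent has joined, swarm's `list` subcommand can be pointed at the same discovery URL to see which Docker endpoints are registered. A small wrapper keeps the invocation in one place (the function name `swarm_nodes` is hypothetical):

```shell
#!/bin/sh
# List the Docker endpoints that have joined through the given discovery URL.
# Runs the swarm image once and removes the container afterwards.
swarm_nodes() {
    docker run --rm swarm:latest list "$1"
}
```

In this setup, `swarm_nodes consul://192.168.8.101:8500` would be expected to include 192.168.8.254:2375 after the agent above has registered.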

c.rethinkdb

docker run -tid --restart=always \
  --name shipyard-rethinkdb \
  -p 28015:28015 \
  -p 29015:29015 \
  -v /opt/rethinkdb:/data \
  rethinkdb

d.shipyard

docker run -tid --restart=always \
  --name shipyard-controller \
  --link shipyard-rethinkdb:rethinkdb \
  --link swarm-manager:swarm \
  -p 18080:8080 \
  shipyard/shipyard:latest \
  server \
  -d tcp://swarm:3375

Notes:

shipyard uses --link with aliases here.

rethinkdb listens on 28015, 29015, and 8080 by default and supports clustering.

[root@ela-client ~]# docker logs -f 3b381c89cdfc
Recursively removing directory /data/rethinkdb_data/tmp
Initializing directory /data/rethinkdb_data
Running rethinkdb 2.3.4~0jessie (GCC 4.9.2)...
Running on Linux 3.10.0-229.el7.x86_64 x86_64
Loading data from directory /data/rethinkdb_data
Listening for intracluster connections on port 29015
Listening for client driver connections on port 28015
Listening for administrative HTTP connections on port 8080
Listening on cluster addresses: 127.0.0.1, 172.17.0.3, ::1, fe80::42:acff:fe11:3x
Listening on driver addresses: 127.0.0.1, 172.17.0.3, ::1, fe80::42:acff:fe11:3x
Listening on http addresses: 127.0.0.1, 172.17.0.3, ::1, fe80::42:acff:fe11:3x
Server ready, "3b381c89cdfc_6bb" b9d1f2c6-9cd5-4702-935c-aa3bc2721261

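Note: after shipyard starts, it takes a while (about 1 minute) to find rethinkdb, and shipyard is unavailable during that time. Once the connection to rethinkdb succeeds, shipyard listens on 8080 (published on host port 18080 by the -p 18080:8080 mapping above) and the shipyard console can be opened normally.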

[root@ela-client ~]# docker logs -f 516e912629fb
FATA[0000] no connections were made when creating the session
INFO[0000] shipyard version 3.0.5
WARN[0000] Error creating connection: gorethink: dial tcp 172.17.0.3:28015: getsockopt: connection refused
FATA[0000] no connections were made when creating the session
INFO[0000] shipyard version 3.0.5
WARN[0000] Error creating connection: gorethink: dial tcp 172.17.0.3:28015: getsockopt: connection refused
FATA[0000] no connections were made when creating the session
INFO[0000] shipyard version 3.0.5
WARN[0000] Error creating connection: gorethink: dial tcp 172.17.0.3:28015: getsockopt: connection refused
FATA[0000] no connections were made when creating the session
INFO[0000] shipyard version 3.0.5
WARN[0000] Error creating connection: gorethink: dial tcp 172.17.0.3:28015: getsockopt: connection refused
FATA[0000] no connections were made when creating the session
INFO[0000] shipyard version 3.0.5
INFO[0000] checking database
INFO[0002] created admin user: username: admin password: shipyard
INFO[0002] controller listening on :18080
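Because shipyard keeps restarting until rethinkdb accepts connections, as the log shows, a script that depends on the console should poll for it instead of assuming it is up. A minimal sketch of a generic retry loop (the `retry` function is a hypothetical helper, not a docker or shipyard feature):

```shell
#!/bin/sh
# Run a command until it succeeds.
# $1 = maximum attempts, $2 = delay between attempts in seconds, rest = command.
retry() {
    attempts=$1
    delay=$2
    shift 2
    n=1
    while ! "$@"; do
        # Give up once the attempt budget is used.
        if [ "$n" -ge "$attempts" ]; then
            return 1
        fi
        n=$((n + 1))
        sleep "$delay"
    done
}
```

For instance, `retry 12 10 curl -fs -o /dev/null http://192.168.8.254:18080/` would poll the console for up to about two minutes, which comfortably covers the one-minute wait described above.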

e. Change how Docker listens (socket --> tcp)

sed -i '/-H/s#-H fd://#-H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock#' /lib/systemd/system/docker.service

systemctl daemon-reload

systemctl restart docker

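The swarm agent expects Docker to listen on port 2375, so Docker has to be restarted for the change to take effect. In this setup all four containers above run on the single host 192.168.8.254, and restarting Docker restarts every container on it, so in production the components should be split sensibly across hosts.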

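Caveats:

1. On newer systems such as CentOS 7, docker.service and docker.socket are split into two files that depend on each other, whereas on CentOS 6 they are combined; if this is not taken into account, startup reports a command syntax error.

2. If only -H tcp://0.0.0.0:2375 is set, docker ps and the other subcommands all hang, so -H unix:///var/run/docker.sock must be added as well.

3. If Docker runs in socket-only mode, then after the four containers above are started the shipyard console can still be logged into no matter how often things are restarted, but the container, image, and node views are all empty.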
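Since `sed -i` rewrites the unit file in place, it can help to preview the substitution first. The sketch below applies the same expression to text on stdin; the sample `ExecStart` line is an assumption about what the unit file contains, so check it against the real file.

```shell
#!/bin/sh
# Apply the same substitution to stdin instead of editing the unit file in place.
preview_docker_opts() {
    sed '/-H/s#-H fd://#-H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock#'
}

# Assumed sample line; the real ExecStart lives in /lib/systemd/system/docker.service.
echo 'ExecStart=/usr/bin/docker daemon -H fd://' | preview_docker_opts
# prints: ExecStart=/usr/bin/docker daemon -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock
```

Running `preview_docker_opts < /lib/systemd/system/docker.service | grep ExecStart` shows the resulting line for the actual file. If the output is unchanged, the unit file does not contain `-H fd://` and the in-place edit would be a no-op.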

4. Add swarm cluster members

To save resources, the three consul server hosts above (consul-s1.example.com, consul-s2.example.com, and consul-s3.example.com) are added directly to the swarm cluster.

consul-s1.example.com

docker run -tid \
  --restart=always \
  --name swarm-agent \
  swarm:latest \
  join --addr 192.168.8.101:2375 consul://192.168.8.101:8500

sed -i '/-H/s#-H fd://#-H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock#' /lib/systemd/system/docker.service

systemctl daemon-reload

systemctl restart docker

consul-s2.example.com

docker run -tid \
  --restart=always \
  --name swarm-agent \
  swarm:latest \
  join --addr 192.168.8.102:2375 consul://192.168.8.101:8500

sed -i '/-H/s#-H fd://#-H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock#' /lib/systemd/system/docker.service

systemctl daemon-reload

systemctl restart docker

consul-s3.example.com

docker run -tid \
  --restart=always \
  --name swarm-agent \
  swarm:latest \
  join --addr 192.168.8.103:2375 consul://192.168.8.101:8500

sed -i '/-H/s#-H fd://#-H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock#' /lib/systemd/system/docker.service

systemctl daemon-reload

systemctl restart docker

5. Manage Docker nodes

1. Select a Docker node with -H

docker -H tcp://192.168.8.101:2375 ps

Passing -H targets a Docker node in the cluster directly; the subcommands behave exactly as they do locally.
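The same subcommand can be sent to every node in turn with a short loop. This is a minimal sketch: the node list is taken from the lab addresses in this article, and the function name `on_each_node` is hypothetical.

```shell
#!/bin/sh
# Docker hosts from this lab; adjust to match your own nodes.
NODES="192.168.8.101 192.168.8.102 192.168.8.103 192.168.8.254"

# Run the same docker subcommand against every node's TCP endpoint.
on_each_node() {
    for ip in $NODES; do
        echo "== $ip =="
        docker -H "tcp://$ip:2375" "$@"
    done
}
```

For example, `on_each_node ps` lists the running containers on each host under its own header line.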

2. shipyard console

Source: Sina

Author: liujun_live
